Towards Predictable Data Centers: Why Johnny can’t use the cloud and what we can do about it

Hitesh Ballani, Paolo Costa, Thomas Karagiannis, Greg O’Shea and Ant Rowstron, Microsoft Research, Cambridge

Cloud computing

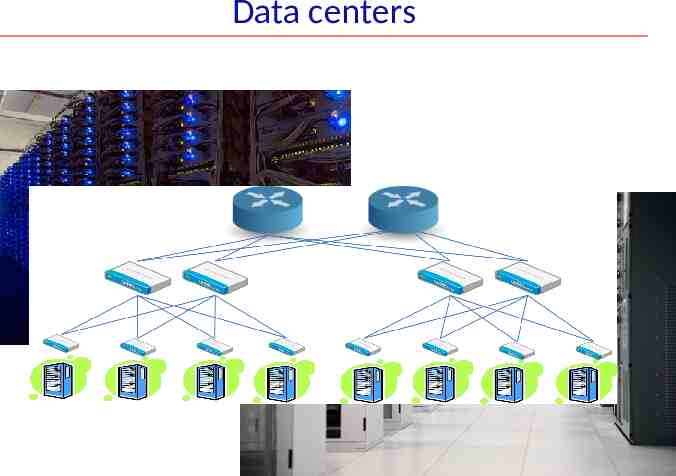

Data centers

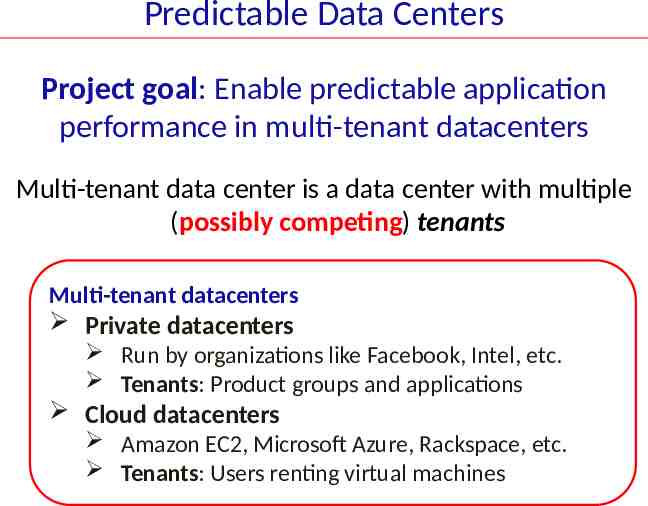

Predictable Data Centers

Project goal: enable predictable application performance in multi-tenant datacenters. A multi-tenant datacenter is a datacenter with multiple (possibly competing) tenants. There are two kinds. Private datacenters are run by organizations like Facebook, Intel, etc.; their tenants are product groups and applications. Cloud datacenters include Amazon EC2, Microsoft Azure, Rackspace, etc.; their tenants are users renting virtual machines.

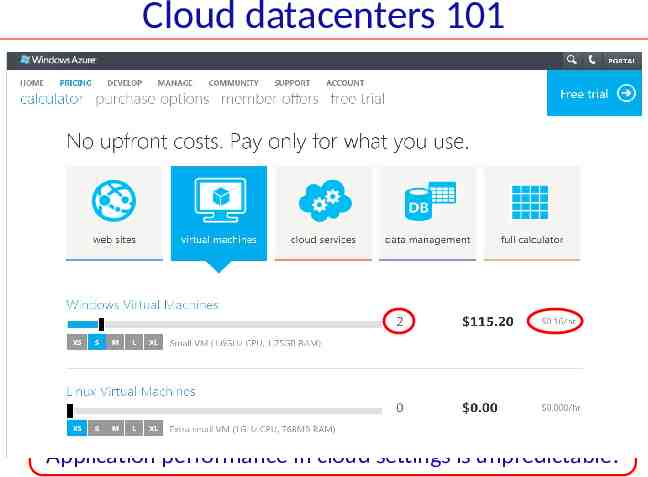

Cloud datacenters 101

Simple interface: tenants ask for a set of VMs through a web interface and are charged for virtual machines (VMs) per hour; for example, Microsoft Azure small VMs cost $0.08/hour. Problem: application performance in cloud settings is unpredictable!

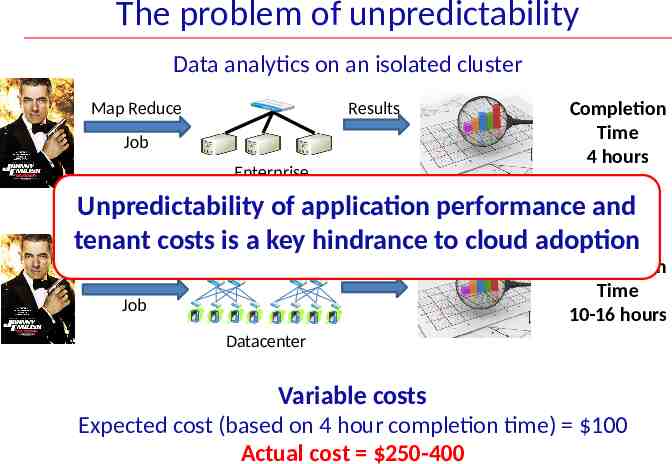

The problem of unpredictability

Data analytics on an isolated enterprise cluster: a MapReduce job completes in 4 hours. The same data analytics in a multi-tenant datacenter: the job completes in 10-16 hours. Costs vary accordingly: the expected cost (based on a 4-hour completion time) is $100, but the actual cost is $250-400. Unpredictability of application performance and tenant costs is a key hindrance to cloud adoption.

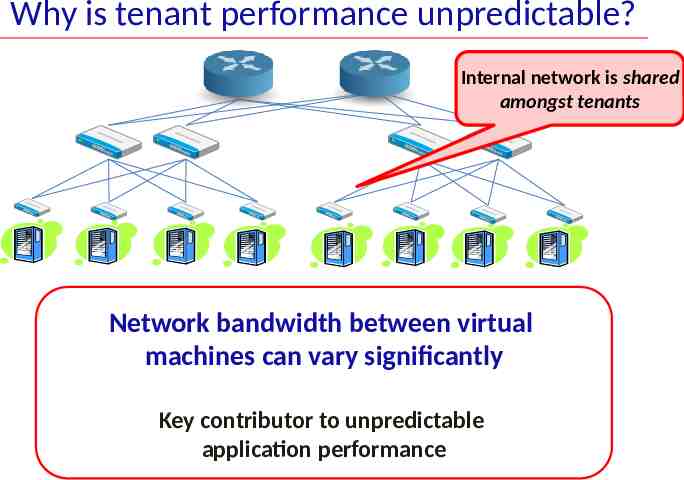
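
Because tenants pay per hour, a job's cost scales with its completion time. A minimal sketch, assuming the whole job is billed at a single flat hourly rate (a simplification: real bills count VM-hours), reproduces the slide's numbers; `job_cost` is a hypothetical helper, not part of any provider API.

```python
def job_cost(completion_hours: float, hourly_rate: float) -> float:
    """Cost of a job billed at a flat hourly rate (simplification: no per-VM breakdown)."""
    return completion_hours * hourly_rate

# From the slide: $100 expected for a 4-hour job, i.e. $25 per hour of completion time.
rate = 100.0 / 4.0

for hours in (4, 10, 16):
    print(f"{hours:>2} h -> ${job_cost(hours, rate):.0f}")
# Prints:  4 h -> $100, 10 h -> $250, 16 h -> $400
```

The 2.5-4x cost overrun comes entirely from the longer completion time; the price per hour never changes.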

Why is tenant performance unpredictable?

The internal network is shared among tenants, so the network bandwidth between virtual machines can vary significantly. This is a key contributor to unpredictable application performance.

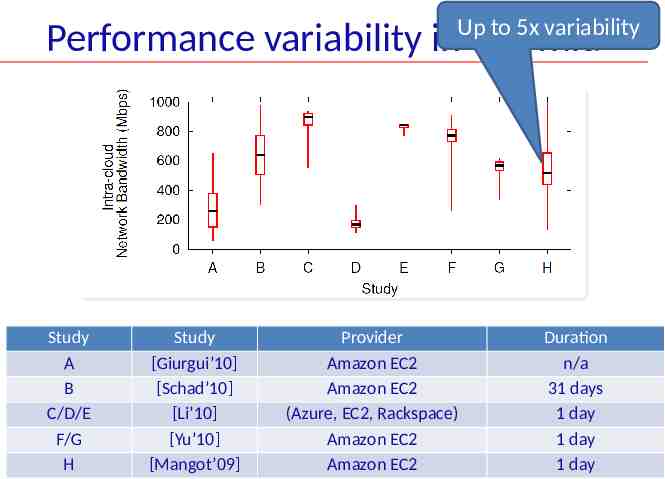

Performance variability in the wild

Measurements from several studies show up to 5x variability.

| Study | Reference | Provider | Duration |
|---|---|---|---|
| A | [Giurgiu’10] | Amazon EC2 | n/a |
| B | [Schad’10] | Amazon EC2 | 31 days |
| C/D/E | [Li’10] | Azure, EC2, Rackspace | 1 day |
| F/G | [Yu’10] | Amazon EC2 | 1 day |
| H | [Mangot’09] | Amazon EC2 | 1 day |

Oktopus: enable guaranteed network performance

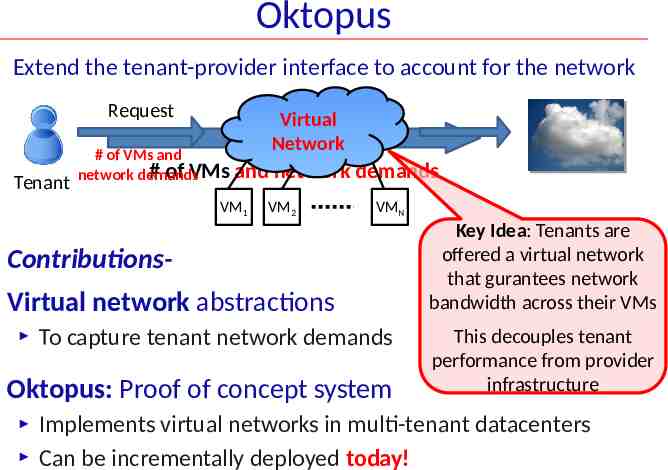

Oktopus

Extend the tenant-provider interface to account for the network: instead of requesting only a number of VMs, tenants request a number of VMs and their network demands, and receive a virtual network connecting VM1 ... VMN. Key idea: tenants are offered a virtual network that guarantees network bandwidth across their VMs. This decouples tenant performance from the provider's infrastructure. Contributions: virtual network abstractions, to capture tenant network demands; and Oktopus, a proof-of-concept system that implements virtual networks in multi-tenant datacenters and can be incrementally deployed today!

Key takeaway: exposing tenant network demands to providers enables a symbiotic tenant-provider relationship. Tenants get predictable performance (and lower costs), and provider revenue increases.

Talk Outline

Introduction; Virtual network abstractions; Oktopus: allocating virtual networks, enforcing virtual networks; Evaluation

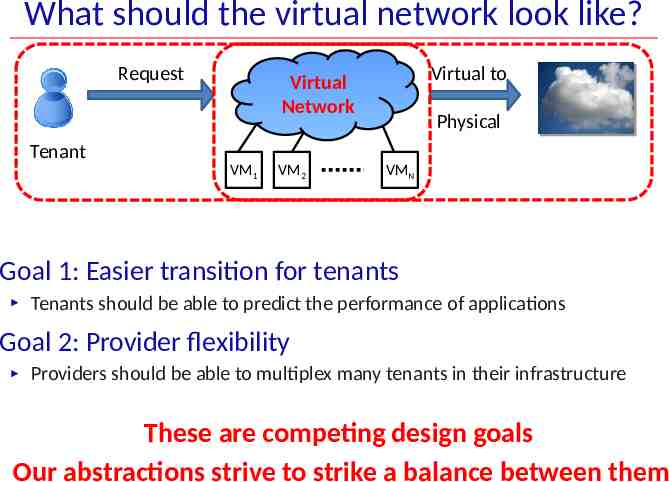

What should the virtual network look like?

A tenant requests a virtual network (VM1 ... VMN), which the provider maps onto the physical network. Goal 1: easier transition for tenants, who should be able to predict the performance of their applications. Goal 2: provider flexibility, so that providers can multiplex many tenants on their infrastructure. These are competing design goals, and our abstractions strive to strike a balance between them.

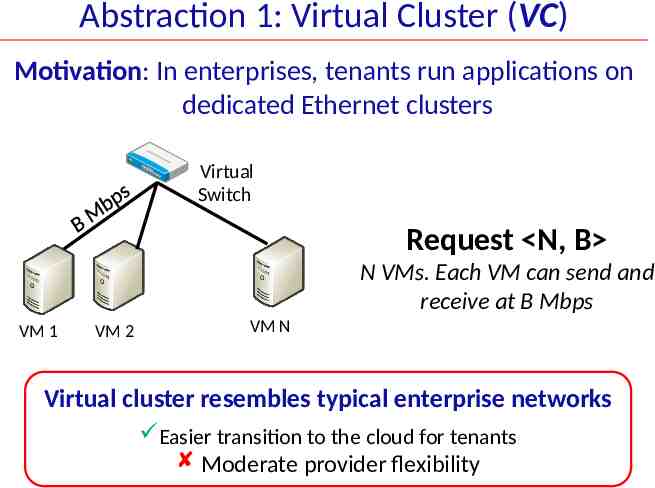

Abstraction 1: Virtual Cluster (VC)

Motivation: in enterprises, tenants run applications on dedicated Ethernet clusters. Request <N, B>: N VMs connected to a single virtual switch, where each VM can send and receive at B Mbps. The virtual cluster resembles typical enterprise networks, which gives tenants an easier transition to the cloud, while offering moderate provider flexibility.

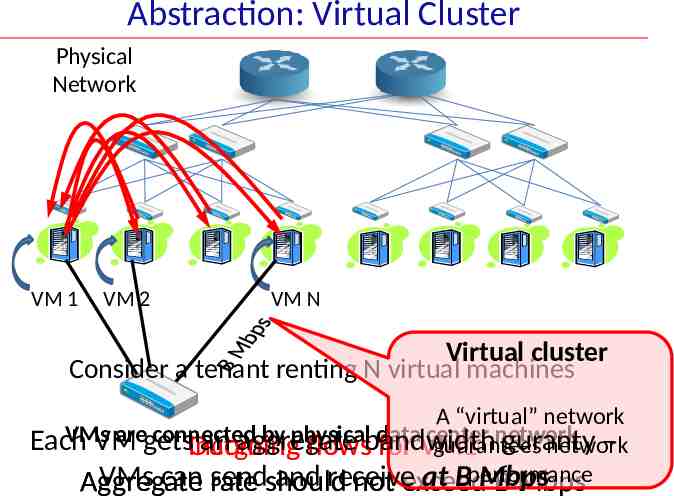

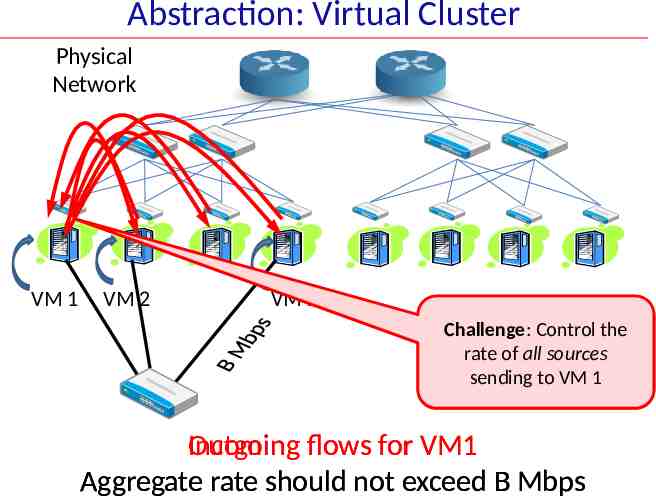

Abstraction: Virtual Cluster

Consider a tenant renting N virtual machines. The VMs are connected by the physical datacenter network, but the tenant gets a "virtual" network that guarantees network performance: each VM gets an aggregate bandwidth guarantee and can send and receive at B Mbps. For example, the aggregate rate of the incoming flows for VM1 (and likewise of its outgoing flows) should not exceed B Mbps.

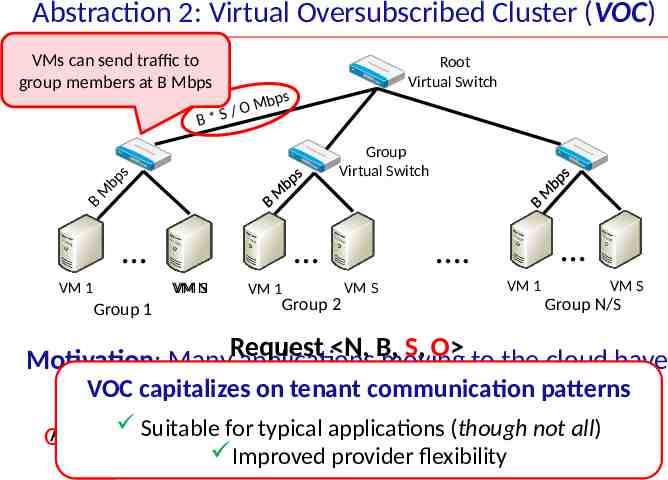
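
The virtual cluster guarantee is a per-VM cap on aggregate traffic, not a per-flow one. The sketch below checks whether a traffic matrix stays within a virtual cluster <N, B>; the function name and the traffic-matrix representation (a dict keyed by source and destination VM, with VMs numbered from 0) are illustrative assumptions.

```python
from typing import Dict, Tuple

# Traffic matrix: (source VM, destination VM) -> rate in Mbps. VM ids are 0..N-1.
TrafficMatrix = Dict[Tuple[int, int], float]

def conforms_to_virtual_cluster(traffic: TrafficMatrix, n_vms: int, b_mbps: float) -> bool:
    """True if no VM sends or receives more than B Mbps in aggregate."""
    out_rate = [0.0] * n_vms
    in_rate = [0.0] * n_vms
    for (src, dst), rate in traffic.items():
        out_rate[src] += rate  # aggregate outgoing rate of the source VM
        in_rate[dst] += rate   # aggregate incoming rate of the destination VM
    return max(out_rate + in_rate, default=0.0) <= b_mbps

# Virtual cluster <N=3, B=100 Mbps>: VM1 and VM2 both send to VM0.
ok = {(1, 0): 50.0, (2, 0): 50.0}
too_much = {(1, 0): 60.0, (2, 0): 60.0}
print(conforms_to_virtual_cluster(ok, 3, 100.0))        # True: VM0 receives 100 Mbps
print(conforms_to_virtual_cluster(too_much, 3, 100.0))  # False: VM0 receives 120 Mbps
```

Each sender in the second case is well under B on its own; only the receiver's aggregate breaks the guarantee.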

Abstraction 2: Virtual Oversubscribed Cluster (VOC)

Motivation: many applications moving to the cloud have localized communication patterns. They are composed of groups, with more traffic within groups than across groups. Request <N, B, S, O>: N VMs in groups of size S, with oversubscription factor O. The VMs in each group connect to a group virtual switch at B Mbps, so VMs can send traffic to group members at B Mbps; each of the N/S group virtual switches connects to a root virtual switch at S*B/O Mbps. There is no oversubscription for intra-group communication and an oversubscription factor O for inter-group communication, which captures the sparseness of inter-group communication. VOC capitalizes on tenant communication patterns: it suits typical (though not all) applications, since intra-group communication is the common case, and it improves provider flexibility.
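
The VOC request <N, B, S, O> can be captured as a small record. This sketch, with a hypothetical `VOCRequest` class, derives the number of groups (N/S) and the capacity of each group's link to the root virtual switch (S*B/O).

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class VOCRequest:
    n_vms: int       # N: total number of VMs
    b_mbps: float    # B: per-VM bandwidth to the group virtual switch
    group_size: int  # S: VMs per group
    oversub: float   # O: oversubscription factor for inter-group traffic

    def __post_init__(self) -> None:
        if self.group_size <= 0 or self.n_vms % self.group_size:
            raise ValueError("N must be a positive multiple of S")
        if self.oversub < 1:
            raise ValueError("O must be at least 1")

    @property
    def n_groups(self) -> int:
        return self.n_vms // self.group_size  # N/S groups

    @property
    def group_uplink_mbps(self) -> float:
        # Link from a group virtual switch to the root virtual switch: S*B/O.
        return self.group_size * self.b_mbps / self.oversub

req = VOCRequest(n_vms=40, b_mbps=100.0, group_size=10, oversub=10.0)
print(req.n_groups, req.group_uplink_mbps)  # 4 100.0
```

With O = 10, the 10 VMs in a group can still exchange traffic at 100 Mbps each, but together they get only 100 Mbps to other groups, instead of the 1000 Mbps a non-oversubscribed cluster would provide.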

Oktopus in operation

A tenant submits a request: the number of VMs and their network demands. Step 1: admission control and VM placement. Can the network guarantees for the request be met? Step 2: enforce virtual networks, to ensure the bandwidth guarantees are actually met.

Talk Outline

Introduction; Virtual network abstractions; Oktopus: VM placement, enforcing virtual networks; Evaluation

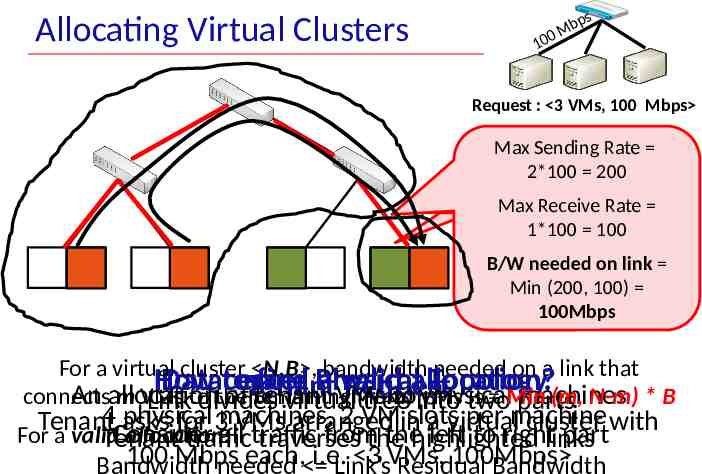

Allocating Virtual Clusters

Consider a datacenter physical topology with 4 physical machines and 2 VM slots per machine, and a tenant that asks for 3 VMs arranged in a virtual cluster with 100 Mbps each, i.e., the request <3 VMs, 100 Mbps>. How do we find a valid allocation of tenant VMs to physical machines?

First, what bandwidth needs to be reserved for the tenant on a given link? A link divides the virtual tree into two parts; consider all traffic from the left part to the right part. With 2 VMs on the left and 1 VM on the right, the maximum sending rate is 2*100 = 200 Mbps and the maximum receive rate is 1*100 = 100 Mbps, so the bandwidth needed on the link is min(200, 100) = 100 Mbps. In general, for a virtual cluster <N, B>, the bandwidth needed on a link that connects m VMs to the remaining (N-m) VMs is min(m, N-m) * B. For a valid allocation, the bandwidth needed on every link that tenant traffic traverses must not exceed the link's residual bandwidth.

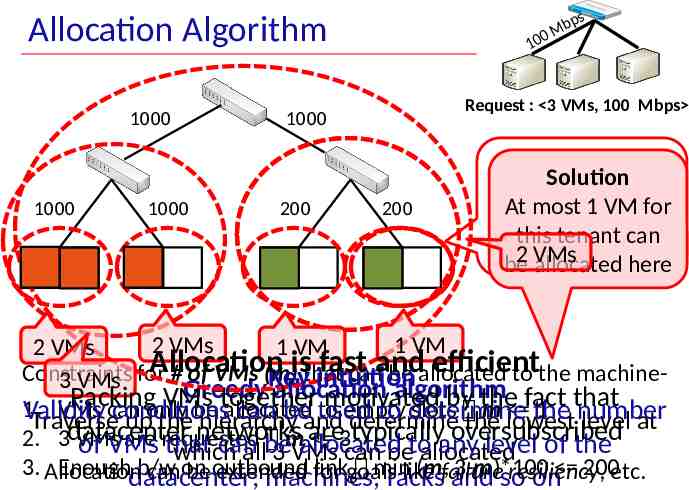
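
The per-link reservation rule translates directly into code. A minimal sketch with hypothetical helper names; `link_is_valid` encodes the validity condition that the reservation fit within the link's residual bandwidth.

```python
def vc_link_bandwidth(m: int, n: int, b_mbps: float) -> float:
    """Bandwidth to reserve on a link that splits a virtual cluster <N, B>
    into m VMs on one side and N - m VMs on the other."""
    if not 0 <= m <= n:
        raise ValueError("m must lie in [0, N]")
    send = m * b_mbps           # most the m VMs can send across the link
    receive = (n - m) * b_mbps  # most the N - m VMs can receive
    return min(send, receive)   # = min(m, N - m) * B

def link_is_valid(m: int, n: int, b_mbps: float, residual_mbps: float) -> bool:
    """Validity condition: the reservation must fit in the link's residual bandwidth."""
    return vc_link_bandwidth(m, n, b_mbps) <= residual_mbps

print(vc_link_bandwidth(2, 3, 100.0))  # 100.0, i.e. min(200, 100)
```

A link with all N VMs (or none) on one side carries no traffic for this tenant, so nothing needs to be reserved on it.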

Allocation Algorithm

Key intuition: the validity conditions can be used to determine the number of VMs that can be allocated at any level of the datacenter: machines, racks, and so on. Take the request <3 VMs, 100 Mbps> and a machine with one empty slot and 200 Mbps of residual bandwidth on its outbound link. The number of VMs m that can be allocated to this machine is constrained by: 1. VMs can only be allocated to empty slots, so m ≤ 1; 2. 3 VMs are requested, so m ≤ 3; 3. the outbound link must have enough bandwidth, i.e., min(m, 3-m)*100 ≤ 200. Hence at most 1 VM can be allocated to this machine; in the example, another machine can take 2 VMs. Greedy allocation algorithm: traverse up the hierarchy and determine the lowest level at which all 3 VMs can be allocated. Packing VMs together is motivated by the fact that datacenter networks are typically oversubscribed. Allocation is fast and efficient, and it can be extended for goals like failure resiliency.
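
The greedy algorithm can be sketched for a tree-shaped topology as follows. This is a simplified, conservative variant written for illustration, not the Oktopus implementation: a subtree's capacity counts only VM totals m for which every smaller total also satisfies min(m, N-m)*B ≤ residual bandwidth, so any split of VMs among children stays valid, and it does not deduct the reserved bandwidth or slots after a placement. All class and function names, and the example topology, are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterator, List, Optional


@dataclass
class Node:
    """A machine (no children) or a switch; uplink_mbps is the residual bandwidth to its parent."""
    name: str
    uplink_mbps: float = float("inf")
    free_slots: int = 0  # empty VM slots, used for machines only
    children: List["Node"] = field(default_factory=list)


def walk(node: Node) -> Iterator[Node]:
    yield node
    for child in node.children:
        yield from walk(child)


def height(node: Node) -> int:
    return 0 if not node.children else 1 + max(height(c) for c in node.children)


def closed_cap(limit: int, n: int, b: float, residual: float) -> int:
    """Largest m <= limit such that every m' in 1..m satisfies min(m', N - m') * B <= residual."""
    cap = 0
    for m in range(1, limit + 1):
        if min(m, n - m) * b > residual:
            break
        cap = m
    return cap


def inner_limit(node: Node, n: int, b: float) -> int:
    """VMs placeable inside the subtree, ignoring the node's own uplink."""
    if not node.children:
        return node.free_slots
    return sum(capacity(c, n, b) for c in node.children)


def capacity(node: Node, n: int, b: float) -> int:
    """VMs placeable in the subtree without overloading the node's uplink."""
    return closed_cap(min(inner_limit(node, n, b), n), n, b, node.uplink_mbps)


def place(node: Node, count: int, n: int, b: float, out: Dict[str, int]) -> None:
    """Spread `count` VMs over the subtree, filling children greedily in order."""
    if not node.children:
        out[node.name] = count
        return
    for child in node.children:
        k = min(count, capacity(child, n, b))
        if k:
            place(child, k, n, b, out)
            count -= k


def allocate(root: Node, n: int, b: float) -> Optional[Dict[str, int]]:
    """Place a virtual cluster <N, B> in the lowest subtree that can host all N VMs."""
    fits = [v for v in walk(root) if inner_limit(v, n, b) >= n]
    if not fits:
        return None  # admission control: reject the request
    target = min(fits, key=height)
    out: Dict[str, int] = {}
    place(target, n, n, b, out)
    return out


# Two racks with two machines each: Node(name, uplink residual in Mbps, free slots).
root = Node("root", children=[
    Node("rack1", 1000.0, children=[Node("m1", 1000.0, 1), Node("m2", 50.0, 2)]),
    Node("rack2", 1000.0, children=[Node("m3", 1000.0, 2), Node("m4", 200.0, 2)]),
])
print(allocate(root, n=3, b=100.0))  # {'m3': 2, 'm4': 1}
```

In the example, rack1 can contribute only one VM in total (m2's 50 Mbps uplink cannot carry even one VM's 100 Mbps), so the lowest subtree that can host all 3 VMs is rack2, which receives 2 VMs on m3 and 1 on m4. A request for 2 VMs fits entirely on m2, because traffic between co-located VMs never crosses its uplink.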

Talk Outline

Introduction; Virtual network abstractions; Oktopus: allocating virtual networks, enforcing virtual networks; Evaluation

Enforcing Virtual Networks

The allocation algorithms assume that no VM exceeds its bandwidth guarantees. Enforcement of virtual networks satisfies this assumption by limiting tenant VMs to the bandwidth specified by their virtual network, irrespective of the type of tenant traffic (UDP, TCP, etc.) and irrespective of the number of flows between the VMs.

Abstraction: Virtual Cluster

Challenge: the aggregate rate of the incoming flows for VM1 should not exceed B Mbps, yet these flows originate at other VMs. This can be achieved by controlling the sending rate of all sources sending to VM1.

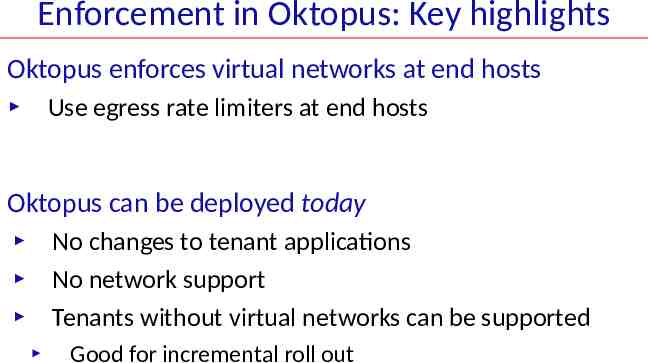
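
How the sources' rates are chosen is a policy decision. One simple possibility, shown here as an assumption rather than as Oktopus's actual mechanism, is to split the destination's B Mbps among its senders with max-min fairness (water-filling): senders demanding less than an equal share get their demand, and the rest share what remains equally. `max_min_share` is a hypothetical name.

```python
from typing import Dict

def max_min_share(demands: Dict[str, float], capacity_mbps: float) -> Dict[str, float]:
    """Split a receiver's capacity among senders with max-min fairness (water-filling)."""
    alloc: Dict[str, float] = {}
    remaining = dict(demands)  # senders whose rate is not yet fixed
    left = capacity_mbps       # capacity not yet handed out
    while remaining:
        fair = left / len(remaining)
        # Senders wanting no more than the equal share get exactly their demand.
        satisfied = {s: d for s, d in remaining.items() if d <= fair}
        if not satisfied:
            # Everyone left wants more than the equal share: split evenly and stop.
            for s in remaining:
                alloc[s] = fair
            break
        for s, d in satisfied.items():
            alloc[s] = d
            left -= d
            del remaining[s]
    return alloc

# Three VMs send to VM1, whose virtual-cluster guarantee is B = 100 Mbps.
rates = max_min_share({"VM2": 20.0, "VM3": 80.0, "VM4": 80.0}, 100.0)
print(rates)  # {'VM2': 20.0, 'VM3': 40.0, 'VM4': 40.0}
```

Each source's egress rate limiter would then be set to its share, so the aggregate arriving at VM1 stays within B even though no single source sees all the traffic.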

Enforcement in Oktopus: Key highlights

Oktopus enforces virtual networks at end hosts, using egress rate limiters. Oktopus can be deployed today: it requires no changes to tenant applications and no network support. Tenants without virtual networks can also be supported, which is good for incremental rollout.
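
A common way to build an egress rate limiter is a token bucket. The slides say only that Oktopus uses egress rate limiters at end hosts, so the `TokenBucket` class below is a generic, hypothetical sketch of the technique, with time passed in explicitly to keep it deterministic.

```python
class TokenBucket:
    """Hypothetical egress rate limiter: long-run rate of rate_mbps, bursts up to burst_bits."""

    def __init__(self, rate_mbps: float, burst_bits: float, start: float = 0.0) -> None:
        self.rate_bps = rate_mbps * 1e6  # token refill rate in bits per second
        self.capacity = burst_bits       # bucket size caps the burst
        self.tokens = burst_bits         # start with a full bucket
        self.last = start                # time (seconds) of the last refill

    def allow(self, packet_bits: float, now: float) -> bool:
        """Return True and consume tokens if the packet may be sent at time `now`."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate_bps)
        self.last = now
        if self.tokens >= packet_bits:
            self.tokens -= packet_bits
            return True
        return False  # the caller should queue or drop the packet


# A VM guaranteed B = 100 Mbps, with a burst of one 1500-byte packet (12,000 bits).
bucket = TokenBucket(rate_mbps=100.0, burst_bits=12_000)
print(bucket.allow(12_000, now=0.0))    # True: the bucket starts full
print(bucket.allow(12_000, now=0.0))    # False: no tokens left
print(bucket.allow(12_000, now=0.001))  # True: 1 ms refills more than 12,000 bits
```

Because the bucket is checked per packet, setting its rate to the VM's guarantee caps aggregate egress whether the traffic is TCP or UDP and however many flows it uses.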

Talk Outline

Introduction; Virtual network abstractions; Oktopus: allocating virtual networks, enforcing virtual networks; Evaluation

Evaluation

Oktopus deployment on a 25-node testbed: benchmark the Oktopus implementation and cross-validate simulation results. Large-scale simulation: quantify the benefits of virtual networks at scale. The use of virtual networks benefits both tenants and providers.

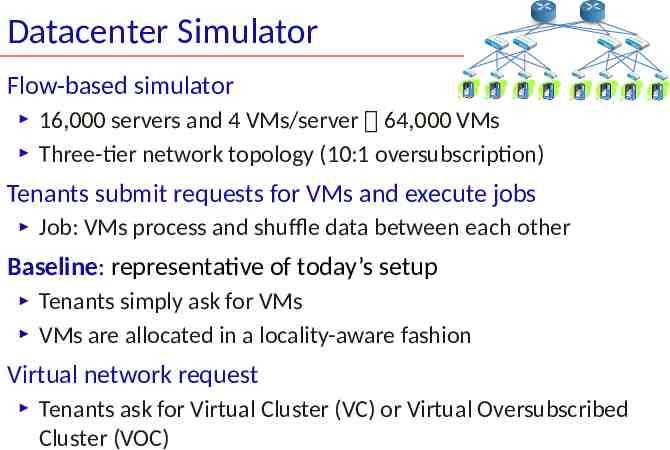

Datacenter Simulator

Flow-based simulator with 16,000 servers and 4 VMs/server (64,000 VMs), using a three-tier network topology with 10:1 oversubscription. Tenants submit requests for VMs and execute jobs; in a job, VMs process data and shuffle it between each other. Baseline, representative of today's setup: tenants simply ask for VMs, and VMs are allocated in a locality-aware fashion. Virtual network request: tenants ask for a Virtual Cluster (VC) or a Virtual Oversubscribed Cluster (VOC).

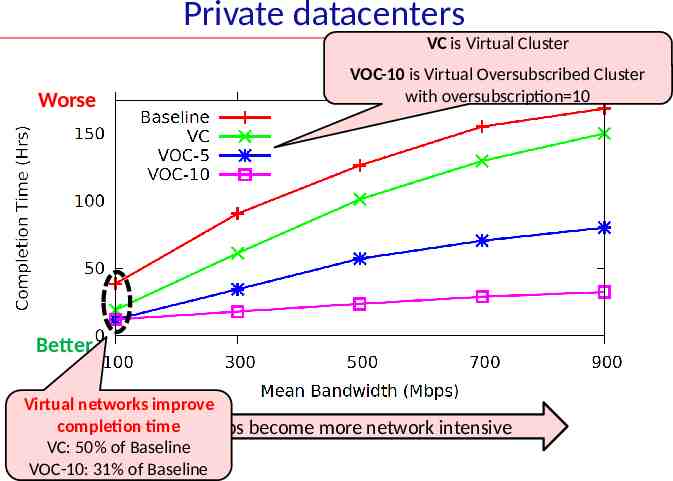

Private datacenters

Execute a batch of 10,000 tenant jobs. Jobs vary in network intensiveness (the bandwidth at which a job can generate data). VC is Virtual Cluster; VOC-10 is Virtual Oversubscribed Cluster with oversubscription 10. Result (completion time as jobs become more network intensive): virtual networks improve completion time, to 50% of Baseline with VC and 31% of Baseline with VOC-10.

Private datacenters

With virtual networks, tenants get guaranteed network bandwidth, so job completion time is bounded. With Baseline, tenant network bandwidth can vary significantly, so job completion time varies significantly: for 25% of jobs, completion time increases by 280%. Lagging jobs hurt datacenter throughput. Virtual networks benefit both tenants and the provider. Tenants: job completion is faster and predictable. Provider: higher datacenter throughput.

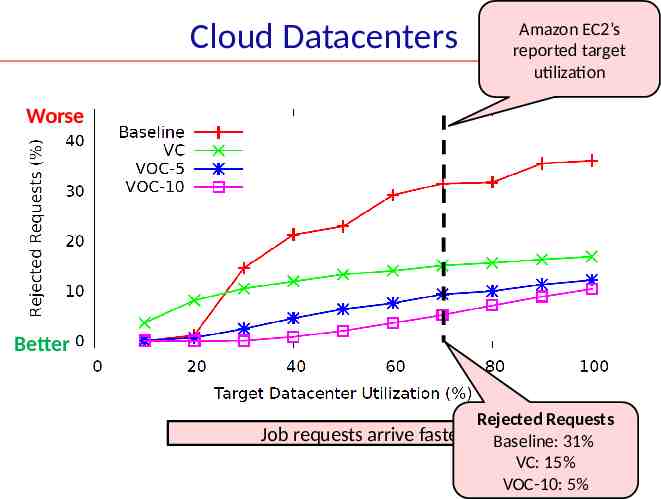

Cloud Datacenters

Tenant job requests arrive over time, and jobs are rejected if they cannot be accommodated on arrival (representative of cloud datacenters). The plot varies how fast job requests arrive and marks Amazon EC2's reported target utilization. Rejected requests: Baseline: 31%; VC: 15%; VOC-10: 5%.

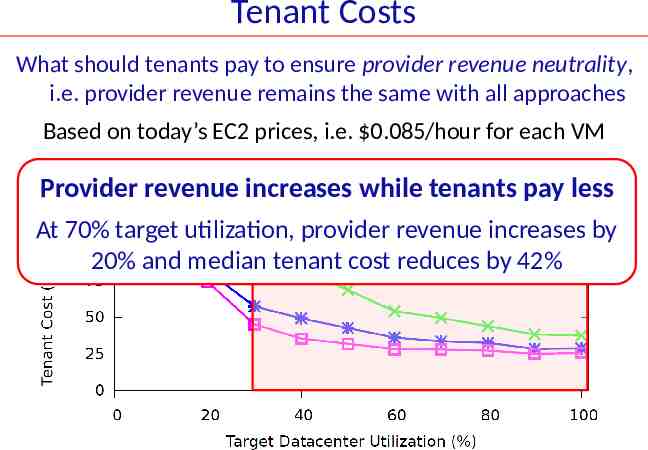

Tenant Costs

What should tenants pay to ensure provider revenue neutrality, i.e., that provider revenue remains the same with all approaches? Based on today's EC2 prices, i.e., $0.085/hour for each VM, provider revenue increases while tenants pay less: at 70% target utilization, provider revenue increases by 20% and median tenant cost drops by 42%.

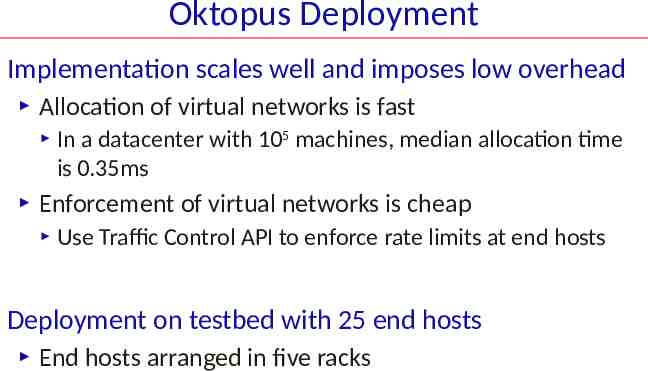

Oktopus Deployment

The implementation scales well and imposes low overhead. Allocation of virtual networks is fast: in a datacenter with 10^5 machines, the median allocation time is 0.35 ms. Enforcement of virtual networks is cheap: the Traffic Control API is used to enforce rate limits at end hosts. The deployment runs on a testbed with 25 end hosts arranged in five racks.

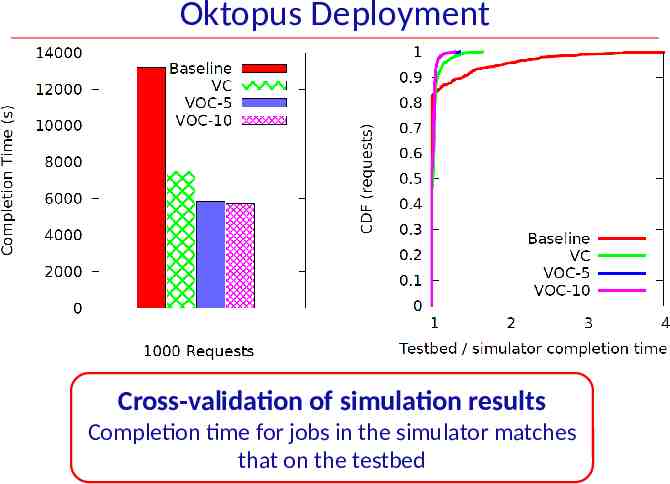

Oktopus Deployment

Cross-validation of simulation results: the completion time for jobs in the simulator matches that on the testbed.

Summary

Proposal: offer virtual networks to tenants. Virtual network abstractions resemble physical networks in enterprises and make the transition easier for tenants. Proof of concept: Oktopus. Tenants get guaranteed network performance, with sufficient multiplexing for providers. Win-win: tenants pay less, providers earn more! Remaining question: how to determine tenant network demands?

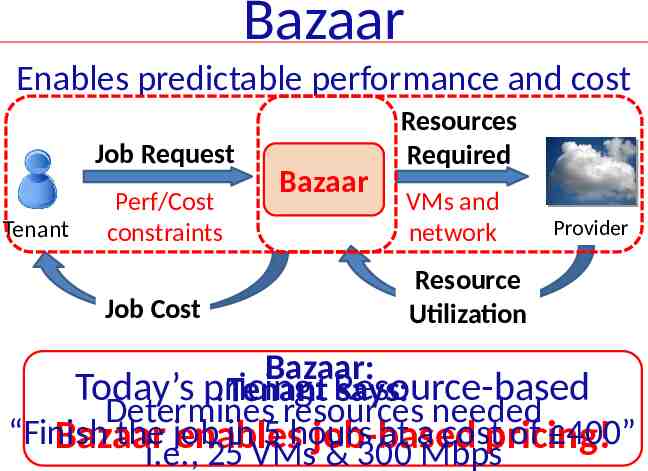

Bazaar

Bazaar enables predictable performance and cost. Today's pricing is resource-based. With Bazaar, a tenant submits a job request with performance/cost constraints; for example, the tenant says: “Finish the job in 5 hours at a cost of $400”. Bazaar determines the resources required (VMs and network, e.g., 25 VMs and 300 Mbps), taking the provider's resource utilization into account, and returns the job cost. Bazaar enables job-based pricing!

Thank you

Oktopus

Oktopus offers virtual networks to tenants in datacenters. It has two main components. Management plane (allocation of tenant requests): allocates tenant requests to the physical infrastructure, accounting for tenant network bandwidth requirements. Data plane (enforcement of virtual networks): enforces tenant bandwidth requirements through rate limiting at end hosts.