Aamer Jaleel, Kevin B. Theobald, Simon C. Steely Jr. , Joel Emer

28 Slides719.13 KB

Aamer Jaleel, Kevin B. Theobald, Simon C. Steely Jr. , Joel Emer Intel Corporation High Performance Cache Replacement Using ReReference Interval Prediction (RRIP) The ACM IEEE International Symposium on Computer Architecture (ISCA) conference, June 19–23, 2010, Saint-Malo, France. Chien-Chih(Paul) Chao Chih-Chiang(Michael) Chang Instructor: Dr. Ann Gordon-Ross 1 / 20

Agenda Motivation Background Least Recently Used (LRU) policy Dynamic Insertion Policy (DIP) Least Frequently Used(LFU) policy Re-Reference Interval Prediction (RRIP) Not Recently Used policy Static RRIP Dynamic RRIP Experimental Methodology Results and Analysis Conclusion 2 / 20

Cache Replacement Policies Cache stores the frequently required data Discard items to make room for the new ones when cache is full. http://i.crn.com/enc/ CACHEMEM.GIF http://en.wikipedia.org/wiki/Cache algorithms http://www.mymodernmet.com/profiles/blogs/ 2100445:BlogPost:33176 3

Motivation Efficient last-level cache(LLC) utilization Avoid long latency cache misses to main memory Need a practical cache replacement policy that is not only thrashresistant but scan-resistant 4

Background LRU Replacement Policies DIP (Improvement of LRU) Cache Access Patterns LRU/ LFU Hybrid replacement policy Comparison of DIP and Hybrid(LRU/LFU) Improving LLC performance by targeting cache blocks that are dead upon cache insertion 5

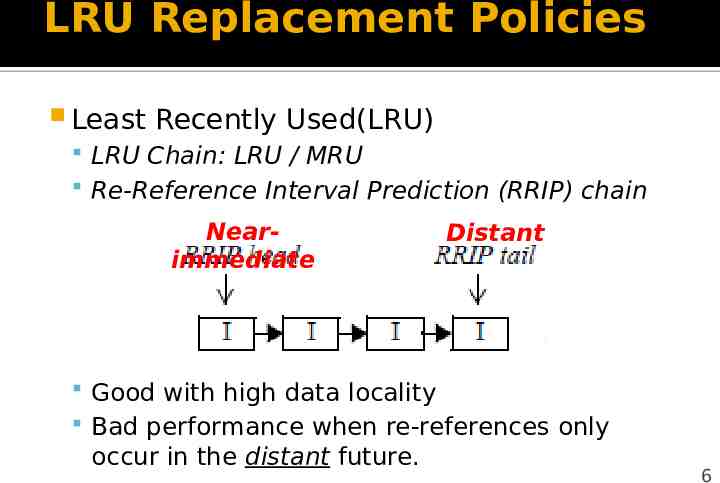

LRU Replacement Policies Least Recently Used(LRU) LRU Chain: LRU / MRU Re-Reference Interval Prediction (RRIP) chain Nearimmediate Distant Good with high data locality Bad performance when re-references only occur in the distant future. 6

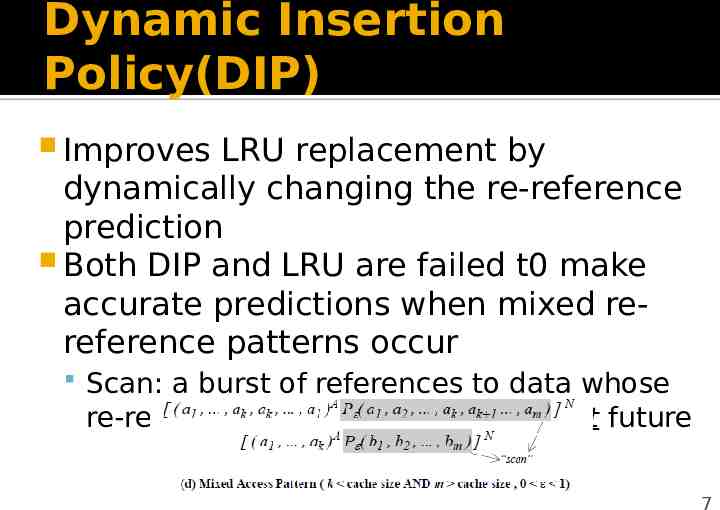

Dynamic Insertion Policy(DIP) Improves LRU replacement by dynamically changing the re-reference prediction Both DIP and LRU are failed t0 make accurate predictions when mixed rereference patterns occur Scan: a burst of references to data whose re-reference interval is in the distant future 7

Cache Access Patterns Recency-friendly Access Pattern Thrashing Access Pattern Streaming Access Pattern Mixed Access Pattern http://www.islington.gov.uk/education/libraries/ borrowingfromlibrary.asp 8

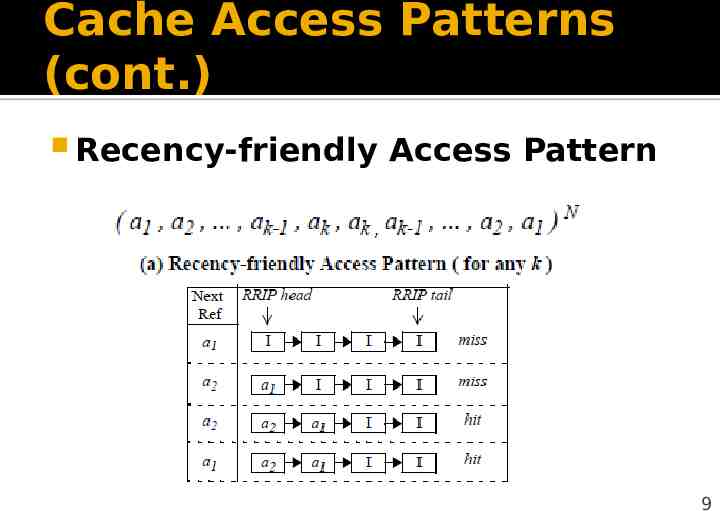

Cache Access Patterns (cont.) Recency-friendly Access Pattern 9

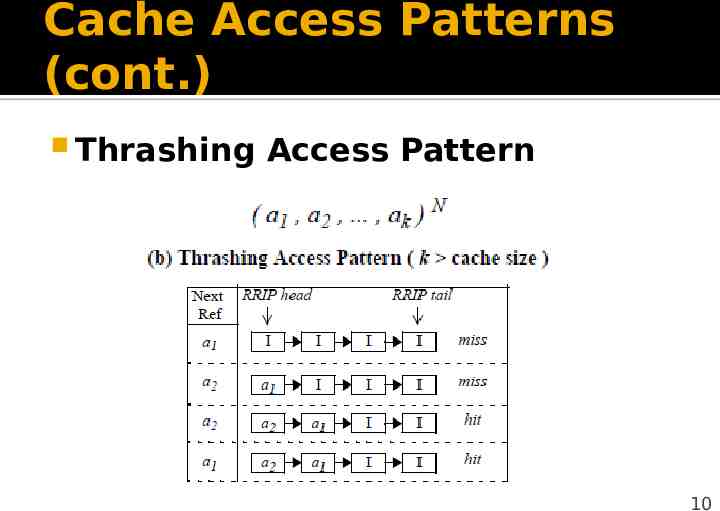

Cache Access Patterns (cont.) Thrashing Access Pattern 10

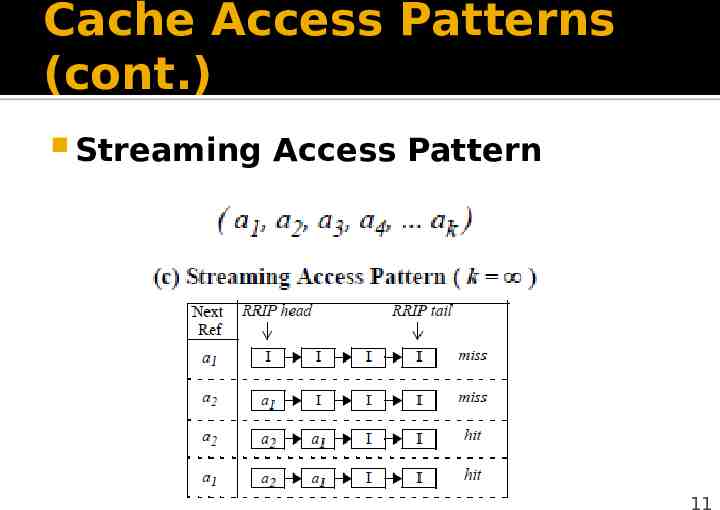

Cache Access Patterns (cont.) Streaming Access Pattern 11

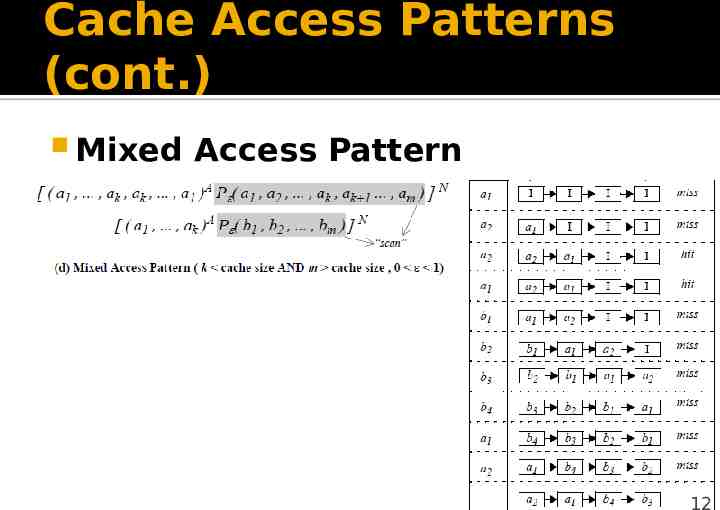

Cache Access Patterns (cont.) Mixed Access Pattern 12

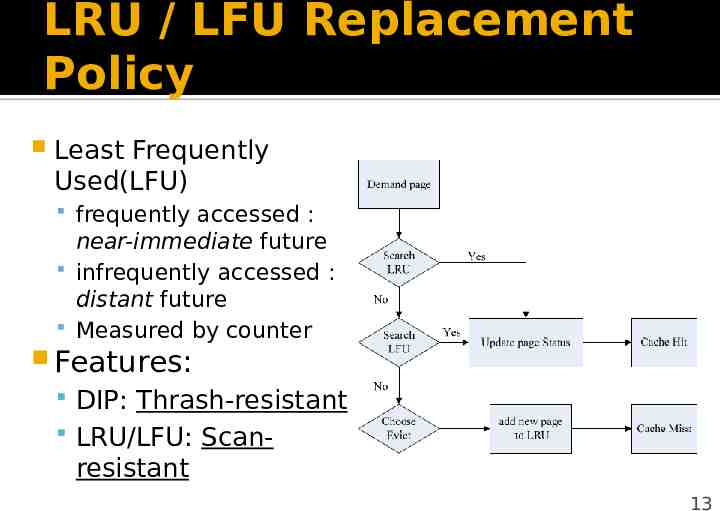

LRU / LFU Replacement Policy Least Frequently Used(LFU) frequently accessed : near-immediate future infrequently accessed : distant future Measured by counter Features: DIP: Thrash-resistant LRU/LFU: Scan- resistant 13

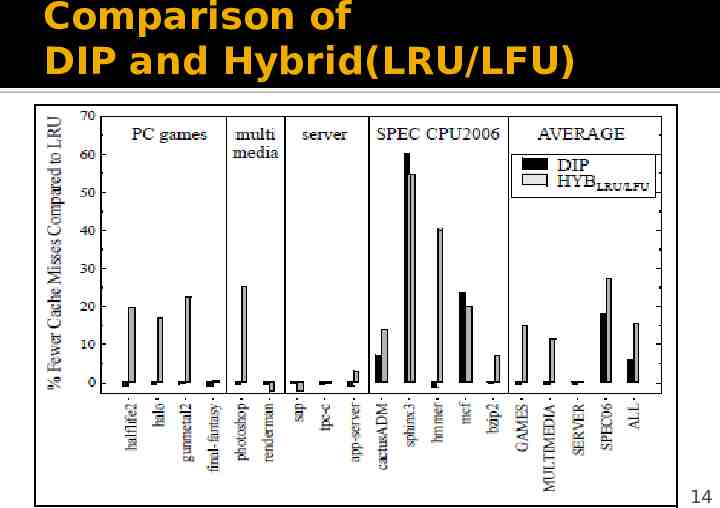

Comparison of DIP and Hybrid(LRU/LFU) 14

Re-Reference Intervall Prediction Not Recently Used (NRC) replacement policy Static RRIP SRRIP with Hit priority SRRIP with Frequency priority Dynamic Behavior RRIP for a Mixed Access Pattern Experimental methodology and Results Simulator 15 / 20

NRC replacement policy Motivation LRU cannot perform to mixed access patterns Chained-based LRU is impractical for highly associative caches The nru-bit Value of ‘1’ implies was recently used and is predicted to be re-referenced in the nearimmediate future Value of ‘0’ implies was not recently used and is predicted to be re-referenced in the distant future 16 / 20

Static RRIP Motivation One bit of information is not enough NRU cannot identify non-scan blocks in a mix access pattern M-bit Re-Reference Prediction Values (RRPV) 2M possible RRPV eables intermediate re-reference intervals predicton Hit Priority (HP) Updates RRIP to be near-immediate on a hit Prioritize replacement of blocks with no hits Frequency Priority Decrementing the RRPV register on cache hits Prioritize replacement of blocks with infrequently re-ref 17 / 20

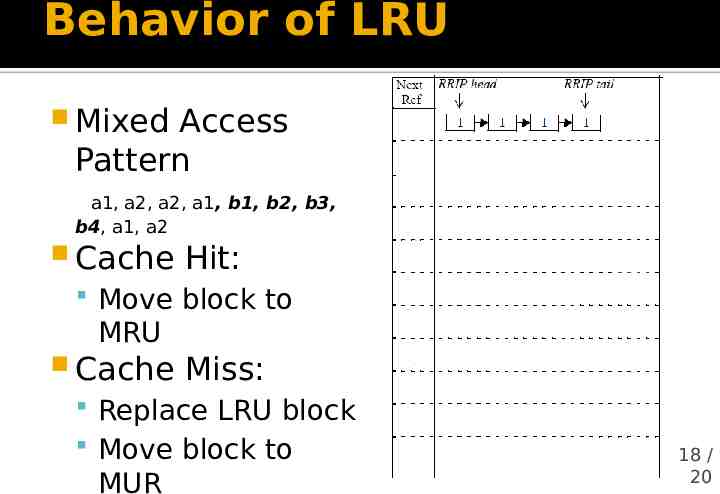

Behavior of LRU Mixed Access Pattern a1, a2, a2, a1, b1, b2, b3, b4, a1, a2 Cache Hit: Move block to MRU Cache Miss: Replace LRU block Move block to MUR 18 / 20

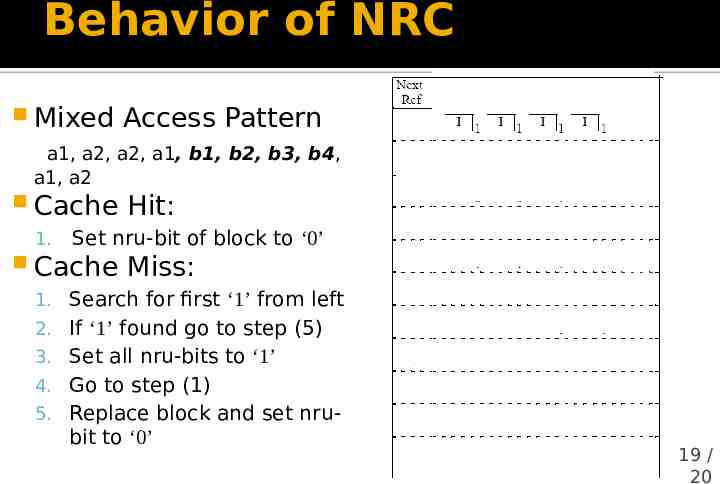

Behavior of NRC Mixed Access Pattern a1, a2, a2, a1, b1, b2, b3, b4, a1, a2 Cache 1. Hit: Set nru-bit of block to ‘0’ Cache Miss: 1. Search for first ‘1’ from left 2. If ‘1’ found go to step (5) 3. Set all nru-bits to ‘1’ 4. Go to step (1) 5. Replace block and set nru- bit to ‘0’ 19 / 20

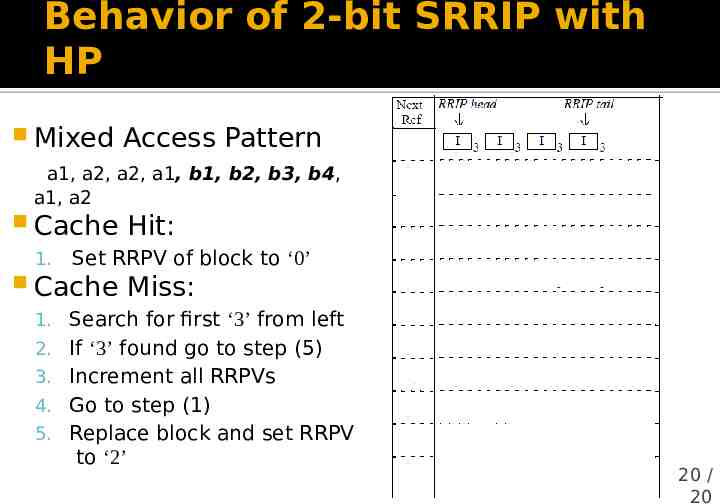

Behavior of 2-bit SRRIP with HP Mixed Access Pattern a1, a2, a2, a1, b1, b2, b3, b4, a1, a2 Cache 1. Hit: Set RRPV of block to ‘0’ Cache Miss: 1. Search for first ‘3’ from left 2. If ‘3’ found go to step (5) 3. Increment all RRPVs 4. Go to step (1) 5. Replace block and set RRPV to ‘2’ 20 / 20

Dynamic RRIP Motivation SRRIP does not thrash-resistant Bimodal RRIP (BRRIP) Similar to Bimodal Insertion Policy of DIP Insert majority of cache blocks with distant re-ref Insert infrequently with a long re-ref interval Set Dueling Choose between scan-resistant SRRIP and thrash- resistant BRRIP by using two Set Dueling Mointors Use a single policy selection counter 21 / 20

Experimental Methodology Simulator CMP IM 4-way out-of-oreder 128-entry reorder buffer 3 level cache hierarchy Benchmarks 5 workloads from SPEC CPU2006 9 “real world” workloads PC Games Multimedia Server 22 / 20

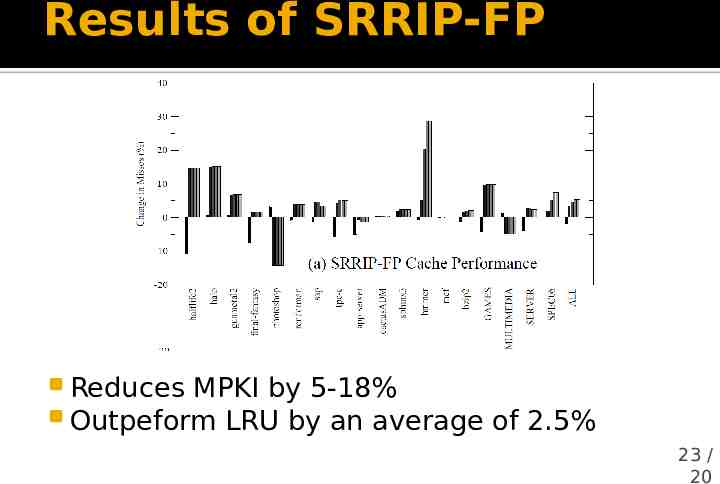

Results of SRRIP-FP Reduces MPKI by 5-18% Outpeform LRU by an average of 2.5% 23 / 20

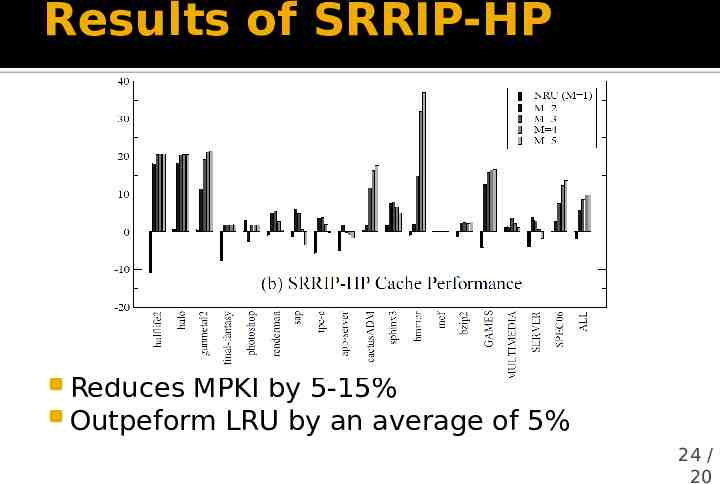

Results of SRRIP-HP Reduces MPKI by 5-15% Outpeform LRU by an average of 5% 24 / 20

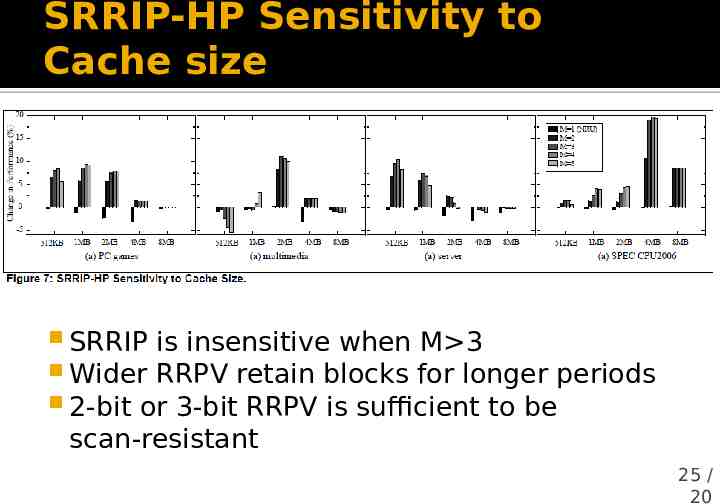

SRRIP-HP Sensitivity to Cache size SRRIP is insensitive when M 3 Wider RRPV retain blocks for longer periods 2-bit or 3-bit RRPV is sufficient to be scan-resistant 25 / 20

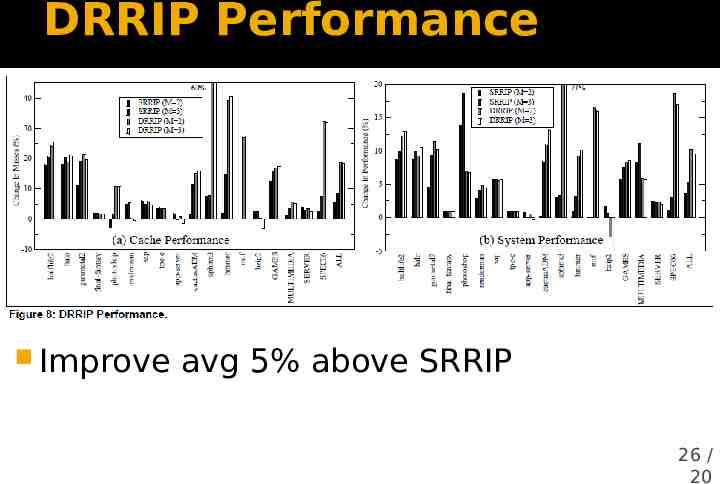

DRRIP Performance Improve avg 5% above SRRIP 26 / 20

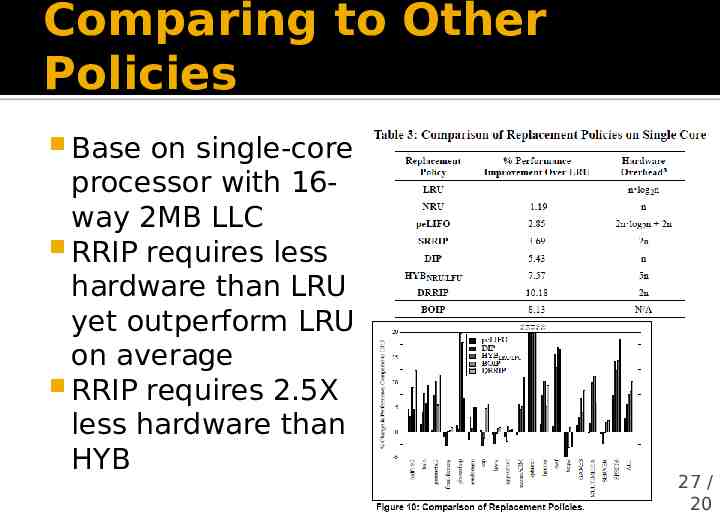

Comparing to Other Policies Base on single-core processor with 16way 2MB LLC RRIP requires less hardware than LRU yet outperform LRU on average RRIP requires 2.5X less hardware than HYB 27 / 20

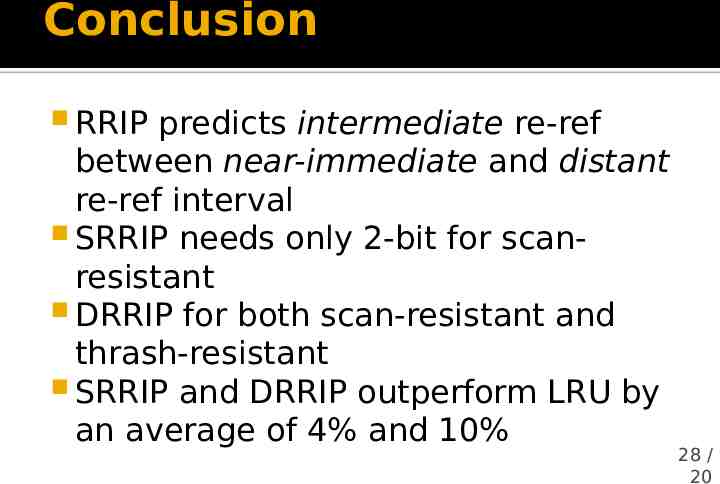

Conclusion RRIP predicts intermediate re-ref between near-immediate and distant re-ref interval SRRIP needs only 2-bit for scanresistant DRRIP for both scan-resistant and thrash-resistant SRRIP and DRRIP outperform LRU by an average of 4% and 10% 28 / 20