Survey Research Methods Refs: H. Müller, A. Sedley, and E. Ferrall-Nunge

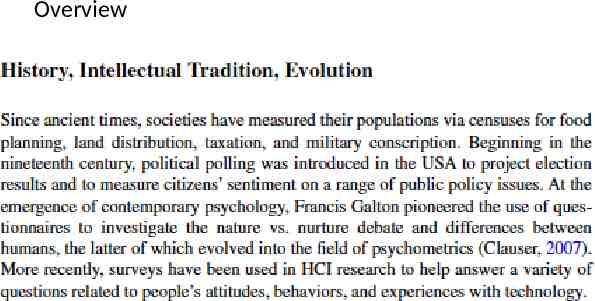

Overview A survey is a method of gathering information by asking questions to a subset of people, the results of which can be generalized to the wider target population. There are many different types of surveys, many ways to sample a population, and many ways to collect data from that population. Traditionally, surveys have been administered via mail, telephone, or in person. The Internet has become a popular mode for surveys due to the low cost of gathering data, ease and speed of survey administration, and its broadening reach across a variety of populations worldwide.

Overview In human–computer interaction (HCI) research, surveys can be useful for assessing:

1. User Attitudes
2. Intent
3. Task Success
4. User Experience Feedback
5. User Characteristics
6. Interactions with Technology
7. User Awareness

Surveys are less appropriate for measuring:

1. Precise Behaviors
2. Underlying Motivations
3. Usability Evaluations

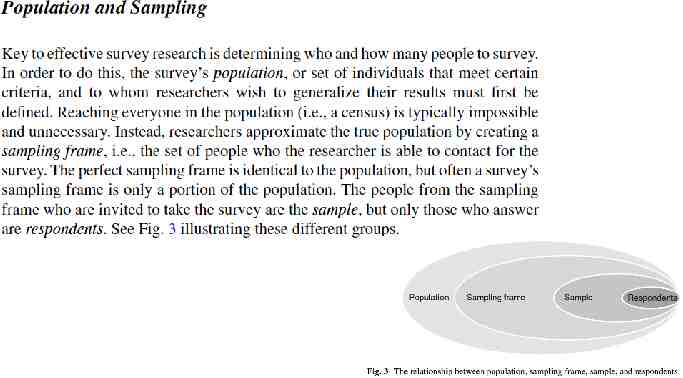

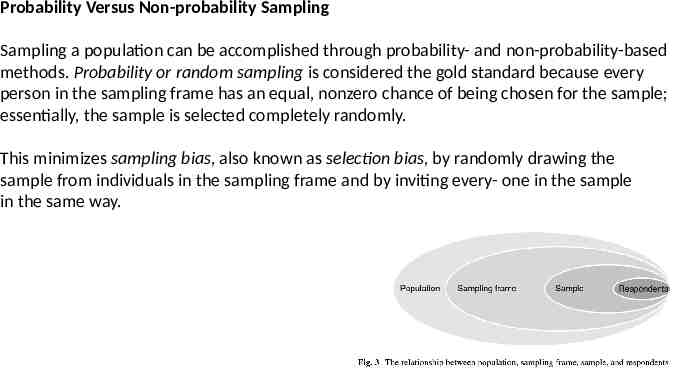

Probability Versus Non-probability Sampling Sampling a population can be accomplished through probability- and non-probability-based methods. Probability or random sampling is considered the gold standard because every person in the sampling frame has an equal, nonzero chance of being chosen for the sample; essentially, the sample is selected completely randomly. This minimizes sampling bias, also known as selection bias, by randomly drawing the sample from individuals in the sampling frame and by inviting everyone in the sample in the same way.
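As a rough illustration of probability sampling, the sketch below draws a simple random sample without replacement from a sampling frame. The frame of user IDs, the function name, and the seed are hypothetical and only serve the example.

```python
import random


def draw_simple_random_sample(sampling_frame, sample_size, seed=None):
    """Draw a simple random sample without replacement.

    Every member of the sampling frame has the same chance of selection.
    """
    members = list(sampling_frame)
    if sample_size > len(members):
        raise ValueError("sample_size cannot exceed the size of the sampling frame")
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible
    return rng.sample(members, sample_size)


# Hypothetical sampling frame of 1,000 user IDs
frame = [f"user_{i:04d}" for i in range(1000)]
invited = draw_simple_random_sample(frame, 50, seed=42)
```

Everyone in `invited` would then receive the same invitation, so that the way people are contacted does not introduce a bias of its own.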

Convenience Sampling While probability sampling is ideal, it is often impossible to reach and randomly select from the entire target population, especially when targeting small populations (e.g., users of a specialized enterprise product or experts in a particular field) or investigating sensitive or rare behavior. In these situations, researchers may use non-probability sampling methods such as volunteer opt-in panels, unrestricted self-selected surveys (e.g., links on blogs and social networks), snowball recruiting (i.e., asking for friends of friends), and convenience samples (i.e., targeting people readily available, such as mall shoppers).

Sample Size and Error Margin First, the researcher needs to determine approximately how many people make up the population being studied. Second, as the survey does not measure the entire population, the required level of precision must be chosen, which consists of the margin of error and the confidence level. The margin of error expresses the amount of sampling error in the survey, i.e., the range of uncertainty around an estimate of a population measure, assuming normally distributed data. For example, if 60 % of the sample claims to use a tablet computer, a 5 % margin of error would mean that actually 55–65 % of the population use tablet computers.
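The tablet example can be reproduced with a few lines of arithmetic. The sketch below uses the standard normal-approximation formula for the margin of error of a proportion, which assumes simple random sampling from a large population; the function names and the z-score table are illustrative choices, not taken from the slides.

```python
import math

# z-scores (standard normal quantiles) for commonly used confidence levels
Z_SCORES = {0.90: 1.645, 0.95: 1.960, 0.99: 2.576}


def margin_of_error(proportion, sample_size, confidence=0.95):
    """Margin of error for a sample proportion (normal approximation)."""
    z = Z_SCORES[confidence]
    return z * math.sqrt(proportion * (1 - proportion) / sample_size)


def interval(proportion, moe):
    """Range of plausible population values around the sample estimate."""
    return (proportion - moe, proportion + moe)


# 60 % of the sample uses a tablet, with a 5 % margin of error
low, high = interval(0.60, 0.05)  # roughly 0.55 to 0.65
```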

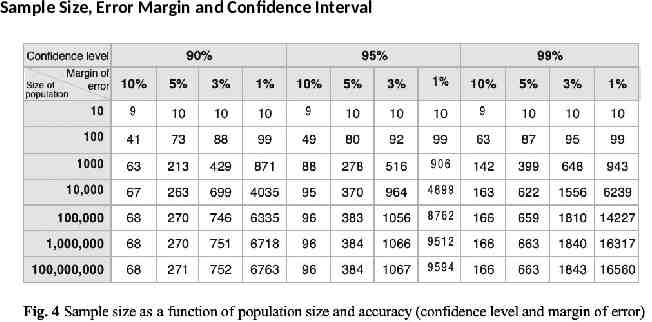

Sample Size and Confidence Interval The confidence level indicates how likely it is that the reported metric falls within the margin of error if the study were repeated. A 95 % confidence level, for example, would mean that 95 % of the time, observations from repeated sampling will fall within the interval defined by the margin of error. Commonly used confidence levels are 99, 95, and 90 %. Figure 4, based on Krejcie and Morgan’s formula (1970), shows the appropriate sample size, given the population size, as well as the chosen margin of error and confidence level for your survey. Note that the table is based on a population proportion of 50 % for the response of interest, the most cautious estimation. For example, for a population larger than 100,000, a sample size of 384 is required to achieve a confidence level of 95 % and a margin of error of 5 %.
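For readers who want to compute a sample size rather than look it up, here is a minimal sketch of the Krejcie and Morgan (1970) formula, s = X²NP(1 − P) / (d²(N − 1) + X²P(1 − P)). The function name, the parameter names, and the decision to round to the nearest whole number are assumptions made for this example.

```python
# Chi-square critical values for 1 degree of freedom (the squared z-scores)
CHI_SQUARE_1DF = {0.90: 2.706, 0.95: 3.841, 0.99: 6.635}


def required_sample_size(population_size, margin_of_error=0.05,
                         confidence=0.95, proportion=0.5):
    """Sample size from the Krejcie and Morgan (1970) formula.

    proportion=0.5 is the most cautious assumption because it
    maximizes the variance term P(1 - P).
    """
    chi2 = CHI_SQUARE_1DF[confidence]
    variance = proportion * (1 - proportion)
    numerator = chi2 * population_size * variance
    denominator = margin_of_error ** 2 * (population_size - 1) + chi2 * variance
    return round(numerator / denominator)


large_population = required_sample_size(1_000_000)  # 384
small_population = required_sample_size(1_000)      # 278
```

The required sample grows only slowly with population size: going from 1,000 to 1,000,000 people raises it from 278 to 384 at a 95 % confidence level and a 5 % margin of error.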

Sample Size, Error Margin and Confidence Interval

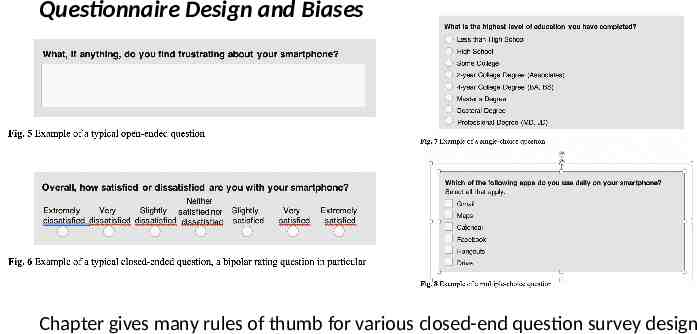

Questionnaire Design and Biases The chapter gives many rules of thumb for designing closed-ended survey questions.

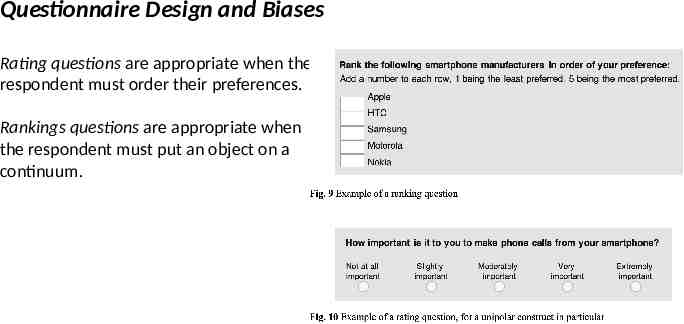

Questionnaire Design and Biases Ranking questions are appropriate when respondents must order their preferences. Rating questions are appropriate when respondents must place an object on a continuum.

Questionnaire Biases Five common questionnaire biases: satisficing, acquiescence bias, social desirability, response order bias, and question order bias.

Satisficing Biases Satisficing occurs when respondents use a suboptimal amount of cognitive effort to answer questions. Instead, satisficers will typically pick what they consider to be the first acceptable response alternative. Satisficing compromises one or more of four cognitive steps:

1. Comprehension of the question, instructions, and answer options
2. Retrieval of specific memories to aid with answering the question
3. Judgement of the retrieved information and its applicability to the question
4. Mapping of judgement onto the answer options

Satisficers shortcut this process by exerting less cognitive effort or by skipping one or more steps entirely. To minimize satisficing:

1. Ask simple instead of complex questions
2. Avoid “don’t know”, “unsure”, and similar answer options
3. Avoid using the same rating scale in back-to-back questions
4. Keep questionnaires short
5. Increase motivation by stressing the importance of, and gain from, participating for the respondent
6. Ask respondents to justify their answers
7. Use trap questions

Acquiescence Biases When presented with agree/disagree, yes/no, or true/false statements, some respondents are more likely to concur with the statement independent of its substance or source. This tendency has origins in both cognitive and personality variables. To minimize acquiescence bias:

- Avoid questions with agree/disagree, yes/no, true/false, or similar answer options
- Where possible, ask construct-specific questions (i.e., questions that ask about the underlying construct in a neutral, non-leading way) instead of agreement statements
- Use reverse-keyed constructs; i.e., the same construct is asked positively and negatively in the same survey. The raw scores of both responses are then combined to correct for acquiescence bias.
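One way to combine reverse-keyed responses is sketched below. The slides only say that the raw scores are "combined"; flipping the negatively worded item and averaging the pair is an assumption made for illustration, as are the 1 to 5 scale and the function names.

```python
def reverse_score(raw, scale_min=1, scale_max=5):
    """Flip a response to a reverse-keyed item so that a higher value
    always means more of the construct."""
    if not scale_min <= raw <= scale_max:
        raise ValueError("response lies outside the rating scale")
    return scale_max + scale_min - raw


def construct_score(positive_item, negative_item, scale_min=1, scale_max=5):
    """Average the positively keyed response with the flipped
    response to its negatively keyed twin."""
    flipped = reverse_score(negative_item, scale_min, scale_max)
    return (positive_item + flipped) / 2


# A respondent who agrees with both wordings ends up at the scale midpoint
acquiescent = construct_score(5, 5)  # 3.0
# A respondent who answers consistently keeps a high score
consistent = construct_score(5, 1)   # 5.0
```

Under this scheme, blanket agreement no longer inflates the construct score, because the two agreeing answers pull in opposite directions.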

Social Desirability Biases Social desirability bias occurs when respondents answer questions in a manner they feel will be positively perceived by others. Respondents are inclined to provide socially desirable answers when:

- Their behavior or views go against the social norm
- They are asked to provide information on sensitive topics, making them feel uncomfortable or embarrassed
- They perceive a threat of disclosure or consequences to answering truthfully
- Their true identity (e.g., name, address, phone number) is captured in the survey
- The data is directly collected by another person (e.g., in-person or phone surveys)

To minimize social desirability bias, respondents should be allowed to answer anonymously or the survey should be self-administered.

Response Order Biases Response order bias is the tendency to select the items toward the beginning (i.e., a primacy effect) or the end (i.e., a recency effect) of an answer list or scale. To minimize response order effects, the following may be considered:

- Unrelated answer options should be randomly ordered across respondents
- Rating scales should be ordered from negative to positive, with the most negative item first
- The order of ordinal scales should be reversed randomly between respondents, and the raw scores of both scale versions should be averaged using the same value for each scale label. That way, the response order effects cancel each other out across respondents
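The randomization and scoring ideas above can be sketched as follows. The satisfaction labels, function names, and the 50/50 reversal are hypothetical; the point is that each label keeps the same value whichever way the scale was displayed.

```python
import random

SCALE_LABELS = ["Very dissatisfied", "Dissatisfied", "Neutral",
                "Satisfied", "Very satisfied"]
# Each label keeps the same value regardless of display order
LABEL_VALUES = {label: value for value, label in enumerate(SCALE_LABELS, start=1)}


def options_for_respondent(unrelated_options, rng):
    """Return unrelated answer options in a fresh random order."""
    shuffled = list(unrelated_options)
    rng.shuffle(shuffled)
    return shuffled


def scale_for_respondent(rng):
    """Show the ordinal scale negative-to-positive or reversed, at random."""
    if rng.random() < 0.5:
        return list(reversed(SCALE_LABELS))
    return list(SCALE_LABELS)


def score(selected_label):
    """Map a selected label to its fixed value."""
    return LABEL_VALUES[selected_label]


rng = random.Random(7)  # seeded only to make the example reproducible
displayed = scale_for_respondent(rng)
browsers = options_for_respondent(["Chrome", "Firefox", "Safari"], rng)
mean_score = sum(score(s) for s in ["Satisfied", "Very satisfied", "Neutral"]) / 3
```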

Open-Ended Questions

- Avoid overly broad questions (broader than actually experienced)
- Avoid leading questions (don’t suggest a right answer)
- Avoid querying about multiple objects/options in a preference or ranking
- Avoid relying on highly accurate memory recall
- Avoid prediction questions (don’t ask respondents to anticipate future behavior or future attitudes)

Implementation There are many platforms and tools that can be used to implement Internet surveys, such as ConfirmIt, Google Forms, Kinesis, LimeSurvey, SurveyGizmo, SurveyMonkey, UserZoom, Wufoo, and Zoomerang, to name just a few. When deciding on the appropriate platform, functionality, cost, and ease of use should be taken into consideration. The questionnaire may require a survey tool that supports functionality such as branching and conditionals, the ability to pass URL parameters, multiple languages, and a range of question types. The chapter also discusses techniques for maximizing response rates, among other topics.
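To make URL parameters and branching more concrete, the sketch below builds a survey link that carries context in its query string and picks the next question from an earlier answer. The base URL, parameter names, and question IDs are all hypothetical and do not reflect the API of any of the tools listed; it uses only Python's standard `urllib.parse` module.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

BASE_URL = "https://survey.example.com/s/abc123"  # hypothetical survey link


def build_survey_link(base_url, **params):
    """Append context (e.g., recruitment source, language) as URL parameters."""
    return f"{base_url}?{urlencode(params)}"


def read_params(url):
    """Recover the passed parameters on the survey side."""
    return {key: values[0] for key, values in parse_qs(urlsplit(url).query).items()}


def next_question(answers):
    """Simple branching: the follow-up depends on an earlier answer."""
    if answers.get("uses_tablet") == "yes":
        return "tablet_satisfaction"
    return "reasons_for_not_using_tablet"


link = build_survey_link(BASE_URL, source="newsletter", lang="en")
context = read_params(link)
follow_up = next_question({"uses_tablet": "yes"})
```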