Life Cycle of Transactional Data in In-memory Databases Authors: Amit

24 Slides1.08 MB

Life Cycle of Transactional Data in In-memory Databases Authors: Amit Pathak, Aditya Gurajada, Pushkar Khadilkar SAP Labs India, Presented by: Pushkar Khadilkar April 16, 2018 PUBLIC

Agenda Background Overall Architecture Auto IMRS Partition Tuning Packing Cold Data Experiments Conclusions 2018 SAP SE or an SAP affiliate company. All rights reserved. ǀ PUBLIC 2

ASE Background SAP Adaptive Server Enterprise (ASE) is a fully featured, ANSI compliant, highly scalable, relational Database engine that provides portable, multi-platform system for high performance data management. High performance geared towards OLTP oriented applications. Traditionally disk based. ASE 16.0 SP03 : Extreme OLTP (XOLTP) architecture to enhance performance In-memory row storage (IMRS) added to support in-memory processing of hot rows Information life cycle management (ILM) handles hot-cold data classification. 2018 SAP SE or an SAP affiliate company. All rights reserved. ǀ PUBLIC 3

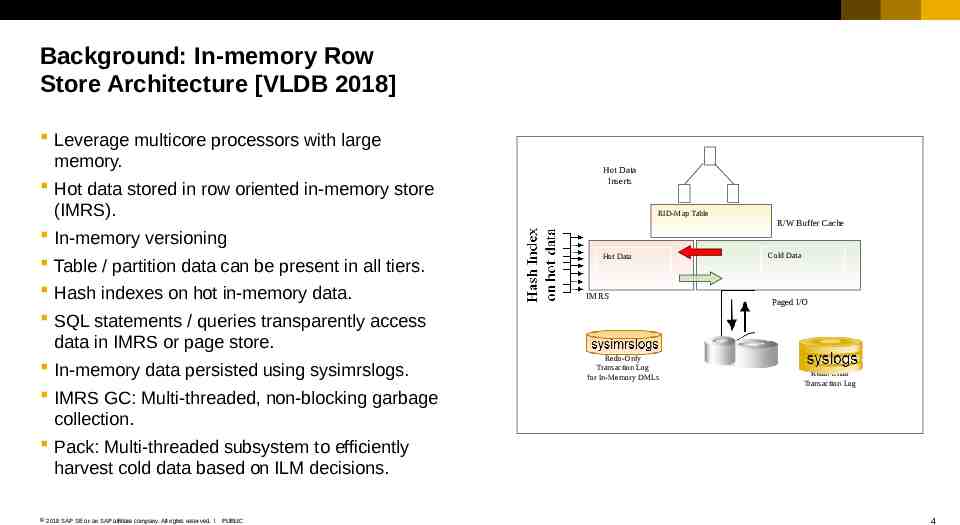

Background: In-memory Row Store Architecture [VLDB 2018] Leverage multicore processors with large memory. Hot data stored in row oriented in-memory store (IMRS). In-memory versioning Hot Data Inserts RID-Map Table R/W Buffer Cache Hot Data Table / partition data can be present in all tiers. Hash indexes on hot in-memory data. IMRS SQL statements / queries transparently access data in IMRS or page store. In-memory data persisted using sysimrslogs. Redo-Only Transaction Log for In-Memory DMLs IMRS GC: Multi-threaded, non-blocking garbage collection. Pack: Multi-threaded subsystem to efficiently harvest cold data based on ILM decisions. 2018 SAP SE or an SAP affiliate company. All rights reserved. ǀ PUBLIC Cold Data Paged I/O Redo/Undo Transaction Log 4

ILM Design Goals and Principles Objectives Keep cache utilization stable while retaining hot rows. Application compatibility. Minimal user tuning Respond to changing workloads. Low transaction impact. 2018 SAP SE or an SAP affiliate company. All rights reserved. ǀ PUBLIC Guiding Access Patterns Frequency of data access Contention in page store. For example, latch contention. Type of operation: Insert / Select / Update / Delete / Utilities Granularity: Row, partition, table. 5

Auto IMRS Partition Tuning

Auto IMRS Partition Tuning: Introduction Individual Partitions affected differently by the workload For example, partition containing latest orders could be hot. Partition Tuning: Disable or re-enable use in-memory storage for certain DMLs on the partition. Disable IMRS usage if rows are not significantly re-used by the workload. Enable IMRS usage for a partition if IMRS may provide large gains. For example, latch contention on page store. Auto IMRS Partition Tuning: Allows users to enable IMRS at database level and automatically tune partitions based on changing workload patterns. Monitor 2018 SAP SE or an SAP affiliate company. All rights reserved. ǀ PUBLIC Self tuning 7

Auto IMRS Partition Tuning: Monitoring Workload monitoring to identify usage of partition Number of inserts / updates / deletes Memory used Total number of rows Parameters: Per-CPU cache friendly counters. Aggregated infrequently on read. Row access timestamps maintained for hotness Immediately updated for new versions. Periodically updated for selects. 2018 SAP SE or an SAP affiliate company. All rights reserved. ǀ PUBLIC 8

Auto IMRS Partition Tuning: Self Tuning ASE internally tunes IMRS usage by partition in a background thread. Counters are tracked for multiple iterations and counter differences are used to identify new IMRS usage for a partition. Heuristic Parameters: Average re-use of rows Partition IMRS utilization Total IMRS cache utilization New IMRS usage by a partition Contention on page store. Partition enable / disable decision is made if partition is found useful / unused for several iterations. 2018 SAP SE or an SAP affiliate company. All rights reserved. ǀ PUBLIC 9

Packing Cold Data

Packing Cold Data From IMRS Cold data moved to page store by pack background threads. Steady Cache Utilization Percentage Pack thread modes Steady state Aggressive Identification of cold rows Locating cold rows 2018 SAP SE or an SAP affiliate company. All rights reserved. ǀ PUBLIC 11

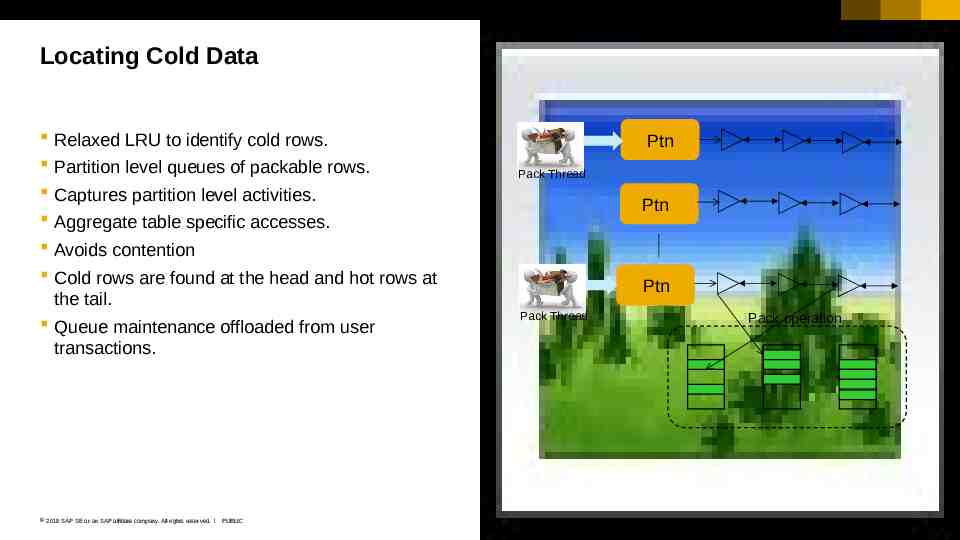

Click icon to add image Locating Cold Data Relaxed LRU to identify cold rows. Partition level queues of packable rows. Ptn Pack Thread Captures partition level activities. Aggregate table specific accesses. Avoids contention Cold rows are found at the head and hot rows at the tail. Queue maintenance offloaded from user transactions. 2018 SAP SE or an SAP affiliate company. All rights reserved. ǀ PUBLIC Ptn Ptn Pack Thread Pack operation 12

Locating Cold Data: Partition Aware Pack Selection Assumption: Partitions in OLTP workloads have similar coldness behavior. Patterns: Number of re-use operations Growing or stable partitions Pack cycle Time epochs Packs small percentage of total data in IMRS. Pack transactions Batch packing of a number of rows in partition. Partition specific transactions. Number of rows packed auto adjusted based on physical characteristics. 2018 SAP SE or an SAP affiliate company. All rights reserved. ǀ PUBLIC 13

Partition Aware Pack Selection: Pack Cycle Byte Distribution Objective: Distribute bytes to pack based on coldness of the partition. Usefulness Index (UI) Cache Utilization Index (CUI) Packability Index (PI) Bytes to pack * TotalBytesToPack 2018 SAP SE or an SAP affiliate company. All rights reserved. ǀ PUBLIC 14

Identifying Cold Data Time stamp filter (Ʈ) Approximates number of transactions which would cause a small fixed increase in IMRS cache size. Extrapolate to calculate number of transactions which would cause increase equivalent to “steady cache utilization” (P). Data accessed by most recently committed Ʈ transactions is retained. : begin commit timestamp, : Percentage of memory increase Commit timestamp after memory increase P: Steady cache utilization percentage. 2018 SAP SE or an SAP affiliate company. All rights reserved. ǀ PUBLIC 15

Experiments

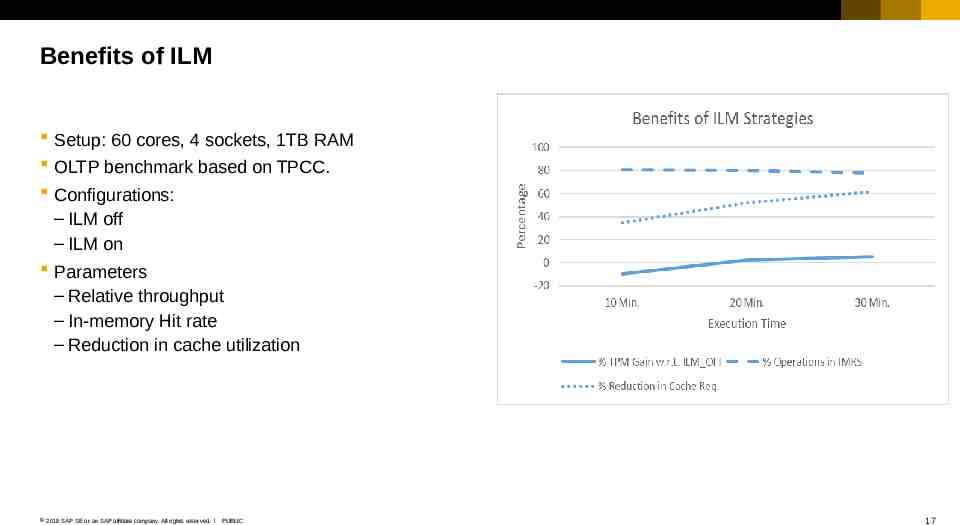

Benefits of ILM Click icon to add image Setup: 60 cores, 4 sockets, 1TB RAM OLTP benchmark based on TPCC. Configurations: – ILM off – ILM on Parameters – Relative throughput – In-memory Hit rate – Reduction in cache utilization 2018 SAP SE or an SAP affiliate company. All rights reserved. ǀ PUBLIC 17

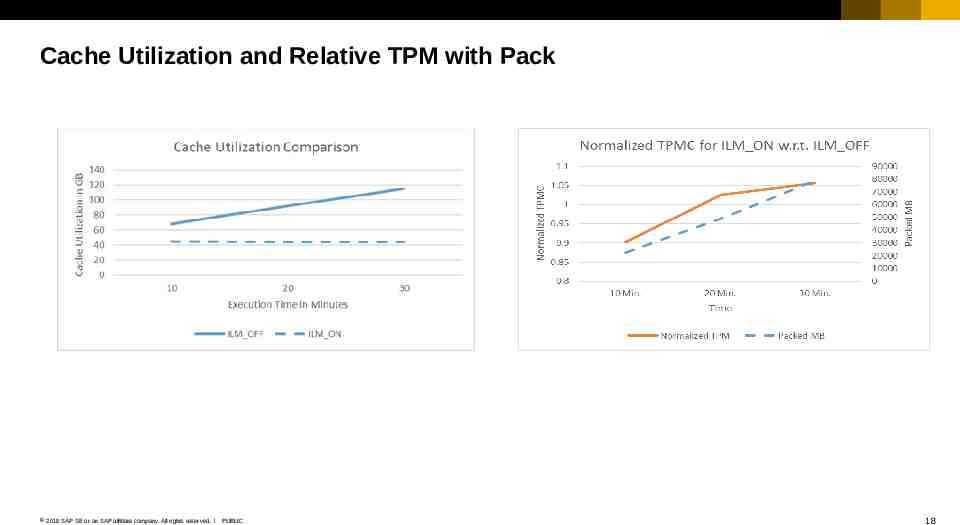

Cache Utilization and Relative TPM with Pack 2018 SAP SE or an SAP affiliate company. All rights reserved. ǀ PUBLIC 18

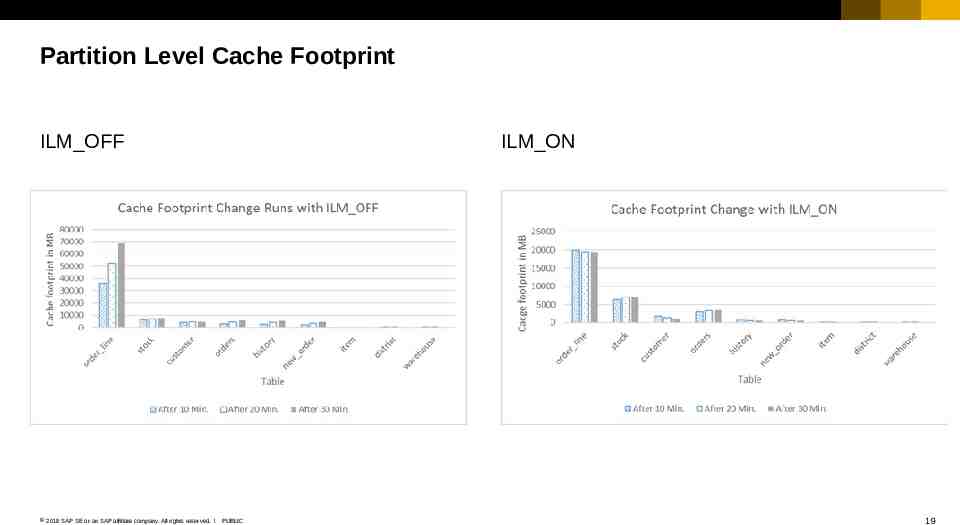

Partition Level Cache Footprint ILM OFF 2018 SAP SE or an SAP affiliate company. All rights reserved. ǀ PUBLIC ILM ON 19

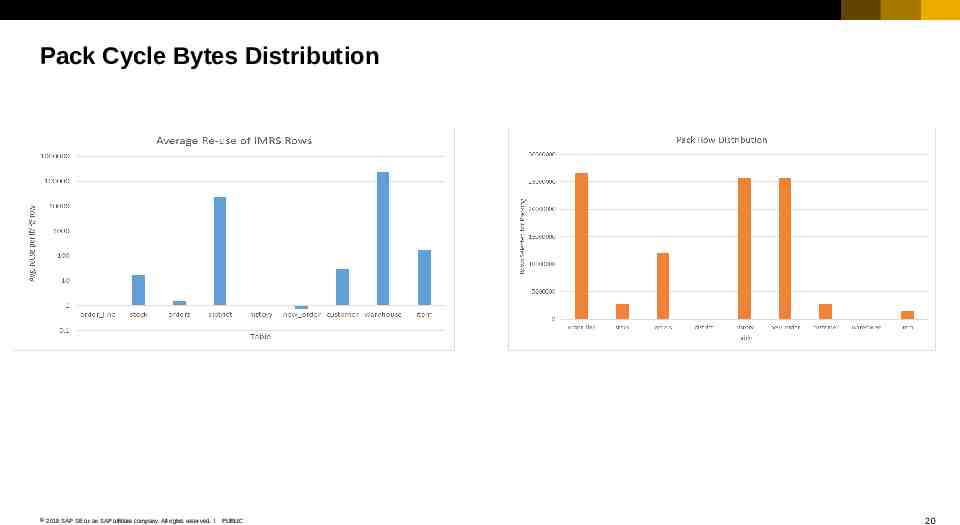

Pack Cycle Bytes Distribution 2018 SAP SE or an SAP affiliate company. All rights reserved. ǀ PUBLIC 20

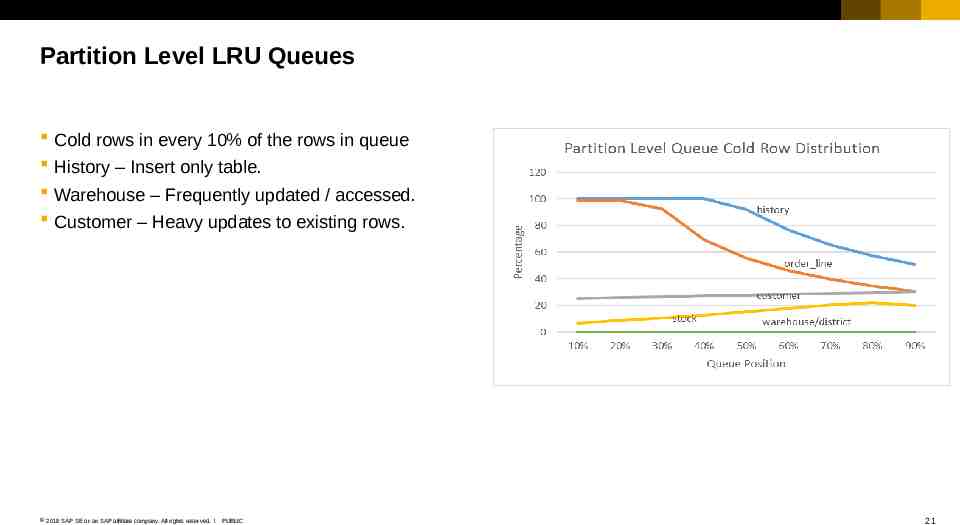

Partition Level LRU Queues Cold rows in every 10% of the rows in queue History – Insert only table. Warehouse – Frequently updated / accessed. Customer – Heavy updates to existing rows. 2018 SAP SE or an SAP affiliate company. All rights reserved. ǀ PUBLIC 21

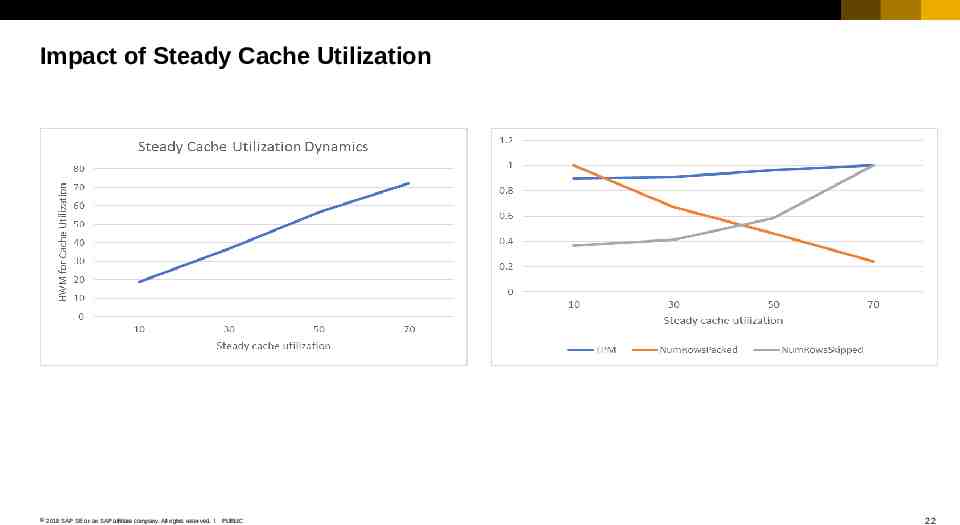

Impact of Steady Cache Utilization 2018 SAP SE or an SAP affiliate company. All rights reserved. ǀ PUBLIC 22

Conclusions ILM techniques retain only hot data in memory. Demonstrated tight integration of ILM techniques in OLTP database. Performance gains of in-memory processing possible on larger dataset. Stable cache utilization maintained without impacting throughput. Workload aware and auto tuned ILM reduces additional configuration overheads. 2018 SAP SE or an SAP affiliate company. All rights reserved. ǀ PUBLIC 23

Thank you. Contact information: Pushkar Khadilkar SAP Labs India [email protected] Partner logo