Expert Systems Reasonable Reasoning An Ad Hoc approach


Problems with Logical Reasoning Brittleness: one axiom/fact wrong, system can prove anything (Correctness) Large proof spaces (Efficiency) “Probably” not representable (Representation) No notion of combining evidence Doesn’t provide confidence in conclusion.

Lecture Goals What is an expert system? How can they be built? When are they useful? General Architecture Validation: – How to know if they are right

Class News Quiz dates and homework dates updated: Extra day for probability hwk/quiz All homeworks must be typed

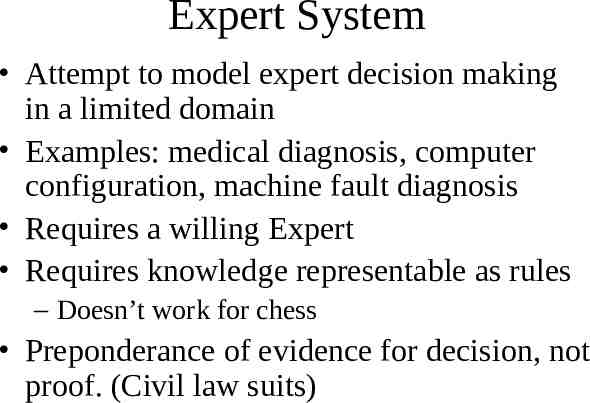

Expert System Attempt to model expert decision making in a limited domain Examples: medical diagnosis, computer configuration, machine fault diagnosis Requires a willing Expert Requires knowledge representable as rules – Doesn’t work for chess Preponderance of evidence for decision, not proof. (Civil law suits)

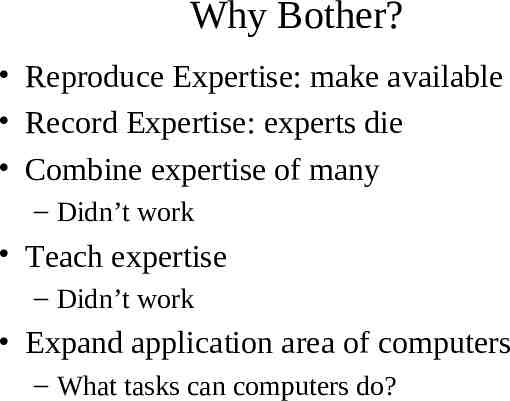

Why Bother? Reproduce Expertise: make available Record Expertise: experts die Combine expertise of many – Didn’t work Teach expertise – Didn’t work Expand application area of computers – What tasks can computers do?

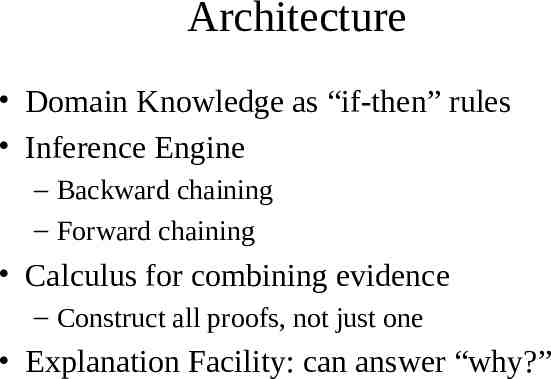

Architecture Domain Knowledge as “if-then” rules Inference Engine – Backward chaining – Forward chaining Calculus for combining evidence – Construct all proofs, not just one Explanation Facility: can answer “why?”

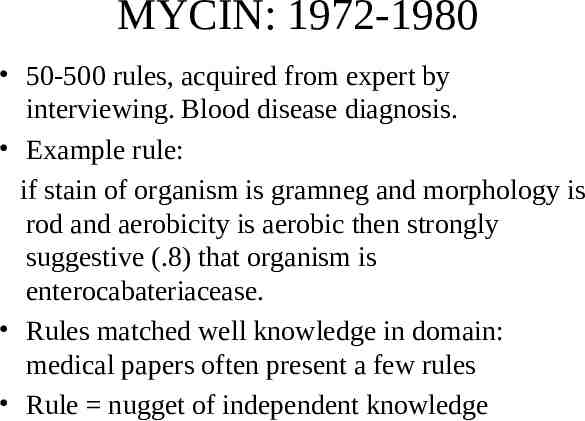

MYCIN: 1972-1980 50-500 rules, acquired from expert by interviewing. Blood disease diagnosis. Example rule: if stain of organism is gramneg and morphology is rod and aerobicity is aerobic then strongly suggestive (.8) that organism is Enterobacteriaceae. Rules matched knowledge in the domain well: medical papers often present a few rules. Each rule is a nugget of independent knowledge.

Facts are not facts Morphology is rod requires microscopic evaluation. – Is a bean shape a rod? – Is an “S” shape a rod? Morphology is rod is assigned a confidence. All “facts” assigned confidences, from 0 to 1.

MYCIN: Shortliffe Begins with a few facts about patient – Required by physicians but irrelevant Backward chains from each possible goal (disease). Preconditions either match facts or set up new subgoals. Subgoals may involve tests. Finds all “proofs” and weighs them. Explains decisions and combines evidence. Worked better than average physician. Never used in practice. Methodology reused in later systems.

Examples and non-examples Soybean diagnosis – Expert codified knowledge in form of rules – System almost as good – With hundreds of rules, system seems reasonable. Autoclave placement – Expert but no codification Chess – Experts but no codification in terms of rules

Forward Chaining Interpreter Repeat – Apply all the rules to the current facts. – Each rule firing may add new facts Until no new facts are added. Comprehensible Trace of rule applications that lead to conclusion is explanation. Answers why.

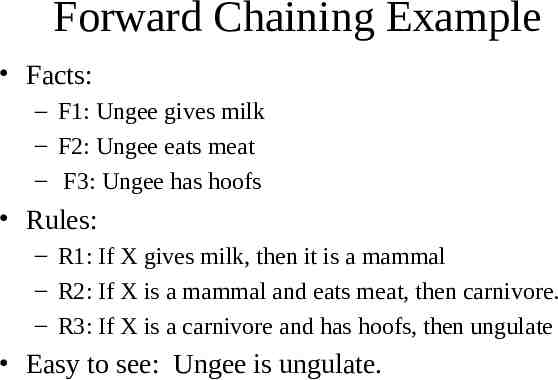

Forward Chaining Example Facts: – F1: Ungee gives milk – F2: Ungee eats meat – F3: Ungee has hoofs Rules: – R1: If X gives milk, then it is a mammal – R2: If X is a mammal and eats meat, then carnivore. – R3: If X is a carnivore and has hoofs, then ungulate Easy to see: Ungee is ungulate.
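
The repeat-until-no-new-facts loop can be sketched in a few lines of Python and run on the Ungee example. This is only an illustrative sketch: the `(predicate, subject)` fact encoding, the rule tuples, and the name `forward_chain` are assumptions made here, not part of the lecture. For brevity, a fact derived early in a pass is visible to later rules in the same pass.

```python
# Facts are (predicate, subject) pairs; this encoding is an assumption.
facts = {("gives milk", "Ungee"), ("eats meat", "Ungee"), ("has hoofs", "Ungee")}

# Each rule: (name, premise predicates, conclusion predicate).
rules = [
    ("R1", ["gives milk"], "mammal"),
    ("R2", ["mammal", "eats meat"], "carnivore"),
    ("R3", ["carnivore", "has hoofs"], "ungulate"),
]


def forward_chain(facts, rules):
    """Fire rules until no new facts are added; return (facts, trace)."""
    known = set(facts)
    trace = []  # the rule applications, in firing order, serve as the explanation
    changed = True
    while changed:
        changed = False
        subjects = sorted({subject for _, subject in known})
        for name, premises, conclusion in rules:
            for subject in subjects:
                new_fact = (conclusion, subject)
                if new_fact in known:
                    continue
                if all((p, subject) in known for p in premises):
                    known.add(new_fact)
                    trace.append(f"{name}: {subject} is {conclusion}")
                    changed = True
    return known, trace


derived, trace = forward_chain(facts, rules)
for step in trace:
    print(step)
```

The printed trace lists R1, R2, and R3 in the order they fired, which is the "why" explanation for concluding that Ungee is an ungulate.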

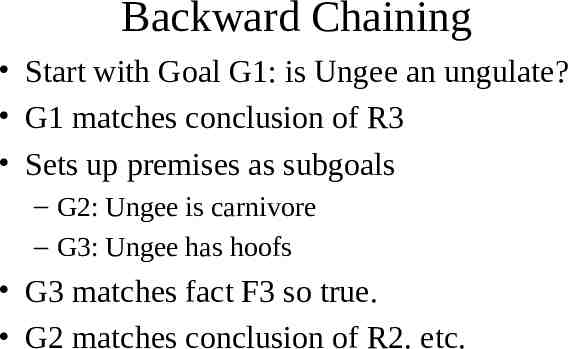

Backward Chaining Start with Goal G1: is Ungee an ungulate? G1 matches conclusion of R3 Sets up premises as subgoals – G2: Ungee is carnivore – G3: Ungee has hoofs G3 matches fact F3 so true. G2 matches conclusion of R2. etc.
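
The goal-driven search can be sketched as a small recursive function. This is a minimal sketch under stated assumptions: facts are plain strings about a single animal, rules are indexed by their conclusion, the rule set contains no cycles, and the name `prove` is hypothetical.

```python
# Known facts about Ungee (F1-F3), as plain strings (an assumed encoding).
facts = {"gives milk", "eats meat", "has hoofs"}

# Rules indexed by conclusion: conclusion -> list of (rule name, premises).
rules = {
    "mammal": [("R1", ["gives milk"])],
    "carnivore": [("R2", ["mammal", "eats meat"])],
    "ungulate": [("R3", ["carnivore", "has hoofs"])],
}


def prove(goal, facts, rules, trace, depth=0):
    """Return True if goal is a fact or follows from a rule whose premises can be proved."""
    indent = "  " * depth
    if goal in facts:
        trace.append(f"{indent}{goal}: matches a fact")
        return True
    for name, premises in rules.get(goal, []):
        trace.append(f"{indent}{goal}: try {name}, subgoals {premises}")
        # Each premise becomes a subgoal; all of them must succeed.
        if all(prove(p, facts, rules, trace, depth + 1) for p in premises):
            return True
    trace.append(f"{indent}{goal}: not provable")
    return False


trace = []
print(prove("ungulate", facts, rules, trace))
print("\n".join(trace))
```

Only the facts that appear as premises on the path to the goal are ever consulted, which is why backward chaining can tell the system which tests to ask for.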

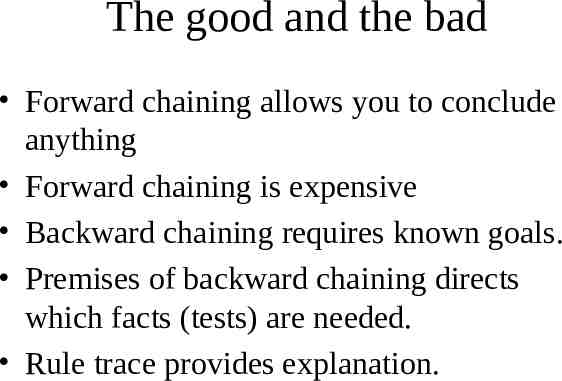

The good and the bad Forward chaining allows you to conclude anything. Forward chaining is expensive. Backward chaining requires known goals. Premises of backward chaining direct which facts (tests) are needed. Rule trace provides explanation.

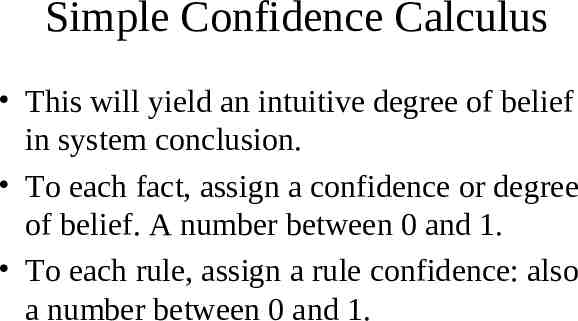

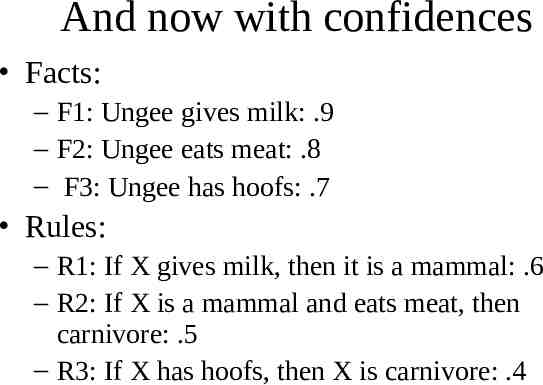

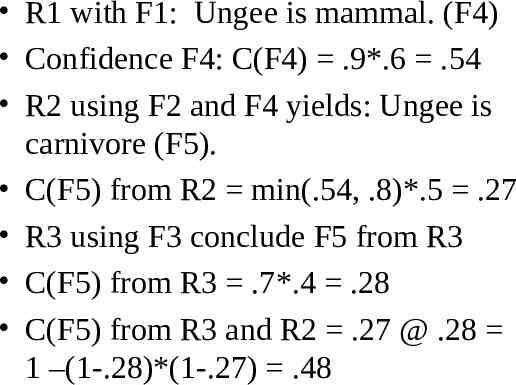

Simple Confidence Calculus This will yield an intuitive degree of belief in system conclusion. To each fact, assign a confidence or degree of belief. A number between 0 and 1. To each rule, assign a rule confidence: also a number between 0 and 1.

Confidence of premise of a rule – Minimum confidence of each condition – Intuition: strength of argument is weakest link Confidence in conclusion of a rule – (confidence in rule premise) * (confidence in rule) Confidence in conclusion from several rules: r1, r2, ..., rm, which give the conclusion confidences c1, c2, ..., cm – c1 @ c2 @ ... @ cm – Where x @ y is 1 - (1-x)*(1-y).
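
Written as formulas, the calculus looks as follows. The notation is an assumption made for illustration: $C(\cdot)$ is a confidence, $p_1, \dots, p_k$ are the conditions of rule $r$, $\mathrm{CF}(r)$ is the confidence attached to the rule itself, and $c_j$ is the confidence that rule $r_j$ gives the shared conclusion.

```latex
\begin{aligned}
C(\text{premise of } r) &= \min_{1 \le i \le k} C(p_i) \\
C(\text{conclusion via } r) &= C(\text{premise of } r) \cdot \mathrm{CF}(r) \\
x \mathbin{@} y &= 1 - (1 - x)(1 - y) \\
c_1 \mathbin{@} c_2 \mathbin{@} \cdots \mathbin{@} c_m &= 1 - \prod_{j=1}^{m} (1 - c_j)
\end{aligned}
```

Because $@$ is commutative and associative, the order in which the rules are combined does not change the result, and each additional supporting rule can only raise the combined confidence.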

And now with confidences Facts: – F1: Ungee gives milk: .9 – F2: Ungee eats meat: .8 – F3: Ungee has hoofs: .7 Rules: – R1: If X gives milk, then it is a mammal: .6 – R2: If X is a mammal and eats meat, then carnivore: .5 – R3: If X has hoofs, then X is carnivore: .4

R1 with F1: Ungee is mammal (F4). Confidence F4: C(F4) = .9 * .6 = .54. R2 using F2 and F4 yields: Ungee is carnivore (F5). C(F5) from R2 = min(.54, .8) * .5 = .27. R3 using F3 also concludes F5. C(F5) from R3 = .7 * .4 = .28. C(F5) from R3 and R2 = .27 @ .28 = 1 - (1 - .28) * (1 - .27) = 1 - .5256 ≈ .47.
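
The same arithmetic can be checked mechanically. The sketch below is an illustration, not the lecture's implementation: the names `combine` and `evaluate` and the data layout are assumptions, and it makes a single pass over the rules, which works only because each rule here is listed after the rules that derive its premises.

```python
def combine(x, y):
    """The @ operator: merge two independent sources of support."""
    return 1 - (1 - x) * (1 - y)


# Fact confidences F1-F3 (all facts are about Ungee).
fact_conf = {"gives milk": 0.9, "eats meat": 0.8, "has hoofs": 0.7}

# Each rule: (name, premises, conclusion, rule confidence).
rules = [
    ("R1", ["gives milk"], "mammal", 0.6),
    ("R2", ["mammal", "eats meat"], "carnivore", 0.5),
    ("R3", ["has hoofs"], "carnivore", 0.4),
]


def evaluate(fact_conf, rules):
    """Apply each rule once, in order, and return all confidences."""
    conf = dict(fact_conf)
    for name, premises, conclusion, rule_conf in rules:
        if not all(p in conf for p in premises):
            continue  # some premise has no support yet
        premise_conf = min(conf[p] for p in premises)  # weakest link
        support = premise_conf * rule_conf
        # Starting from 0.0 is harmless because combine(0, s) == s.
        conf[conclusion] = combine(conf.get(conclusion, 0.0), support)
    return conf


result = evaluate(fact_conf, rules)
print(f"mammal: {result['mammal']:.4f}")
print(f"carnivore: {result['carnivore']:.4f}")
```

Running it gives .54 for "mammal" and about .4744 for "carnivore", higher than either rule supports on its own (.27 and .28).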

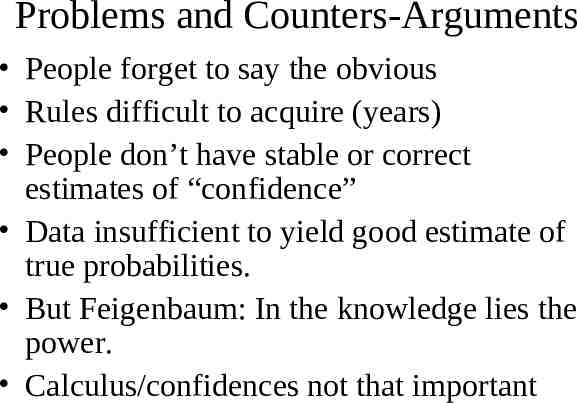

Problems and Counterarguments People forget to say the obvious Rules difficult to acquire (years) People don’t have stable or correct estimates of “confidence” Data insufficient to yield good estimate of true probabilities. But Feigenbaum: In the knowledge lies the power. Calculus/confidences not that important

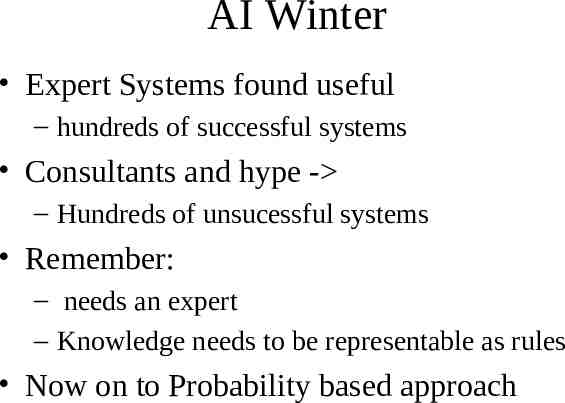

AI Winter Expert Systems found useful – hundreds of successful systems Consultants and hype – hundreds of unsuccessful systems Remember: – needs an expert – Knowledge needs to be representable as rules Now on to Probability based approach