Search Agents Local Search Chapter 4.1 (extremely briefly)

17 Slides331.50 KB

Search Agents Local Search Chapter 4.1 (extremely briefly)

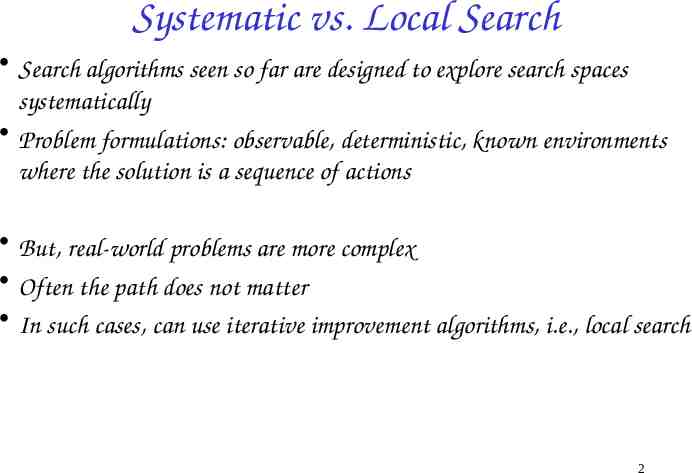

Systematic vs. Local Search Search algorithms seen so far are designed to explore search spaces systematically Problem formulations: observable, deterministic, known environments where the solution is a sequence of actions But, real-world problems are more complex Often the path does not matter In such cases, can use iterative improvement algorithms, i.e., local search 2

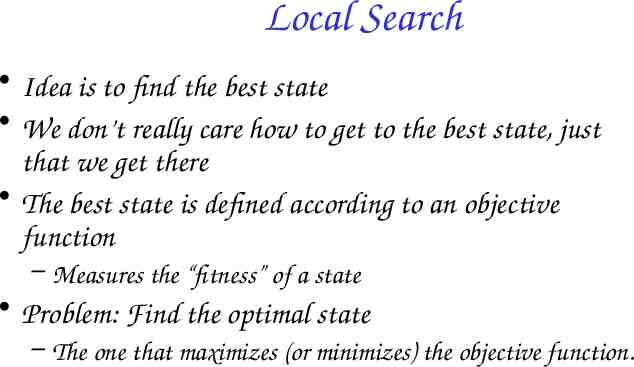

Local Search Idea is to find the best state We don’t really care how to get to the best state, just that we get there The best state is defined according to an objective function – Measures the “fitness” of a state Problem: Find the optimal state – The one that maximizes (or minimizes) the objective function.

Local search Idea: keep a single “current" state, and try to improve it Move only to neighbors of that node Advantages: 1. No need to maintain a search tree 2. Use very little memory 3. Can often find good enough solutions in continuous or large state spaces 4

Example Algorithms Hill climbing Simulated annealing (physics inspired) Local beam search Genetic algorithms (biology inspired) 5

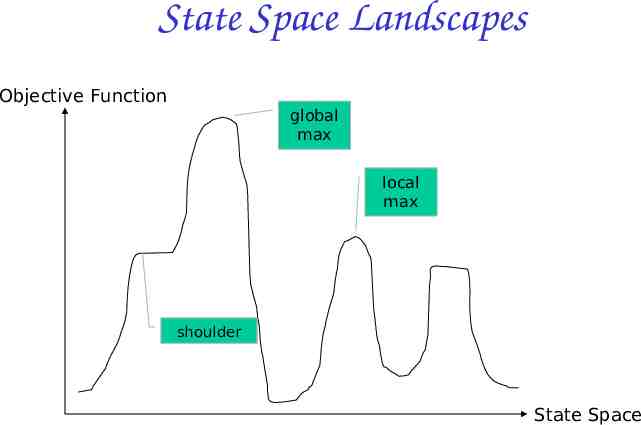

State Space Landscapes Objective Function global max local max shoulder State Space

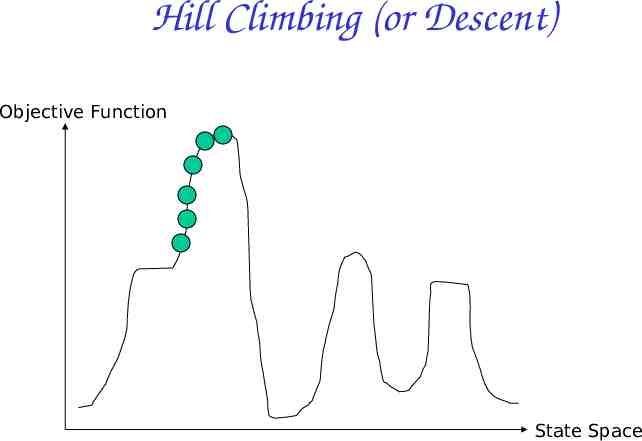

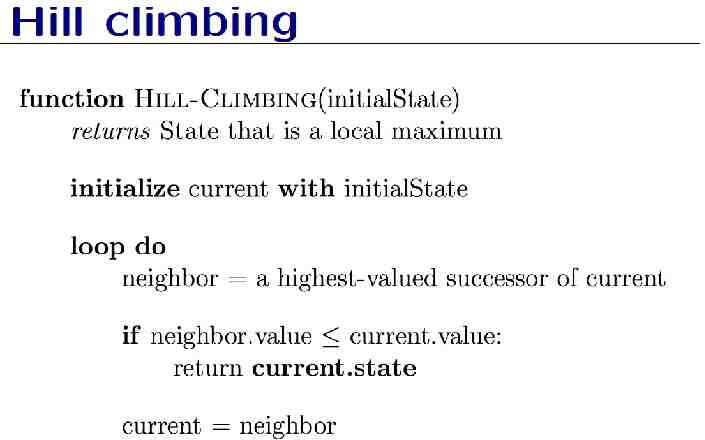

Hill Climbing Also called greedy local search. Looks only to immediate good neighbors and not beyond. Search moves uphill: moves in the direction of increasing elevation/value to find the top of the mountain. Terminates when it reaches a peak. Can terminate with a local maximum, global maximum or can get stuck and no progress is possible. 7

Problem Formulation Complete-state formulation – Start with an approximate solution and perturb n-queens problem – Place n queens on a board so that no queen is attacking another queen.

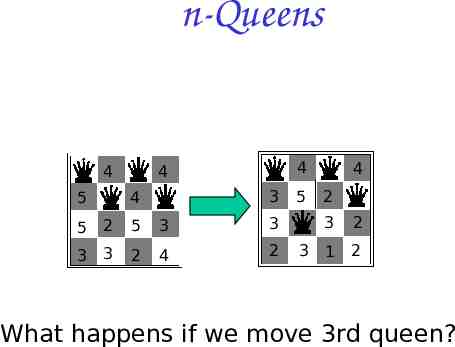

Problem Formulation Initial State: n queens placed randomly on the board, one per column. Successor function: States that obtained by moving one queen to a new location in its column. Heuristic/objective function: The number of pairs of attacking queens.

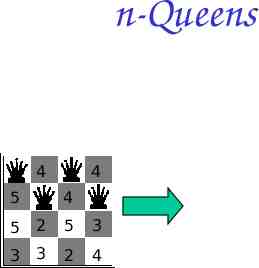

n-Queens 4 4 5 4 5 2 5 3 3 3 2 4

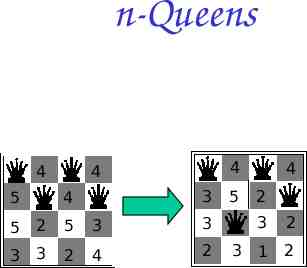

n-Queens 4 4 4 3 5 4 5 2 5 3 3 3 3 2 4 2 5 3 4 2 3 2 1 2

Hill Climbing (or Descent) Objective Function State Space

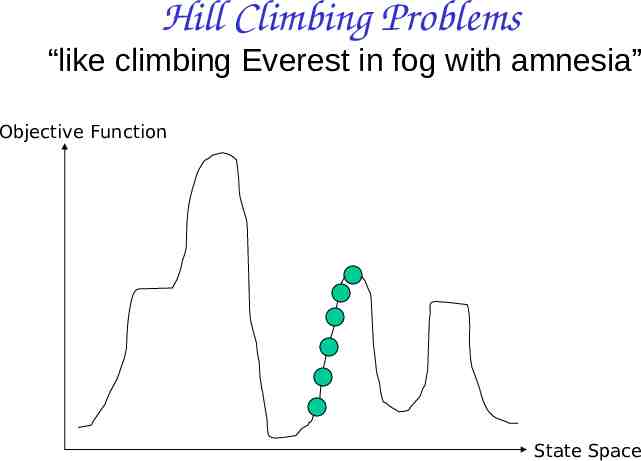

Hill Climbing Problems “like climbing Everest in fog with amnesia” Objective Function State Space

n-Queens 4 4 4 3 5 4 5 2 5 3 3 3 3 2 4 2 5 3 4 2 3 2 1 2 What happens if we move 3rd queen?

15

Possible Improvements Stochastic hill climbing – Choose at random from uphill moves – Probability of move could be influenced by steepness First-choice hill climbing – Generate successors at random until one is better than current. Random-restart – Execute hill climbing several times, choose best result. – If p is probability of a search succeeding, then expected number of restarts is 1/p.

Summary Search algorithms beyond the “classical” case of finding the shortest path to a goal in an observable, deterministic, discrete environment – Hill climbing is one example of a local search method – See other parts of chapter 4 for continuous spaces, nondeterministic actions, partial observations, unknown environments 17