Compliant Cloud+Campus Hybrid HPC Infrastructure Brenden Judson


Compliant Cloud+Campus Hybrid HPC Infrastructure
- Brenden Judson, Dept. of Computer Science, University of Notre Dame
- Matt Vander Werf, Center for Research Computing, University of Notre Dame
- Paul Brenner, Center for Research Computing, University of Notre Dame

What is CUI?
- CUI stands for Controlled Unclassified Information
- NIST SP 800-171 Rev. 1 came out in 2016
- It outlines the regulatory standards for CUI data held in non-federal IT infrastructure
- 110 requirement controls in 14 different categories
- CUI compliance required for many DOD or DARPA federal grants and by other federal
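
As a rough illustration of the "110 controls in 14 categories" figure, the sketch below tabulates the requirement families of NIST SP 800-171 Rev. 1 with their per-family counts. The helper `outstanding_requirements` and its input format are hypothetical, invented here only to show how such a table might be used to track progress; it is not a tool from the talk.

```python
# Requirement counts per family in NIST SP 800-171 Rev. 1.
CONTROL_FAMILIES = {
    "Access Control": 22,
    "Awareness and Training": 3,
    "Audit and Accountability": 9,
    "Configuration Management": 9,
    "Identification and Authentication": 11,
    "Incident Response": 3,
    "Maintenance": 6,
    "Media Protection": 9,
    "Personnel Security": 2,
    "Physical Protection": 6,
    "Risk Assessment": 3,
    "Security Assessment": 4,
    "System and Communications Protection": 16,
    "System and Information Integrity": 7,
}


def outstanding_requirements(met):
    """Return {family: requirements still open} for a hypothetical progress dict.

    `met` maps a family name to the number of requirements already satisfied;
    families missing from `met` are treated as having none satisfied.
    """
    remaining = {}
    for family, total in CONTROL_FAMILIES.items():
        done = met.get(family, 0)
        if not 0 <= done <= total:
            raise ValueError(f"{family}: {done} is outside 0..{total}")
        remaining[family] = total - done
    return remaining


if __name__ == "__main__":
    print(len(CONTROL_FAMILIES), "families,", sum(CONTROL_FAMILIES.values()), "requirements")
```

Keeping the counts in one table makes it easy to check that a compliance tracker covers every family before a grant audit.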

CUI at ND
- Multiple recent federal grants requiring CUI compliance
- Mostly DARPA and DOD grants
- One group with these grants is the Notre Dame Turbomachinery Laboratory (NDTL)
- Requires HPC environment (CRC responsibility)
- Cloud or on-premises?

Cloud Benefits
- Fewer regulatory requirements to manage (taken care of by the cloud vendor)
- More elastic and scalable than on-prem resources
- Newer, more powerful individual servers than in the average on-prem HPC environment
- Doesn’t require the large capital investments typical of large on-prem HPC purchases

Cloud Downsides
- The three major cloud vendors (AWS, Azure, GCP) all claim to support HPC
- But no cost-effective support for true, top-tier HPC workloads
- HPC typically requires much higher utilization rates than enterprise or general IT systems
- HPC is significantly more expensive in the cloud than other IT segments
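
The link between utilization and cost can be made concrete with a small break-even calculation. This is a minimal sketch under stated assumptions: the cost model (capital cost spread evenly over the hardware lifetime plus a flat yearly operating cost) and every parameter name are hypothetical, and no figures from the talk are used.

```python
HOURS_PER_YEAR = 365 * 24  # 8760, ignoring leap years


def yearly_on_prem_cost(capex_per_node, lifetime_years, opex_per_node_year):
    """Yearly cost of one on-prem node under the simple model assumed here."""
    if lifetime_years <= 0:
        raise ValueError("lifetime_years must be positive")
    return capex_per_node / lifetime_years + opex_per_node_year


def on_prem_cost_per_used_hour(capex_per_node, lifetime_years,
                               opex_per_node_year, utilization):
    """Cost of each node-hour actually used, at a utilization in (0, 1]."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    yearly = yearly_on_prem_cost(capex_per_node, lifetime_years, opex_per_node_year)
    return yearly / (HOURS_PER_YEAR * utilization)


def break_even_utilization(capex_per_node, lifetime_years,
                           opex_per_node_year, cloud_price_per_node_hour):
    """Utilization above which on-prem is cheaper than a pay-per-hour cloud node.

    A result greater than 1 means the cloud is cheaper at any utilization
    under this model.
    """
    if cloud_price_per_node_hour <= 0:
        raise ValueError("cloud price must be positive")
    yearly = yearly_on_prem_cost(capex_per_node, lifetime_years, opex_per_node_year)
    return yearly / (HOURS_PER_YEAR * cloud_price_per_node_hour)
```

Under this model the on-prem cost per used hour falls as utilization rises, while the cloud price per hour stays flat, which is why consistently busy HPC systems tend to favor on-prem hardware.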

Cloud Downsides
- No cost-effective high-speed interconnect offerings, like InfiniBand
- Azure is the only major vendor that offers InfiniBand, but it isn’t cost-effective
- Low-latency, fast network performance is a major concern for true, top-tier HPC in the cloud
- No readily available high-performance file storage like Lustre or Panasas

Hybrid Approach
- Decision was made to implement a hybrid CUI environment for the NDTL group
- Takes advantage of the benefits of the cloud while performing true HPC in an on-campus data center
- Notre Dame already uses commercial AWS; AWS GovCloud is used for the cloud CUI environment
- Collaboration with ND Office of Information

System Architecture Diagram
http://www.crc.nd.edu/ mvanderw/ crc cui.pdf

Client Facing
- Users/administrators use Ericom Connect software to access the ND CUI GovCloud environment
- Uses a separate CUI environment-specific Active Directory (AD) domain for authentication
- Web, desktop, or mobile application interface
- Duo is used for 2FA
- Provides an interface for users to log into various workstations in different projects in the GovCloud CUI environment
- Users get a desktop for a Windows or Linux

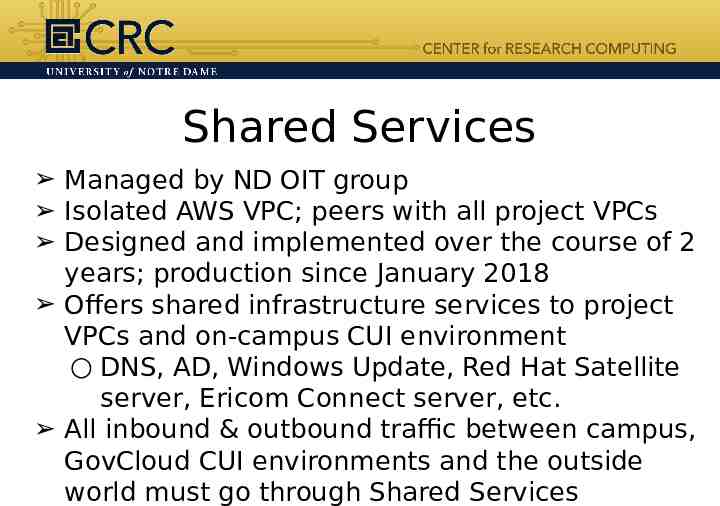

Shared Services
- Managed by ND OIT group
- Isolated AWS VPC; peers with all project VPCs
- Designed and implemented over the course of 2 years; production since January 2018
- Offers shared infrastructure services to project VPCs and the on-campus CUI environment
- DNS, AD, Windows Update, Red Hat Satellite server, Ericom Connect server, etc.
- All inbound & outbound traffic between campus, GovCloud CUI environments and the outside world must go through Shared Services

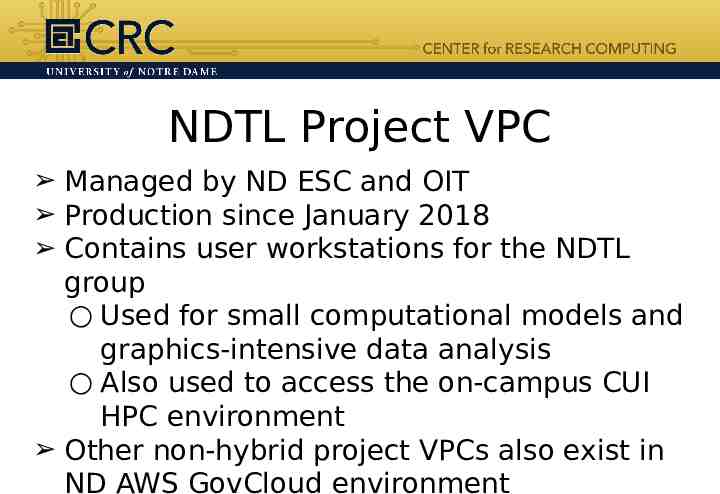

NDTL Project VPC
- Managed by ND ESC and OIT
- Production since January 2018
- Contains user workstations for the NDTL group
- Used for small computational models and graphics-intensive data analysis
- Also used to access the on-campus CUI HPC environment
- Other non-hybrid project VPCs also exist in the ND AWS GovCloud environment

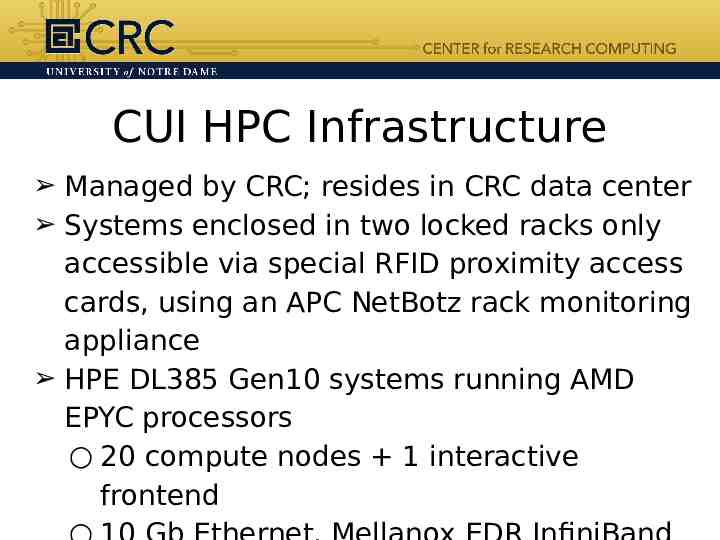

CUI HPC Infrastructure
- Managed by CRC; resides in CRC data center
- Systems enclosed in two locked racks only accessible via special RFID proximity access cards, using an APC NetBotz rack monitoring appliance
- HPE DL385 Gen10 systems running AMD EPYC processors
- 20 compute nodes
- 1 interactive frontend

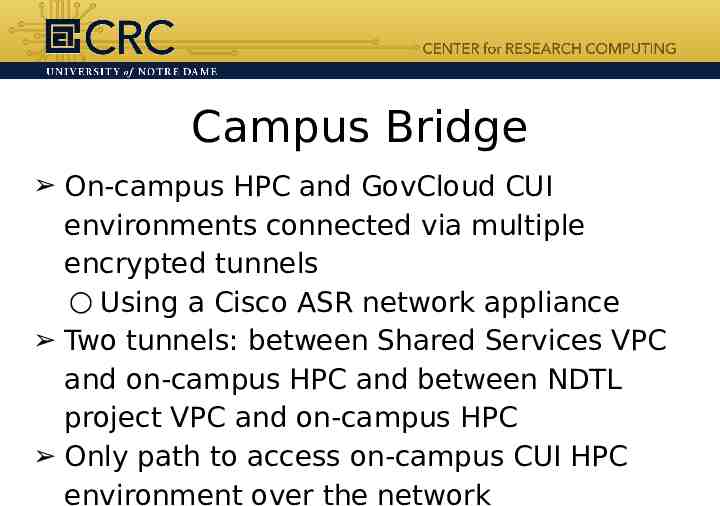

Campus Bridge
- On-campus HPC and GovCloud CUI environments connected via multiple encrypted tunnels
- Using a Cisco ASR network appliance
- Two tunnels: one between the Shared Services VPC and on-campus HPC, and one between the NDTL project VPC and on-campus HPC
- Only path to access the on-campus CUI HPC environment over the network
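
The connectivity described across these slides can be sketched as a small graph to check which zones can reach which. This is an illustrative model only: the node labels are hypothetical names chosen here, the links are read off the slide text (one peering, two tunnels, and the outside world attached only to Shared Services), and real routing and firewall rules are not represented.

```python
from collections import deque

# Undirected links between zones, as read from the slides (labels are hypothetical).
LINKS = [
    ("outside_world", "shared_services_vpc"),     # all external traffic enters here
    ("shared_services_vpc", "ndtl_project_vpc"),  # VPC peering
    ("shared_services_vpc", "campus_cui_hpc"),    # encrypted tunnel
    ("ndtl_project_vpc", "campus_cui_hpc"),       # encrypted tunnel
]


def reachable(links, start, blocked=frozenset()):
    """Breadth-first search: zones reachable from `start` without entering `blocked`."""
    if start in blocked:
        return set()
    adjacency = {}
    for a, b in links:
        adjacency.setdefault(a, set()).add(b)
        adjacency.setdefault(b, set()).add(a)
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in adjacency.get(node, ()):
            if neighbor not in seen and neighbor not in blocked:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen


if __name__ == "__main__":
    # With Shared Services removed, the outside world should reach nothing else.
    print(reachable(LINKS, "outside_world", blocked={"shared_services_vpc"}))
```

In this model, blocking the Shared Services node isolates the outside world from every CUI zone, while the NDTL project VPC still reaches the on-campus HPC over its own tunnel.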