Building up to Macroprogramming: An Intermediate Language for

43 Slides809.00 KB

Building up to Macroprogramming: An Intermediate Language for Sensor Networks Ryan Newton, Arvind (MIT), and Matt Welsh (Harvard) @ IPSN 2005 (IEEE Int’l Conf on Information Processing in Sensor Networks) Presented by Ryo Sugihara @ largescale seminar (2005/08/15)

Programming Sensor Networks Difficult – Highly distributed system – Stringent resource constraint Energy-efficiency is mandatory in every application nesC: de-facto standard language – Low level Everything is mixed: Computation, Concurrency, Communication, Efficiency – Far too difficult for end-users to make programs Not experts in programming Necessity for software support

Previous Approach Query engines: Sensornet as a database – TinyDB, COUGAR, – Simple but limited use High-level programming abstraction – Abstract Regions, Hood, EnviroTrack, Neighborhood-based programming primitives and operations on them – For specific types of application: target tracking Programming toolkit – SNACK Reusable service library and compiler – Targeting general mechanism: not very easy to use Virtual machine – Maté VM architecture Intermediate code (cf. Java bytecode) – For efficient reprogramming – Similar approach, different objectives (discussed later)

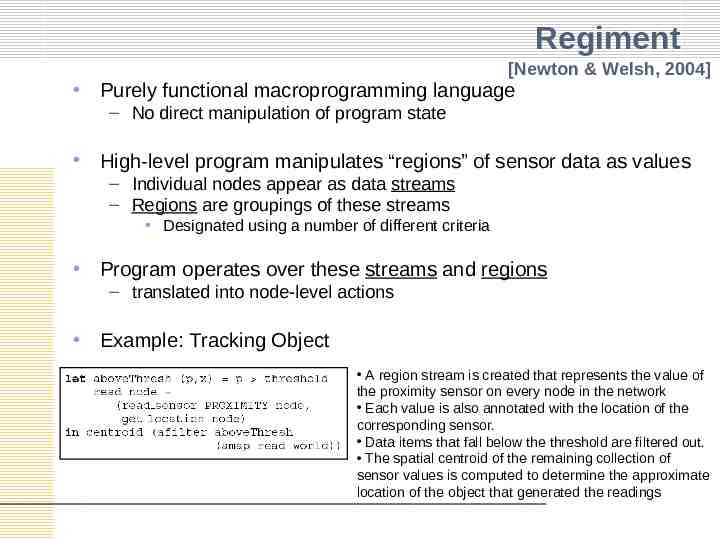

“Macroprogramming” Program sensornet as a whole – Easier than programming at the level of individual nodes e.g) Matrix multiplication Matrix notation vs. Parallel program in MPI – Compile into node-level programs “Regiment” [Newton & Welsh, 2004] – Purely functional macroprogramming language for sensornet – Example: tracking moving vehicle – A region stream is created that represents the value of the proximity sensor on every node in the network Each value is also annotated with the location of the corresponding sensor. Data items that fall below the threshold are filtered out. The spatial centroid of the remaining collection of sensor values is computed to determine the approximate location of the object that generated the readings

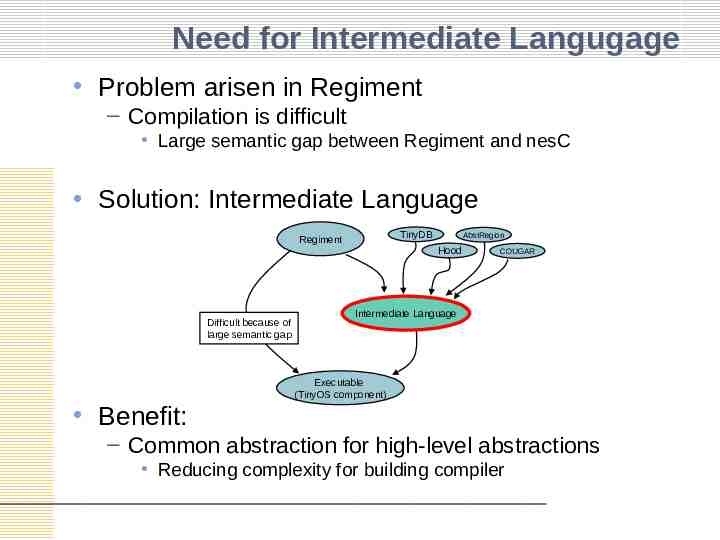

Need for Intermediate Langugage Problem arisen in Regiment – Compilation is difficult Large semantic gap between Regiment and nesC Solution: Intermediate Language TinyDB Regiment AbstRegion Hood Difficult because of large semantic gap Benefit: COUGAR Intermediate Language Executable (TinyOS component) – Common abstraction for high-level abstractions Reducing complexity for building compiler

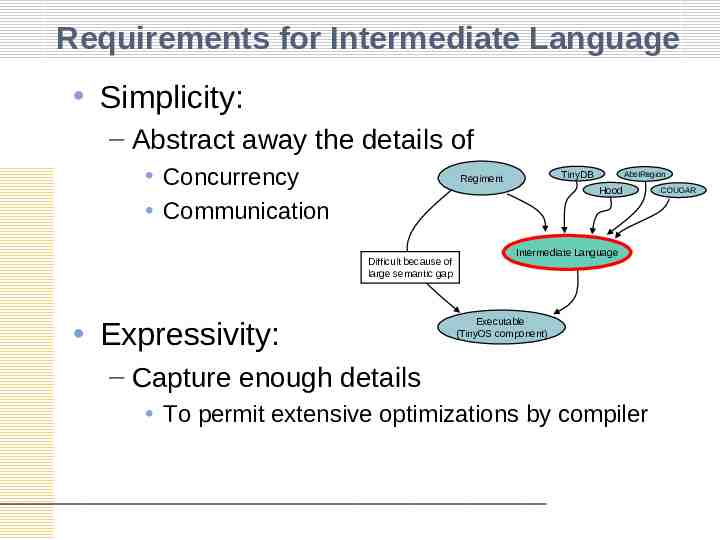

Requirements for Intermediate Language Simplicity: – Abstract away the details of Concurrency Communication TinyDB Regiment AbstRegion Hood Difficult because of large semantic gap Expressivity: Intermediate Language Executable (TinyOS component) – Capture enough details To permit extensive optimizations by compiler COUGAR

Goals Define an intermediate language that – Provides simple and versatile abstractions for Communication Data dissemination Remote execution – Constitutes a framework for network coordination That can be used to implement sophisticated algorithms: – Leader election – Group maintenance – In-network aggregation Note: Use by human is not a goal – But it turns out to be usable as a result

Outline Background & Motivation Macroprogramming – Need for intermediate language – Requirements & goals Distributed Token Machines (DTM) Model Token Machine Language (TML) Examples Evaluation / Conclusion / Future Works Discussion

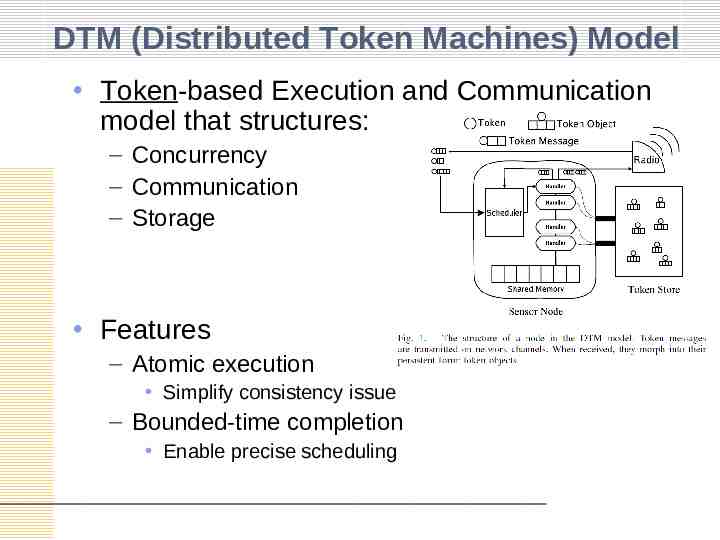

DTM (Distributed Token Machines) Model Token-based Execution and Communication model that structures: – Concurrency – Communication – Storage Features – Atomic execution Simplify consistency issue – Bounded-time completion Enable precise scheduling

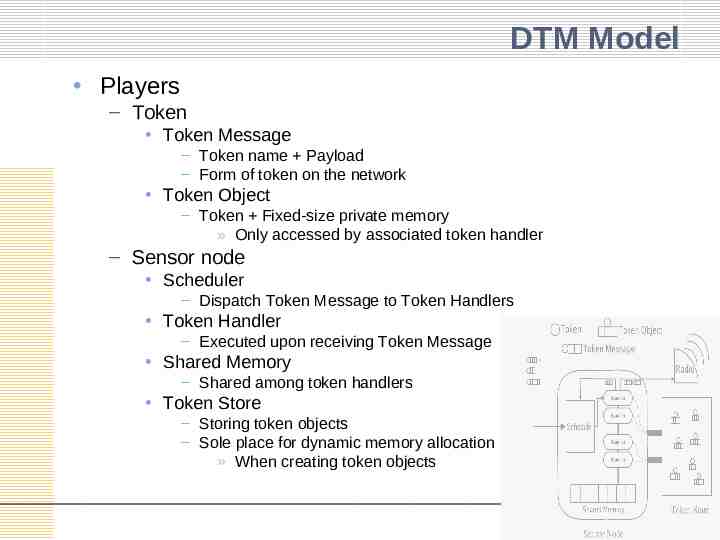

DTM Model Players – Token Token Message – Token name Payload – Form of token on the network Token Object – Token Fixed-size private memory » Only accessed by associated token handler – Sensor node Scheduler – Dispatch Token Message to Token Handlers Token Handler – Executed upon receiving Token Message Shared Memory – Shared among token handlers Token Store – Storing token objects – Sole place for dynamic memory allocation » When creating token objects

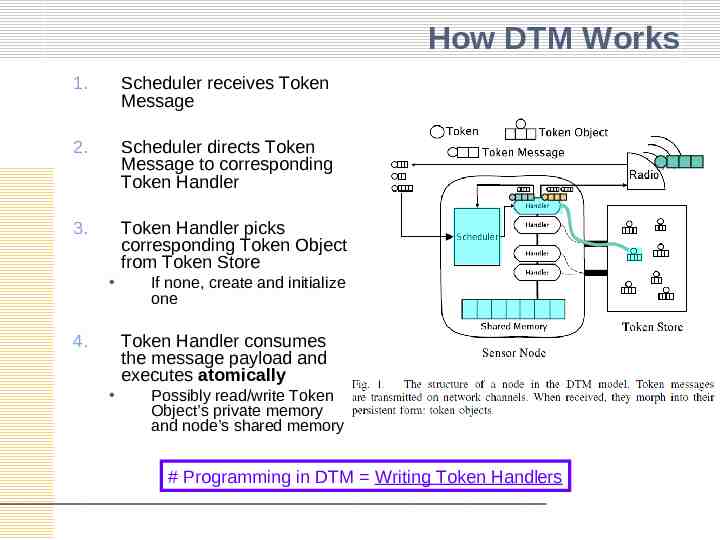

How DTM Works 1. Scheduler receives Token Message 2. Scheduler directs Token Message to corresponding Token Handler 3. Token Handler picks corresponding Token Object from Token Store 4. If none, create and initialize one Token Handler consumes the message payload and executes atomically Possibly read/write Token Object’s private memory and node’s shared memory # Programming in DTM Writing Token Handlers

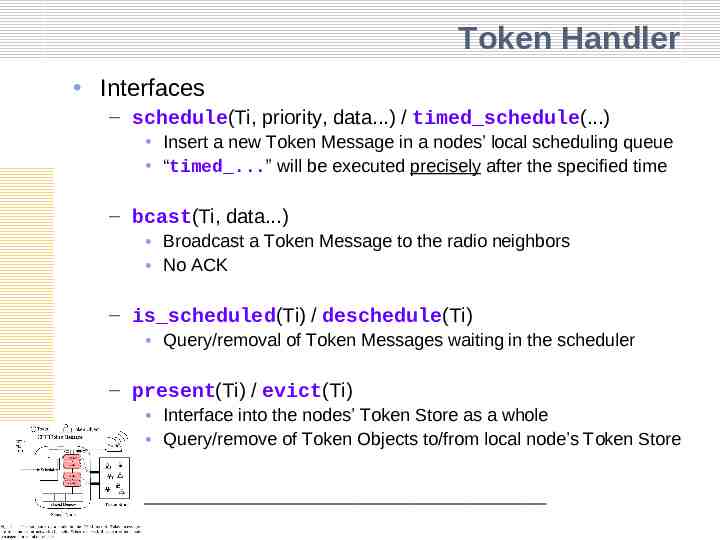

Token Handler Interfaces – schedule(Ti, priority, data.) / timed schedule(.) Insert a new Token Message in a nodes’ local scheduling queue “timed .” will be executed precisely after the specified time – bcast(Ti, data.) Broadcast a Token Message to the radio neighbors No ACK – is scheduled(Ti) / deschedule(Ti) Query/removal of Token Messages waiting in the scheduler – present(Ti) / evict(Ti) Interface into the nodes’ Token Store as a whole Query/remove of Token Objects to/from local node’s Token Store

Outline Background & Motivation Macroprogramming – Need for intermediate language – Requirements & goals Distributed Token Machines (DTM) Model Token Machine Language (TML) Examples Evaluation / Conclusion / Future Works Discussion

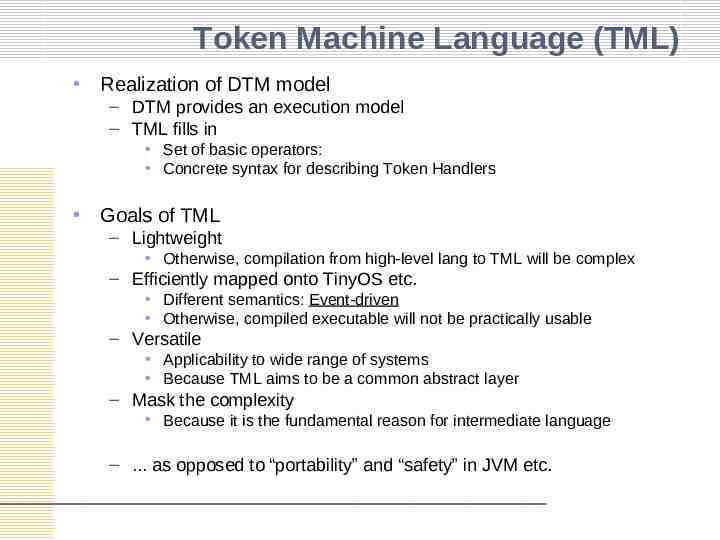

Token Machine Language (TML) Realization of DTM model – DTM provides an execution model – TML fills in Set of basic operators: Concrete syntax for describing Token Handlers Goals of TML – Lightweight Otherwise, compilation from high-level lang to TML will be complex – Efficiently mapped onto TinyOS etc. Different semantics: Event-driven Otherwise, compiled executable will not be practically usable – Versatile Applicability to wide range of systems Because TML aims to be a common abstract layer – Mask the complexity Because it is the fundamental reason for intermediate language – . as opposed to “portability” and “safety” in JVM etc.

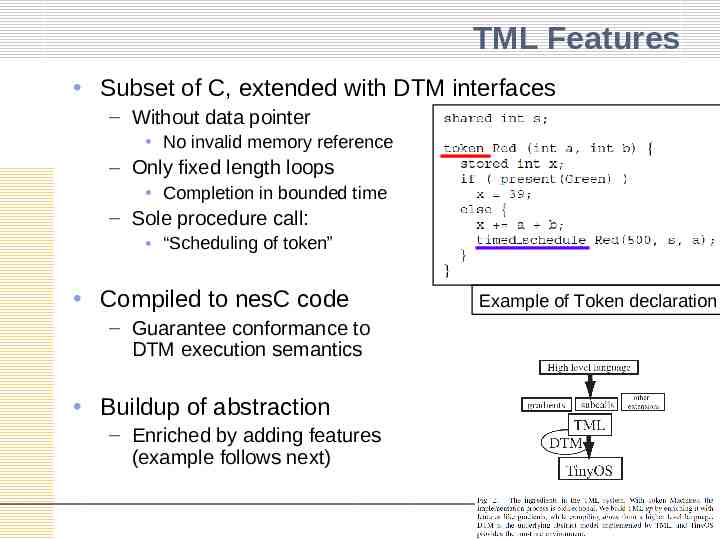

TML Features Subset of C, extended with DTM interfaces – Without data pointer No invalid memory reference – Only fixed length loops Completion in bounded time – Sole procedure call: “Scheduling of token” Compiled to nesC code – Guarantee conformance to DTM execution semantics Buildup of abstraction – Enriched by adding features (example follows next) Example of Token declaration

Building up Features: Subroutine Calls Subroutine calls – NOT preferred by system For small and fast atomic actions – Execution time could be unpredictable Introduce call-stack: another dynamic structure – Preferred by users For simplicity Solution: CPS transformation – CPS: Continuation Passing Style

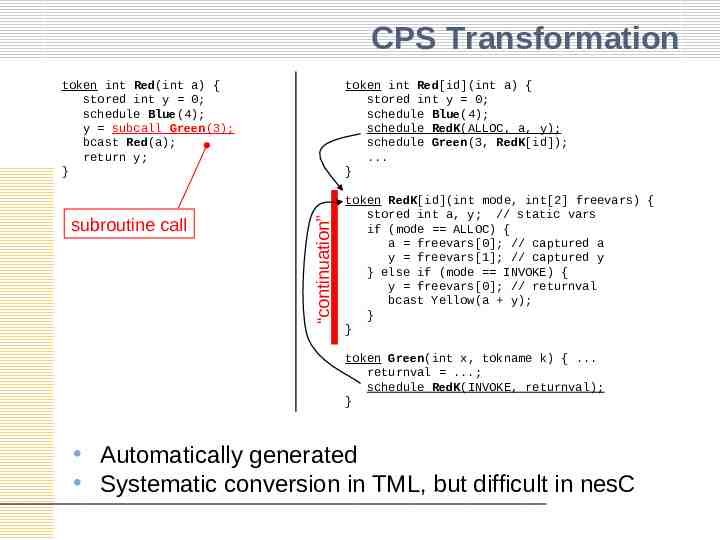

CPS Transformation subroutine call token int Red[id](int a) { stored int y 0; schedule Blue(4); schedule RedK(ALLOC, a, y); schedule Green(3, RedK[id]); . } “continuation” token int Red(int a) { stored int y 0; schedule Blue(4); y subcall Green(3); bcast Red(a); return y; } token RedK[id](int mode, int[2] freevars) { stored int a, y; // static vars if (mode ALLOC) { a freevars[0]; // captured a y freevars[1]; // captured y } else if (mode INVOKE) { y freevars[0]; // returnval bcast Yellow(a y); } } token Green(int x, tokname k) { . returnval .; schedule RedK(INVOKE, returnval); } Automatically generated Systematic conversion in TML, but difficult in nesC

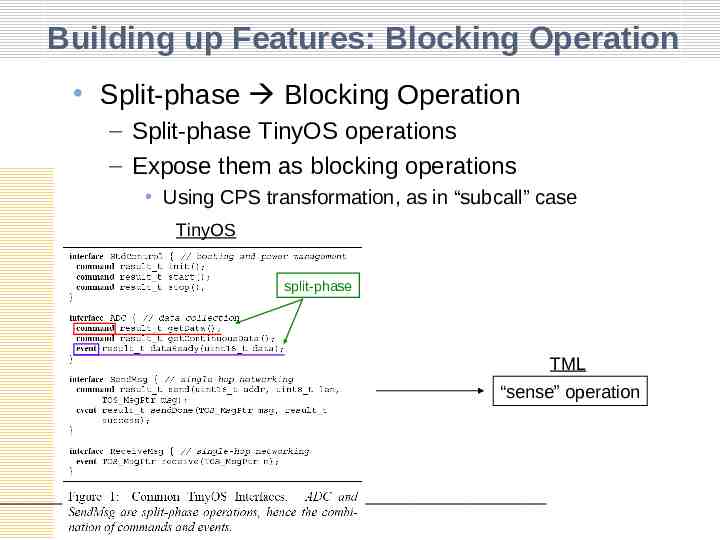

Building up Features: Blocking Operation Split-phase Blocking Operation – Split-phase TinyOS operations – Expose them as blocking operations Using CPS transformation, as in “subcall” case TinyOS split-phase TML “sense” operation

Outline Background & Motivation Macroprogramming – Need for intermediate language – Requirements & goals Distributed Token Machines (DTM) Model Token Machine Language (TML) Examples Evaluation / Conclusion / Future Works Discussion

Examples Building up Features: – Adding “gradient” interface TML examples – Timed data gathering – Distributed event detection – Leader election Intermediate Lang for Macroprogramming – Compiling Regiment

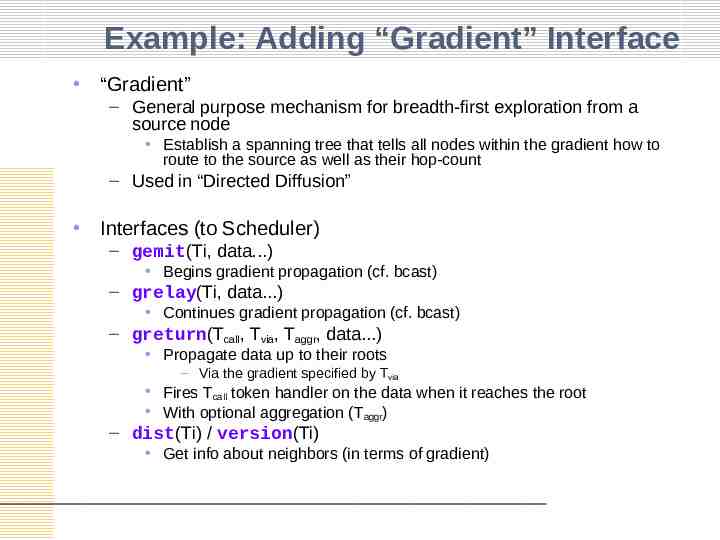

Example: Adding “Gradient” Interface “Gradient” – General purpose mechanism for breadth-first exploration from a source node Establish a spanning tree that tells all nodes within the gradient how to route to the source as well as their hop-count – Used in “Directed Diffusion” Interfaces (to Scheduler) – gemit(Ti, data.) Begins gradient propagation (cf. bcast) – grelay(Ti, data.) Continues gradient propagation (cf. bcast) – greturn(Tcall, Tvia, Taggr, data.) Propagate data up to their roots – Via the gradient specified by Tvia Fires Tcall token handler on the data when it reaches the root With optional aggregation (Taggr) – dist(Ti) / version(Ti) Get info about neighbors (in terms of gradient)

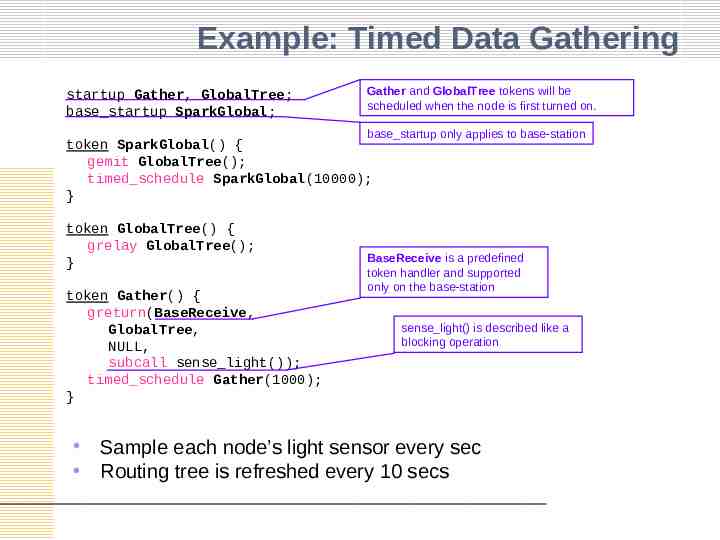

Example: Timed Data Gathering startup Gather, GlobalTree; base startup SparkGlobal; Gather and GlobalTree tokens will be scheduled when the node is first turned on. base startup only applies to base-station token SparkGlobal() { gemit GlobalTree(); timed schedule SparkGlobal(10000); } token GlobalTree() { grelay GlobalTree(); } token Gather() { greturn(BaseReceive, GlobalTree, NULL, subcall sense light()); timed schedule Gather(1000); } BaseReceive is a predefined token handler and supported only on the base-station sense light() is described like a blocking operation Sample each node’s light sensor every sec Routing tree is refreshed every 10 secs

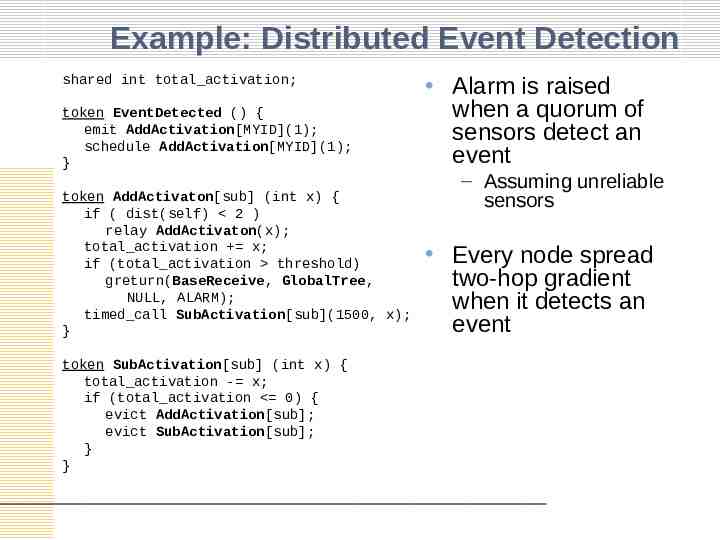

Example: Distributed Event Detection token EventDetected () { emit AddActivation[MYID](1); schedule AddActivation[MYID](1); } Alarm is raised when a quorum of sensors detect an event token AddActivaton[sub] (int x) { if ( dist(self) 2 ) relay AddActivaton(x); total activation x; if (total activation threshold) greturn(BaseReceive, GlobalTree, NULL, ALARM); timed call SubActivation[sub](1500, x); } Every node spread two-hop gradient when it detects an event shared int total activation; token SubActivation[sub] (int x) { total activation - x; if (total activation 0) { evict AddActivation[sub]; evict SubActivation[sub]; } } – Assuming unreliable sensors

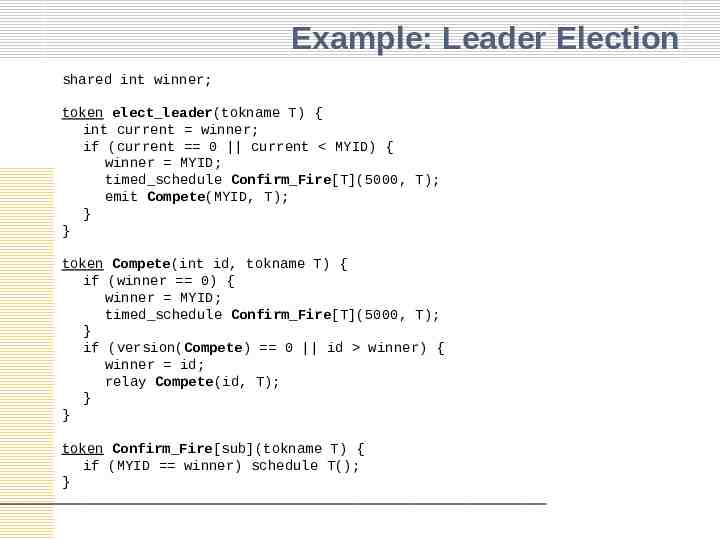

Example: Leader Election shared int winner; token elect leader(tokname T) { int current winner; if (current 0 current MYID) { winner MYID; timed schedule Confirm Fire[T](5000, T); emit Compete(MYID, T); } } token Compete(int id, tokname T) { if (winner 0) { winner MYID; timed schedule Confirm Fire[T](5000, T); } if (version(Compete) 0 id winner) { winner id; relay Compete(id, T); } } token Confirm Fire[sub](tokname T) { if (MYID winner) schedule T(); }

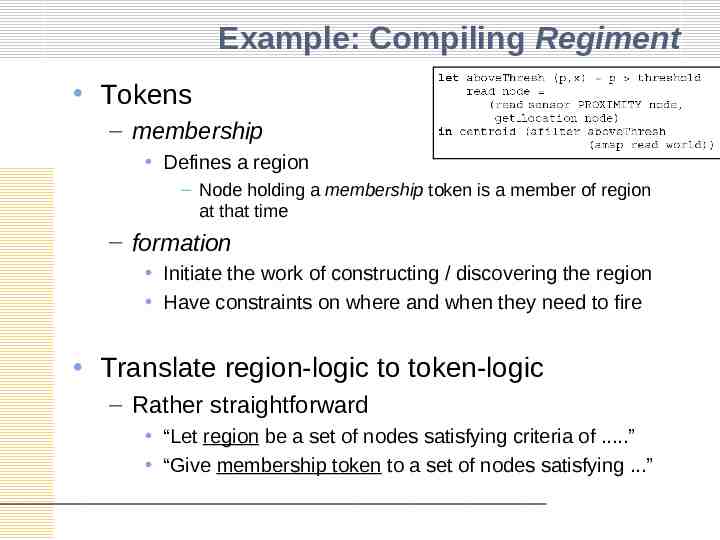

Example: Compiling Regiment Tokens – membership Defines a region – Node holding a membership token is a member of region at that time – formation Initiate the work of constructing / discovering the region Have constraints on where and when they need to fire Translate region-logic to token-logic – Rather straightforward “Let region be a set of nodes satisfying criteria of .” “Give membership token to a set of nodes satisfying .”

Outline Background & Motivation Macroprogramming – Need for intermediate language – Requirements & goals Distributed Token Machines (DTM) Model Token Machine Language (TML) Examples Evaluation / Conclusion / Future Works Discussion

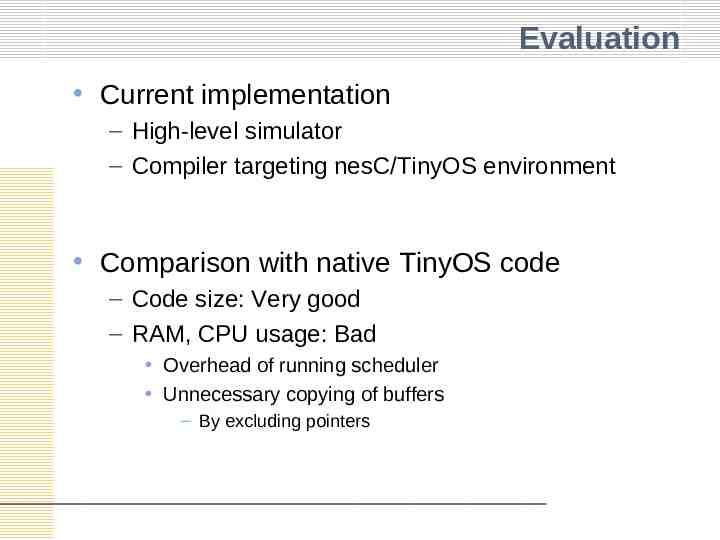

Evaluation Current implementation – High-level simulator – Compiler targeting nesC/TinyOS environment Comparison with native TinyOS code – Code size: Very good – RAM, CPU usage: Bad Overhead of running scheduler Unnecessary copying of buffers – By excluding pointers

Conclusion / Findings Atomic action model of concurrency is good because . – Preclude deadlocks – Easy reasoning about timing Communication bound to persistent storage ( tokens) is good because . – Give a way to refer to communications that have happened through the token they leave behind

Future Directions Dynamic retasking – As in Maté: Support Maté’s view: – “Separate representations for » End-user programming model » Code transport layer » Execution engine” – TML code should be compiled into Maté bytecode Focus on whole-program compilation – Numerous optimizations depend on whole program optimization Impossible in Maté architecture (Bytecode VM) Incorporate token-based algorithms – Routing, Consensus,.

Discussion Is DTM model enough capable? – To describe every mode of execution at each node Is token an appropriate abstraction? – How is it different from messages as in MPI? TML: explicit association between message and object Is TML – Versatile enough? Is macroprogramming a viable approach? – Easy? Expressive? Extensible? Flexible? What is missing? – Refinement of code for further optimization (by hand)?

References nesC: – TinyOS: – Samuel R. Madden, Mehul A. Shah, Joseph M. Hellerstein, and Vijayshankar Raman, “Continuously Adaptive Continuous Queries over Streams”, SIGMOD, 2002 COUGAR – Chalermek Intanagonwiwat, Ramesh Govindan and Deborah Estrin, “ Directed diffusion: A scalable and robust communication paradigm for sensor networks”, MobiCOM, 2000 TinyDB: – Ryan Newton and Matt Welsh, “Region Streams: Functional Macroprogramming for Sensor Networks”, DMSN, 2004 Directed Diffusion – Matt Welsh and Geoff Mainland , "Programming Sensor Networks Using Abstract Regions" [slides], NSDI, 2004 Regiment – Philip Levis and David Culler, "Maté: A Tiny Virtual Machine for Sensor Networks." ASPLOS-X, 2002 Philip Levis, David Gay, and David Culler, "Active Sensor Networks“, NSDI, 2005 Abstract Regions – Jason Hill, Robert Szewczyk, Alec Woo, Seth Hollar, David Culler and Kristofer Pister, “System architecture directions for network sensors” ASPLOS-IX, 2000 Maté/ASVM: – – David Gay, Philip Levis, Robert von Behren, Matt Welsh, Eric Brewer, and David Culler , "The nesC Language: A Holistic Approach to Network Embedded Systems" [citeseer], PLDI, 2003 Philippe Bonnet, J. E. Gehrke, and Praveen Seshadri, “Querying the Physical World”. IEEE Personal Communications, Vol. 7, No. 5, October 2000, pages 10-15 SNACK – Ben Greenstein, Eddie Kohler, and Deborah Estrin, “A Sensor Network Application Construction Kit (SNACK)”, SenSys, 2004

BACKUP SLIDES FROM HERE

TML Unified abstraction for – Communication Token is the only method of communication – Execution Token Handler is the only place of execution – Network state management Token Objects and Shared Memory

Subtoken Indexed token object All subtokens share a single token handler – for efficiency cf) component parameterization in nesC cf2) Can be seen as “multiple instances ( subtoken) of token class”

nesC [Gay, Levis, von Behren, Welsh, Brewer & Culler, 2003] C-based language Component-oriented – component with bidirectional interface Event-based execution model Concurrency model with extensive compiletime analysis – “Asynchronous code” / “Synchronous code” “Split-phase operations” – No blocking operations: Request / Completion

TinyOS [Hill, Szewczyk, Woo, Hollar, Culler & Pister, 2000] Implemented by nesC Event-based programming model Set of components – Rather than monolithic OS – Abstract hardware – Layering of components: “Command” and “Event” Services provided such as: – – – – RF messaging Periodic timer events Asynchronous access to UART data transfers Mechanism for static, persistent storage

Abstract Regions [Welsh & Mainland, 2004] Efficient communication primitives Provide interface for – Neighborhood discovery Initialize, may or may not be done continuously – Enumeration Get set of nodes in the region – Data sharing "Get" and "Put": tuple-space-like programming model – Reduction Reduce a shared variable across nodes in the region using the indicated associative operator (sum, max,.) Allow tuning the trade-off between accuracy and resource usage – By exposing low level tuning parameters: #retransmission, timeout interval.

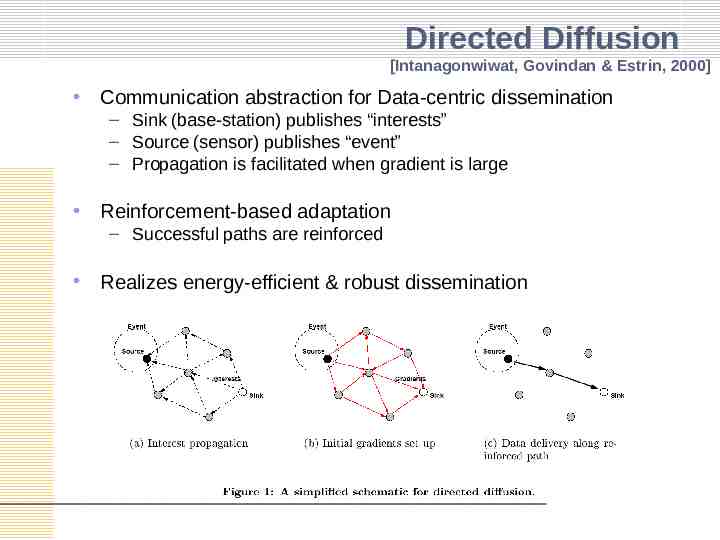

Directed Diffusion [Intanagonwiwat, Govindan & Estrin, 2000] Communication abstraction for Data-centric dissemination – Sink (base-station) publishes “interests” – Source (sensor) publishes “event” – Propagation is facilitated when gradient is large Reinforcement-based adaptation – Successful paths are reinforced Realizes energy-efficient & robust dissemination

Maté / ASVM [Levis & Culler, 2002], [Levis, Gay & Culler, 2005] Application specific virtual machine – To cope with different programming models for different application classes – Complete generality is not necessary for a given network Mainly for – Efficient dynamic reprogramming – Safety – (NOT for portability as in JVM) Expose new programming primitives by – Using application-specific opcodes

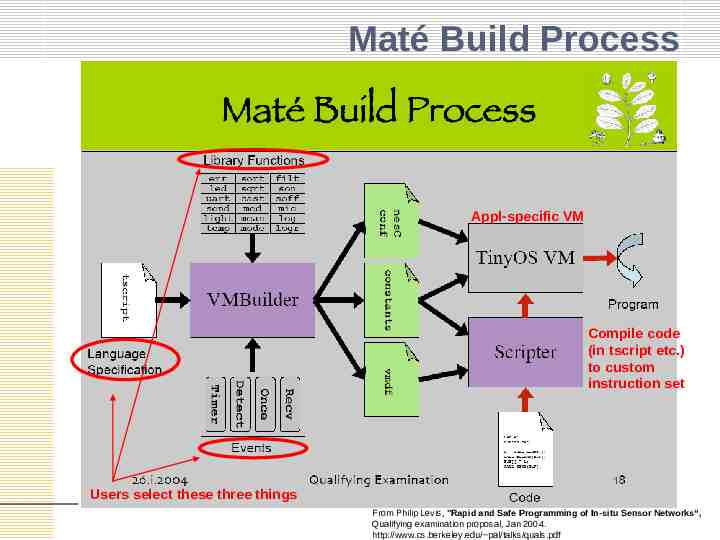

Maté Build Process Appl-specific VM Compile code (in tscript etc.) to custom instruction set Users select these three things From Philip Levis, "Rapid and Safe Programming of In-situ Sensor Networks“, Qualifying examination proposal, Jan 2004. http://www.cs.berkeley.edu/ pal/talks/quals.pdf

Maté / ASVM Features Customizable virtual machine framework – Event-driven, thread-based, stack architecture – Customizable execution events and instruction set Users select three things – A scripting language – A set of library functions – A set of events that trigger execution Maté builds a VM and scripting environment – – – – Instruction set customized for deployment VM provides needed services: routing, code propagation Scripts compile to custom instruction set VM automatically distributes new programs VM and scripter form an application-specific runtime

TML vs. Maté (According to TML paper) – Maté . provides only low-level radio communication directly within Maté, and uses application-specific opcodes – essentially a foreign function interface into other TinyOS components – to expose new communication primitives such as abstract regions. – In contrast, TML provides a coordinated communication and execution architecture, based on tokens, that is used to build up new communication abstractions. – Ideally, the user program should be compiled to a concise bytecode supported by a pre-installed virtual machine. We will look into targetting a virtual machine rather than native code, perhaps Maté itself. In short, – TML can be used on top of Maté

Regiment [Newton & Welsh, 2004] Purely functional macroprogramming language – No direct manipulation of program state High-level program manipulates “regions” of sensor data as values – Individual nodes appear as data streams – Regions are groupings of these streams Designated using a number of different criteria Program operates over these streams and regions – translated into node-level actions Example: Tracking Object A region stream is created that represents the value of the proximity sensor on every node in the network Each value is also annotated with the location of the corresponding sensor. Data items that fall below the threshold are filtered out. The spatial centroid of the remaining collection of sensor values is computed to determine the approximate location of the object that generated the readings