Big Data Quality the need for improved data quality for

52 Slides152.31 KB

Big Data Quality the need for improved data quality for effective management and decision making in a BD context Maria Teresa PAZIENZA a.a. 2018-19

Content How to use semantics to address the problem of big data variety/quality Variety refers to dealing with different types of sources, different formats of the data, and large numbers of sources.

Importing – challenges in integration 1. the ability to import different data formats into a common representation 2. when the sources are large it is not possible to read an entire source into main memory

Cleaning - challenges in integration 1. there are often noisy data, missing values, and inconsistencies that need to be identified and fixed. 2. the data in different sources is often represented in different and incompatible ways.

Modeling – challenges in integration One of the main challenges of integrating diverse data sets is to harmonize their representation. Even after we clean the data to normalize the values of individual attributes, we are left with two main problems. Nomenclature differences. Data sets from different providers often use different names (docenti/insegnanti/professori) to refer to attributes that have the same meaning Format and structure differences we need to convert all these into a common representation We could address these differences by modeling all data sets with respect to a common ontology

Modeling Modeling is the process of specifying how the different fields of a data set (columns in a database or spreadsheet, attributes in a JSON object, elements in an XML document) map to classes and properties in an ontology. In addition, it is necessary to specify how the different fields are related

Integrating data Information integration involves two main tasks: 1. integration at the schema level, that involves homogenizing differences in the schemas and nomenclature used to represent the data. 2. integration at the data level, that involves identifying records in different data sets that refer to the same real-world entity.

Data quality Data quality 1- is a subset of the larger challenge of ensuring that the results of the analysis are accurate or described in an accurate way. The problem is that you can almost never be sure in data quality. In most cases data are dirty. 2- has a significant effect on results and efficiency of

Data quality (as from intrerviews) Looking at basic statistics (central tendency and dispersion) about the data can give good insight into the data quality. No matter what you do, there’s always going to be dirty data lacking attributes entirely, missing values within attributes, and riddled with inaccuracies. Data quality management can involve checking for outliers/inconsistences, fixing missing values, making sure data in columns are within a reasonable range, data is accurate etc. Sometime you do not see problems with data immediately, but only after using them for training some models, where errors are accumulated and lead to wrong results and you have trace back what was wrong. It is not possible to “ensure” data quality, because you cannot say for sure that there isn’t something wrong with it somewhere

Data quality (as from intrerviews) Data quality is not enough, it must be automatically checked. In real applications it rarely happens that you get data once. Frequently you get a stream of data. To ensure data quality once you understand what problems may happen, you build data quality monitoring software. At every step of data processing pipelines I embed tests. They may check total amount of data, existence or non existence of certain values, anomalies in data, compare data to data from previous batch and so on. Data quality issues generally occur upstream in the data pipeline. Sometimes the data sources are within the same organization and sometimes data comes from a third-party application. You have to assume that data will not be clean and address the data quality issues in your application that processes data. To keep a data quality is mostly an adaptive process, for example, because provisions of national law may change or because the analytical aims and purposes of the data owner may vary. Data quality has many dimensions

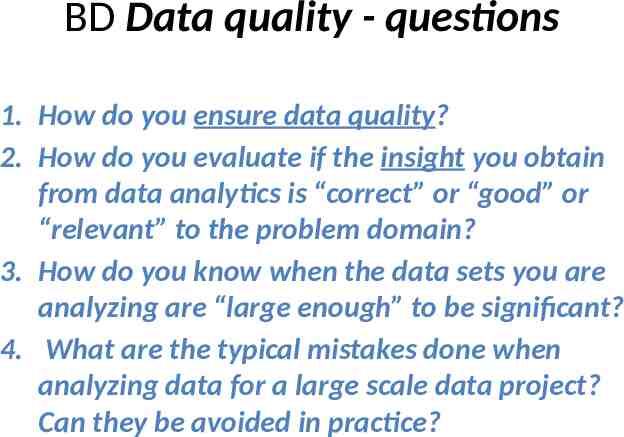

BD Data quality - questions 1. How do you ensure data quality? 2. How do you evaluate if the insight you obtain from data analytics is “correct” or “good” or “relevant” to the problem domain? 3. How do you know when the data sets you are analyzing are “large enough” to be significant? 4. What are the typical mistakes done when analyzing data for a large scale data project? Can they be avoided in practice?

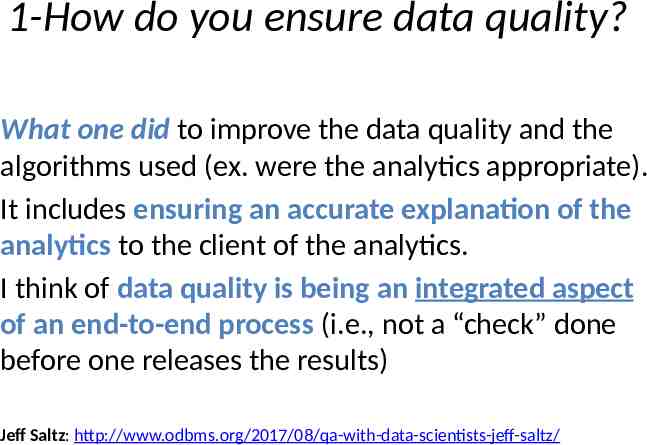

1-How do you ensure data quality? What one did to improve the data quality and the algorithms used (ex. were the analytics appropriate). It includes ensuring an accurate explanation of the analytics to the client of the analytics. I think of data quality is being an integrated aspect of an end-to-end process (i.e., not a “check” done before one releases the results) Jeff Saltz: http://www.odbms.org/2017/08/qa-with-data-scientists-jeff-saltz/

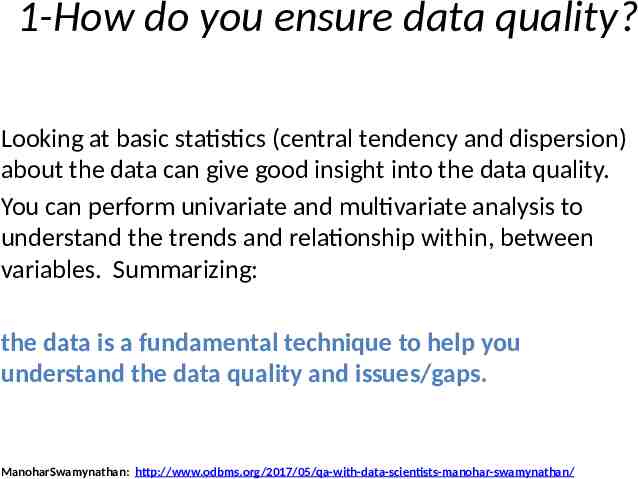

1-How do you ensure data quality? Looking at basic statistics (central tendency and dispersion) about the data can give good insight into the data quality. You can perform univariate and multivariate analysis to understand the trends and relationship within, between variables. Summarizing: the data is a fundamental technique to help you understand the data quality and issues/gaps. ManoharSwamynathan: http://www.odbms.org/2017/05/qa-with-data-scientists-manohar-swamynathan/

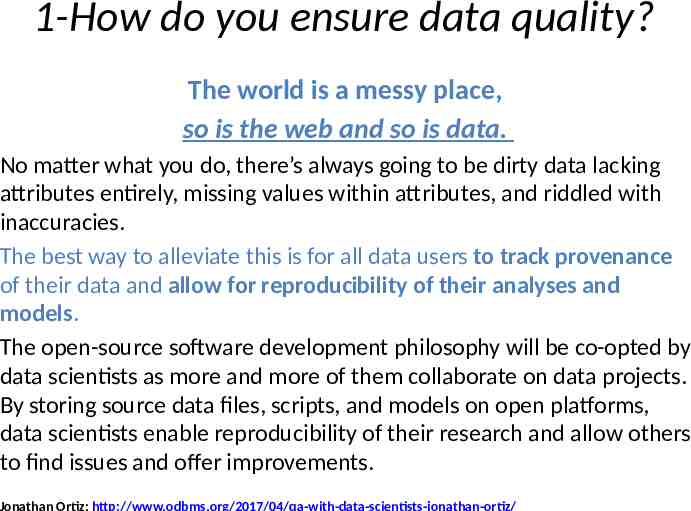

1-How do you ensure data quality? The world is a messy place, so is the web and so is data. No matter what you do, there’s always going to be dirty data lacking attributes entirely, missing values within attributes, and riddled with inaccuracies. The best way to alleviate this is for all data users to track provenance of their data and allow for reproducibility of their analyses and models. The open-source software development philosophy will be co-opted by data scientists as more and more of them collaborate on data projects. By storing source data files, scripts, and models on open platforms, data scientists enable reproducibility of their research and allow others to find issues and offer improvements.

1-How do you ensure data quality? Data quality management can involve checking for outliers/inconsistences, fixing missing values, making sure data in columns are within a reasonable range, data is accurate etc. All can be done during the data pre-processing and exploratory analysis stages. AnyaRumyantseva: http://www.odbms.org/2017/03/qa-with-data-scientists-anya-rumyantseva/

1-How do you ensure data quality? Understanding the data at hand by visual inspection. Ideally, browse through the raw data manually since our brain is a super powerful outlier detection apparatus. Do not try to check every value, just get an idea of how the raw data actually looks! Then, looking at the basic statistical moments (e.g. numbers and boxplots) to get a feeling how the data looks like. Once patterns are identified, parsers can be derived that apply certain rules to incoming data in a productive system. DirkTassiloHettich: http://www.odbms.org/2017/03/qa-with-data-scientists-dirk-tassilo/

1-How do you ensure data quality? It’s good practice to start with some exploratory data analysis before jumping to the modeling part. Doing some histograms and some time series is often enough to get a feeling for the data and know about potential gaps in the data, missing values, data ranges, etc. In addition, you should know where the data is coming from and what transformations it went through. Ones you know all this, you can start filling the gaps and cleaning your data. Eventually there is even another data set you want to take into account. For some model running in production, it’s a good idea to automate some data quality checks. These tests could be as simple as checking if the values are in the correct range or if there are any unexpected missing values And of course someone should be automatically notified if things go bad. Wolfgang Steitz:

1-How do you ensure data quality? For unsupervised problems: checking the contribution of the selected data to between groups heterogeneity and within groups homogeneity For supervised problems: checking the predictive performance of the selected data. PaoloGiudici: http://www.odbms.org/2017/03/qa-with-data-scientists-paolo-giudici/

1-How do you ensure data quality? The problem is that you can almost never be sure in data quality. In most cases data are dirty. You have to protect your customers from dirty data. You have to work to discover what problems with data you might have: problems are not trivial. Sometimes you can see them browsing data directly, frequently they can not. For example, in case of local business latitude longitude coordinates might be wrong because provided has a bad data geocoding system. To ensure data quality, once I understand what problems may happen, I build data quality monitoring software. At every step of data processing pipelines I embed tests, you may compare them with unit tests for traditional software development which checks quality of data. They may check total amount of data, existence or non existence of certain values, anomalies in data, compare data to data from previous batch and so on. It required significant error to build data quality tests, but it pays back, they protect from errors in data engineering, data science, incoming data, some system failures , it always pays back. Mike Shumpert: http://www.odbms.org/2017/03/qa-with-data-scientists-mike-shumpert/

1-How do you ensure data quality? This is again a vote for domain knowledge. I have someone with domain skills assess each data source manually. In addition I gather statistics on the accepted data sets so some significant changes will raise an alert which – again - has to be validated by a domain expert. RomeoKienzler: http://www.odbms.org/2017/03/qa-with-data-scientists-romeo-kienzler/

1-How do you ensure data quality? It is not possible to “ensure” data quality, because you cannot say for sure that there isn’t something wrong with it somewhere. In addition, there is also some research which suggests that compiled data are inherently filled with the (unintentional) bias of the people compiling it. You can attempt to minimize the problems with quality by ensuring that there is full provenance as to the source of the data, and err on the side of caution where some part of it is unclassified or possibly erroneous. One of the things we are researching at the moment is how best to leverage the wisdom of the crowd for ensuring quality of data, known as crowdsourcing. However, the best ways of optimising cost, accuracy or time remain to be determined and are different relative to the particular problem or motivation of the crowd one works with. Elena Simperl: http://www.odbms.org/2017/02/qa-with-data-scientists-elena-simperl/

1-How do you ensure data quality? It is a tough problem. Data quality issues generally occur upstream in the data pipeline. Sometimes the data sources are within the same organization and sometimes data comes from a third-party application. It is relatively easier to fix data quality issues if the source system is within the same organization. Even then, the source may be a legacy application that nobody wants to touch. So you have to assume that data will not be clean and address the data quality issues in your application that processes data. Data scientists use various techniques to address these issues. Again, domain knowledge helps. Mohammed Guller: http://www.odbms.org/2017/02/qa-with-data-scientists-mohammed-guller/

1-How do you ensure data quality? I tend to rely on the “wisdom of the crowd” by implementing similar analysis using multiple techniques and machine learning algorithms. When the results diverge, I compare the methods to gain any insight about the quality of both data as well as models. This technique works also well to validate the quality of streaming analytics: in this case the batch historical data can be used to double check the result in streaming mode, providing, for instance, end-ofday or end-of-month reporting for data correction and reconciliation. Natalino Busa:

1-How do you ensure data quality? Data quality is very important to make sure the analysis is correct and any predictive model we develop using that data is good. Very simply I would do some statistical analysis on the data, create some charts and visualize information. I also will clean data by making some choice at the time of data preparation. This would be part of the feature engineering stage that needs to be done before any modeling can be done. Vikas Rathee:

1-How do you ensure data quality? To keep a data quality is mostly an adaptive process for example, because provisions of national law may change or because the analytical aims and purposes of the data owner may vary. Therefore, the ensuring of a data quality should be performed regularly, it should be consistent with the law (data privacy aspects and others), and should be commonly performed by a team of experts of different education levels (e.g., data engineers, lawyers, computer scientists, mathematicians). ChristopherSchommer: http://www.odbms.org/2017/01/qa-with-data-scientists-christopher-schommer/

1-How do you ensure data quality? Make sure you know where the data comes from and what the records actually mean. Is it a static snapshot that was already processed in some way, or does it come from the primary source? Plotting histograms and profiling data in other ways is a good start to find outliners and data gaps that should undergo imputation (filling of data gaps with reasonable fillers). Measureing is key, so doing everything from inter-annotator agreement of the gold data over training, dev-test and test evaluations to human SME output grading consistently pays back the effort. Jochen Leidner: http://www.odbms.org/2017/01/qa-with-data-scientists-jochen-leidner/

1-How do you ensure data quality? The sad truth is – you cannot. Much is written about data quality and it is certainly a useful relative concept, but as an absolute goal it will remain an unachievable ideal (with the irrelevant exception of simulated data ). data quality has many dimensions it is inherently relative: the exact data can be quite good for one purpose and terrible for another data quality is a very different concept for ‘raw’ event log data vs. aggregated and processed data you almost never know what you don’t know about your data. In the end, all you can do is your best! Scepticism, experience, and some sense of data intuition are the best sources of guidance you will have. Claudia Perlich: http://www.odbms.org/2016/11/qa-with-data-scientists-claudia-perlich/

1-How do you ensure data quality? Data Quality is critical. We hear often from many of our clients that ensuring trust in the quality of information used for analysis is a priority. The thresholds and tolerance of data quality can vary across problem domains and industries but nevertheless data quality and validation processes should be tightly integrated into the data preparation steps. Data scientists should have full transparency on the profile and quality of datasets that they are working with and have tools at their disposal to remediate fixes with proper governance and procedures as necessary. Emerging data quality technologies are embedding leverages machine learning features to detect proactive data errors and make data quality a business-user friendly and an intelligent function more than ever it has been for years Ritesh Ramesh: http://www.odbms.org/2016/11/qa-with-data-scientists-ritesh-ramesh/

1-How do you ensure data quality? Data Quality is a fascinating question. It is possible to invest enormous levels of resource into attempting to ensure near perfect data quality and still fail. The critical question should, however, start from the Governance perspective of questions such as: What is the overall business Value of the intended analysis? How is the Value of the intended insight affected by different levels of data quality (or Veracity)? What is the level of Vulnerability to our organization (or other stakeholders) if the data is not perfectly correct in terms of reputation, or financial consequences? Once you have answers to those questions and the sensitivities of your project to various levels of data quality, you will then begin to have an idea of just what level of data quality you need to achieve. You will also then have some ideas about what metrics you need to develop and collect, in order to guide your data ingestion and data cleansing and filtering activities Richard J Self: http://www.odbms.org/2016/11/qa-with-data-scientists-richard-j-self/

1-How do you ensure data quality? Data Quality: good, correct, relevant, and missing data? good and correct: look for the predictive quality and explainability of the outcomes. relevant: should determine at the onset, which variables or parameters are relevant to the subject matter to be studied. Wait until the end, then it's already too late and irrelevant! missing data: should not be a problem, unless they are really severely missing. I rarely discard pertinent variables with missing data. Just use an appropriate segmentation (e.g. similarity) to group them with more fully data populated segment elements. In design, please remember you deal with "don't care" cell/situation very often.

2-How do you evaluate if the insight you obtain from data analytics is “correct” or “good” or “relevant” to the problem domain? With respect to being relevant, this should be addressed by our first topic of discussion – needing domain knowledge. It is the domain expert (either the data scientist or a different person) that is best positioned to determine the relevance of the results. Evaluating if the analysis is “good” or “correct” is much more difficult. It is one thing to try and do “good” analytics, but how does one evaluate if the analytics are “good” or “relevant”? I think this is an area ripe for future research. Today, there are various methods that I (and most others) use. While the actual techniques we use vary based on the data and analytics used, ensuring accurate results ranges from testing new algorithms with known data sets to point sampling results to ensure reasonable outcomes. Jeff Saltz: http://www.odbms.org/2017/08/qa-with-data-scientists-jeff-saltz/

2-How do you evaluate if the insight you obtain from data analytics is “correct” or “good” or “relevant” to the problem domain? Here’s a list of things I watch for: Proxy measurement bias. If the data is an accidental or indirect measurement, it may differ from the “real” behavior in some material way. Instrumentation coverage bias. The “visible universe” may differ from the “whole universe” in some systematic way. Analysis confirmation bias. Often the data will generate a signal for “the outcome that you look for”. It is important to check whether the signals for other outcomes are stronger. Data quality. If the data contains many NULL values, invalid values, duplicated data, missing data, or if different aspects or the data are not self-consistent, then the weight placed in the analysis should be appropriately moderated and communicated. Confirmation of well-known behavior. The data should reflect behavior that is common and well-known. For example, credit card transaction volumes should peak around well-known times of the year. If not, conclusions drawn from the data should be questioned. My view is that we should always view data and analysis with a healthy amount of skepticism, while acknowledging that many real-life decisions need only directional guidance from the data.

2- How do you evaluate if the insight you obtain from data analytics is “correct” or “good” or “relevant” to the problem domain? I think “good” insights are those that are both “relevant” and “correct,” and those are the ones you want to shoot for. Always have a baseline for comparison. You can do this either by experimenting, where you actually run a controlled test between different options and determine empirically which is the preferred outcome, or by comparing predictive models to the current ground truth or projected outcomes from current data. Also, solicit feedback about your results early and often by showing your customers, clients, and domain experts. Gather as much feedback as you can throughout the process in order to iterate on the models. JonathanOrtiz: http://www.odbms.org/2017/04/qa-with-data-scientists-jonathan-ortiz/

2- How do you evaluate if the insight you obtain from data analytics is “correct” or “good” or “relevant” to the problem domain? I would suggest constantly communicating with other people involved in a project. They can relate insights from data analytics to defined business metrics. For instance, if a developed data science solution decreases shutdown time of a factory from 5% to 4.5%, this is not that exciting for a mathematician. But for the factory owner it means going bankrupt or not! AnyaRumyantseva: http://www.odbms.org/2017/03/qa-with-data-scientists-anya-rumyantseva/

2- How do you evaluate if the insight you obtain from data analytics is “correct” or “good” or “relevant” to the problem domain? Presenting results to some domain experts and your customers usually helps. Try to get feedback early in the process to make sure you are working in the right direction and the results are relevant and actionable. Even better, collect expectations first to know how your work will be evaluated later-on. Wolfgang Steitz:

2- How do you evaluate if the insight you obtain from data analytics is “correct” or “good” or “relevant” to the problem domain? By testing its out-of-sample predictive performance we can check if it is correct. To check its relevance, the insights must be matched with domain knowledge models or consolidated results. PaoloGiudici: http://www.odbms.org/2017/03/qa-with-data-scientists-paolo-giudici/

2- How do you evaluate if the insight you obtain from data analytics is “correct” or “good” or “relevant” to the problem domain? Most frequently companies have some important metrics which describe company business. It might be the average revenue per session, the conversion rate, precision of the search engine etc. And your data insights are as good as they improve this metrics. Assume in e-commerce company, the main metrics is average revenue per session (ARPS). And you work on a project of improving extraction of a certain item attribute, for example, from non-structured text. Questions to ask yourself, will it help to improve ARPS by improving search because it will increase relevance for queries with color intents or faceted queries by color, or by providing better snippets, or by still other means. When one metric does not describe company business and many numbers are needed to understand it your data projects might be connected to other metrics. What’s important is to connect your data insight project to metrics which are representative of company business and improvement of these metrics will be as a significant impact to the company business. Such connection makes a good project. AndreiLopatenko: http://www.odbms.org/2017/03/qa-with-data-scientists-andrei-lopatenko/

2- How do you evaluate if the insight you obtain from data analytics is “correct” or “good” or “relevant” to the problem domain? This question links back to a couple of earlier questions nicely. The importance of having good enough domain knowledge comes into play in terms of answering the relevance question. Hopefully a data scientist will have a good knowledge of the domain, but if not then they need to be able to understand what the domain expert believes in terms of relevance to the domain. The correctness or value of the data then comes down to understanding how to evaluate machine learning algorithms in general, and using domain knowledge to apply to decide whether the trade-offs are appropriate given the domain. ElenaSimperl: http://www.odbms.org/2017/02/qa-with-data-scientists-elena-simperl/

2- How do you evaluate if the insight you obtain from data analytics is “correct” or “good” or “relevant” to the problem domain? This is where domain knowledge helps. In the absence of domain knowledge, it is difficult to verify whether the insight obtained from data analytics is correct. A data scientist should be able to explain the insights obtained from data analytics. If you cannot explain it, chances are that it may be just a coincidence. There is an old saying in machine learning, “if you torture data sufficiently, it will confess to almost anything.” Another way to evaluate your results is to compare it with the results obtained using a different technique. For example, you can do backtesting on historical data. Alternatively, compare your results with the results obtained using incumbent technique. It is good to have a baseline against which you can benchmark results obtained using a new technique. MohammedGuller: http://www.odbms.org/2017/02/qa-with-data-scientists-mohammed-guller /

2- How do you evaluate if the insight you obtain from data analytics is “correct” or “good” or “relevant” to the problem domain? Most of the time I interact with domain experts for a first review on the results. Subsequently, I make sure than the model is brought into “action”. Relevant insight, in my opinion, can always be assessed by measuring their positive impact on the overall application. Most of the time, as human interaction is part of the loop, the easiest method is to measure the impact of the relevant insight in their digital journey. Natalino Busa:

2-How do you evaluate if the insight you obtain from data analytics is “correct” or “good” or “relevant” to the problem domain? In order to make sure the insights are good and relevant we need to continuously ask ourselves what is the problem we are trying to solve and how it will be used. In simpler words, to make improvements in existing process we will need to understand the process and where the improvement is a requirement or of most value. For predictive modeling cases, we need to ask how the output of the predictive model will be applied and what additional business value can be derived from the output. We also need to convey what does the predictive model output means to avoid incorrect interpretation by non-experts. Once the context around a problem has been defined and we proceed to implement the machine learning solution. The immediate next stage is to verify if the solution will actually work. There are many techniques to measure the accuracy of predictions i.e. testing with historic data samples using techniques like k-fold cross validation, confusion matrix, r-square, absolute error, MAPE (Mean absolution percentage error), pvalue etc. We can choose from among many models which show most promising results. There are also ensemble algorithms which generalize the learning and avoid being over fit models.

2- How do you evaluate if the insight you obtain from data analytics is “correct” or “good” or “relevant” to the problem domain? In my understanding, an insight is already a valuable/evaluated information, which has been received after a detailed interpretation and which can be used for any kind of follow-up activities, for example to relocate the merchandise or to deeper dig in clusters showing a fraudulent behavior. However, it is less opportune to rely only on statistical values: an association rule, which shows a conditional probability of, e.g., 90% or more, may be an “insight”, but if the right-hand side of the rule refers to a plastic bag only (which is to be paid (3 cents), at least in Luxembourg), the discovered pattern might be uninteresting. ChristopherSchommer: http://www.odbms.org/2017/01/qa-with-data-scientists-christopher-schommer/

2- How do you evaluate if the insight you obtain from data analytics is “correct” or “good” or “relevant” to the problem domain? In a data rich domain, evaluation of the insight correctness is done either by applying the mathematical model to new “unseen” data or using cross-validation. This process is more complicated in human biology. As we have learned over the years, a promising cross-validation performance may not be reproducible in subsequent experimental data. The fact of the matter is, in life sciences, laboratory validation of computational insight is mandatory. The community perspective on computational or statistical discovery is generally skeptical until the novel analyse, therapeutic target, or biomarker is validated in additional confirmatory laboratory experiments, pre-clinical trials or human fluid samples SlavaAkmaev: http://www.odbms.org/2017/01/qa-with-data-scientists-slava-akmaev/

2- How do you evaluate if the insight you obtain from data analytics is “correct” or “good” or “relevant” to the problem domain? There is nothing quite as good as asking domain experts to vet samples of the output of a system. While this is time consuming and needs preparation (to make their input actionable), the closer the expert is to the real end user of the system (e.g. the customer’s employees using it day to day), the better. JochenLeidner: http://www.odbms.org/2017/01/qa-with-data-scientists-jochen-leidner/

2- How do you evaluate if the insight you obtain from data analytics is “correct” or “good” or “relevant” to the problem domain? One should not even have to ask whether the insight is relevant – one should have designed the analysis that led to the insight based on the relevant practical problem one is trying to solve! Let’s look at ‘correct’. What exactly does it mean? To me it somewhat narrowly means that it is ‘true’ given the data: did you do all the due diligence and right methodology to derive something from the data you had? Would somebody answering the same question on the same data come to the same conclusion (replicability)? You did not overfit, you did not pick up a spurious result that is statistically not valid, etc. Of course you cannot tell this from looking at the insight itself. You need to evaluate the entire process (or trust the person who did the analysis) to make a judgement on the reliability of the insight. Now to the ‘good’. To me good captures the leap from a ‘correct’ insight on the analyzed dataset to supporting the action ultimately desired. We do not just find insights in data for the sake of it. A good insight indeed generalizes beyond the (historical) data into the future. Lack of generalization is not just a matter of overfitting, it is also a matter of good judgement whether there is enough temporal stability in the process to hope that what I found yesterday is still correct tomorrow and maybe next week. Likewise we often have to make judgement calls when the data we really needed for the insight is simply not available. So we look at a related dataset (this is called transfer learning) and hope that it is similar enough for the generalization to carry over. Finally, good also incorporates the notion of correlation vs. causation. Many correlations are ‘correct’ but few of them are good for the action one is able to make. The (correct) fact that a person who is sick has temperature is ‘good’ for diagnosis, but NOT good for prevention of infection. At which point we are pretty much back to relevant! So think first about the problem and do good work next! ClaudiaPerlich: http://www.odbms.org/2016/11/qa-with-data-scientists-claudia-perlich /

2- How do you evaluate if the insight you obtain from data analytics is “correct” or “good” or “relevant” to the problem domain? The answer to this returns to the Domain Expert question. If you do not have adequate domain expertise in your team, this will be very difficult. Referring back to the USA election, one of the more unofficial pollsters, who got it pretty well right observed that he did it because he actually talked to real people. This is domain expertise and Small Data. All the official polling organizations have developed a total trust in Big Data and Analytics, because it can massively reduce the costs of the exercise. But they forget that we all lie unremittingly on line. See the first of the “All Watched Over by Machines of Loving Grace” documentaries at https://vimeo.com/groups/96331/videos/80799353 to get a flavour of this unreasonable trust in machines and big data.

2- How do you evaluate if the insight you obtain from data analytics is “correct” or “good” or “relevant” to the problem domain? If the model predicts that a there’s an 80% chance of success, you also need to read it as there’s still a 20% chance of failure. To really assess the ‘quality’ of insights from the model you may start with the below areas. Assess whether the model makes reasonable assumptions on the problem domain and takes into account all the relevant input variables and business context – I was recently reading an article on a U.S. based insurer who implemented an analytics model that looked for number of unfavorable traffic incidents to assess risk on the vehicle driver but they missed out on assigning weights to the severity of the traffic incident. If your model makes wrong contextual assumptions – the outcomes can backfire Assess whether the model is run on a sufficient sample of datasets. Modern scalable technologies have made executing analytical models on massive amounts of data possible. More data the better although every problem does not need large datasets of the same kind Assess where extraneous events like macroeconomic events, weather, consumer trends etc. are considered in the model constraints. Use of external data sets with real time API based integrations is highly encouraged since it adds more context to the model Assess the quality of data used as an input to the model. Feeding wrong data to a good analytics model and expecting it to produce the expected outcomes is an unreasonable expectation. Even successful organizations who execute seamlessly in generating insights struggle to “close the loop” in translating the insights into the field to drive shareholder value. It’s always a good practice to pilot the model on a small population, link its insights and actions to key operational and financial metrics, measure the outcomes and then decide whether to improve or discontinue the model

3- How do you know when the data sets you are analyzing are “large enough” to be significant? Don’t just collect a large pile of historic data from all sources and throw it to your big data engine. Note that many things might have changed over time such as business processes, operating condition, operating model, and systems/tools. So be cautious that your historic training dataset considered for model building should be large enough to capture the trends/patterns that are relevant to the current business problem. Let’s consider an example of a forecasting model which usually have three components i.e. seasonality, trend and cycle. If you are building a model that considers external weather factor as one of the independent variable, note that some parts of USA have seen comparatively extreme winters post 2015, however you do not know if this trend will continue or not. In this case you would require minimum of 2 years data to be able to confirm the seasonality trend repeats, but to be more confident on the trend you can look up to 5 or 6 years historic data, and anything beyond that might not be the actual representation of current trends. Manohar Swamynathan: http://www.odbms.org/2017/05/qa-with-data-scientists-manohar-swamynathan/

3- How do you know when the data sets you are analyzing are “large enough” to be significant? I understand the question like this: how do you know that you have enough samples? There is not a single formula for this, however in classification this heavily depends on the amount and distribution of classes you try to classify. Coming from a performance analysis point of view, one should ask how many samples are required in order to successfully perform n-fold crossvalidation. Then there is extensive work on permutation testing of machine learning performance results. Of course, Cohen’s d for effect size and or p-statistics deliver a framework for such assessment. DirkTassiloHettich: http://www.odbms.org/2017/03/qa-with-data-scientists-dirk-tassilo/

3- How do you know when the data sets you are analyzing are “large enough” to be significant? When estimations and/or predictions become quite stable under data and/or model variations. PaoloGiudici: http://www.odbms.org/2017/03/qa-with-data-scientists-paolo-giudici/

4- What are the typical mistakes done when analyzing data for a large scale data project? Can they be avoided in practice? Forget data quality and exploratory data analysis, rushing to the application of complex models. Forgetting that pre-processing is a key step, and that benchmarking the model versus simpler ones is always a necessary pre requisite. Paolo Giudici: http://www.odbms.org/2017/03/qa-with-data-scientists-paolo-giudici/

4- What are the typical mistakes done when analyzing data for a large scale data project? Can they be avoided in practice? Assuming that data are clean. Data quality should be examined and checked. AndreiLopatenko: http://www.odbms.org/2017/03/qa-with-data-scientists-andrei-lopatenko/