NASA IV&V Facility Software Independent Verification and Validation


NASA IV&V Facility Software Independent Verification and Validation (IV&V) NASA IV&V Facility Fairmont, West Virginia Judith N. Bruner Acting Director 304-367-8202 [email protected]

NASA IV&V Facility Content
Why are we discussing IV&V?
What is IV&V?
How is IV&V done?
IV&V process
Why perform IV&V?
Summary
Points of Contact

NASA IV&V Facility Why are we discussing IV&V?

NASA IV&V Facility Setting the Stage In the 1990s, the Commanding General of the Army’s Operational Test and Evaluation Agency noted that 90 percent of systems that were not ready for scheduled operational tests had been delayed by immature software.

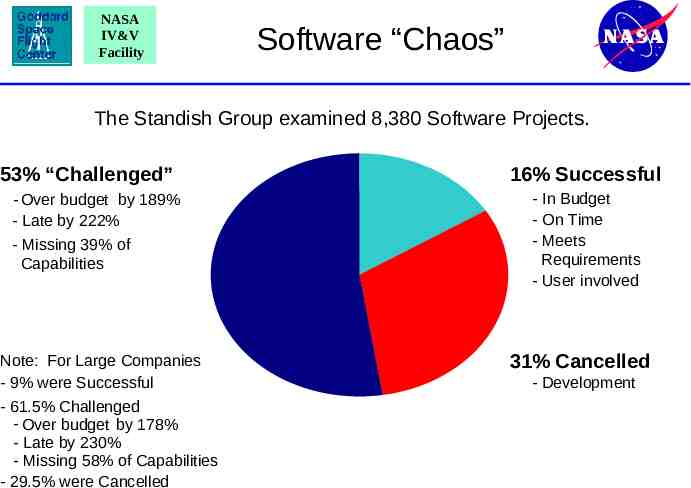

NASA IV&V Facility Software “Chaos”
The Standish Group examined 8,380 software projects.
16% Successful
  - In budget
  - On time
  - Meets requirements
  - User involved
53% “Challenged”
  - Over budget by 189%
  - Late by 222%
  - Missing 39% of capabilities
31% Cancelled
  - Development
Note: For large companies
  - 9% were Successful
  - 61.5% Challenged
    - Over budget by 178%
    - Late by 230%
    - Missing 58% of capabilities
  - 29.5% were Cancelled

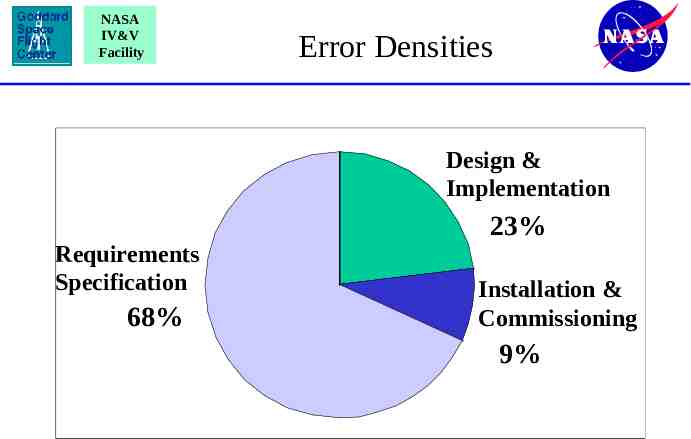

NASA IV&V Facility Error Densities Design & Implementation Requirements Specification 68% 23% Installation & Commissioning 9%

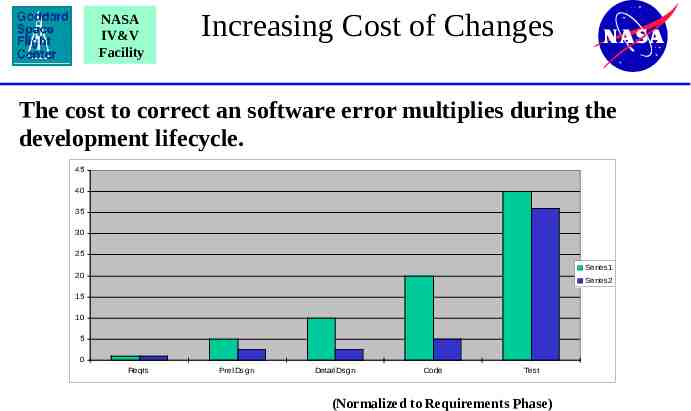

NASA IV&V Facility Increasing Cost of Changes
The cost to correct a software error multiplies during the development lifecycle.
[Chart: cost scale factor (axis 0 to 45, normalized to the requirements phase) by phase: Reqts, Prel Dsgn, Detail Dsgn, Code, Test; two data series]

NASA IV&V Facility What is IV&V?

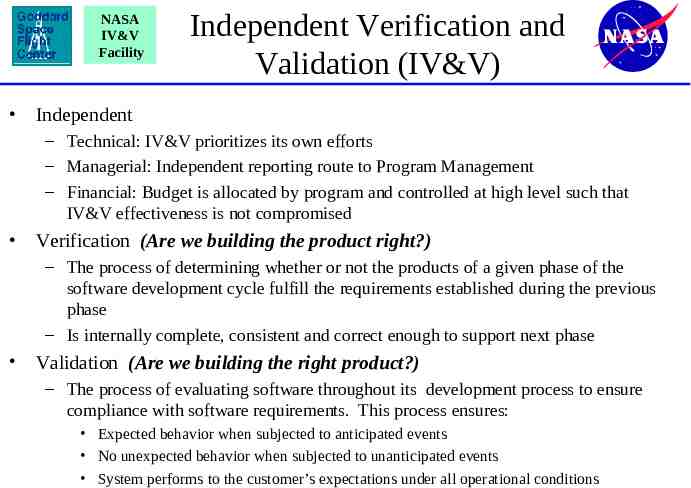

NASA IV&V Facility Independent Verification and Validation (IV&V)
Independent
  – Technical: IV&V prioritizes its own efforts
  – Managerial: Independent reporting route to Program Management
  – Financial: Budget is allocated by program and controlled at high level such that IV&V effectiveness is not compromised
Verification (Are we building the product right?)
  – The process of determining whether or not the products of a given phase of the software development cycle fulfill the requirements established during the previous phase
  – Each product is internally complete, consistent and correct enough to support the next phase
Validation (Are we building the right product?)
  – The process of evaluating software throughout its development process to ensure compliance with software requirements. This process ensures:
    - Expected behavior when subjected to anticipated events
    - No unexpected behavior when subjected to unanticipated events
    - System performs to the customer’s expectations under all operational conditions

NASA IV&V Facility Independent Verification & Validation Software IV&V is a systems engineering process employing rigorous methodologies for evaluating the correctness and quality of the software product throughout the software life cycle. It is adapted to the characteristics of the target program.

NASA IV&V Facility How is IV&V done?

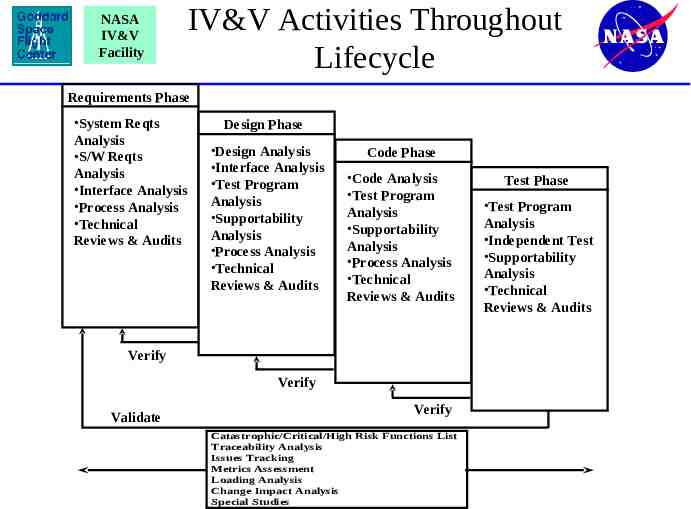

NASA IV&V Facility IV&V Activities Throughout Lifecycle
Requirements Phase
  – System Reqts Analysis
  – S/W Reqts Analysis
  – Interface Analysis
  – Process Analysis
  – Technical Reviews & Audits
Design Phase
  – Design Analysis
  – Interface Analysis
  – Test Program Analysis
  – Supportability Analysis
  – Process Analysis
  – Technical Reviews & Audits
Code Phase
  – Code Analysis
  – Test Program Analysis
  – Supportability Analysis
  – Process Analysis
  – Technical Reviews & Audits
Test Phase
  – Test Program Analysis
  – Independent Test
  – Supportability Analysis
  – Technical Reviews & Audits
Across phases
  – Catastrophic/Critical/High Risk Functions List
  – Traceability Analysis
  – Issues Tracking
  – Metrics Assessment
  – Loading Analysis
  – Change Impact Analysis
  – Special Studies
[Diagram arrow labels between phases: Verify, Verify, Validate, Verify]

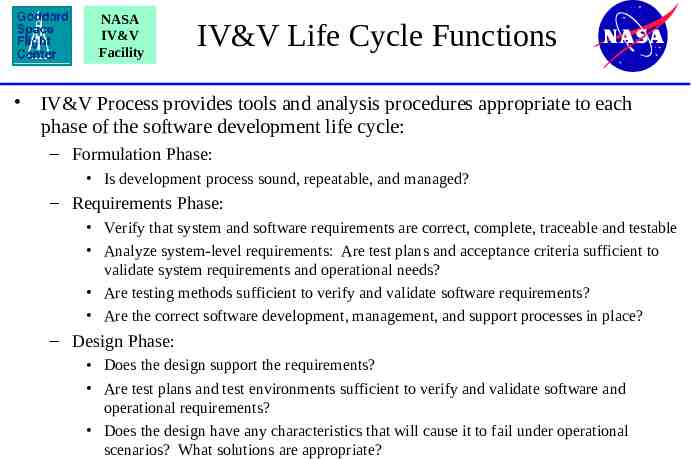

NASA IV&V Facility IV&V Life Cycle Functions
IV&V Process provides tools and analysis procedures appropriate to each phase of the software development life cycle:
  – Formulation Phase:
    - Is development process sound, repeatable, and managed?
  – Requirements Phase:
    - Verify that system and software requirements are correct, complete, traceable and testable
    - Analyze system-level requirements: Are test plans and acceptance criteria sufficient to validate system requirements and operational needs?
    - Are testing methods sufficient to verify and validate software requirements?
    - Are the correct software development, management, and support processes in place?
  – Design Phase:
    - Does the design support the requirements?
    - Are test plans and test environments sufficient to verify and validate software and operational requirements?
    - Does the design have any characteristics that will cause it to fail under operational scenarios? What solutions are appropriate?

NASA IV&V Facility IV&V Life Cycle Functions (cont.)
Typical IV&V functions by Software life-cycle phase (cont.):
  – Coding Phase:
    - Does the code reflect the design?
    - Is the code correct?
    - Verify that test cases trace to and cover software requirements and operational needs
    - Verify that software test cases, expected results, and evaluation criteria fully meet testing objectives
    - Analyze selected code unit test plans and results to verify full coverage of logic paths, range of input conditions, error handling, etc.
  – Test Phase:
    - Analyze correct dispositioning of software test anomalies
    - Validate software test results versus acceptance criteria
    - Verify tracing and successful completion of all software test objectives
  – Operational Phase:
    - Verify that regression tests are sufficient to identify adverse impacts of changes
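The check that test cases "trace to and cover" software requirements can be pictured as a small traceability query. The sketch below is only an illustration under stated assumptions: the function names, the requirement and test case identifiers, and the dictionary layout are all hypothetical and are not taken from any NASA IV&V tool.

```python
def untested_requirements(requirements, test_cases):
    """Return requirement IDs that no test case traces to (coverage gaps)."""
    covered = {req for traced in test_cases.values() for req in traced}
    return sorted(set(requirements) - covered)


def dangling_traces(requirements, test_cases):
    """Return (test case, requirement) pairs that trace to an unknown requirement."""
    known = set(requirements)
    return sorted(
        (test_id, req)
        for test_id, traced in test_cases.items()
        for req in traced
        if req not in known
    )


# Hypothetical data: requirement IDs and the requirements each test case claims to verify.
example_requirements = ["SRS-001", "SRS-002", "SRS-003"]
example_test_cases = {
    "TC-01": ["SRS-001"],
    "TC-02": ["SRS-001", "SRS-004"],
}

gaps = untested_requirements(example_requirements, example_test_cases)
bad_traces = dangling_traces(example_requirements, example_test_cases)
```

With this example data, `gaps` lists the two requirements that have no test case, and `bad_traces` flags the one test case that points at a requirement missing from the baseline.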

NASA IV&V Facility IV&V Testing Involvement
IV&V identifies deficiencies in the program’s test planning
The program changes its procedures to address the deficiencies, rather than IV&V testing independently
IV&V may independently test highly critical software using an IV&V testbed
  – Whitebox
  – Stress
  – Endurance
  – Limit
The developer is motivated to show that the software works; IV&V attempts to break the software

NASA IV&V Facility IV&V Process

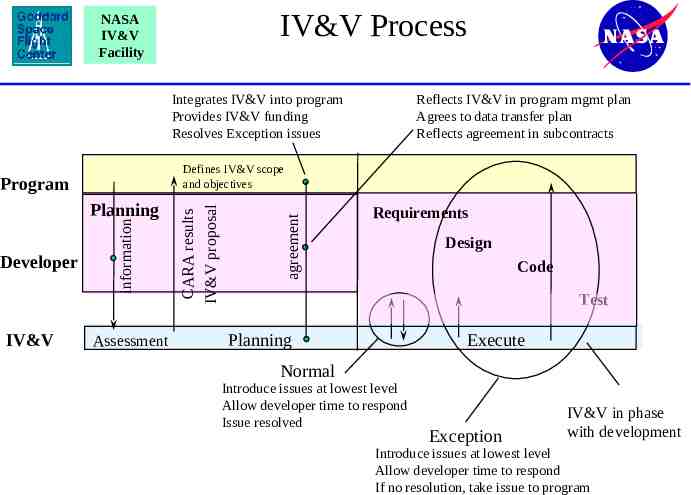

IV&V Process NASA IV&V Facility
[Process diagram]
Program
  – Integrates IV&V into program
  – Provides IV&V funding
  – Resolves Exception issues
IV&V
  – Assessment and Planning: CARA results, IV&V proposal
  – Defines IV&V scope and objectives
Flows between the parties: agreement, information
Program / Developer
  – Reflects IV&V in program mgmt plan
  – Agrees to data transfer plan
  – Reflects agreement in subcontracts
Execute (Requirements, Design, Code, Test): IV&V in phase with development
  – Normal: Introduce issues at lowest level; Allow developer time to respond; Issue resolved
  – Exception: Introduce issues at lowest level; Allow developer time to respond; If no resolution, take issue to program

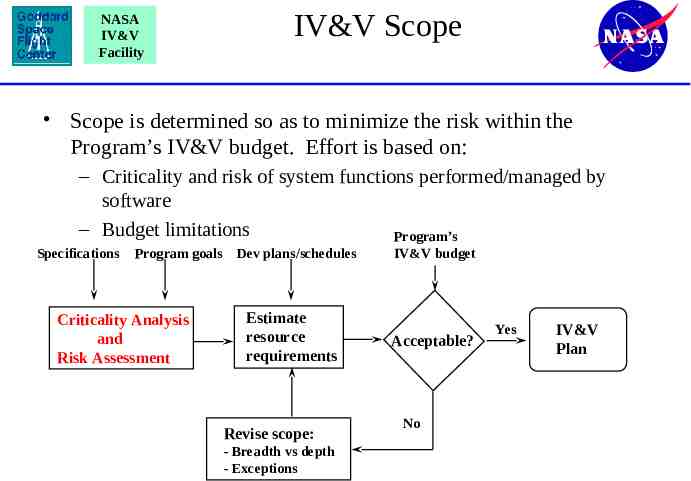

NASA IV&V Facility IV&V Scope
Scope is determined so as to minimize the risk within the Program’s IV&V budget.
Effort is based on:
  – Criticality and risk of system functions performed/managed by software
  – Budget limitations
[Flowchart: Program’s specifications, program goals, and dev plans/schedules feed the Criticality Analysis and Risk Assessment -> Estimate resource requirements -> compare with IV&V budget: acceptable? If no, revise scope (breadth vs depth, exceptions) and re-estimate; if yes, produce the IV&V Plan]
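One simple way to picture the "estimate, compare with budget, revise scope" loop is a greedy pass that funds the highest-scoring functions first and records the rest as exceptions. This is a sketch under assumptions, not the Facility's planning method: the effort figures, function names, and the greedy rule itself are hypothetical, and it models only breadth (which functions are covered), not depth of analysis.

```python
def plan_scope(functions, budget_hours):
    """Split functions into in-scope and exceptions under a fixed effort budget.

    functions: list of (name, cara_score, estimated_hours) tuples.
    Functions are considered from highest to lowest CARA score.
    """
    ranked = sorted(functions, key=lambda item: item[1], reverse=True)
    in_scope, exceptions = [], []
    remaining = budget_hours
    for name, _score, hours in ranked:
        if hours <= remaining:
            in_scope.append(name)
            remaining -= hours
        else:
            # Not affordable at this effort level: record as a scope exception.
            exceptions.append(name)
    return in_scope, exceptions


# Hypothetical functions with made-up scores and effort estimates.
example_functions = [
    ("telemetry", 4.5, 300),
    ("guidance", 9.0, 400),
    ("logging", 1.5, 200),
]
example_in_scope, example_exceptions = plan_scope(example_functions, budget_hours=750)
```

A real plan would also trade depth for breadth, for example by lowering the analysis level of a function instead of dropping it.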

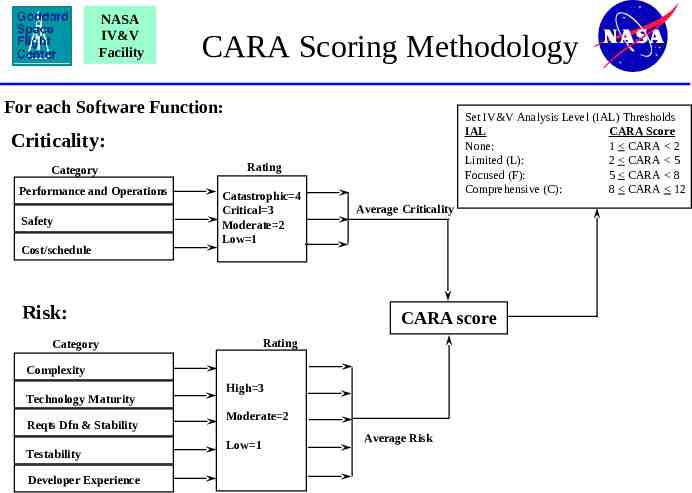

NASA IV&V Facility CARA Scoring Methodology
For each Software Function:
Criticality (rate each category, then average)
  – Categories: Performance and Operations, Safety, Cost/schedule
  – Ratings: Catastrophic 4, Critical 3, Moderate 2, Low 1
Risk (rate each category, then average)
  – Categories: Complexity, Technology Maturity, Reqts Dfn & Stability, Testability, Developer Experience
  – Ratings: High 3, Moderate 2, Low 1
CARA score = Average Criticality × Average Risk (which gives the 1 to 12 range used by the thresholds)
Set IV&V Analysis Level (IAL) Thresholds
  – None: CARA between 1 and 2
  – Limited (L): CARA between 2 and 5
  – Focused (F): CARA between 5 and 8
  – Comprehensive (C): CARA between 8 and 12
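The scoring arithmetic on this slide can be sketched in a few lines. Two points are assumptions rather than facts from the slide: the score is taken to be average criticality multiplied by average risk (consistent with the 1 to 12 threshold range), and each threshold's upper bound is treated as inclusive because the comparison symbols did not survive extraction. The example ratings are hypothetical.

```python
from statistics import mean

# Rating scales as listed on the slide.
CRITICALITY_RATING = {"catastrophic": 4, "critical": 3, "moderate": 2, "low": 1}
RISK_RATING = {"high": 3, "moderate": 2, "low": 1}

# Assumption: the upper bound of each band is inclusive.
IAL_BANDS = [(2, "None"), (5, "Limited"), (8, "Focused"), (12, "Comprehensive")]


def cara_score(criticality, risk):
    """Return average criticality times average risk (assumed combination rule)."""
    avg_criticality = mean(CRITICALITY_RATING[r] for r in criticality.values())
    avg_risk = mean(RISK_RATING[r] for r in risk.values())
    return avg_criticality * avg_risk


def analysis_level(score):
    """Map a CARA score in [1, 12] to an IV&V Analysis Level."""
    if not 1 <= score <= 12:
        raise ValueError(f"CARA score out of range: {score}")
    for upper_bound, level in IAL_BANDS:
        if score <= upper_bound:
            return level


# Hypothetical ratings for a single software function.
example_criticality = {
    "performance_and_operations": "critical",
    "safety": "catastrophic",
    "cost_schedule": "moderate",
}
example_risk = {
    "complexity": "high",
    "technology_maturity": "moderate",
    "requirements_definition_and_stability": "moderate",
    "testability": "high",
    "developer_experience": "low",
}
example_score = cara_score(example_criticality, example_risk)
example_level = analysis_level(example_score)
```

Here the average criticality is 3 and the average risk is 2.2, so the function scores about 6.6 and lands in the Focused band.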

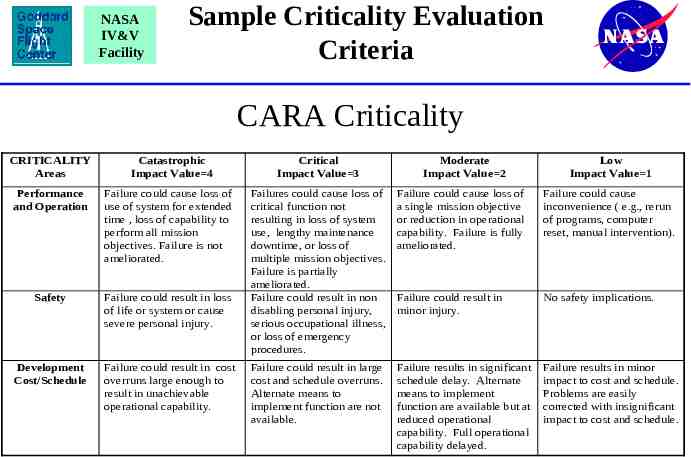

NASA IV&V Facility Sample Criticality Evaluation Criteria
CARA Criticality: criticality areas and impact values
Performance and Operation
  – Catastrophic (Impact Value 4): Failure could cause loss of use of system for extended time, loss of capability to perform all mission objectives. Failure is not ameliorated.
  – Critical (Impact Value 3): Failures could cause loss of critical function not resulting in loss of system use, lengthy maintenance downtime, or loss of multiple mission objectives. Failure is partially ameliorated.
  – Moderate (Impact Value 2): Failure could cause loss of a single mission objective or reduction in operational capability. Failure is fully ameliorated.
  – Low (Impact Value 1): Failure could cause inconvenience (e.g., rerun of programs, computer reset, manual intervention).
Safety
  – Catastrophic (Impact Value 4): Failure could result in loss of life or system or cause severe personal injury.
  – Critical (Impact Value 3): Failure could result in non disabling personal injury, serious occupational illness, or loss of emergency procedures.
  – Moderate (Impact Value 2): Failure could result in minor injury.
  – Low (Impact Value 1): No safety implications.
Development Cost/Schedule
  – Catastrophic (Impact Value 4): Failure could result in cost overruns large enough to result in unachievable operational capability.
  – Critical (Impact Value 3): Failure could result in large cost and schedule overruns. Alternate means to implement function are not available.
  – Moderate (Impact Value 2): Failure results in significant schedule delay. Alternate means to implement function are available but at reduced operational capability. Full operational capability delayed.
  – Low (Impact Value 1): Failure results in minor impact to cost and schedule. Problems are easily corrected with insignificant impact to cost and schedule.

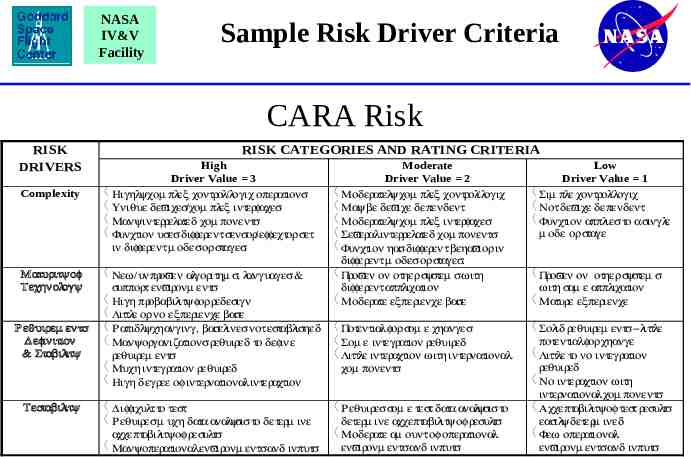

NASA IV&V Facility Sample Risk Driver Criteria
CARA Risk: risk drivers, risk categories and rating criteria
Complexity
  – High (Driver Value 3): Highly complex control/logic operations; Unique devices/complex interfaces; Many interrelated components; Function uses different sensor/effector set in different modes or stages
  – Moderate (Driver Value 2): Moderately complex control/logic; May be device dependent; Moderately complex interfaces; Several interrelated components; Function has different behavior in different modes or stages
  – Low (Driver Value 1): Simple control/logic; Not device dependent; Function applies to a single mode or stage
Maturity of Technology
  – High (Driver Value 3): New/unproven algorithms, languages & support environments; High probability for redesign; Little or no experience base
  – Moderate (Driver Value 2): Proven on other systems with different application; Moderate experience base
  – Low (Driver Value 1): Proven on other systems with same application; Mature experience
Requirements Definition & Stability
  – High (Driver Value 3): Rapidly changing, baselines not established; Many organizations required to define requirements; Much integration required; High degree of international interaction
  – Moderate (Driver Value 2): Potential for some changes; Some integration required; Little interaction with international components
  – Low (Driver Value 1): Solid requirements - little potential for change; Little to no integration required; No interaction with international components
Testability
  – High (Driver Value 3): Difficult to test; Requires much data analysis to determine acceptability of results; Many operational environments and inputs
  – Moderate (Driver Value 2): Requires some test data analysis to determine acceptability of results; Moderate amount of operational environments and inputs
  – Low (Driver Value 1): Acceptability of test results easily determined; Few operational environments and inputs

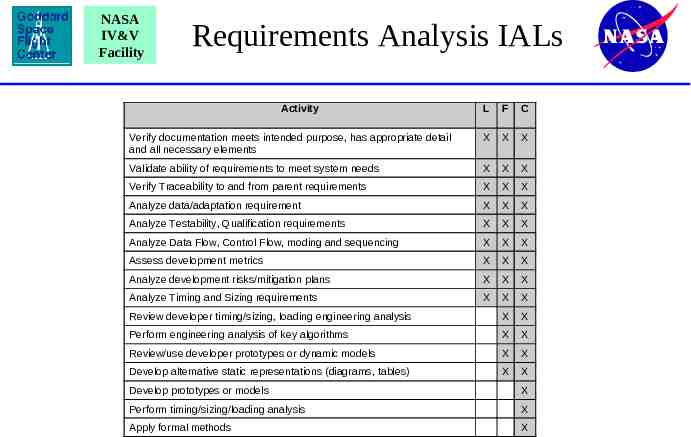

NASA IV&V Facility Requirements Analysis IALs
Activities by IV&V Analysis Level (L = Limited, F = Focused, C = Comprehensive)
Levels L, F and C:
  – Verify documentation meets intended purpose, has appropriate detail and all necessary elements
  – Validate ability of requirements to meet system needs
  – Verify Traceability to and from parent requirements
  – Analyze data/adaptation requirement
  – Analyze Testability, Qualification requirements
  – Analyze Data Flow, Control Flow, moding and sequencing
  – Assess development metrics
  – Analyze development risks/mitigation plans
  – Analyze Timing and Sizing requirements
Levels F and C:
  – Review developer timing/sizing, loading engineering analysis
  – Perform engineering analysis of key algorithms
  – Review/use developer prototypes or dynamic models
  – Develop alternative static representations (diagrams, tables)
Level C only:
  – Develop prototypes or models
  – Perform timing/sizing/loading analysis
  – Apply formal methods
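Read as a lookup, the L/F/C columns say which activities apply once a function's analysis level is known. The sketch below assumes the X marks are cumulative (an activity marked with three X's applies at L, F and C, two X's at F and C, one X at C only), which the flattened table suggests but does not state. Only a subset of the listed activities is included, and the data structure is hypothetical.

```python
# Analysis levels in increasing depth: Limited, Focused, Comprehensive.
LEVELS = ["L", "F", "C"]

# Subset of the requirements analysis activities, keyed to the lowest level
# at which each is assumed to apply.
REQUIREMENTS_ACTIVITIES = {
    "Verify traceability to and from parent requirements": "L",
    "Analyze timing and sizing requirements": "L",
    "Perform engineering analysis of key algorithms": "F",
    "Develop prototypes or models": "C",
    "Apply formal methods": "C",
}


def activities_for(level):
    """Return the activities performed at the given analysis level."""
    rank = LEVELS.index(level)
    return [
        name
        for name, minimum in REQUIREMENTS_ACTIVITIES.items()
        if LEVELS.index(minimum) <= rank
    ]


limited = activities_for("L")
focused = activities_for("F")
comprehensive = activities_for("C")
```

Each deeper level returns everything from the shallower levels plus its own additions, so `comprehensive` contains every activity in the dictionary.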

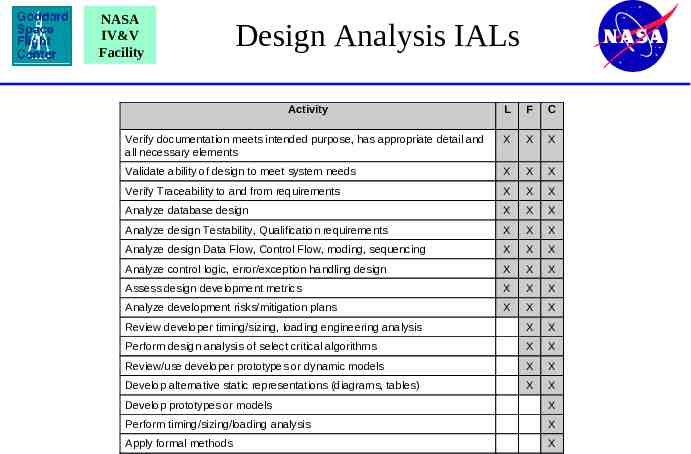

NASA IV&V Facility Design Analysis IALs
Activities by IV&V Analysis Level (L = Limited, F = Focused, C = Comprehensive)
Levels L, F and C:
  – Verify documentation meets intended purpose, has appropriate detail and all necessary elements
  – Validate ability of design to meet system needs
  – Verify Traceability to and from requirements
  – Analyze database design
  – Analyze design Testability, Qualification requirements
  – Analyze design Data Flow, Control Flow, moding, sequencing
  – Analyze control logic, error/exception handling design
  – Assess design development metrics
  – Analyze development risks/mitigation plans
Levels F and C:
  – Review developer timing/sizing, loading engineering analysis
  – Perform design analysis of select critical algorithms
  – Review/use developer prototypes or dynamic models
  – Develop alternative static representations (diagrams, tables)
Level C only:
  – Develop prototypes or models
  – Perform timing/sizing/loading analysis
  – Apply formal methods

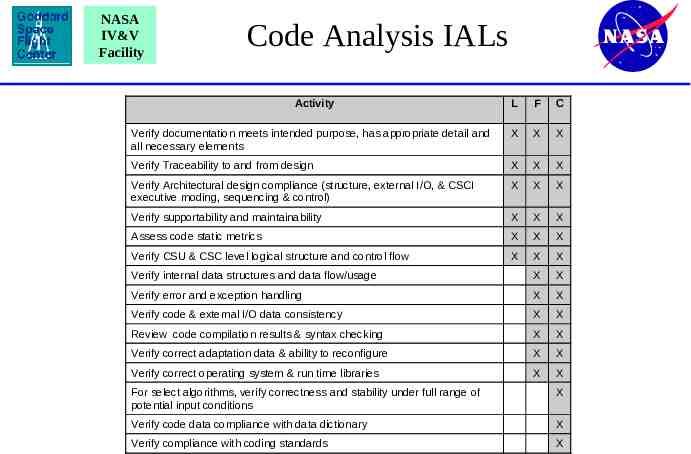

NASA IV&V Facility Code Analysis IALs
Activities by IV&V Analysis Level (L = Limited, F = Focused, C = Comprehensive)
Levels L, F and C:
  – Verify documentation meets intended purpose, has appropriate detail and all necessary elements
  – Verify Traceability to and from design
  – Verify Architectural design compliance (structure, external I/O, & CSCI executive moding, sequencing & control)
  – Verify supportability and maintainability
  – Assess code static metrics
  – Verify CSU & CSC level logical structure and control flow
Levels F and C:
  – Verify internal data structures and data flow/usage
  – Verify error and exception handling
  – Verify code & external I/O data consistency
  – Review code compilation results & syntax checking
  – Verify correct adaptation data & ability to reconfigure
  – Verify correct operating system & run time libraries
Level C only:
  – For select algorithms, verify correctness and stability under full range of potential input conditions
  – Verify code data compliance with data dictionary
  – Verify compliance with coding standards

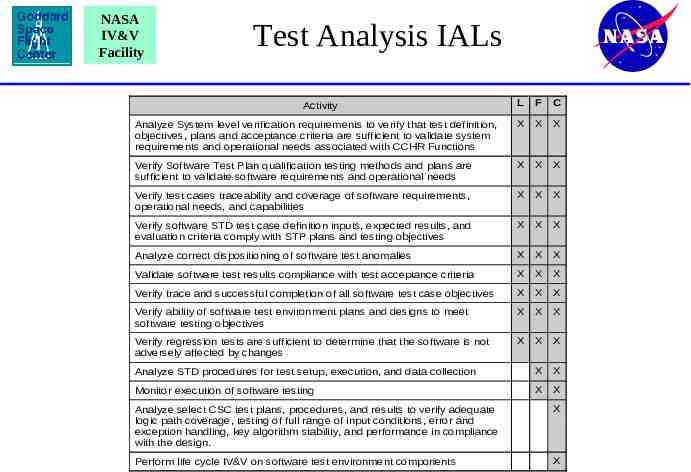

NASA IV&V Facility Test Analysis IALs
Activities by IV&V Analysis Level (L = Limited, F = Focused, C = Comprehensive)
Levels L, F and C:
  – Analyze System level verification requirements to verify that test definition, objectives, plans and acceptance criteria are sufficient to validate system requirements and operational needs associated with CCHR Functions
  – Verify Software Test Plan qualification testing methods and plans are sufficient to validate software requirements and operational needs
  – Verify test cases traceability and coverage of software requirements, operational needs, and capabilities
  – Verify software STD test case definition inputs, expected results, and evaluation criteria comply with STP plans and testing objectives
  – Analyze correct dispositioning of software test anomalies
  – Validate software test results compliance with test acceptance criteria
  – Verify trace and successful completion of all software test case objectives
  – Verify ability of software test environment plans and designs to meet software testing objectives
  – Verify regression tests are sufficient to determine that the software is not adversely affected by changes
Levels F and C:
  – Analyze STD procedures for test setup, execution, and data collection
  – Monitor execution of software testing
Level C only:
  – Analyze select CSC test plans, procedures, and results to verify adequate logic path coverage, testing of full range of input conditions, error and exception handling, key algorithm stability, and performance in compliance with the design
  – Perform life cycle IV&V on software test environment components

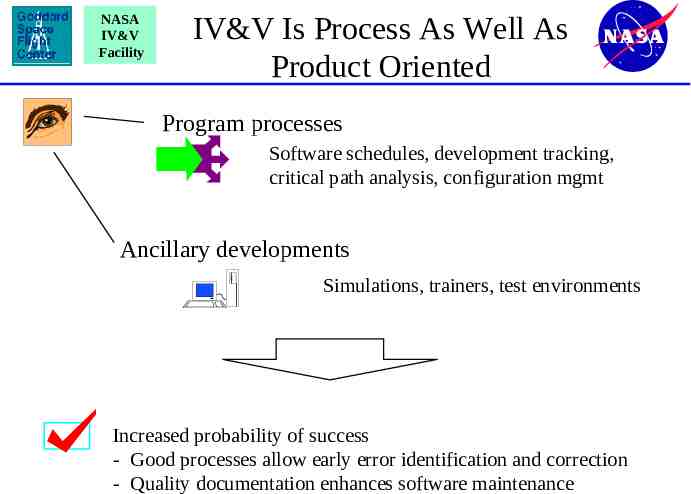

NASA IV&V Facility IV&V Is Process As Well As Product Oriented
Program processes
  – Software schedules, development tracking, critical path analysis, configuration mgmt
Ancillary developments
  – Simulations, trainers, test environments
Increased probability of success
  - Good processes allow early error identification and correction
  - Quality documentation enhances software maintenance

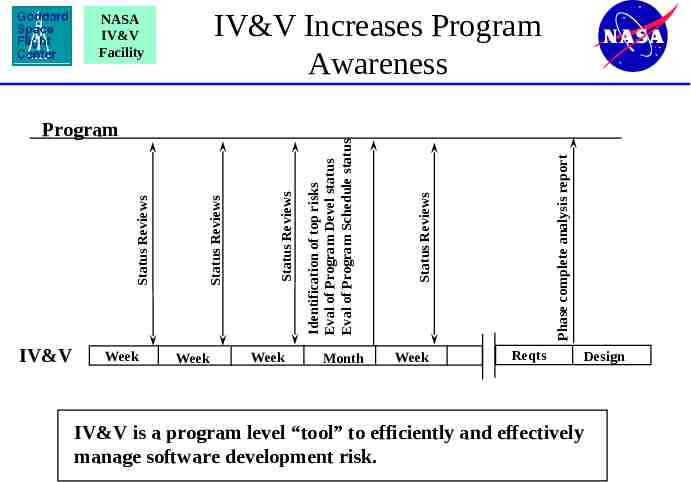

NASA IV&V Facility IV&V Increases Program Awareness
IV&V is a program level “tool” to efficiently and effectively manage software development risk.
[Timeline diagram, IV&V to Program, across the Reqts and Design phases: Status Reviews at the Week and Month marks; analysis report at phase completion. Content provided to the Program: Identification of top risks; Eval of Program Devel status; Eval of Program Schedule status]

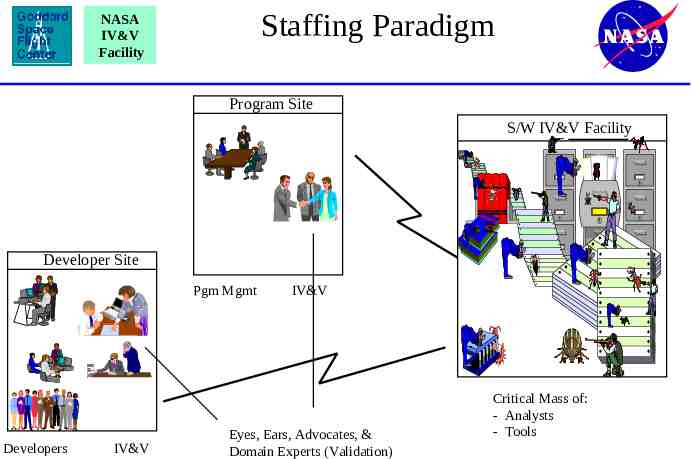

Staffing Paradigm NASA IV&V Facility
[Staffing diagram]
Program Site: Pgm Mgmt, IV&V
Developer Site: Developers, IV&V
IV&V at the sites: Eyes, Ears, Advocates, & Domain Experts (Validation)
S/W IV&V Facility: Critical Mass of:
  - Analysts
  - Tools

NASA IV&V Facility Why perform IV&V?

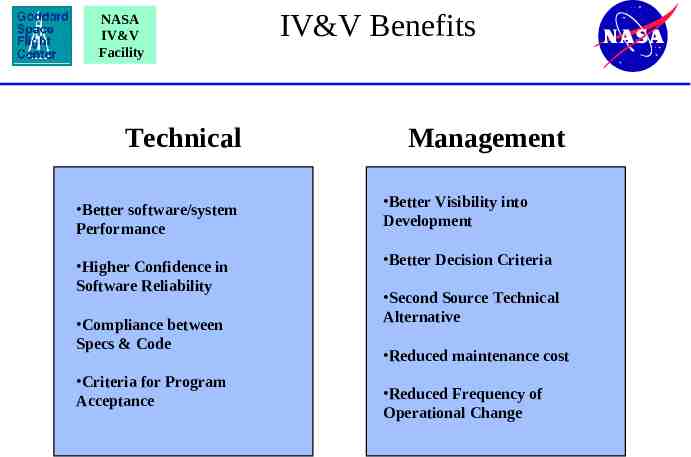

NASA IV&V Facility IV&V Benefits
Technical and Management benefits:
  – Better software/system Performance
  – Better Visibility into Development
  – Higher Confidence in Software Reliability
  – Better Decision Criteria
  – Compliance between Specs & Code
  – Criteria for Program Acceptance
  – Second Source Technical Alternative
  – Reduced maintenance cost
  – Reduced Frequency of Operational Change

NASA IV&V Facility Summary

NASA IV&V Facility IV&V Key Points
IV&V works with the Project
  – Goal is project success
IV&V is an engineering discipline
  – IV&V processes are defined and tailored to the specific program
  – Mission, operations and systems knowledge is used to perform engineering analyses of system components
IV&V is most effective when started early
  – 70% of errors found in testing are traceable to problems in the requirements and design
IV&V works problems at the lowest possible level
  – Primarily work via established informal interfaces with the development organization - working groups, IPTs, etc.
  – Elevate issues only when necessary

NASA IV&V Facility IV&V Approach Efficiently Mitigates Risk
It is not necessary or feasible to perform all IV&V analyses on all software functions
IV&V resources are allocated to reduce overall exposure to operational, development, and cost/schedule risks
  – Software functions with higher criticality and development risk receive enhanced levels of analysis (‘CARA’ process)
  – Systems analyses performed to reduce costly interface and integration problems
  – Process analyses performed to verify ability to produce desired result relative to program plans, needs and goals
IV&V working interfaces promote timely problem resolution
  – Proactive participation on pertinent development teams
  – Emphasis on early identification of technical problems
  – Engineering recommendations provided to expedite solution development and implementation

NASA IV&V Facility Analyses Are Value Added and Complementary - Not Duplicative
Analyses performed from a systems perspective considering mission needs and system use, hazards and interfaces
  – Discipline experts assigned to perform analysis across all life cycle phases
  – Horizontal specialty skills are matrixed across IV&V functional teams to verify correct systems integration
  – Specialized tools and simulations perform complex analyses
IV&V testing activities complement developer testing, enhancing overall software confidence
  – Developer testing focuses on demonstrating nominal behavior; IV&V testing activities try to break the software
Overall program integration, test and verification approach analyzed for completeness, integrity and effectiveness

NASA IV&V Facility Why use NASA IV&V Facility? Software IV&V, as practiced by the NASA Software IV&V Facility, is a well-defined, proven, systems engineering discipline designed to reduce the risk in major software developments.

NASA IV&V Facility NASA IV&V Facility Points of Contact Judy Bruner Acting Director 304-367-8202 [email protected] Bill Jackson Deputy Director 304-367-8215 [email protected]