Grid Job and Information Management (JIM) for D0 and CDF


Gabriele Garzoglio for the JIM Team

Overview
- Introduction
- Grid-level Management
  - SAM-Grid
  - SAM
  - JIM
  - Job Management
  - Information Management
- Fabric-level Management
  - Running jobs on grid resources
  - Local sandbox management
  - The DZero Application Framework
  - Running MC at UWisc

Context
- The D0 Grid project started in 2001-2002 to handle D0's expanded need for globally distributed computing
- JIM complements the data handling system (SAM) with job and information management
- JIM is funded by PPDG (our team here) and GridPP (Rod Walker in the UK)
- It is a collaborative effort with the experiments; CDF joined later in 2002

History
- Oct 10, 2002: delivered the JIM prototype for D0, with:
  - remote job submission
  - brokering based on cached data
  - Web-based monitoring
- SC-2002: demo with 11 sites (D0, CDF), a big success
- May 2003: started deployment of V1
- Now: working on running MC in production on the Grid


SAM-Grid Logistics
[Diagram: flow of jobs, data, and job meta-data from the User Interfaces and Submission Clients through the Global Job Queue, the Resource Selector (Match Making), and the Info Gatherer/Info Collector; Global DH Services (SAM Naming Server, SAM Log Server, Resource Optimizer, SAM DB Server, RC MetaData Catalog, Bookkeeping Service); per-site components: Grid Gateway, Local Job Handler (CAF, D0MC, BS, ...), SAM Station and other services, SAM Stager(s), MSS, cache, distributed FS, AAA, worker nodes, Info Manager, MDS Info Providers, Web Services, Grid Monitoring, XML DB server, site configuration, and the global/local JID map; User Tools.]

Job Management Highlights
- We distinguish grid-level (global) job scheduling, i.e. selecting the cluster a job runs on, from local scheduling, i.e. distributing the job within that cluster
- We consider 3 types of jobs:
  - analysis: data intensive
  - Monte Carlo: CPU intensive
  - reconstruction: data and CPU intensive

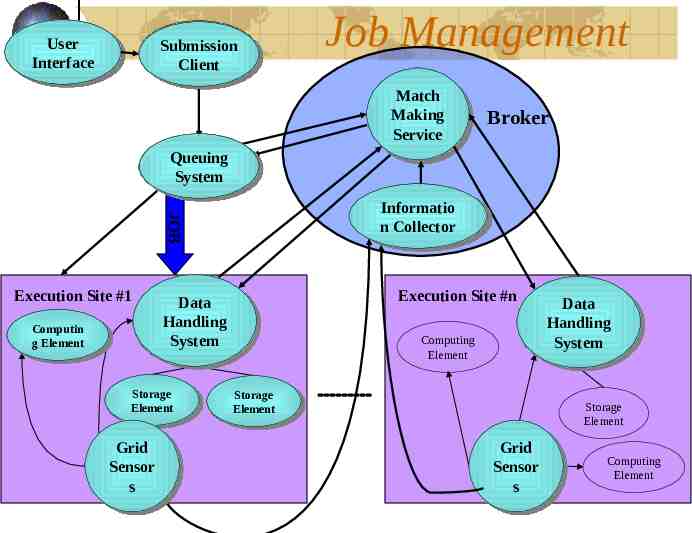

Job Management: Distinct JIM Features
- Decision making is based on both:
  - information that exists irrespective of jobs (resource descriptions)
  - functions of (job, resource) pairs
- Decision making is interfaced with the data handling middleware
- Decision making lives entirely within the Condor framework (no resource broker of our own): strong promotion of standards and interoperability
- Brokering algorithms can be extended via plug-ins
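The brokering idea on this slide (hard requirements plus extensible ranking over (job, resource) pairs, with cached data as one input) can be illustrated with a small Python sketch. It is not JIM's code: JIM delegates matchmaking to Condor, and the class names, fields, and per-job-type plug-in policy below are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Set


@dataclass
class Resource:
    """A cluster advertisement (hypothetical fields, loosely ClassAd-like)."""
    name: str
    free_cpus: int
    cached_files: Set[str] = field(default_factory=set)


@dataclass
class Job:
    name: str
    job_type: str  # "analysis", "montecarlo", or "reconstruction"
    input_files: Set[str] = field(default_factory=set)
    min_cpus: int = 1


# A rank plug-in maps (job, resource) -> score; higher is better.
RankPlugin = Callable[[Job, Resource], float]


def cached_fraction(job: Job, res: Resource) -> float:
    """Fraction of the job's input files already cached at the resource."""
    if not job.input_files:
        return 0.0
    return len(job.input_files & res.cached_files) / len(job.input_files)


def free_cpu_rank(job: Job, res: Resource) -> float:
    """Prefer resources with more idle CPUs."""
    return float(res.free_cpus)


def requirements(job: Job, res: Resource) -> bool:
    """Hard constraint: the resource must have enough free CPUs."""
    return res.free_cpus >= job.min_cpus


# Hypothetical policy: which rank plug-ins apply to each job type.
PLUGINS: Dict[str, List[RankPlugin]] = {
    "analysis": [cached_fraction],                        # data intensive
    "montecarlo": [free_cpu_rank],                        # CPU intensive
    "reconstruction": [cached_fraction, free_cpu_rank],   # both
}


def match(job: Job, resources: List[Resource]) -> Optional[Resource]:
    """Filter by requirements, then pick the best-ranked resource (None if none fit)."""
    candidates = [r for r in resources if requirements(job, r)]
    if not candidates:
        return None
    plugins = PLUGINS[job.job_type]
    # Rank tuples compare lexicographically, so the first plug-in dominates.
    return max(candidates, key=lambda r: tuple(p(job, r) for p in plugins))
```

With sites `siteA` (10 free CPUs, caching f1), `siteB` (200 free CPUs, caching nothing), and `siteC` (50 free CPUs, caching f1 and f2), an analysis job reading f1 and f2 goes to siteC, while a Monte Carlo job goes to siteB. Ranking by tuples makes the first plug-in dominant; a weighted sum of plug-in scores would be an equally plausible design.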

[Diagram: Job Management. The User Interface and Submission Client feed the Broker (Match Making Service, Queuing System), which consults the Information Collector and dispatches jobs to Execution Sites #1 ... #n; each site has Computing Element(s), Storage Element(s), a Data Handling System, and Grid Sensors.]

Information Management
In JIM's view, this includes:
- the configuration framework
- resource description for job brokering
- infrastructure for monitoring
Main features:
- monitoring of sites (resources) and jobs
- distributed knowledge about jobs, etc.
- incremental knowledge building
- GMA (Grid Monitoring Architecture) for current-state inquiries, logging for recent-history studies
- all Web based
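The split between current-state inquiries and logs for recent history can be illustrated with a tiny in-memory store. This is a hypothetical Python sketch, not JIM's implementation (which is Web based and built on components such as MDS and an XML DB); the class and method names are invented for illustration.

```python
import time
from typing import Any, Dict, List, Optional, Tuple


class InfoStore:
    """Hypothetical info store: latest value per (entity, attribute) plus an append-only log."""

    def __init__(self) -> None:
        self.current: Dict[Tuple[str, str], Any] = {}
        self.log: List[Tuple[float, str, str, Any]] = []

    def publish(self, entity: str, attribute: str, value: Any,
                timestamp: Optional[float] = None) -> None:
        ts = time.time() if timestamp is None else timestamp
        # Current state: each new report overwrites the previous one.
        self.current[(entity, attribute)] = value
        # History: every report is kept, so knowledge accumulates incrementally.
        self.log.append((ts, entity, attribute, value))

    def query(self, entity: str, attribute: str) -> Any:
        """Answer a current-state inquiry; None if nothing was ever reported."""
        return self.current.get((entity, attribute))

    def history(self, entity: str, since: float = 0.0) -> List[Tuple[float, str, Any]]:
        """Return (timestamp, attribute, value) records for `entity` at or after `since`."""
        return [(ts, attr, val) for ts, ent, attr, val in self.log
                if ent == entity and ts >= since]
```

Publishing "queued", "running", and "done" for a job's status at times 1, 2, and 3 makes `query` return "done", while `history(..., since=2.0)` still returns the last two transitions.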

Information Management via Site Configuration
[Diagram: a configuration template (XML) and the main site/cluster configuration (XML DB) are processed with XSLT to produce the resource advertisement (ClassAd), the monitoring configuration (LDIF), and the service instantiation (XML).]
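The slide describes deriving a ClassAd resource advertisement from the XML site configuration via XSLT. Python's standard library has no XSLT processor, so this sketch performs an equivalent mapping with `xml.etree.ElementTree` instead. The XML element and attribute names, the host name, and the ClassAd attribute names are all hypothetical; the real JIM schema is not shown in the slides.

```python
import xml.etree.ElementTree as ET

# Hypothetical site configuration (not the real JIM schema).
SITE_XML = """
<site name="uwisc">
  <cluster name="condor-pool" batch_system="condor">
    <cpus>1000</cpus>
    <gatekeeper>gw.example.edu</gatekeeper>
  </cluster>
</site>
"""


def classad_value(v: str) -> str:
    """Render integers bare and everything else as an escaped, quoted string."""
    if v.isdigit():
        return v
    return '"' + v.replace("\\", "\\\\").replace('"', '\\"') + '"'


def to_classad(xml_text: str) -> str:
    """Map the site configuration onto a new-style ClassAd: [ a = b; c = d ]."""
    root = ET.fromstring(xml_text.strip())
    cluster = root.find("cluster")
    attrs = {
        "SiteName": root.get("name"),
        "ClusterName": cluster.get("name"),
        "BatchSystem": cluster.get("batch_system"),
        "TotalCpus": cluster.findtext("cpus"),
        "Gatekeeper": cluster.findtext("gatekeeper"),
    }
    # Skip attributes missing from the configuration.
    lines = [f"{k} = {classad_value(v)}" for k, v in attrs.items() if v is not None]
    return "[\n  " + ";\n  ".join(lines) + "\n]"
```

Keeping one XML source of truth and deriving each product (ClassAd, LDIF, service XML) by a separate transformation is the design the slide describes; here only the ClassAd branch is sketched.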


Running jobs on Grid resources
- The trend: Grid resources are not dedicated to a single experiment
- Translation:
  - no daemons running on the worker nodes of a batch system
  - no experiment-specific software installed

Running jobs on Grid resources
- The situation today is in transition:
  - worker nodes typically access the software via a shared file system: not scalable!
  - generally, experiments can install specific services on a node close to the cluster
  - local resource configurations are still too diverse to plug easily into the Grid

The JIM local sandbox management
- It keeps the job executable (received from the Grid) at the head node and knows where its product dependencies are
- It transports and installs the software on the worker node
- It can instantiate services on the worker node
- It sets up the environment for the job to run
- It packages the output and hands it back to the Grid, so that it becomes available for download at the submission site
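The sandbox steps above map naturally onto a pack, unpack, set-environment, pack-output cycle. This is a minimal Python sketch using the standard `tarfile` module, assuming the dependencies are plain files and that prepending the sandbox directory to `PATH` and `LD_LIBRARY_PATH` is enough for the job to find them; the function names are hypothetical, not JIM's interface.

```python
import os
import tarfile
from pathlib import Path
from typing import Dict, List


def pack_sandbox(files: List[Path], archive: Path) -> None:
    """Head node: bundle the executable and its product dependencies."""
    with tarfile.open(archive, "w:gz") as tar:
        for f in files:
            tar.add(f, arcname=f.name)


def unpack_sandbox(archive: Path, workdir: Path) -> None:
    """Worker node: install the shipped software into a scratch directory."""
    # Use the safe "data" extraction filter where this Python supports it.
    kwargs = {"filter": "data"} if hasattr(tarfile, "data_filter") else {}
    with tarfile.open(archive, "r:gz") as tar:
        tar.extractall(workdir, **kwargs)


def job_environment(workdir: Path) -> Dict[str, str]:
    """Copy the current environment and put the sandbox first on the search paths."""
    env = dict(os.environ)
    env["PATH"] = f"{workdir}{os.pathsep}{env.get('PATH', '')}"
    env["LD_LIBRARY_PATH"] = f"{workdir}{os.pathsep}{env.get('LD_LIBRARY_PATH', '')}"
    return env


def pack_output(outputs: List[Path], archive: Path) -> None:
    """Worker node: package job products for return to the submission site."""
    pack_sandbox(outputs, archive)
```

The returned environment would be passed to whatever launches the job (for example `subprocess.run(..., env=env)`); shipping everything in one archive avoids relying on a shared file system on the worker nodes.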

Running a DZero application
- We have the JIM sandbox: so where is the problem now?
- The JIM sandbox could immediately use the DZero Run Time Environment (RTE), but:
  - not all DZero packages are RTE compliant
  - users don't have the experience or the incentive to use it today


Running Monte Carlo at UWisc
- The University of Wisconsin offered DZero the opportunity to use a 1000-node non-dedicated Condor cluster
- We are concentrating on putting it to use for MC production with mc_runjob (in production by year end)

The challenges I
- The MC code is not RTE compliant today
- It is a chain of 3-5 stages; each binary is 50-200 MB and dynamically linked
- The binaries are compiled from 40 packages (out of 621 in total for D0), and these packages are needed at run time for RPC files
- Root, Motif, X11, and Ace libraries show up as dependencies (for the MC generators)
- MC tarballs exist, but are hand-crafted (and bug-prone) every time
- Unpacked size: 2 GB (versus 12-15 GB for the full D0 application tree)
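Shipping only the packages an MC binary needs (40 here, rather than the full 621-package tree) amounts to computing the transitive closure of the package dependency graph, which could replace hand-crafting the tarball contents. A hypothetical Python sketch: the package names are invented, and a machine-readable dependency map is assumed to be available.

```python
from collections import deque
from typing import Dict, Iterable, List, Set


def runtime_closure(roots: Iterable[str], deps: Dict[str, List[str]]) -> Set[str]:
    """Return every package reachable from `roots` via the dependency map (BFS)."""
    seen: Set[str] = set()
    queue = deque(roots)
    while queue:
        pkg = queue.popleft()
        if pkg in seen:
            continue  # already visited; also protects against dependency cycles
        seen.add(pkg)
        queue.extend(d for d in deps.get(pkg, []) if d not in seen)
    return seen
```

For a dependency map where `mc_sim` needs `geometry` and `root_io`, and `geometry` needs `rcp_utils`, the closure of `["mc_sim"]` is exactly those four packages; anything reachable only from other applications (such as a reconstruction package) is left out of the tarball.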

The challenges II
- Almost every advanced C feature, every libc library call, and every system call is used
- One can get different results on two RedHat 7.2 systems
- Building the full release tree takes up to 20 hours: not something easy to do dynamically at a remote site

Summary
- The SAM-Grid offers an extensible working framework for Grid-level job, data, and information management
- JIM provides fabric-level management tools for sandboxing
- The applications need to be improved to run on Grid resources