The Best Ways to Design a Quality Scorecard, Martin Jukes



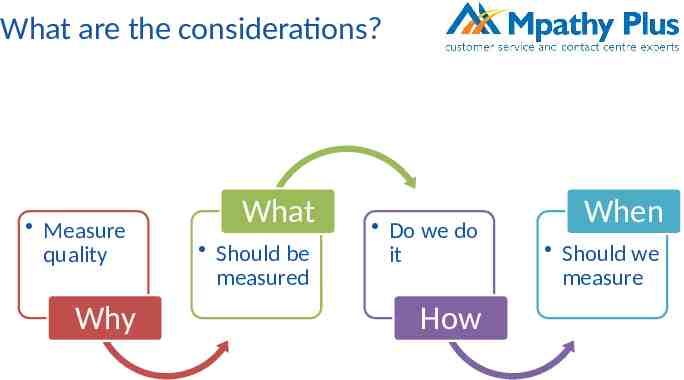

What are the considerations? Measure quality: Why do we do it? What should be measured? How should we measure? When should we measure?

Why have a Quality Scorecard? To measure Advisor performance; to ensure we are compliant with regulations; to report as a KPI; to change Advisor behaviour; to measure the Customer Experience; to check up on our Agents; to motivate the team.

Extract from Improving Agent Performance. Manage: Measure, Share, Act. Contacts handled, AHT, RFT, outcomes; communicate performance; intervene and manage; quality and quantity; individual performance; use the data; identify trends; team performance; improvement plans.

Can we download one? Yes: about 239,000,000 results (0.55 seconds).

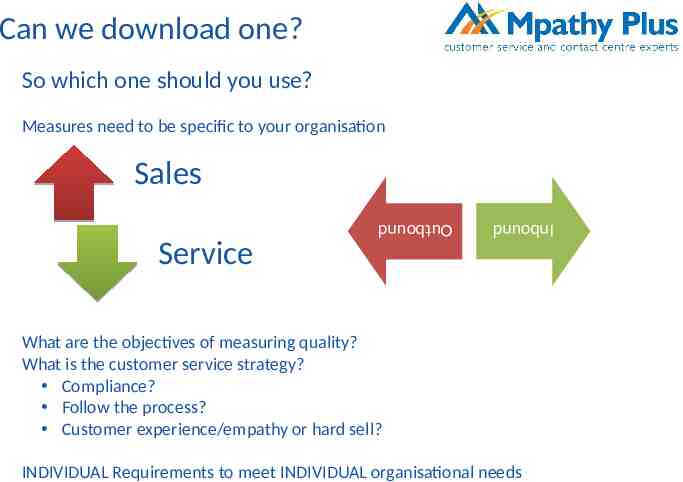

Can we download one? So which one should you use? Measures need to be specific to your organisation: Sales, Inbound, Outbound, Service. What are the objectives of measuring quality? What is the customer service strategy? Compliance? Follow the process? Customer experience/empathy or hard sell? INDIVIDUAL requirements to meet INDIVIDUAL organisational needs.

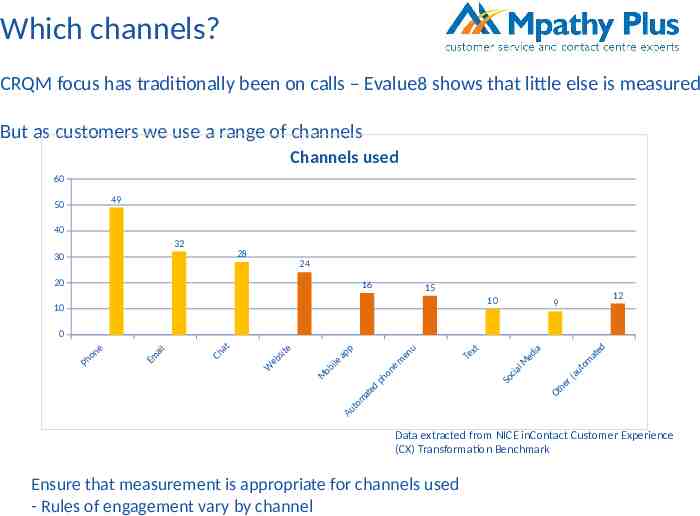

Which channels? CRQM focus has traditionally been on calls – Evalue8 shows that little else is measured. But as customers we use a range of channels. [Chart: Channels used. Categories: Phone, Email, Chat, Website, Mobile app, Automated phone menu, Text, Social media, Other automated. Values shown: 49, 32, 28, 24, 16, 15, 10, 12, 9.] Data extracted from NICE inContact Customer Experience (CX) Transformation Benchmark. Ensure that measurement is appropriate for the channels used: rules of engagement vary by channel.

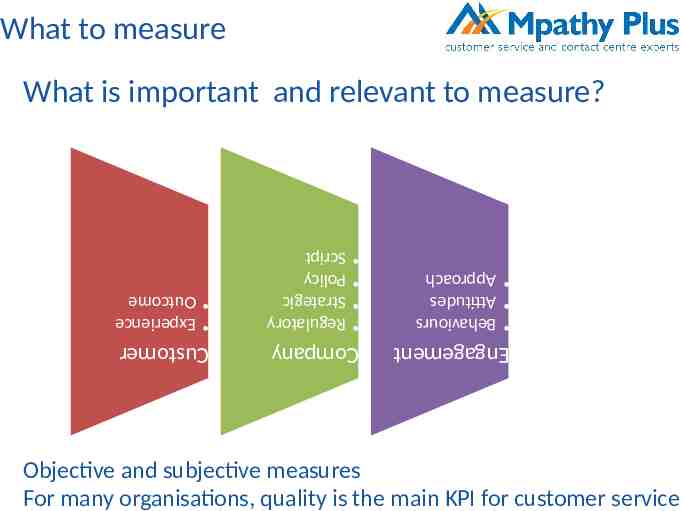

What to measure. What is important and relevant to measure? Regulatory, Strategic, Policy, Script, Behaviours, Attitudes, Approach, Company, Engagement, Experience, Outcome, Customer. Objective and subjective measures. For many organisations, quality is the main KPI for customer service.

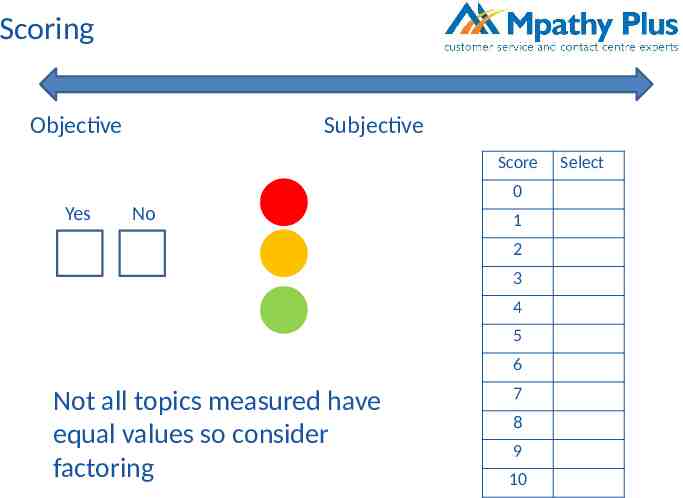

Scoring. Objective measures: Yes or No. Subjective measures: a score from 0 to 10. Not all topics measured have equal value, so consider factoring.
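Factoring can be illustrated with a small sketch. This is only one possible approach, assuming each question carries a hypothetical `factor` (weight) and that Yes/No answers are scored 1 or 0; the question names and factor values are invented for illustration and are not from the presentation.

```python
from dataclasses import dataclass


@dataclass
class Question:
    name: str         # hypothetical question label
    max_score: int    # 1 for a Yes/No question, 10 for a 0-10 scale
    factor: float     # assumed weighting; higher means more important


def weighted_percentage(questions, scores):
    """Return the factored score as a percentage of the maximum possible."""
    # Normalise each score to 0..1, then apply the question's factor.
    earned = sum(q.factor * scores[q.name] / q.max_score for q in questions)
    possible = sum(q.factor for q in questions)
    return 100 * earned / possible


# Illustrative questions and factors, not taken from any real scorecard.
questions = [
    Question("compliance_statement", max_score=1, factor=3.0),  # Yes/No
    Question("empathy", max_score=10, factor=2.0),              # 0-10
    Question("greeting", max_score=1, factor=1.0),              # Yes/No
]
scores = {"compliance_statement": 1, "empathy": 7, "greeting": 0}

print(round(weighted_percentage(questions, scores), 1))  # prints 73.3
```

Normalising each score before applying the factor lets Yes/No and 0 to 10 questions sit on the same scorecard without the larger scale dominating the total.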

Not applicable/Not appropriate. Not every measure is applicable or appropriate for every contact. N/A is a valid entry in some cases, so the score options are 0 to 5 or N/A. Mistakes observed: inappropriate rules; irrelevant questions. A good contact should be relevant and personalised to that customer even if it does not tick every box. Don’t create a scorecard that kills empowerment!
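One way to treat N/A as a valid entry is to leave it out of both the total and the maximum, so the Advisor is not penalised for a measure that did not apply. The sketch below assumes a 0 to 5 scale and uses `None` to represent N/A; the function and question names are hypothetical.

```python
from typing import Dict, Optional

MAX_SCORE = 5  # assumed top of the 0-5 scale


def contact_score(scores: Dict[str, Optional[int]]) -> Optional[float]:
    """Percentage score for one contact, ignoring N/A (None) entries."""
    applicable = [s for s in scores.values() if s is not None]
    if not applicable:
        return None  # nothing applied, so there is no score to report
    return 100 * sum(applicable) / (MAX_SCORE * len(applicable))


# Illustrative contact: the upsell question did not apply to this customer.
contact = {"greeting": 5, "upsell_offer": None, "resolution": 4}

print(contact_score(contact))  # prints 90.0
```

Had the N/A entry been recorded as 0 instead, the same contact would have scored 60%, which is the kind of distortion an N/A option avoids.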

Usability. Very easy to complicate. Manual or System; Recordings or Live monitoring. Ensure it is easy to record and share. An example of over-complication: Q5 has a factor of 3.5 and a score range of 1 – 10, with a number of different ‘must have’ phrases that must be used in the same sequence or the score reduces by 35%, etc. Did it feel right?

Using the data: feedback in one to ones; identifying training needs; review of overall performance. Present positive and negative findings.

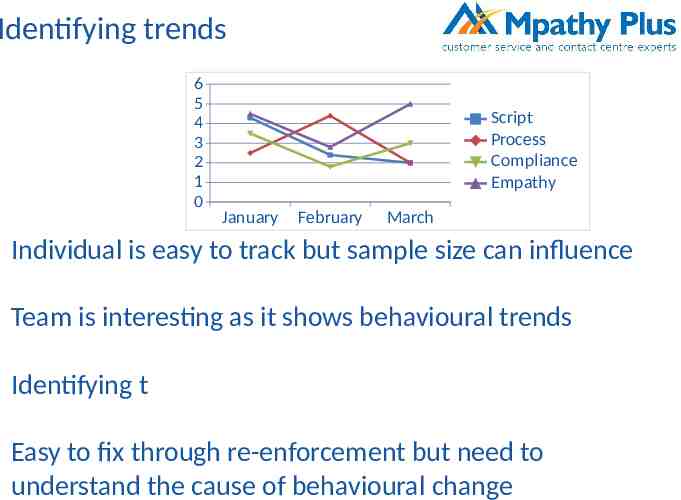

Identifying trends. [Chart: scores on a 0 to 6 axis for Script, Process, Compliance and Empathy across January, February and March.] Individual performance is easy to track, but sample size can influence the result. Team performance is interesting as it shows behavioural trends. Trends are easy to fix through reinforcement, but you need to understand the cause of the behavioural change.
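A trend view like this can be sketched by averaging scores per month and category while keeping the sample size alongside each average, since a small sample can skew the picture. The data below is invented for illustration and the function name is hypothetical.

```python
from collections import defaultdict
from statistics import mean


def monthly_averages(evaluations):
    """Group (month, category, score) tuples and return
    {(month, category): (average score, sample size)}."""
    grouped = defaultdict(list)
    for month, category, score in evaluations:
        grouped[(month, category)].append(score)
    return {key: (mean(vals), len(vals)) for key, vals in grouped.items()}


# Illustrative evaluations only; not data from the presentation.
evaluations = [
    ("January", "Empathy", 4),
    ("January", "Empathy", 5),
    ("February", "Empathy", 3),
    ("January", "Compliance", 5),
    ("February", "Compliance", 5),
    ("February", "Compliance", 4),
]

averages = monthly_averages(evaluations)
for (month, category), (avg, n) in averages.items():
    print(f"{month} {category}: average {avg} from {n} evaluation(s)")
```

Reporting the count next to each average makes it visible when a drop, such as Empathy in February here, rests on a single evaluation.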

How much? How often? Sufficient to enable performance and quality management, but not so much that it becomes onerous! It depends on a number of factors: Measures, Services, Scale, Channels, Purpose. This can increase or decrease in size, but we would recommend a monthly measure for all of the team, to align with one to ones.

Implementing Quality Monitoring: 1. Communicate the purpose and the benefits. 2. Share the model and the questions. 3. Involve Advisors in developing the scorecard and the process.

Summary. Think about your specific requirements. Measure quality across all channels, but modify the measures for each channel. Ensure flexibility and have ‘not applicable’ as an option. One size does not fit all. Use the data.