LEARNING SEMANTICS OF WORDS AND PICTURES TEJASWI DEVARAPALLI

40 Slides3.89 MB

LEARNING SEMANTICS OF WORDS AND PICTURES TEJASWI DEVARAPALLI

CONTENT INTRODUCTION MODELING IMAGE DATASET STATISTICS HIERARCHICAL MODEL TESTING AND USING BASIC MODEL AUTO ILLUSTRATION AUTO ANNOTATION RESULTS DISCUSSIONS

SEMANTICS LANGUAGE USES A SYSTEM OF LINGUISTIC SIGNS, EACH OF WHICH IS A COMBINATION OF MEANING AND PHONOLOGICAL AND/OR ORTHOGRAPHIC FORMS. SEMANTICS IS TRADITIONALLY DEFINED AS THE STUDY OF MEANING IN LANGUAGE.

ABSTRACT A STATISTICAL MODEL FOR ORGANIZING IMAGE COLLECTIONS. INTEGRATES SEMANTIC INFORMATION PROVIDED BY ASSOCIATED TEXT AND VISUAL INFORMATION PROVIDED BY IMAGE FEATURES. PROMISING MODEL FOR INFORMATION RETRIEVAL TASKS LIKE DATABASE BROWSING, SEARCHING FOR IMAGES. USED FOR NOVEL APPLICATIONS.

INTRODUCTION METHOD FOR ORGANIZING IMAGE DATABASES. INTEGRATES TWO KINDS OF INFORMATION DURING MODEL CONSTRUCTION. LEARNS LINKS BETWEEN IMAGE FEATURES AND SEMANTICS. LEARNINGS USEFUL IN BETTER BROWSING BETTER SEARCH NOVEL APPLICATIONS

INTRODUCTION(CONTINUED) MODELS STATISTICS ABOUT OCCURRENCE AND CO-OCCURRENCE OF WORD AND FEATURES. HIERARCHICAL STRUCTURE. GENERATIVE MODEL, IMPLICITLY CONTAINS PROCESSES FOR PREDICTING IMAGE COMPONENTS WORDS AND FEATURES

COMPARISON THIS MODEL SUPPORTS BROWSING FOR THE IMAGE RETRIEVAL PURPOSES SYSTEMS FOR SEARCHING IMAGE DATABASES INCLUDES SEARCH BY QUERY. TEXT IMAGE FEATURE SIMILARITY SEGMENT FEATURES IMAGE SKETCH

MODELING IMAGE DATASET STATISTICS GENERATIVE HIERARCHICAL MODEL COMBINATION OF ASYMMETRIC CLUSTERING MODEL (MAPS DOCUMENTS INTO CLUSTERS) SYMMETRIC CLUSTERING MODEL(MODELS JOINT DISTRIBUTION OF DOCUMENTS AND FEATURES). DATA MODELED AS FIXED HIERARCHY OF NODES. NODES GENERATE WORD IMAGE SEGMENT

ILLUSTRATION DOCUMENTS MODELED AS SEQUENCE OF WORDS AND SEQUENCE OF SEGMENTS USING BLOBWORLD REPRESENTATION. "BLOBWORLD" REPRESENTATION IS CREATED BY CLUSTERING PIXELS IN A JOINT COLOR-TEXTURE-POSITION FEATURE SPACE. THE DOCUMENT IS MODELED BY SUM OVER THE CLUSTERS, TAKING ALL CLUSTERS INTO CONSIDERATION.

HIERARCHICAL MODEL EACH NODE HAS A PROBABILITY OF GENERATING A WORD/ IMAGE W.R.T THE DOCUMENT UNDER CONSIDERATION. CLUSTER DEFINES THE PATH. CLUSTER, LEVEL IDENTIFIES THE NODE. Higher level nodes emit more general words and blobs. (e. g . sky) Moderately general words and blobs. (e. g . Sun,sea) Lower level nodes emit more specific words and blobs. (e. g . Waves) Sun Sky Sea Waves

Mathematical Process for generating set of observations ‘D’ associated with a document ‘d’ is described by C – clusters, i – items, l – levels.

GAUSSIAN DISTRIBUTIONS NUMBER OF FEATURES LIKE ASPECTS OF SIZE, POSITION, COLOR, TEXTURE AND SHAPE ALL TOGETHER FORM FEATURE VECTOR ‘X’. PROBABILITY DISTRIBUTION OVER IMAGE SEGMENTS BY USUAL FORMULA:-

MODELING IMAGE DATASET STATISTICS THIS MODEL USES HIERARCHICAL MODEL AS IT BEST SUPPORTS BROWSING OF LARGE COLLECTIONS OF IMAGES COMPACT REPRESENTATION PROVIDES IMPLEMENTATION DETAILS FOR AVOIDING OVER TRAINING. THE TRAINING PROCEDURE CLUSTERS A FEW THOUSAND IMAGES IN A FEW HOURS ON A STATE OF THE ART PC.

MODELING IMAGE DATASET STATISTICS RESOURCE REQUIREMENTS LIKE “MEMORY” INCREASE RAPIDLY WITH NO.OF IMAGES. SO WE NEED EXTRA CARE. THERE ARE DIFFERENT APPROACHES FOR AVOIDING OVER-TRAINING AND RESOURCE USAGE.

FIRST APPROACH WE TRAIN ON RANDOMLY SELECTED SUBSET OF IMAGES UNTIL LOG LIKELYHOOD FOR HELD OUT DATA, RANDOMLY SELECTED FROM REMAINING DATA BEGINS TO DROP. THE MODEL SO FOUND IS USED AS A STARTING POINT FOR NEXT TRAINING ROUND USING SECOND RANDOM SET OF IMAGES.

SECOND APPROACH SECOND METHOD FOR REDUCING RESOURCE USAGE IS TO LIMIT CLUSTER MEMBERSHIP. FIRST COMPUTE APPROXIMATE CLUSTERING BY TRAINING ON A SUBSET. THEN CLUSTER ON ENTIRE DATASET, MAINTAIN PROBABILITY THAT A POINT IS IN A CLUSTER FOR TOP TWENTY CLUSTERS. REST OF THE MEMBERSHIP PROBABILITIES ASSUMED TO BE ZERO FOR NEXT FEW ITERATIONS.

TESTING AND USING BASIC MODEL METHOD STABILITY IS TESTED BY RUNNING FITTING PROCESS. FITTING PROCESS IS RUN ON SAME DATA SEVERAL TIMES WITH DIFFERENT INITIAL CONDITIONS AS EXPECTATION MAXIMIZATION(EM) PROCESS IS SENSITIVE TO THE STARTING POINT. THE CLUSTERING POINT DEPENDS MORE ON STARTING POINT THAN ON EXACT IMAGES CHOSEN FOR TRAINING. THE SECOND TEST IS TO VERIFY WHETHER CLUSTERING ON BOTH IMAGE AND TEXT HAS ADVANTAGE OR NOT.

TESTING AND USING THE BASIC MODEL THIS FIGURE SHOWS 16 IMAGES FROM A CLUSTER FOUND USING TEXT ONLY

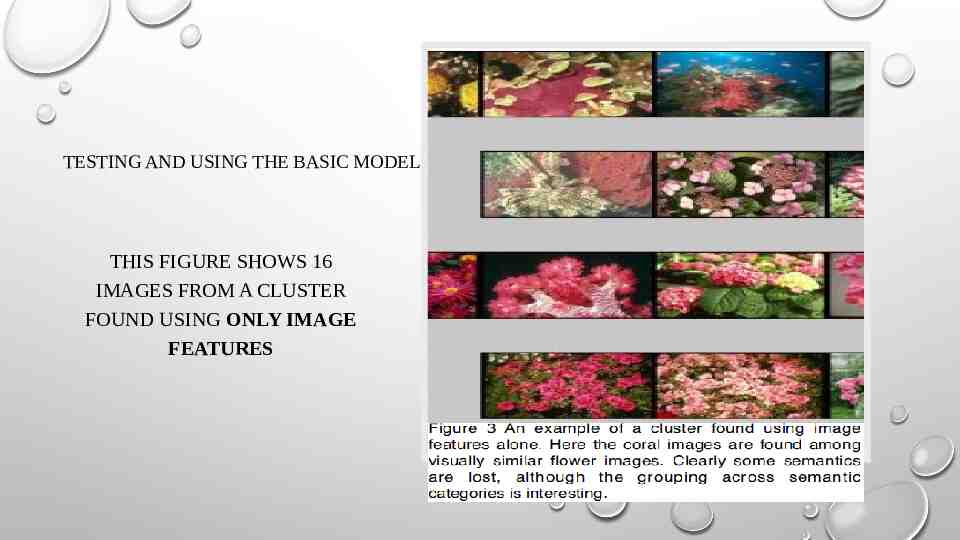

TESTING AND USING THE BASIC MODEL THIS FIGURE SHOWS 16 IMAGES FROM A CLUSTER FOUND USING ONLY IMAGE FEATURES

TESTING AND USING THE BASIC MODEL

BROWSING MOST IMAGE RETRIEVAL SYSTEMS DO NOT SUPPORT BROWSING. THEY FORCE USER TO SPECIFY A QUERY. THE ISSUE IS WHETHER THE CLUSTERS FOUND THROUGH BROWSING MAKE SENSE TO THE USER. IF THE USER FINDS THE CLUSTERS COHERENT THEN THEY CAN BEGIN TO INTERNALIZE THE KIND OF STRUCTURE THEY REPRESENT.

BROWSING USER STUDY GENERATE 64 CLUSTERS FOR 3000 CLUSTERS. GENERATE 64 RANDOM CLUSTERS FROM THE SAME IMAGES. PRESENT RANDOM CLUSTER TO USER, ASK TO RATE COHERENCE(YES/NO). 94% ACCURACY

IMAGE SEARCH SUPPLY A COMBINATION OF TEXT AND IMAGE FEATURES. APPROACH : COMPUTE FOR EACH CANDIDATE IMAGE, THE PROBABILITY OF EMITTING THE QUERY ITEMS. Q SET OF QUERY ITEMS D CANDIDATE DOCUMENT.

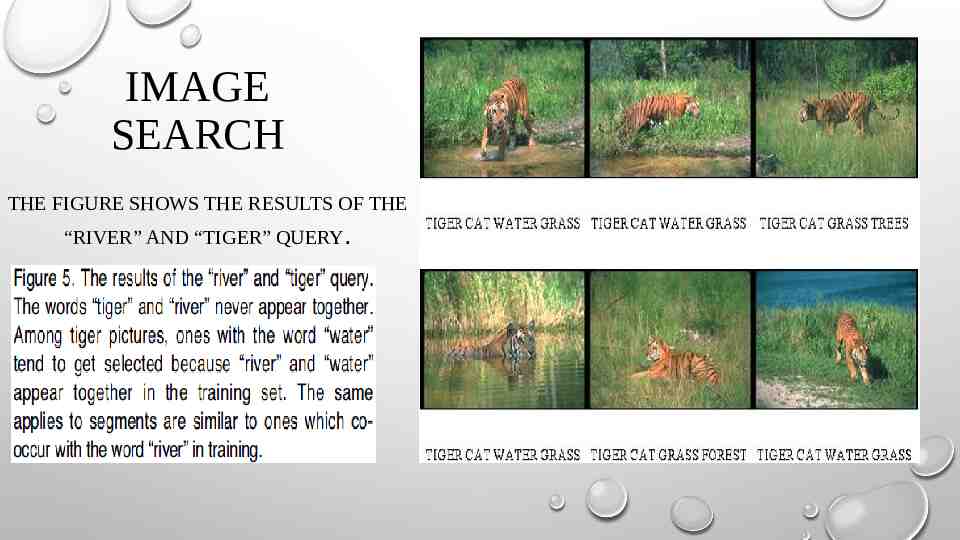

IMAGE SEARCH THE FIGURE SHOWS THE RESULTS OF THE “RIVER” AND “TIGER” QUERY.

IMAGE SEARCH SECOND APPROACH FINDING THE PROBABILITY THAT EACH CLUSTER GENERATES A QUERY AND THEN SAMPLE ACCORDING TO WEIGHTED CLUSTERS. CLUSTER MEMBERSHIP PLAYS IMPORTANT ROLE IN GENERATING DOCUMENTS, WE CAN SAY CLUSTERS ARE COHERENT.

IMAGE SEARCH PROVIDING MORE FLEXIBLE METHOD OF SPECIFYING IMAGE FEATURES IS AN IMPORTANT NEXT STEP. THIS IS AS EXPLORED IN MANY “QUERY BY EXAMPLE” IMAGE RETRIEVAL SYSTEMS. EXAMPLE :WE CAN QUERY FOR A DOG WITH WORD DOG AND IF WE WANT BLUE SKY THEN WE CAN GET IT BY ADDING IMAGE SEGMENT FEATURE TO THE QUERY.

PICTURES FROM WORDS AND WORDS FROM PICTURES THERE ARE TWO TYPES OF APPROACHES FOR LINKING WORDS TO PICTURES AND PICTURES TO WORDS. AUTO ILLUSTRATION AUTO ANNOTATION

AUTO ILLUSTRATION “AUTO ILLUSTRATION” – THE PROCESS OF LINKING PICTURES TO WORDS. GIVEN A SET OF QUERY ITEMS, Q AND A CANDIDATE DOCUMENT D, WE CAN EXPRESS THE PROBABILITY THAT A DOCUMENT PRODUCES THE QUERY BY:

AUTO ANNOTATION GENERATE WORDS FOR A GIVEN IMAGE CONSIDER THE PROBABILITY OF THE IMAGE BELONGING TO THE CURRENT CLUSTER. CONSIDER THE PROBABILITY OF THE ITEMS IN THE IMAGE BEING GENERATED BY THE NODES AT VARIOUS LEVELS IN THE PATH ASSOCIATED TO THE CLUSTER. WORK THE ABOVE OUT FOR ALL CLUSTERS.

AUTO ANNOTATION WE ARE COMPUTING THE PROBABILITY THAT AN IMAGE EMITS A PROPOSED WORD, GIVEN THE OBSERVED SEGMENTS, B:

AUTO ANNOTATION THE FIGURE SHOWS SOME ANNOTATION RESULTS SHOWING THE ORIGINAL IMAGE, THE BLOBWORLD SEGMENTATION, THE COREL KEYWORDS, AND THE PREDICTED WORDS IN RANK ORDER.

AUTO ANNOTATION THE TEST IMAGES WERE NOT IN THE TRAINING SET, BUT THEY COME FROM SAME SET OF CD’S USED FOR TRAINING. THE KEYWORDS IN UPPER-CASE ARE IN THE VOCABULARY.

AUTO ANNOTATION TESTING THE ANNOTATION PROCEDURE: WE USE THE MODEL TO PREDICT THE IMAGE WORDS BASED ONLY ON THE SEGMENTS, THEN COMPARE THE WORDS WITH SEGMENTS. PERFORM TEST ON TRAINING DATA AND TWO DIFFERENT TEST SETS. THEY ARE 1ST SET - RANDOMLY SELECTED HELD OUT SET FROM PROPOSED TRAINING DATA COMING FROM COREL CD’S. 2ND SET - IMAGES FROM OTHER CD’S

AUTO ANNOTATION QUANTITATIVE PERFORMANCE USE 160 COREL CD’S , EACH WITH 100 IMAGES(GROUPED BY THEME) SELECT 80 OF THE CDS, SPLIT INTO TRAINING (75%) AND TEST (25%). REMAINING 80 CDS ARE A ‘HARDER’ TEST SET. MODEL SCORING: N NUMBER OF WORDS FOR THE IMAGE , R NUMBER OF WORDS RECTLY.

RESULTS ANNOTATION RESULTS ON THREE KINDS OF TEST DATA, WITH THREE DIFFERENT SCORING METHODS.

RESULTS THE ABOVE TABLE SUMMARIZES THE ANNOTATION RESULT USING THE THREE SCORING METHODS AND THE THREE HELD OUT SETS. WE AVERAGE THE RESULTS OF 5 SEPARATE RUNS WITH DIFFERENT HELD OUT SETS. USING THE COMPARISON OF SAMPLING FROM THE WORD PRIOR , WE SCORE 3.14 ON THE TRAINING DATA, 2.70 ON NON-TRAINING DATA FROM THE SAME CD SET AS THE TRAINING DATA AND 1.65 FOR TEST DATA TAKEN FROM COMPLETELY DIFFERENT SET OF CD’S.

DISCUSSION PERFORMANCE OF THE SYSTEM CAN BE MEASURED BY TAKING ADVANTAGE OF ITS PREDICTIVE CAPABILITIES. WORDS WITH NO RELEVANCE TO VISUAL CONTENT CAUSE RANDOM NOISE, BY TAKING AWAY PROBABILITY FROM MORE RELEVANT WORDS. SUCH WORDS CAN BE REMOVED BY OBSERVING THEIR EMISSION PROBABILITIES ARE SPREAD OUT OVER THE NODES. THIS IS AUTOMATIC IMAGE REDUCTION METHOD WORKS DEPENDING ON THE NATURE OF THE DATA SET.

REFERENCES LEARNING SEMANTICS OF WORDS AND PICTURES BY KOBUS BARNARD AND DAVID FORSYTH, COMPUTER DIVISION, UNIVERSITY OF CALIFORNIA, BERKELEY HTTP://WWW.WISDOM.WEIZMANN.AC.IL/ VISION/COURSES/2003 2/BARNARD00LEARNING.PDF C.CARSON, S.BELONGE, H. GREENSPAN AND J.MALIK, “BLOBWORLD: IMAGE SEGMENTATION USING EXPECTATION MAXIMIZATION AND ITS APPLICATION TO IMAGE QUERYING”, IN REVIEW. HTTP://WWW.CS.BERKELEY.EDU/ MALIK/PAPERS/CBGM-BLOBWORLD.PDF

QUERIES

THANK YOU