“Human” error


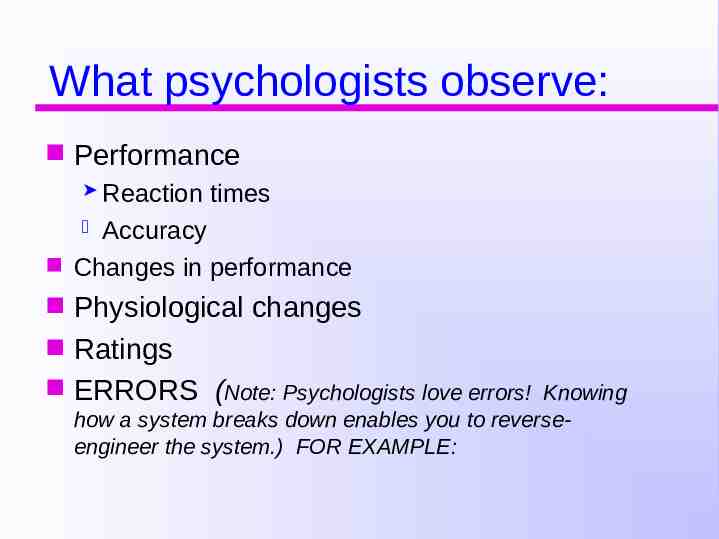

What psychologists observe:
- Performance: reaction times, accuracy
- Changes in performance
- Physiological changes
- Ratings
- ERRORS

(Note: Psychologists love errors! Knowing how a system breaks down enables you to reverse-engineer the system.) For example:

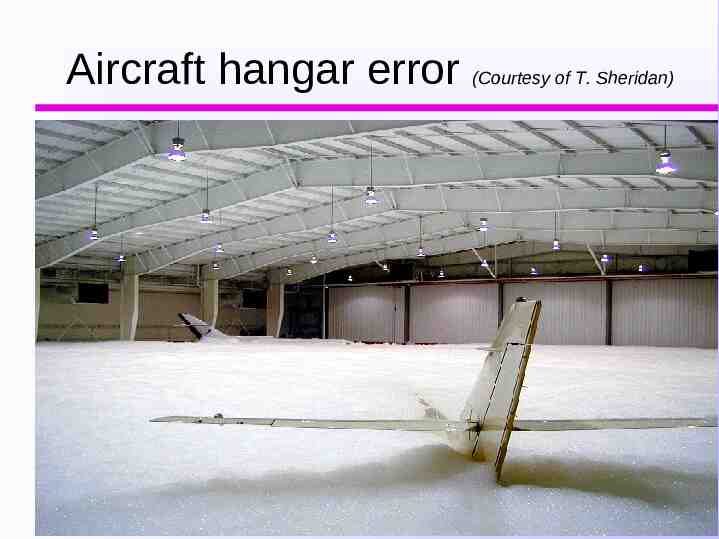

Aircraft hangar error (Courtesy of T. Sheridan)

What causes errors?
- Lack of knowledge
- Ambiguity
- Distraction, interference, similarity
- Physiological factors (stress, exhaustion)

(This is far from a complete taxonomy!)

Error messages in HCI (Unix):
- SEGMENTATION VIOLATION!
- Error #13: ATTEMPT TO WRITE INTO READ-ONLY MEMORY!
- Error #4: NOT A TYPEWRITER

Ambiguity in communication: a command shortcut in the manual reads Control+. Does this mean press Control and, while holding it, tap the plus sign key? Or press Control and then tap a period? (Example from Jef Raskin's book, The Human Interface.)

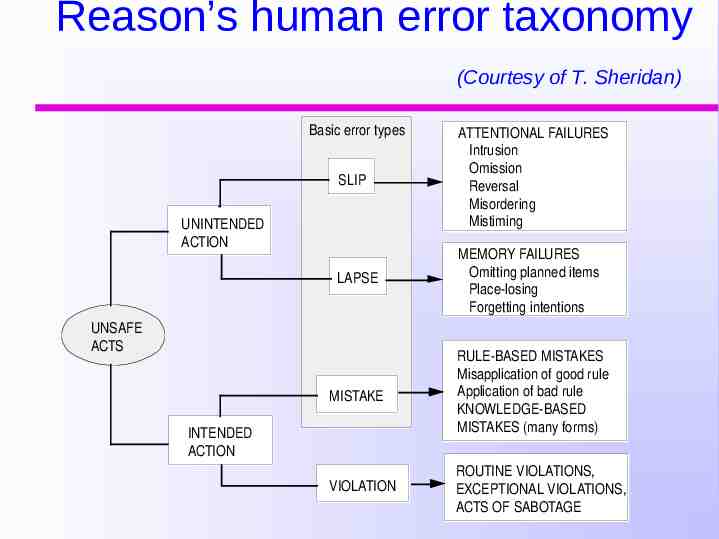

Reason’s human error taxonomy (Courtesy of T. Sheridan). Unsafe acts divide into unintended and intended actions, giving four basic error types:
- Unintended action
  - SLIP (attentional failures): intrusion, omission, reversal, misordering, mistiming
  - LAPSE (memory failures): omitting planned items, place-losing, forgetting intentions
- Intended action
  - MISTAKE: rule-based mistakes (misapplication of a good rule, application of a bad rule); knowledge-based mistakes (many forms)
  - VIOLATION: routine violations, exceptional violations, acts of sabotage

Taxonomies of errors (Hollnagel, 1993):
- It is important to distinguish between the manifestation of an error (how it appears) and the cause of an error (why it happened).
- Hollnagel argues that errors may be hard to classify (slip vs. lapse vs. mistake); classifying them requires “an astute combination of observation and inference.”
- Temporal errors alone can be of many types (premature start, delayed start, premature finish, delayed finish, etc.).
- He also distinguishes “person-related” from “system-related” errors (but this seems to assign blame!).

Norman’s taxonomy of slips (Ch. 5):
- Capture errors
- Description errors
- Data-driven errors
- Associative activation errors
- Loss-of-activation errors
- Mode errors

The importance of feedback: a slip can be detected only if there’s feedback! But even feedback is no guarantee if the user can’t associate it with the action that caused it.

Modes: the very same command is interpreted differently in one context than in another (the system has more functions than the user has gestures). So the user must specify the context for a gesture or action and keep track of it.

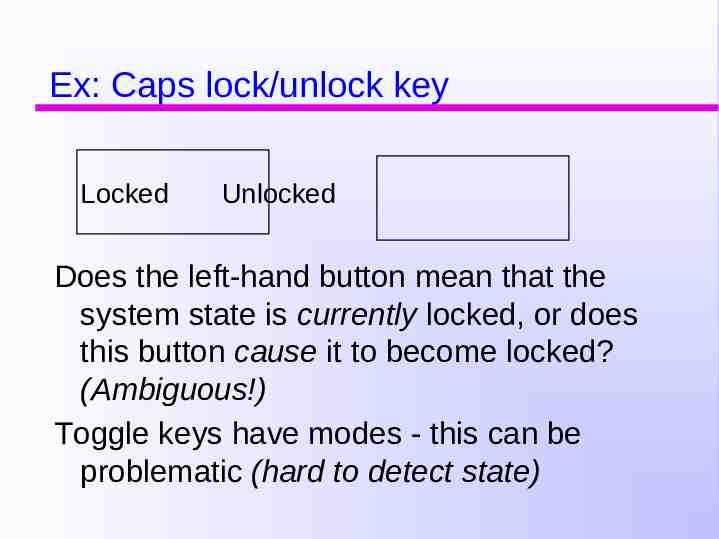

Example: Caps lock/unlock key, with buttons labeled “Locked” and “Unlocked”. Does the left-hand button mean that the system is currently locked, or does pressing it cause the system to become locked? (Ambiguous!) Toggle keys have modes, which can be problematic because their current state is hard to detect.

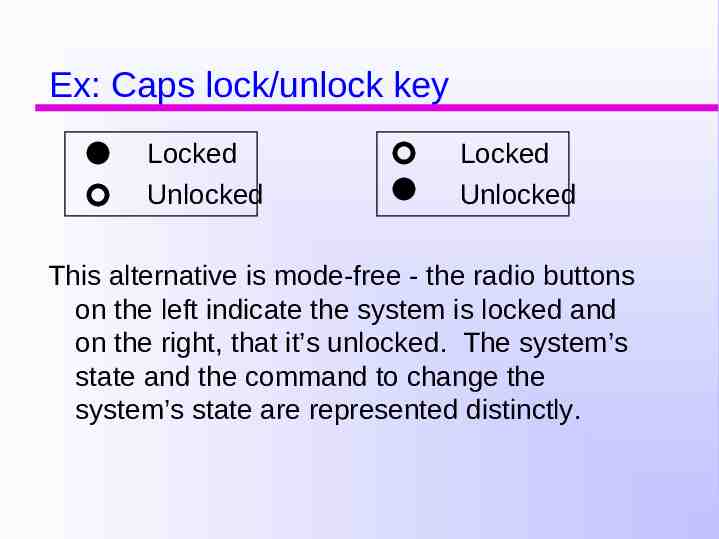

Example: Caps lock/unlock key, redesigned with radio buttons labeled “Locked” and “Unlocked”. This alternative is mode-free: the radio buttons on the left indicate that the system is locked, and those on the right, that it’s unlocked. The system’s state and the command to change the system’s state are represented distinctly.
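The difference between the two designs can be sketched in code. In this minimal Python sketch (the `CapsLock` class and its method names are hypothetical, not taken from any real toolkit), `toggle()` behaves like the modal key: what it does depends on a state the user may not be able to see. `set_locked()` behaves like the radio buttons: it means the same thing whatever the current state, and the displayed state is a separate, read-only `locked` property.

```python
class CapsLock:
    """Hypothetical caps-lock model contrasting a toggle with explicit set commands."""

    def __init__(self) -> None:
        self._locked = False

    @property
    def locked(self) -> bool:
        # State display: read-only and separate from the commands that change it.
        return self._locked

    def toggle(self) -> None:
        # Modal command: the result depends on the current, possibly invisible, state.
        self._locked = not self._locked

    def set_locked(self, value: bool) -> None:
        # Mode-free command: "Locked" always locks, "Unlocked" always unlocks.
        self._locked = value


key = CapsLock()
key.toggle()
key.toggle()          # a second press silently reverses the first
print(key.locked)     # False

key.set_locked(True)
key.set_locked(True)  # repeating the explicit command changes nothing
print(key.locked)     # True
```

Pressing the explicit command twice is harmless, while pressing a toggle twice cancels itself out; that asymmetry is what forces the user to track the toggle's state.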

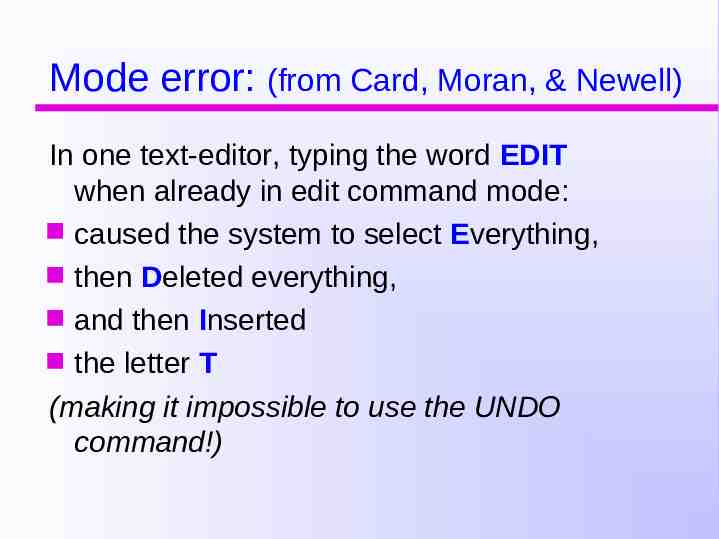

Mode error (from Card, Moran, & Newell): in one text editor, typing the word EDIT while already in edit command mode caused the system to select Everything, then Delete everything, and then Insert the letter T. This made it impossible to use the UNDO command!
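The same keystrokes can be replayed through a toy modal editor to show how the mode changes their meaning. This is a hypothetical Python sketch, not the editor Card, Moran, and Newell describe: it assumes that in command mode `E` selects everything, `D` deletes the selection, and `I` enters insert mode, that every key is inserted as text in insert mode, and that undo reaches back only one edit.

```python
class ToyModalEditor:
    """Hypothetical modal editor. Assumed command-mode keys: E = select Everything,
    D = Delete selection, I = enter Insert mode. Undo is one level deep."""

    def __init__(self, text: str) -> None:
        self.text = text
        self.mode = "command"
        self.selection = (0, 0)    # (start, end) of the selected span
        self.before_last = text    # one-level undo: text before the most recent edit

    def key(self, ch: str) -> None:
        if self.mode == "insert":
            self.before_last = self.text
            self.text += ch                        # in insert mode, every key is text
        elif ch == "E":
            self.selection = (0, len(self.text))   # select Everything
        elif ch == "D":
            start, end = self.selection
            self.before_last = self.text
            self.text = self.text[:start] + self.text[end:]   # Delete the selection
            self.selection = (start, start)
        elif ch == "I":
            self.mode = "insert"                   # switch to Insert mode
        # any other key is ignored in command mode

    def type_keys(self, keys: str) -> None:
        for ch in keys:
            self.key(ch)

    def undo(self) -> None:
        self.text = self.before_last               # only the last edit is reversible


ed = ToyModalEditor("three hours of careful writing")
ed.type_keys("EDIT")   # meant as the word EDIT, read as commands E, D, I, then the text T
print(repr(ed.text))   # 'T'
ed.undo()
print(repr(ed.text))   # '' (undo removes only the T)
```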


Try to prevent errors (Jakob Nielsen’s advice): try to prevent errors from occurring in the first place. Example: inputting information on the Web is a common source of errors for users. How might you make errors less likely?
- Have the user type the input twice (e.g., passwords).
- Ask the user to confirm their input (see example).
- Check what users type to make sure it’s spelled correctly or represents a legitimate choice.
- Avoid typing: have users choose from a menu.
- Observe users and redesign the interface.
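Two of these techniques, typing the input twice and checking for a legitimate choice, can be sketched in a few lines of Python. The choice list and function names are hypothetical; the suggestion step uses the standard library's `difflib.get_close_matches`, so a near-miss yields a "Did you mean ...?" message rather than a bare rejection. Where the list of choices is short, offering a menu avoids the typing altogether.

```python
import difflib
from typing import List, Optional

# Hypothetical list of legitimate choices for one form field.
VALID_STATES = ["California", "Colorado", "Connecticut", "Massachusetts", "New York"]


def check_double_entry(first: str, second: str) -> Optional[str]:
    """Type-it-twice check: return an error message if the entries differ, else None."""
    if first != second:
        return "The two entries don't match. Please type it again."
    return None


def check_choice(value: str, choices: List[str]) -> Optional[str]:
    """Legitimate-choice check: return None if valid, else a message with a suggestion."""
    if value in choices:
        return None
    close = difflib.get_close_matches(value, choices, n=1)
    if close:
        return f"'{value}' isn't on the list. Did you mean '{close[0]}'?"
    options = ", ".join(choices)
    return f"'{value}' isn't on the list. Please choose one of: {options}."


print(check_double_entry("hunter2", "hunter3"))  # The two entries don't match. Please type it again.
print(check_choice("Massachusets", VALID_STATES))  # 'Massachusets' isn't on the list. Did you mean 'Massachusetts'?
```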

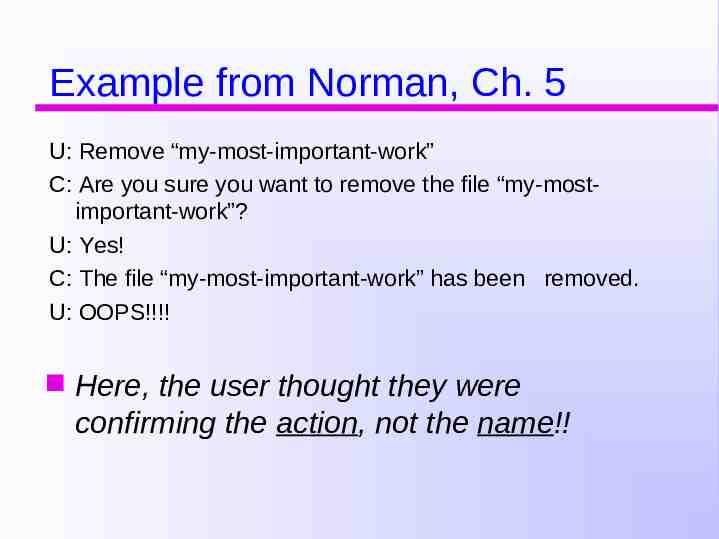

Example from Norman, Ch. 5:
U: Remove “my-most-important-work”
C: Are you sure you want to remove the file “my-most-important-work”?
U: Yes!
C: The file “my-most-important-work” has been removed.
U: OOPS!!!!
Here, the user thought they were confirming the action, not the name!

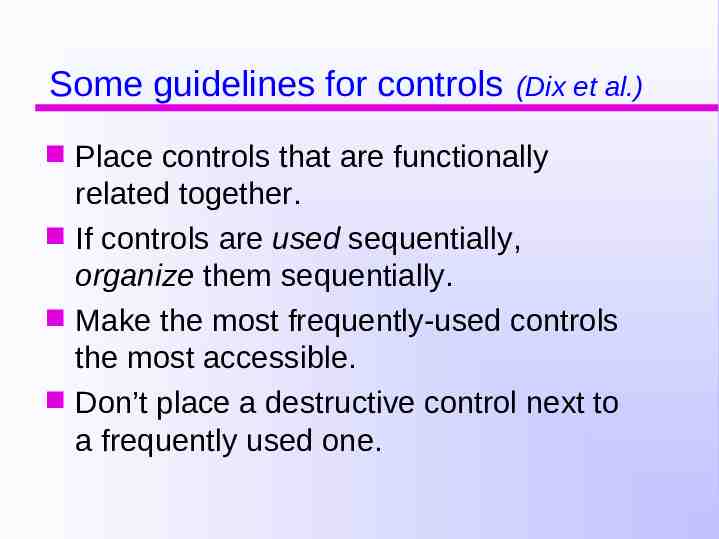

Some guidelines for controls (Dix et al.):
- Place controls that are functionally related together.
- If controls are used sequentially, organize them sequentially.
- Make the most frequently used controls the most accessible.
- Don’t place a destructive control next to a frequently used one.

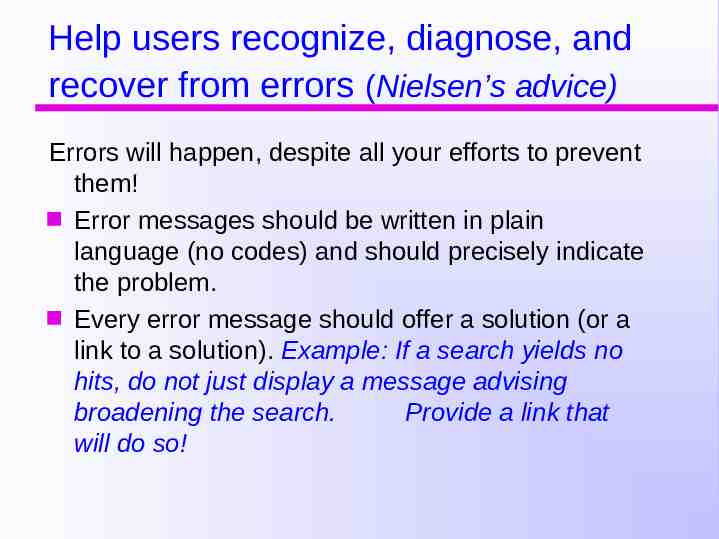

Help users recognize, diagnose, and recover from errors (Nielsen’s advice):
- Errors will happen, despite all your efforts to prevent them!
- Error messages should be written in plain language (no codes) and should precisely indicate the problem.
- Every error message should offer a solution (or a link to a solution).
- Example: if a search yields no hits, don’t just display a message advising the user to broaden the search. Provide a link that will do so!
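Nielsen's search example can be sketched as follows, assuming a tiny in-memory collection and a hypothetical `search` function that requires all terms to match. When that finds nothing, the function runs the broadened any-term search itself, so the interface can offer those results behind a link instead of advising the user to broaden the search.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical document collection.
DOCS = [
    "starry night by van gogh",
    "self-portrait with bandaged ear",
    "water lilies by monet",
]


@dataclass
class SearchResult:
    hits: List[str]
    message: str = ""
    # Results the broadened search would give; the UI can show them behind a link.
    broader_hits: List[str] = field(default_factory=list)


def contains(doc: str, terms: List[str], require_all: bool) -> bool:
    words = doc.split()
    found = [term in words for term in terms]
    return all(found) if require_all else any(found)


def search(terms: List[str], docs: List[str] = DOCS) -> SearchResult:
    hits = [d for d in docs if contains(d, terms, require_all=True)]
    if hits:
        return SearchResult(hits)
    # No hits: run the broadened (any-term) search now instead of advising the user to.
    broader = [d for d in docs if contains(d, terms, require_all=False)]
    if broader:
        return SearchResult(
            hits=[],
            message=f"No items match all of: {' '.join(terms)}. "
                    f"Show the {len(broader)} that match any of them?",
            broader_hits=broader,
        )
    return SearchResult(hits=[], message=f"No items match any of: {' '.join(terms)}.")


result = search(["monet", "gogh"])
print(result.message)  # No items match all of: monet gogh. Show the 2 that match any of them?
```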

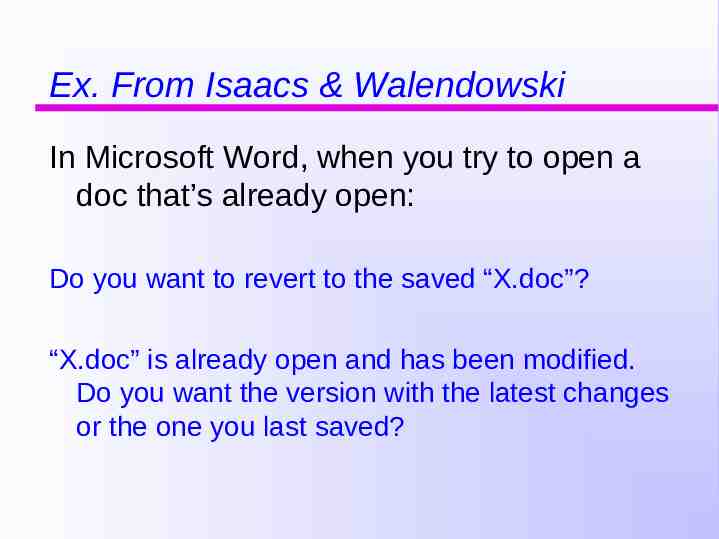


Example from Isaacs & Walendowski: in Microsoft Word, when you try to open a document that’s already open, Word asks: Do you want to revert to the saved “X.doc”? A clearer version: “X.doc” is already open and has been modified. Do you want the version with the latest changes or the one you last saved?
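The improved message is written from the document's actual state. A minimal sketch of that rule in Python; the function is hypothetical (this is not Word's actual logic), and it additionally assumes that when there are no unsaved changes, no question is needed at all.

```python
from typing import Optional


def already_open_prompt(name: str, modified: bool) -> Optional[str]:
    """Hypothetical rule for opening a document that is already open.

    Returns the question to ask, or None when no question is needed.
    """
    if not modified:
        # Assumption: with no unsaved changes, nothing can be lost, so just show the open copy.
        return None
    # Describe the actual situation and name both choices in the user's terms.
    return (f"“{name}” is already open and has been modified. "
            "Do you want the version with the latest changes or the one you last saved?")
```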

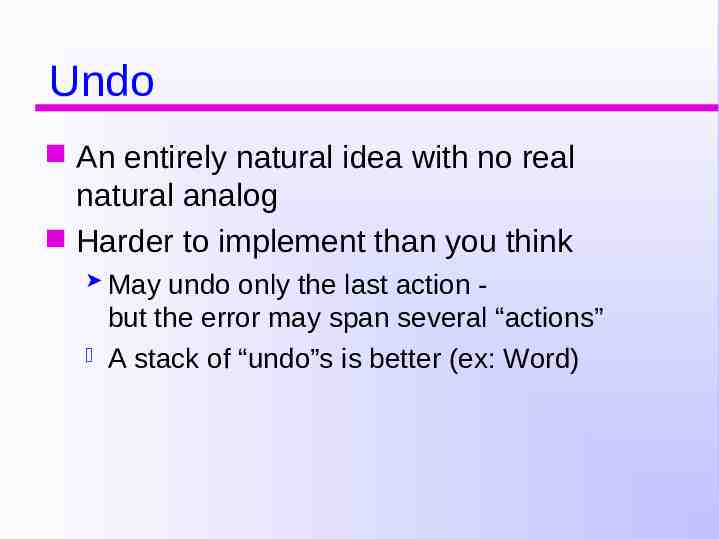

Undo:
- An entirely natural idea with no real natural analog
- Harder to implement than you think
- May undo only the last action, but the error may span several “actions”
- A stack of “undo”s is better (e.g., Word)
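A multi-level undo is commonly built as a stack of reversible steps. This is a minimal Python sketch with hypothetical names (not how Word implements it): every edit is recorded together with a function that reverses it, and several edits can be grouped so that a single undo reverses an error spanning several “actions”.

```python
from typing import Callable, List, Optional, Tuple

Step = Tuple[Callable[[], None], Callable[[], None]]   # (do, undo) pair


class UndoStack:
    """Hypothetical multi-level undo built from reversible steps."""

    def __init__(self) -> None:
        self._history: List[List[Step]] = []   # each entry is one user-level action
        self._group: Optional[List[Step]] = None

    def perform(self, do: Callable[[], None], undo: Callable[[], None]) -> None:
        do()
        if self._group is not None:
            self._group.append((do, undo))     # part of a larger action
        else:
            self._history.append([(do, undo)])

    def begin_group(self) -> None:
        self._group = []

    def end_group(self) -> None:
        if self._group:
            self._history.append(self._group)
        self._group = None

    def undo(self) -> bool:
        if not self._history:
            return False                        # nothing left to undo
        for _, reverse in reversed(self._history.pop()):
            reverse()                           # reverse steps in the opposite order
        return True


doc: List[str] = []
stack = UndoStack()


def append_word(word: str) -> None:
    stack.perform(lambda: doc.append(word), lambda: doc.pop())


append_word("hello")
stack.begin_group()            # several steps that the user sees as one action
append_word("brave")
append_word("new")
stack.end_group()
append_word("world")
stack.undo()                   # removes "world"
stack.undo()                   # removes "brave" and "new" together
print(doc)                     # ['hello']
```

Storing one reverse step per edit, rather than a snapshot of the whole document, keeps memory use low but requires a correct inverse for every operation, which is part of why undo is harder to implement than it looks.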

Why aren’t interactions with computers as easy to manage as conversations with people?

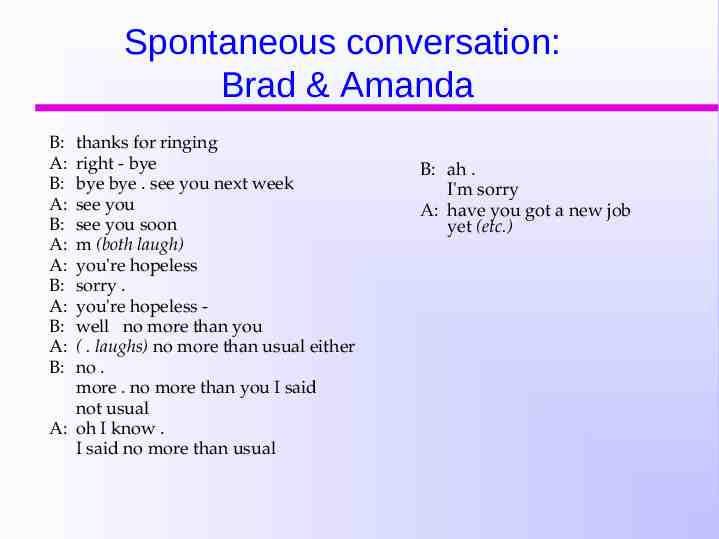

Spontaneous conversation: Brad & Amanda B: A: B: A: B: A: A: B: A: B: A: B: thanks for ringing right - bye bye bye . see you next week see you see you soon m (both laugh) you're hopeless sorry . you're hopeless well no more than you ( . laughs) no more than usual either no . more . no more than you I said not usual A: oh I know . I said no more than usual B: ah . I'm sorry A: have you got a new job yet (etc.)

Repair in human conversation A: you don’t have any nails, do you? B: no

Repair in human conversation A: you don’t have any nails, do you? B: (pause) fingernails? A: no, nails to nail into the wall (pause) when I get bored here I’m going to go put up those pictures. B: no

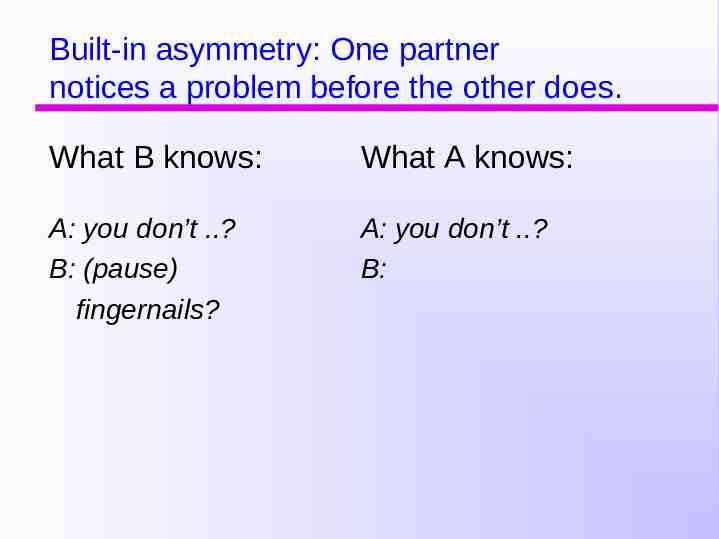

Built-in asymmetry: one partner notices a problem before the other does.
- What B knows: A: you don’t ...? B: (pause) fingernails?
- What A knows: A: you don’t ...? B:

A premise: People transfer some (but not all) of their expectations about human dialog to human-computer dialog.

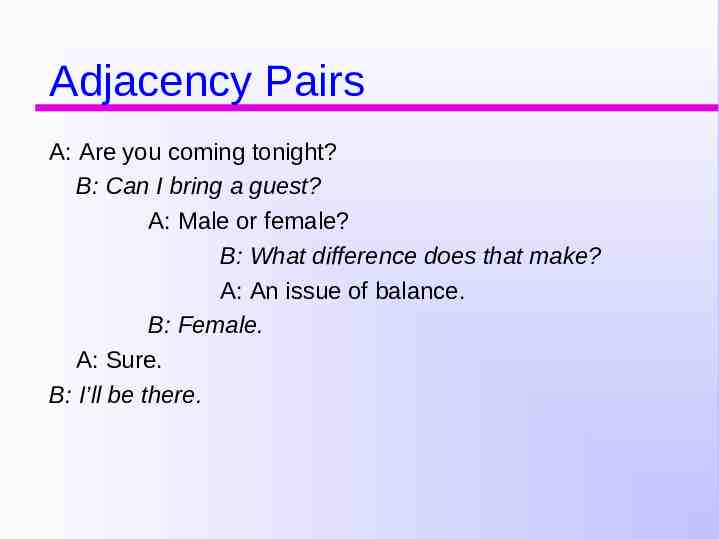

Adjacency Pairs A: Are you coming tonight? B: Can I bring a guest? A: Male or female? B: What difference does that make? A: An issue of balance. B: Female. A: Sure. B: I’ll be there.

Error messages should provide specific information that helps people repair the problem! (Examples from HPNL, a natural language database query program)

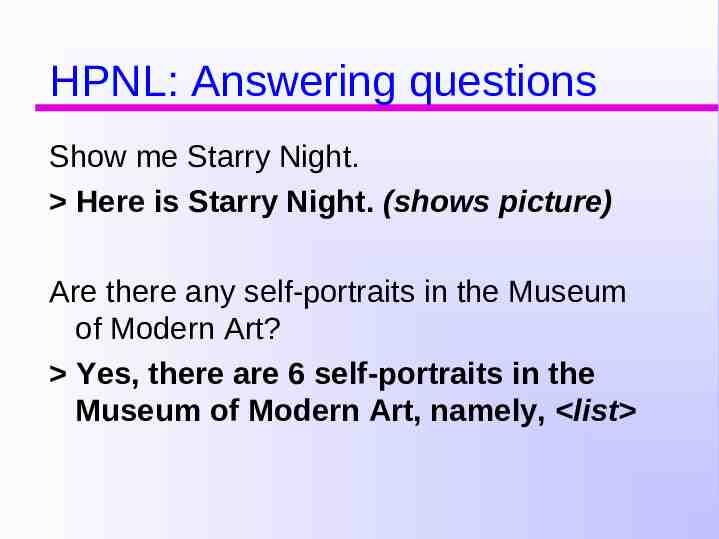

HPNL: Answering questions
- Show me Starry Night. → Here is Starry Night. (shows picture)
- Are there any self-portraits in the Museum of Modern Art? → Yes, there are 6 self-portraits in the Museum of Modern Art, namely, [list]

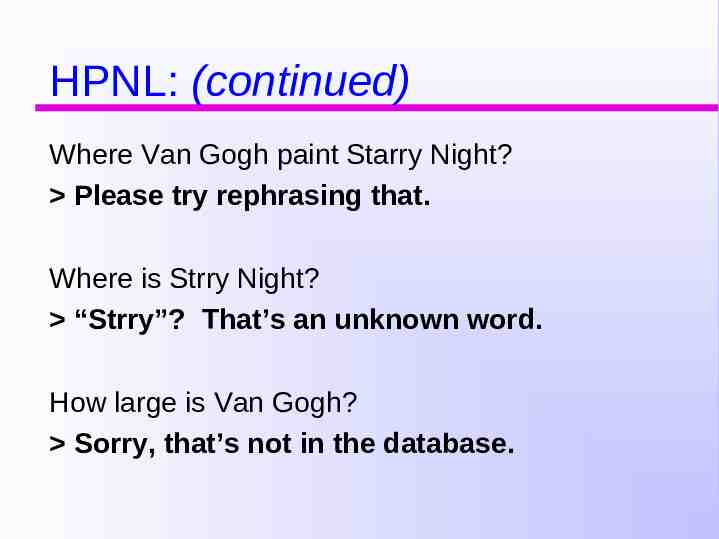

HPNL (continued):
- Where Van Gogh paint Starry Night? → Please try rephrasing that.
- Where is Strry Night? → “Strry”? That’s an unknown word.
- How large is Van Gogh? → Sorry, that’s not in the database.

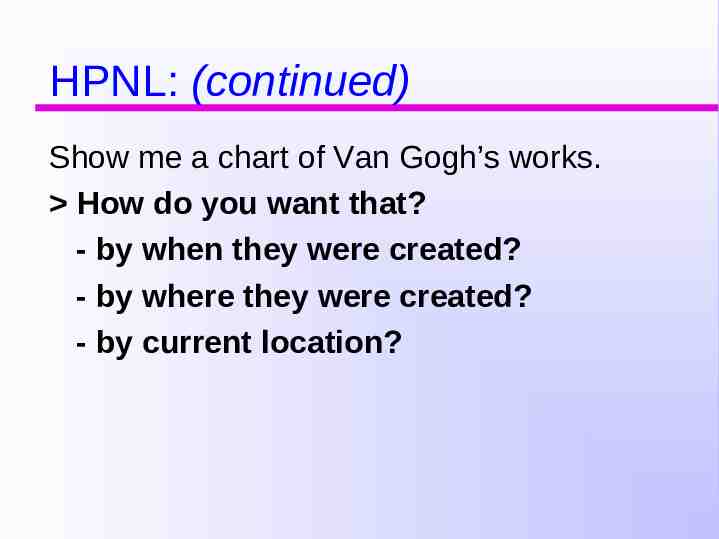

HPNL (continued):
- Show me a chart of Van Gogh’s works. → How do you want that?
  - by when they were created?
  - by where they were created?
  - by current location?

Give error messages at the level at which the system “breaks”, so that the user can interrupt or figure out how best to recover.
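One way to follow this advice is to give each processing stage its own failure message. The following Python sketch is loosely modeled on the HPNL exchanges above, but the lexicon, the two-pattern “grammar”, and the one-entry database are invented for illustration and say nothing about how HPNL actually worked. An unknown word is reported at the word level, a sentence that doesn't parse at the sentence level, and an answer that isn't stored at the database level.

```python
import re
from typing import Dict

# Hypothetical lexicon and database, invented for this sketch.
VOCABULARY = {"where", "is", "how", "large", "paint", "starry", "night", "van", "gogh", "?"}
LOCATIONS: Dict[str, str] = {"starry night": "the Museum of Modern Art"}
SIZES: Dict[str, str] = {}   # no sizes stored, so size questions fail at the database stage

# Toy grammar: only two question patterns are understood.
PATTERN = re.compile(r"(where is|how large is) (.+?)(?: \?)?")


def answer(question: str) -> str:
    tokens = re.findall(r"[A-Za-z]+|\?", question)
    # Stage 1 (words): name the exact word the system doesn't know.
    for token in tokens:
        if token.lower() not in VOCABULARY:
            return f"“{token}”? That's an unknown word."
    # Stage 2 (sentence): every word is known, but the sentence doesn't parse.
    match = PATTERN.fullmatch(" ".join(t.lower() for t in tokens))
    if match is None:
        return "Please try rephrasing that."
    kind, thing = match.groups()
    # Stage 3 (data): the question makes sense, but the answer isn't stored.
    table = LOCATIONS if kind == "where is" else SIZES
    if thing not in table:
        return "Sorry, that's not in the database."
    verb = "is in" if kind == "where is" else "is"
    return f"{thing.title()} {verb} {table[thing]}."


for q in ["Where Van Gogh paint Starry Night?", "Where is Strry Night?",
          "How large is Van Gogh?", "Where is Starry Night?"]:
    print(q, "->", answer(q))
```

Because each stage fails with its own message, the user learns whether to fix a word, reword the whole question, or ask something else.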

Back Seat Driver (Davis, 1989) The very first GPS system! Based on how passengers give their drivers directions

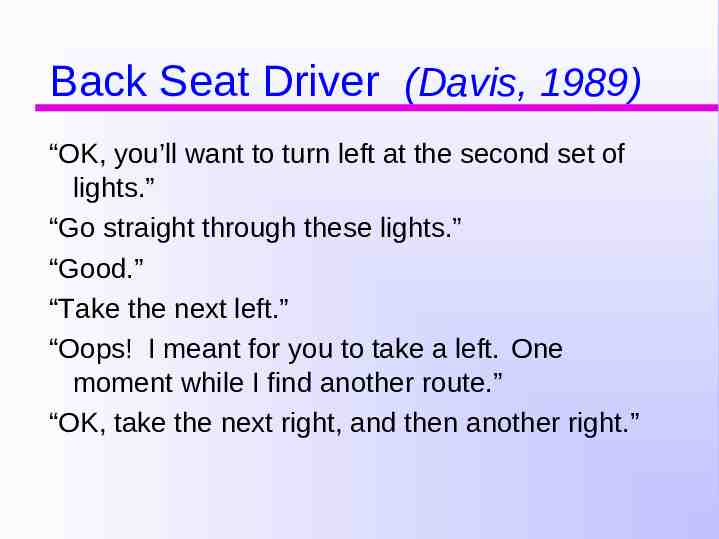

Back Seat Driver (Davis, 1989) “OK, you’ll want to turn left at the second set of lights.” “Go straight through these lights.” “Good.” “Take the next left.” “Oops! I meant for you to take a left. One moment while I find another route.” “OK, take the next right, and then another right.”

(Revisiting the example from conversation:) Susan: you don’t have any nails, do you? Bridget: (pause) fingernails? Susan: no, nails to nail into the wall. (pause) When I get bored here I’m going to go put up those pictures. Bridget: no.

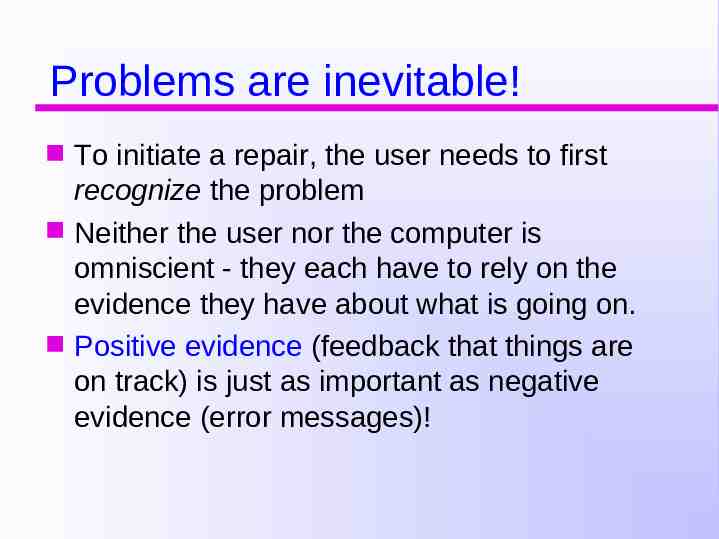

Problems are inevitable! To initiate a repair, the user first needs to recognize the problem. Neither the user nor the computer is omniscient: each has to rely on the evidence it has about what is going on. Positive evidence (feedback that things are on track) is just as important as negative evidence (error messages)!

(Returning to our discussion of human error in general - what can we conclude about designing complex systems?)

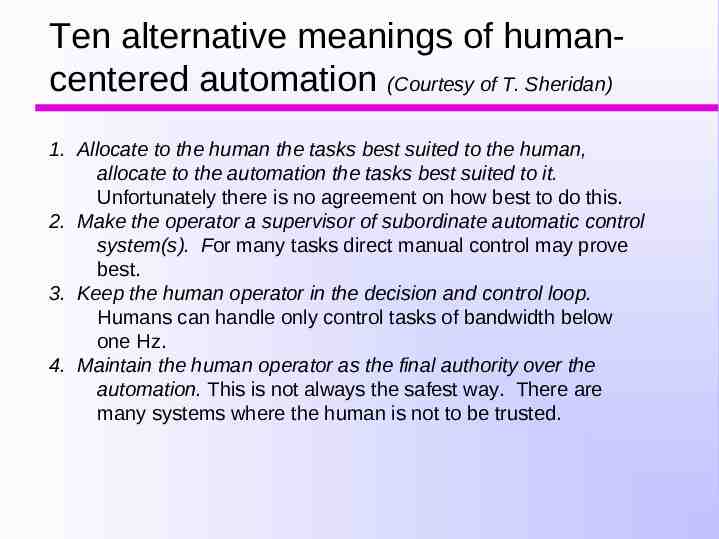

Ten alternative meanings of human-centered automation (Courtesy of T. Sheridan):
1. Allocate to the human the tasks best suited to the human, and allocate to the automation the tasks best suited to it. Unfortunately, there is no agreement on how best to do this.
2. Make the operator a supervisor of subordinate automatic control system(s). For many tasks, direct manual control may prove best.
3. Keep the human operator in the decision and control loop. Humans can handle only control tasks of bandwidth below one Hz.
4. Maintain the human operator as the final authority over the automation. This is not always the safest way; there are many systems where the human is not to be trusted.

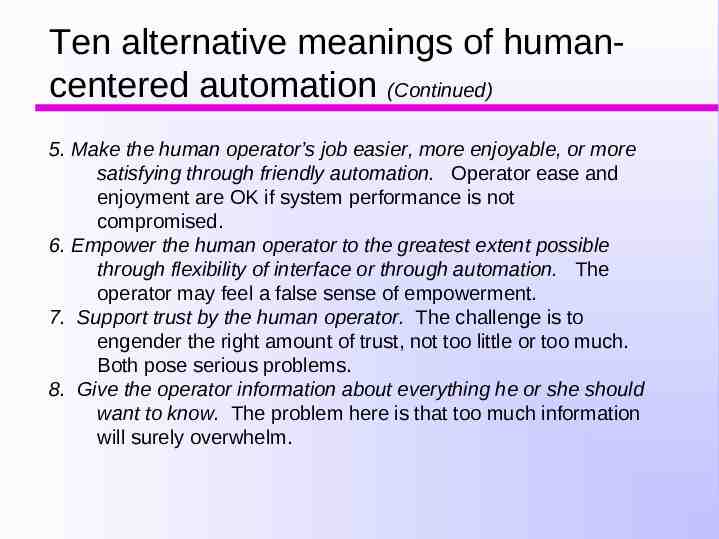

Ten alternative meanings of human-centered automation (continued):
5. Make the human operator’s job easier, more enjoyable, or more satisfying through friendly automation. Operator ease and enjoyment are OK if system performance is not compromised.
6. Empower the human operator to the greatest extent possible through flexibility of interface or through automation. The operator may feel a false sense of empowerment.
7. Support trust by the human operator. The challenge is to engender the right amount of trust, not too little or too much; both pose serious problems.
8. Give the operator information about everything he or she should want to know. The problem here is that too much information will surely overwhelm.

Ten alternative meanings of human-centered automation (continued):
9. Engineer the automation to minimize human error and response variability. Error is a curious thing. Darwin taught us about requisite variety years ago. A good system tolerates some error.
10. Achieve the best combination of human and automatic control, where best is defined by explicit system objectives. Don’t we wish we always had explicit system objectives!

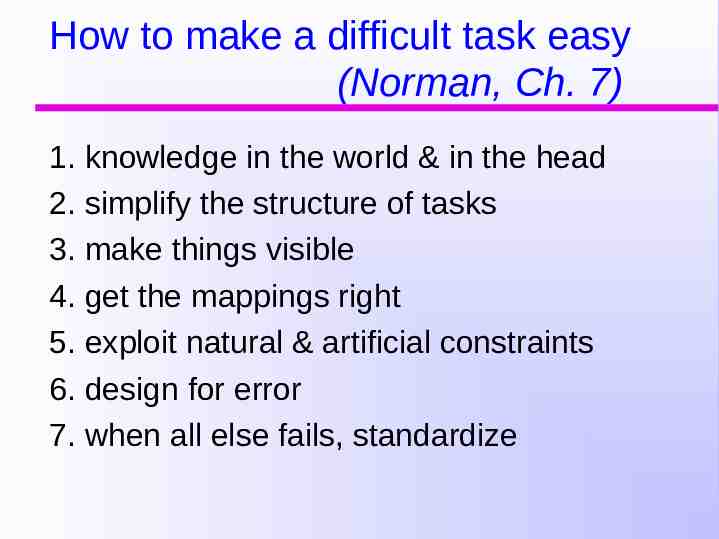

How to make a difficult task easy (Norman, Ch. 7):
1. Use knowledge in the world & in the head
2. Simplify the structure of tasks
3. Make things visible
4. Get the mappings right
5. Exploit natural & artificial constraints
6. Design for error
7. When all else fails, standardize