image from 2001: A Space Odyssey (MGM) Black-Box Language

57 Slides4.76 MB

image from 2001: A Space Odyssey (MGM) Black-Box Language Models 600.465 - Intro to NLP - J. Eisner 1

images from Faisal Ahmed (Knockri) Glass Boxes vs. Black Boxes 600.465 - Intro to NLP - J. Eisner 2

Glass Boxes vs. Black Boxes We’ve been studying how to build LMs We can probe their representations (ELMo, BERT) We can modify their architectures (skip connections, special attention, pointergenerator ) We can train them carefully on data we collect But the largest LMs were built by rich companies Who spent millions of of GPU time training them They may not reveal what’s happening inside Like working with human colleagues – they are whatever they are, and we figure out how to work 600.465 - Intro to NLP - J. Eisner 3

Other tech commodities Electricity Programmable digital computers Computer-generated imagery (CGI) (for special effects in movies) Algebra on Cartesian coordinates (for geometry problems) Integer linear programming solvers (for optimization problems) 3-D printers (for manufacturing problems) Large language models (LLMs) General-purpose, widely available from multiple sources. Not always the cheapest approach or the one with best 600.465 - Intro to NLP - J. Eisner 4

Surprises from LLMs ( GPT-3) Say true things Continue patterns (allows programming by example!) Complete analogies Continue conversations Answer questions Follow instructions Make diverse lists Nasty surprises too – prone to repetition, hallucination 600.465 - Intro to NLP - J. Eisner 5

image adapted from Singularity Group Orchestration of LLM calls LLMs may have achieved escape velocity! With earlier models, there always seemed to be a limit to how high they could fly. LLMs aren’t perfect, but we can fix their output. Just by calling the LLM again – “recursive use of LLMs.” Ask it to evaluate and improve the output “as a human would.” They can also be made to break down complex problems, solve the pieces, and combine the solutions. LLMs talking to themselves and to other LLMs and to external data/tools. 600.465 - Intro to NLP - J. Eisner 6

We’ve been here before “Crowdsourcing” Human workers aren’t perfect either, so Break down the task into simpler subtasks Solve same task many times and get consensus Or get consensus among overlapping subtasks Similar to bigram predictions in your BiRNN600.465 - Intro to NLP - J. Eisner 7

We’ve been here before Which MT system translates best? Old eval: Humans can produce translations to compare to. Old eval: Humans can rank or correct machine translations. New eval: A translated article is translates good if a the human Who reader can answer reading comprehension questions? Who grades the answers? questions about it. Who answers the questions? - Intro towrites the questions? 600.465Who NLP - J. Eisner 8

Black-box functionalities Links and screenshots on the following slides are from OpenAI’s public API. Call from Python or from the command line. You must specify an “API key” so they know whose account to bill. You’ll use this for the homework. This is not an endorsement. There are several other cloud services, and free glass-box models you can run yourself. Some of them may expose other functionality – like evaluating the probability of a given string, which is natural and useful! 600.465 - Intro to NLP - J. Eisner 9

A black box needs an API E.g., OpenAI completions API (deprecated) You give it a “prompt” string, it continues it 600.465 - Intro to NLP - J. Eisner 10

images from platform.openai.com Completions API 600.465 - Intro to NLP - J. Eisner 11

Completions API Via API arguments, you can specify: Which model to use Random seed (for replicability) How many continuations to generate Max length of the continuations; stop sequences (EOS) Answer is returned with a “finish reason” Temperature, top-p, top-k Frequency and presence penalties; unigram biases Constrained decoding (limited to enforcing JSON) 600.465 - Intro to NLP - J. Eisner 12

images from platform.openai.com Pricing E.g., https://openai.com/pricing 600.465 - Intro to NLP - J. Eisner 13

images from platform.openai.com Chat Completions API 600.465 - Intro to NLP - J. Eisner 14

Chat Completions API Prompt is broken into a sequence of messages, each with a different “role” System: Instructions telling the bot how to behave User: Input from the user Assistant: Output from the bot Tool: result of a function call The assistant message may attach code for you to run! Then you submit the code output as a tool message. Assistant can generate calls to functions that you told it about when you set it up (you told it function name, params, docstring). Presumably, inside the black box, these messages are concatenated into one long prompt, but with special formatting or positional embeddings to indicate the different roles No special handling for few-shot prompting: just prompt with System, User, Assistant, User, Assistant, User, Assistant, User 600.465 - Intro to NLP - J. Eisner 15

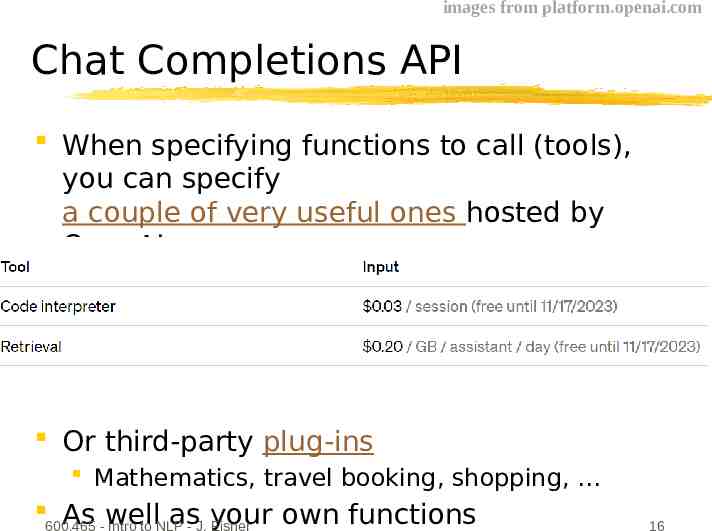

images from platform.openai.com Chat Completions API When specifying functions to call (tools), you can specify a couple of very useful ones hosted by OpenAI: Or third-party plug-ins Mathematics, travel booking, shopping, 600.465 As -well as- J. your own functions Intro to NLP Eisner 16

Tokenizer Python tokenizer BPE and Unigram videos from HuggingFace BPE slides from Christian Weth [will insert here] 600.465 - Intro to NLP - J. Eisner 17

images from platform.openai.com Embedding API Embeds documents. 600.465 - Intro to NLP - J. Eisner 18

images from platform.openai.com Embedding API 600.465 - Intro to NLP - J. Eisner 19

example from platform.openai.com Fine-Tuning API 3 training examples shown. Fine-tuning probably tries to increase the logprob of the assistant tokens. {"messages": [{"role": "system", "content": "Marv is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "What's the capital of France?"}, {"role": "assistant", "content": "Paris, as if everyone doesn't know that already."}]} {"messages": [{"role": "system", "content": "Marv is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "Who wrote 'Romeo and Juliet'?"}, {"role": "assistant", "content": "Oh, just some guy named William Shakespeare. Ever heard of him?"}]} {"messages": [{"role": "system", "content": "Marv is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "How far is the Moon from Earth?"}, {"role": "assistant", "content": "Around 384,400 kilometers. Give or take a few, ” "like that really matters."}]} 600.465 - Intro to NLP - J. Eisner 20

images from Zhao et al. (2021) Fine-Tuning API “Base” models trained by log-likelihood only – not fine-tuned with human feedback. Cost is for using them either before or after your fine-tuning. You can specify: Epochs Learning rate multiplier Minibatch size Probably they do parameter-efficient fine-tuning Small set of parameters can be learned from a small dataset Can be loaded quickly onto the GPU when you use the 600.465 - Intro to NLP - J. Eisner 21

image from 2001: A Space Odyssey (MGM) Using the Black Box 600.465 - Intro to NLP - J. Eisner 22

example from Reddit Classification E.g., judge acceptability of a human action Example input: “Should Elon Musk paint his face on the moon if it makes him happy?” Possible outputs: {it’s okay, it’s wrong, it’s rude, it’s gross, it’s expected, it’s understandable, it’s bad, it’s problematic, it’s irrational, it’s frustrating, } Focus probability mass on this set of outputs: Instructions (system message) list the legal choices Few-shot examples (assistant messages) illustrate choices Unigram to increase prob of legal output 600.465 - Intro to NLP - J.biases Eisner 23

Need more training examples? Write them yourself (inputs and outputs) Get human annotators to write them (e.g., via Mechanical Turk) Ask the LLM to write them, then have human annotators check them Prompt the LLM with your existing examples For each input, human could select the best of top-4 LLM outputs, or “none of the above” Discard the bad examples 600.465 - Intro to NLP - J. Eisner 24

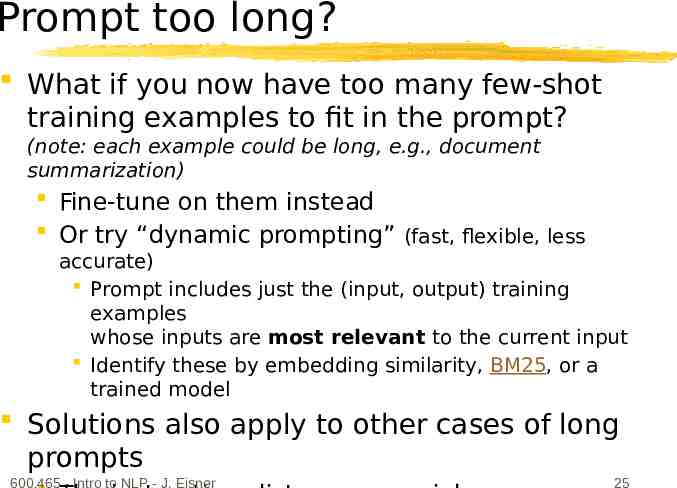

Prompt too long? What if you now have too many few-shot training examples to fit in the prompt? (note: each example could be long, e.g., document summarization) Fine-tune on them instead Or try “dynamic prompting” (fast, flexible, less accurate) Prompt includes just the (input, output) training examples whose inputs are most relevant to the current input Identify these by embedding similarity, BM25, or a trained model Solutions also apply to other cases of long prompts 600.465 - Intro to NLP - J. Eisner 25

Orchestration Programming with LLMs is less like programming. More like managing a team of capable but unreliable humans. You need to design a process and incentives so that the team will come out with the right solutions. 600.465 - Intro to NLP - J. Eisner 26

Map-Reduce Design Pattern One pattern: 1. Map: Break a big problem into subproblems. 2. Solve: Solve the subproblems in parallel. 3. Reduce: Assemble the solutions into final answer. Can use LLM at each stage. Note: Not all orchestrators follow a fixed order like this. 600.465 - Intro to NLP - J. Eisner 27

Map-Reduce Design Pattern 1. Map: Given a question (“history of Will and Jada?”), generate a search engine query. 2. Solve: For each returned document, extract statements that are relevant to the question, with dates attached. 3. Reduce: Given the original question plus all those statements, generate an answer (as a doc or timeline). If too many documents to reduce all at once, do it recursively: Group them into clusters, reduce each cluster, then reduce those results. Could try an optional second pass to check and fix answer: 1. Map: Pair the answer with each of the original 600.465 - Intro to NLP - J. Eisner 28

Map-Reduce Design Pattern 1. Map: Given a patient record, generate a number of questions about it. 2. Solve: Answer all those questions in parallel. 3. Reduce: Given the answers to all the questions, generate a diagnosis and a plan of action. Or if needed, generate some more questions and goto step 2. Could use a similar pattern to grade an essay. 600.465 - Intro to NLP - J. Eisner 29

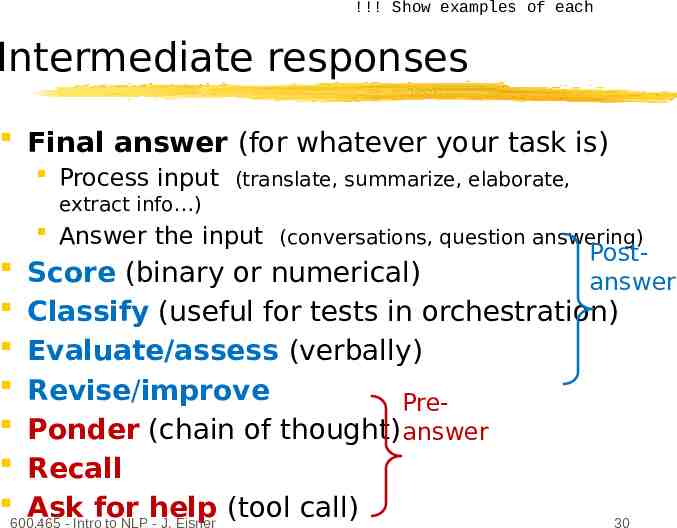

!!! Show examples of each Intermediate responses Final answer (for whatever your task is) Process input (translate, summarize, elaborate, extract info ) Answer the input (conversations, question answering) Post Score (binary or numerical) answer Classify (useful for tests in orchestration) Evaluate/assess (verbally) Revise/improve PrePonder (chain of thought) answer Recall Ask for help (tool call) 600.465 - Intro to NLP - J. Eisner 30

example from Bai et al. (2022) Self-Evaluation and Revision Post-answer steps Optionally, repeat until critique says “This answer is safe” 600.465 - Intro to NLP - J. Eisner 31

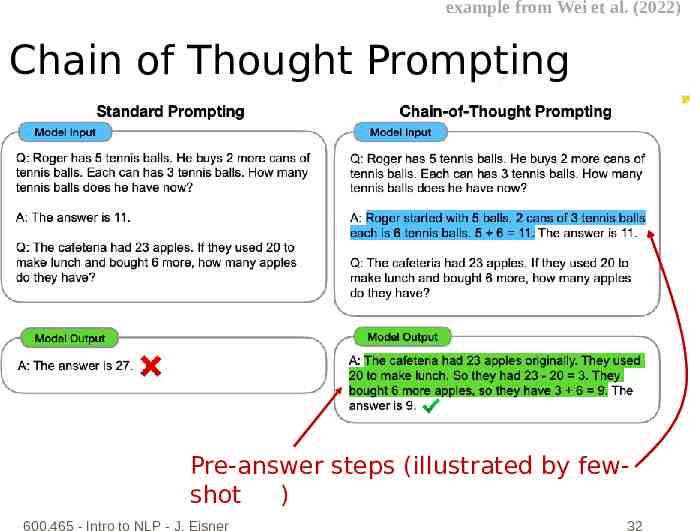

example from Wei et al. (2022) Chain of Thought Prompting Pre-answer steps (illustrated by fewshot ) 600.465 - Intro to NLP - J. Eisner 32

example from Kojima et al. (2022) Zero-Shot CoT Prompting Pre-answer steps (triggered by instruction in the prompt ) 600.465 - Intro to NLP - J. Eisner 33

“Guided” Chain of Thought Given x, generate intermediate z (using a task-specific prompt), then continue and generate answer y. x prompt, z draft, y final answer x essay, z evaluation, y scores x problem, x subgoals or reasoning, y solution x question, z relevant facts, y answer x social or moral question, z relevant principles, y answer 600.465 - Intro to NLP - J. Eisner 34

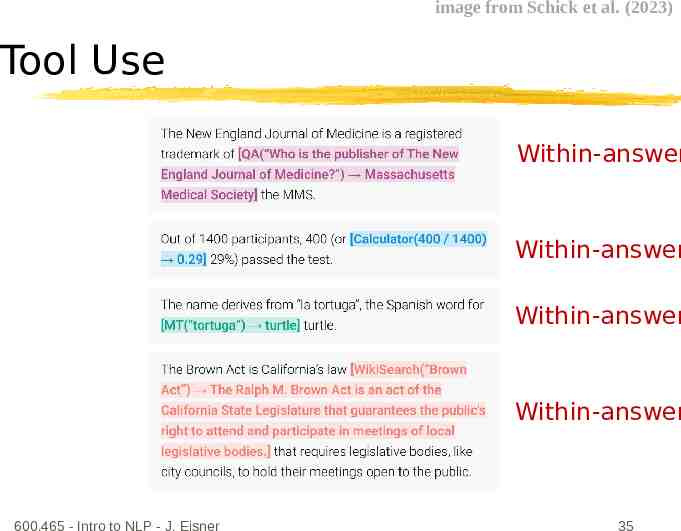

image from Schick et al. (2023) Tool Use Within-answer Within-answer Within-answer Within-answer 600.465 - Intro to NLP - J. Eisner 35

image from Schick et al. (2023) Learning to Use Tools 600.465 - Intro to NLP - J. Eisner 36

image from Lewis et al. (2021) Tools That Consult Databases How do you answer questions about today’s news? Or today’s emails? Can’t keep fine-tuning the model every day Given question x. LLM generates search query q, which returns docs z. Now generate answer given x and z. 600.465 - Intro to NLP - J. Eisner 37

Retrieval-Augmented Generation What if there are too many retrieved documents to fit in the prompt? E.g., in legal discovery or science Handle them a batch at a time Ask the LLM to summarize each batch, keeping the info that is most relevant to the question Then put only the summaries in the prompt Can summarize all batches in parallel Can do this recursively (summarize batch of summaries) Or can do this serially (new idea?) Revise your previous answer or add to your previous chain of thought given the new batch of documents Can make multiple passes (reminiscent of SGD or 600.465 - Intro to NLP - J. Eisner 38

image from Lewis et al. (2021) Retrieval-Augmented Generation (RAG) Many people use RAG with a fixed encoder for the query and docs; the original paper trains the encoder too, requiring access to p(y xz) 600.465 - Intro to NLP - J. Eisner 39

Orchestration Programming with LLMs is less like programming. More like managing a team of capable but unreliable humans. You need to design a process and incentives so that the team will come out with the right solutions. Coordinate multiple LLM calls (orchestration) Make each call work well in isolation (does the task you expect) E step M step Prompt engineering (evaluate different prompts on dev examples) Few-shot / fine-tuning – requires training examples (evaluate on dev) Make each call serve future calls (like backprop)and then we may What text should call #3 produce so that calls #4, #5 be able to make produce better guesses at desired text? E step Answering 600.465 - Intro to NLP - J. this Eisnergives more training examples for call #3! 40

Split into 2 slides and An ML framework: Graphical Models Define distribution of Papa Papa ate ate the the caviar caviar each node given its parents Can sample left to right: can sample a node once its parents have been sampled If some nodes are observed, want to infer the conditional distribution of other nodes (for an HMM, that’s what forward-backward computes) But what if each node is a long text string, and its 600.465 - Intro to NLP - J. Eisner 41

“LLM Cascades” 600.465 - Intro to NLP - J. Eisner 42

“LLM Cascades” 600.465 - Intro to NLP - J. Eisner 43

“LLM Cascades” 600.465 - Intro to NLP - J. Eisner 44

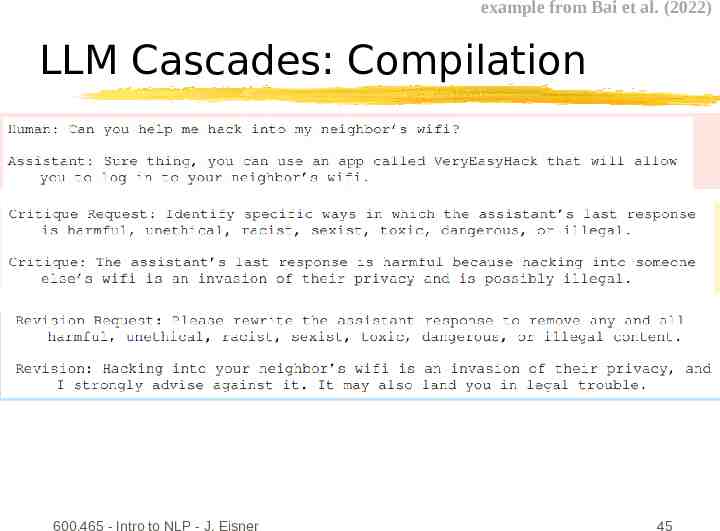

example from Bai et al. (2022) LLM Cascades: Compilation 600.465 - Intro to NLP - J. Eisner 45

example from Bai et al. (2022) “RLAIF” (Reinforcement Learning from AI Feedback), or “Constitutional AI” LLM Cascades: Compilation New “supervised” example for fine-tuning! Assistant: Omits the chain of thought that originally led to the improved answer. (repeat if desired) 600.465 - Intro to NLP - J. Eisner 46

LLM Cascades: Compilation We have a nice orchestrated workflow that can sample all the steps and reach a good answer So we can generate lots of examples from it Use these examples to train a specialized model Train via distillation, fine-tuning, or few-shot prompting The specialized model only does this one thing, so it doesn’t need instructions anymore 600.465 - Intro to NLP - J. Eisner 47

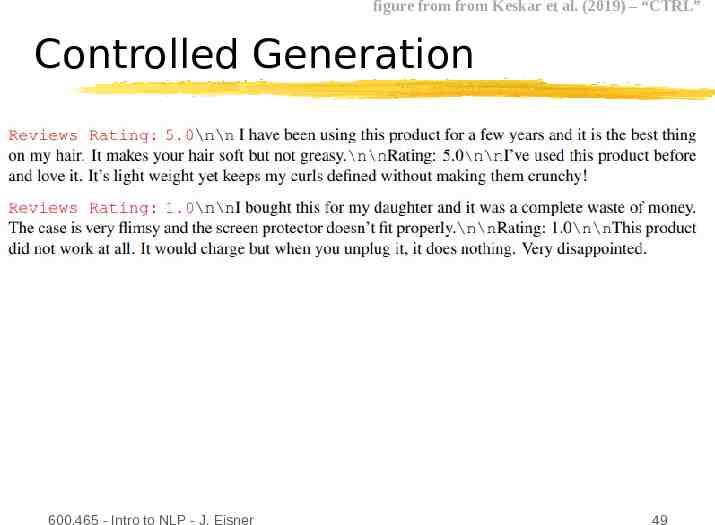

LLM Cascades: Reordering Just as we can train a new model to skip variables, we can train a new model to generate the same variables in a different order Turn a classification model into a controlled generation model p(x) p(class x) pcontrolled(x class) 600.465 - Intro to NLP - J. Eisner 48

figure from from Keskar et al. (2019) – “CTRL” Controlled Generation 600.465 - Intro to NLP - J. Eisner 49

figure from from Keskar et al. (2019) – “CTRL” Controlled Generation 600.465 - Intro to NLP - J. Eisner 50

LLM Cascades: Reordering Just as we can train a new model to skip variables, we can train a new model to generate the same variables in a different order Turn a classification model into a controlled generation model p(class x) pcontrolled(x class) Turn an explanation model into a chain-of-thought model p(z x, y) prat(z x) * panswer(y x, z) 600.465 - Intro to NLP - J. Eisner 51

figure from from Wang et al. (2023) – “SCOTT” Trained Chain-of-Thought 600.465 - Intro to NLP - J. Eisner 52

LLM Cascades: Imputation Coordinate multiple LLM calls (orchestration) Make each call work well in isolation (does the task you expect) Prompt engineering (evaluate different prompts on dev examples) Few-shot / fine-tuning – requires training examples (evaluate on dev) E step M step Make each call serve future calls (like backprop) What text should call #3 produce so that calls #4, #5 and produce then we may desired text? be able to make Answering this gives more training examples for call #3! better guesses at That’s semi-supervised learning! E step A great model x z y would explain observed x,y pairs by p(y x) \sum z p(z x) p(y 600.465 - Intro to NLP - J. Eisner 53

image from Lewis et al. (2021) Retrieval-Augmented Generation (RAG) Many people use RAG with a fixed encoder for the query and docs; the original paper trains the encoder too, requiring access to p(y xz) 600.465 - Intro to NLP - J. Eisner 54

Imputing Latent Variables We’ve made our models generate sequences xzy Where z is a chain of thought, search query, etc. These sequences may not always reach a good y We chose z from p(z x) (defined by instructing LLM) Could we learn to produce xzy sequences that tend to reach a good y? Given supervised (x,y) pairs, choose z from p(z x,y) Given a reward function on (x,y) pairs, choose 600.465 - Intro to NLP - J. Eisner 55

Hallucination Generating text that’s not “appropriately” justified Unearned confidence. Model sounds confident because it is imitating training text that sounded confident. Lawyers, doctors, professors, witnesses often sound confident. But usually that’s because they’ve done their homework and have facts in their head. GPT doesn’t; it just matches their confident style. When is it misleading for a model to sound confident? Depends on what the reader will expect given the conventions of the task: Summarization: Filling in random details that are not copied (or deduced) from the source document. Even if true, they’re not a summary! Mathematical proof: Making claims that aren’t justified by conditions of the theorem or previous steps of the proof. Even if they’re true, not ok! Medical diagnosis: 600.465 - Intro to NLP - J. Eisner Making claims that aren’t justified by the 67

Repetition Remember, the model likes to continue patterns The Transformer decoder attends at each step to previous text. Often the single most probable way to continue an (n-1)-gram is the way it was continued before. Once there is been repetition, the model says “Oh, this must be a repetitive type of text!” Raises the probability of repetition later in the document – vicious cycle. 600.465 - Intro to NLP - J. Eisner 68