Compositional Design and Analysis of Timing-Based

53 Slides315.00 KB

Compositional Design and Analysis of Timing-Based Distributed Algorithms Nancy Lynch Theory of Distributed Systems MIT Third MURI Workshop Arlington-Ballston, Virginia December 10, 2002 1

MIT Participants Leader – Nancy Lynch Postdoctoral associates – Idit Keidar, Dilsun Kirli Graduate students – Roger Khazan, Carl Livadas, Ziv Bar-Joseph, Rui Fan, Seth Gilbert, Sayan Mitra Collaborators – Alex Shvartsman and students, Frits Vaandrager, Roberto Segala 2

Project Overview Design and analyze distributed algorithms that implement global services with strong guarantees, e.g.: – Reliable communication – Strongly coherent data services Dynamic environment, where processes join, leave, and fail. Algorithms composed of sub-algorithms. Analyze performance conditionally, under various assumptions about timing and failures. Develop underlying mathematical modeling framework, based on interacting state machines (IOA, TIOA), capable of: – Describing precisely all the algorithms we study. – Supporting compositional and conditional analysis. 3

Algorithms Studied Scalable group communication [Khazan, Keidar] Early-delivery dynamic atomic broadcast [Bar-Joseph, Keidar, Lynch] Reconfigurable atomic memory [Lynch, Shvartsman] Scalable reliable multicast [Livadas, Keidar, Lynch] In progress: – Reconfigurable atomic memory – Peer-to-peer: Fault-tolerant location services, data services – Mobile: Topology control, clock synchronization, tracking 4

This Talk I. Completed work: Scalable group communication Early-delivery dynamic atomic broadcast II. III. IV. V. Reconfigurable atomic memory Reliable multicast Modeling framework Plans for the next two years 5

I. Completed Work Scalable Group Communication [Keidar, Khazan 00, 02], [Khazan 02], [Keidar, Khazan, Lynch, Shvartsman 02] [Taraschanskiy 00] GCS 6

Group Communication Services Cope with changing participants using abstract groups of client processes with changing membership sets. Processes communicate with group members indirectly, by sending messages to the group as a whole. GC services support management of groups: – Maintain membership information. Form new views in response to changes. – Manage communication. Communication respects views. Provide guarantees about ordering and reliability of message delivery. Virtual synchrony Applications: Managing replicated data; distributed multiplayer games; collaborative work 7

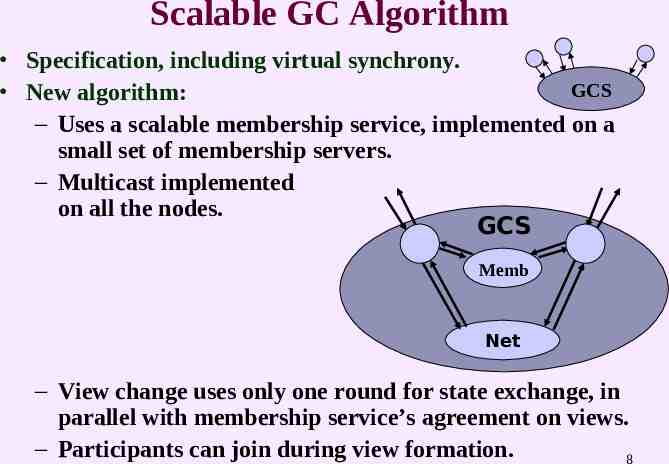

Scalable GC Algorithm Specification, including virtual synchrony. GCS New algorithm: – Uses a scalable membership service, implemented on a small set of membership servers. – Multicast implemented on all the nodes. GCS Memb Net – View change uses only one round for state exchange, in parallel with membership service’s agreement on views. – Participants can join during view formation. 8

Analysis Safety proofs, using incremental proof methods. Liveness proofs. Performance analysis: – – – – S S’ A A’ Time from when network stabilizes until GCS announces final view. Message latency. Conditional analysis, based on input, failure, and timing assumptions. Compositional analysis, based on performance of membership service and Net. Modeled and analyzed data-management application running on top of the new GCS. Distributed implementation [Taraschanskiy 00]. 9

Completed Work: Early-Delivery Dynamic Atomic Broadcast [Bar-Joseph, Keidar, Lynch 02] DAB 10

Dynamic Atomic Broadcast join leave mcast(m) join-ack leave-ack rcv(m) DAB Atomic broadcast, where processes may join, leave, or fail. Safety: Sending, receiving orders are consistent with a single global message ordering (no gaps). Liveness: Eventual completion of joins, leaves. Eventual delivery, including the process’ own messages. Fast delivery, even with joins, leaves. Application: Distributed multiplayer interactive games. 11

Implementing DAB join DAB net-join Net Processes: – Timing-dependent, have approximately-synchronized clocks. Net: – Pairwise FIFO delivery – Low latency – But does not guarantee a single total order, nor that all processes see the same messages from a failing process. 12

Dynamic Atomic Bcast Algorithm Processes coordinate message delivery: – Divide time into slots using local clock, assign messages to slots. – Deliver messages in order of (slot, sender id). – Determine members of each slot, deliver only messages from members. Processes must agree on slot membership: – Joining process selects join-slot, informs others. – Similarly for leaving process. – Failed process results in consensus on failure slot. Requires a new kind of consensus service: Consensus with Uncertain Participants (CUP). – Participants not known a priori. – Each participant submits its perceived “World”. – Processes may abstain. 13

The DAB Algorithm Using CUP DAB fail DABi1 DABi2 fail CUP(j) Net 14

Consensus with Uncertain Participants CUP Problem: – Guarantees agreement, validity, termination. – Assumes submitted worlds are “close”: Process that initiates is in other processes’ worlds Process in anyone’s world initiates, abstains, leaves, or fails. CUP Algorithm – A new early-stopping consensus algorithm. – Similar to [Dolev, Reischuk, Strong 90], but: Tolerates uncertainty about participants. Tolerates processes leaving. – Terminates in two rounds when failures stop, even if leaves continue. – Latency linear in number of actual failures 15

Analysis Compositional analysis: Properties of CUP used to prove properties of DAB: – Safety: CUP agreement and validity imply DAB atomic broadcast consistency guarantees. – Liveness: CUP safety and liveness properties (e.g., termination) imply DAB liveness properties (e.g., eventual delivery). – Latency: CUP decision bounds imply DAB message delay bounds. Message latency: – No failures: Constant, even when participants join and leave. – With failures: Linear in the number of failures. – Improves upon algorithms using group communication. 16

II. Reconfigurable Atomic Memory for Dynamic Distributed Environments [Lynch, Shvartsman 02] RAMBO 17

Reconfigurable Atomic Memory Implement atomic read/write shared memory in a dynamic network setting. – Participants may join, leave, fail. – Mobile networks, peer-to-peer networks. High availability, low latency. Atomicity for all patterns of asynchrony and change. Good performance under reasonable limits on asynchrony and change. Applications: – Battle data for teams of soldiers in military operation. – Game data for players in multiplayer game. 18

Approach: Dynamic Quorums Objects are replicated at several network locations. To accommodate small, transient changes: – Uses quorum configurations: members, read-quorums, write-quorums. – Maintains atomicity during stable situations. – Allows concurrency. To handle larger, more permanent changes: – – – – Reconfigure Maintains atomicity across configuration changes. Any configuration can be installed at any time. Reconfigure concurrently with reads/writes; no heavyweight view change. 19

RAMBO Reconfigurable Atomic Memory for Basic Objects (dynamic atomic read/write shared memory). Global service specification: RAMBO Algorithm: – – – – Reads and writes objects. Chooses new configurations, notifies members. Identifies, garbage-collects obsolete configurations. All concurrently. 20

RAMBO Algorithm Structure Main algorithm reconfiguration service Loosely coupled RRAMBO Recon service: Reco – Provides a consistent sequence of configurations. Main algorithm: n Recon Net – Handles reading, writing. – Receives, disseminates new configuration information; no formal installation. – Garbage-collects old configurations. – Reads/writes may use several configurations. 21

Main algorithm: Reading and Writing read, write new-config Recon Net Run a version of the standard static two-phase quorumbased read/write algorithm [Vitanyi, Awerbuch], [Attiya, Bar-Noy, Dolev]. Use all current configurations. 22

Static Read/write Protocol Quorum configuration: – read-quorums, write-quorums – For any R in read-quorums, W in write-quorums, R W . Replicate object at all locations. At each location, keep: – value – tag (sequence number, location) Read, write use two phases: – Phase 1: Read (value, tag) from a read-quorum – Phase 2: Write (value,tag) to a write-quorum Highly concurrent. Quorum intersection implies atomicity 23

Static Read/write Protocol Details Write at location i: – Phase 1: Read (value, tag) from a read-quorum. Determine largest seq-number among the tags that are read. Choose new-tag : (larger sequence-number, i). – Phase 2: Propagate (new-value, new-tag) to a write-quorum. Read at location i: – Phase 1: Read (value, tag) from a read-quorum. Determine largest (value,tag) among those read. – Phase 2: Propagate this (value,tag) to a write-quorum. Return value. 24

Dynamic Read/write Protocol Perform two-phase static protocol, using all current configurations. – Phase 1: Collect object values from read-quorums of current configurations. – Phase 2: Propagate latest value to write-quorums of current configurations. When new configuration is provided by Recon: – Start using it too. – Do not abort reads/writes in progress, but do extra work to access additional processes needed for new quorums. Our communication mechanism: – Background gossiping – Terminate by fixed-point condition, involving a quorum from each active configuration. 25

Removing Old Configurations Garbage-collect them in the background. Two-phase garbage-collection procedure: – Phase 1: Inform write-quorum of old configuration about the new configuration. Collect object values from read-quorum of the old configuration. – Phase 2: Propagate the latest value to a write-quorum of the new configuration. Garbage-collection concurrent with reads/writes. Implemented using gossiping and fixed points. 26

Implementation of Recon Uses distributed consensus to determine successive configurations 1, 2, 3, Recon Consensus Net Members of old configuration propose new configuration. Proposals reconciled using consensus. Consensus is a heavyweight mechanism, but: – Only used for reconfigurations, infrequent. – Does not delay read/write operations. 27

Consensus Implementation init(v) decide(v) init(v) Consensus Use a variant of timing-based Paxos algorithm [Lamport] Agreement, validity guaranteed absolutely (independent of timing). Termination guaranteed when underlying system stabilizes. Leader chosen using failure detectors; conducts two-phase algorithm with retries. 28

Analysis We prove atomicity for arbitrary patterns of asynchrony and change, using partial order methods. Analyze performance conditionally, based on failure and timing assumptions. E.g., under reasonable “steady-state” assumptions: – Removing old configurations takes time at most 6d. – Reads and writes take time at most 8d. LAN implementation [Musial 02]. 29

Other Approaches Use consensus to agree on total order of operations: [Lamport 89] – Not resilient to transient failures. – Termination of reads/writes depends on termination of consensus. Totally-ordered broadcast over group communication: [Amir, Dolev, Melliar-Smith, Moser 94], [Keidar, Dolev 96] – View formation takes a long time, delays reads/writes. – One change may trigger view formation. 30

III. Reliable Multicast Protocols [Livadas, Keidar, Lynch 01], [Livadas, Keidar 02], [Livadas, Lynch 02] 31

Physical System Model h1 r3 r1 h2 h3 r2 r6 h4 h5 r5 r4 h6 Infinite # of symmetric hosts i.e., same resources, processes Network of interconnected routers Failures: fail-stop host crashes and packet drops 32

Reliable Multicast Service (RMS) RM-Client1 RM-Client2 rm-leave rm-join rm-send rm-join-ack 11 1(p) 1 rm-recv2(p) RM( ) Overview: – Single reliable multicast group & single client process/host – RM( ) encompasses behavior of all other processes on hosts and functionality of underlying network – Parameter bounds the reliable delivery delay Membership: – A host becomes a member of the group upon the acknowledgment of its join request – A host ceases to be a member upon issuing a leave request 33

Multicast Reliability: Properties Let h,s be hosts and p,p’ be packets from s such that p p’ Eventual Delivery: If p’ remains active forever after its transmission, h delivers p, and h remains a member thereafter, then h delivers p’. Time-Bounded Delivery: Let T denote the time interval ranging from the transmission time t of p’ to the point in time time units past t. If p’ remains active throughout T, h delivers p prior to the expiration of T, and h remains a member thereafter within T, then h delivers p’ within T. 34

Reliable Multicast Implementation (RMI) Scalable Reliable Multicast (SRM) [Floyd et. al., 97] – Retransmission-based protocol using NACKs – Uses best-effort IP multicast as communication primitive Augment SRM so as to precisely specify: – when a host becomes a member of the group – which packets each member should attempt to recover 35

SRM’s Recovery Scheme Each host schedules a request for each missing packet Any capable host schedules a reply to each such request Duplicate requests/replies limited using deterministic and probabilistic suppression schemes repl h rqst h’ s 36

RMI Timed I/O Automaton Model RM-Client1 RM-Client2 RM-rep1 RM-mem1 RM-rep2 RM-mem2 RM-IPbuff1 RM-rec1 RM-IPbuff2 RM-rec2 IP-mcast 37

Analysis of RMI Correctness Analysis: RMI implements RMS; i.e., RMI delivers appropriate packets to appropriate members of the reliable multicast group as dictated by RMS. Conditional Timeliness Analysis: Presuming no leaves, no crashes, bounded transmission latencies and latency estimates, bounded loss detection delays, and a fixed number k of packet drops per packet transmission/recovery, packets are guaranteed delivery within particular delivery delay upper bound (k). 38

Byproduct of RMI Timeliness Analysis Constraints on SRM scheduling parameters – C3 C1 : back-off abstinence does not affect next round requests – D1 D2 2 2 C1: replies received prior to transmission of next round requests – D1 D2 D3 2 C1: requests not discarded due to prior round reply abstinence Violating these guidelines may lead to superfluous traffic and unwarranted recovery round failure 39

Caching-Enhanced SRM (CESRM) Enhance SRM with caching scheme – determines and caches optimal requestor/replier pair for each loss – expedites recovery of losses based on requestor/replier pair cache exp-repl h h’ exp-rqst s 40

CESRM Timed I/O Automaton Model RM-Client1 RM-Client2 RM-rep1 RM-mem1 RM-rep2 RM-mem2 RM-IPbuff1 RM-rec1 RM-IPbuff2 RM-rec2 IP-mcast IP-ucast 41

CESRM: Conditional Timeliness Analysis Definition: A cache hit corresponds to a recovery scenario in which: – hosts that share the loss also share optimal requestor-replier pair, – the optimal requestor shares the loss, and – the optimal replier does not share the loss. Claim: For any execution where no recovery packets are dropped, cache hits lead to packet recovery within at most: DET-BOUND dreorder-delay 2d as opposed to: DET-BOUND (C1 C2)d d (D1 D2)d d For C1 C2 D1 D2 1, worst-case recovery delay 42 following detection reduced from 3 RTT to 1

Estimating the Frequency of Cache Hits Analyzed 14 multicast transmission traces [Yajnik et al. 95/96] On average, 1/3 of losses recoverable by expedited recoveries More precise identification of loss locations may lead to the recovery of 1/2 of losses by expedited recoveries Abstract loss location representationActual loss location representation 43

IV. Modeling Framework To support all this analysis, we need a well-designed mathematical foundation, capable of: – Describing all the algorithms we want to consider. – Supporting compositional and conditional analysis. We use a framework based on interacting state machines. – Basic asynchronous model (I/O automata) – Augmented models: Timed, hybrid (continuous/discrete), probabilistic. 44

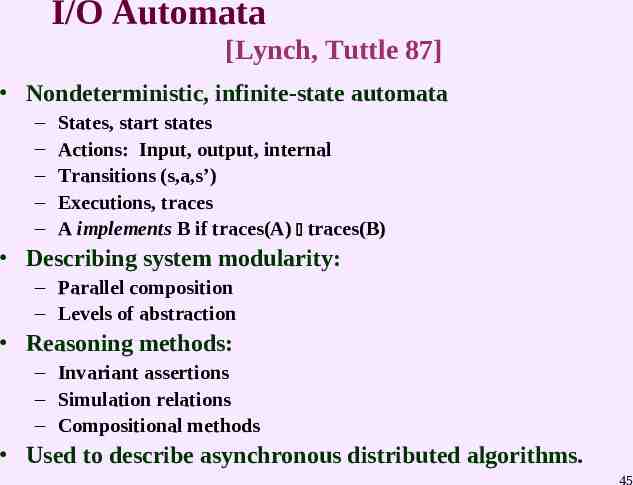

I/O Automata [Lynch, Tuttle 87] Nondeterministic, infinite-state automata – – – – – States, start states Actions: Input, output, internal Transitions (s,a,s’) Executions, traces A implements B if traces(A) traces(B) Describing system modularity: – Parallel composition – Levels of abstraction Reasoning methods: – Invariant assertions – Simulation relations – Compositional methods Used to describe asynchronous distributed algorithms. 45

Timed I/O Automata (TIOA) [Merritt, Modugno,Tuttle], [Lynch, Vaandrager] Add time-passage actions Used to describe: – Timeout-based algorithms. – Local clocks, clock synchronization. – Timing/performance characteristics. 46

Hybrid I/O Automata (HIOA) [Lynch, Segala, Vaandrager 01, 02] Automata with continuous and discrete transitions – – – – – – – States: Input, output, internal variables; start states Actions: Input, output, internal Discrete transitions (s,a,s’) Trajectories , mapping time intervals to states Execution 0 a1 1 a2 2 Trace: Project on external variables, external actions. A implements B if traces(A) traces(B). Composition, levels of abstraction. Invariants, simulation relations, compositional reasoning Used to describe: – Controlled systems – Automated transportation systems – Embedded systems 47

Timed I/O Automata (TIOA), Revisited [Lynch, Segala, Vaandrager, Kirli] Have reformulated TIOA as a special case of HIOA: – No external variables: states consist of internal variables only. Use trajectories to describe time-passage, instead of timepassage actions. Monograph on modeling timed systems: – – – – Theory Analysis methods Examples Relationships with other timed models [Alur, Dill], [Merritt, Modugno, Tuttle], [Maler, Manna, Pnueli] 48

Probabilistic Automata (PIOA, PTIOA) [Segala 95] [Segala, Vaandrager, Lynch 02] Add probabilistic transitions (s,a, ) Work in progress [Segala, Vaandrager, Lynch], [de Alfaro, Henzinger]: – External behavior notion. – Composition theorems. – Implementation relationships Used to describe: – Probabilistic and nondeterministic behavior. – Randomized distributed algorithms – Systems with probabilistic assumptions 49

V. Plans for the Next Two Years 50

Plans: Distributed Algorithms Reconfigurable atomic memory – LAN implementation [Musial, Shvartsman] – More analysis: “Normal behavior” starting from some point – Algorithmic improvements: Concurrent garbage-collection [Gilbert] Reduced communication Better join protocol Faster reads – Extensions: “Leave” protocol Backup strategies for when configurations fail Support for choosing configurations 51

Plans: Distributed Algorithms Reliable multicast protocols [Livadas]: – Extend SRM analysis to handle nodes leaving and failing. – Finish CESRM analysis. – Analyze LMS protocol [Papadopoulos, Varghese 98]. Mobile systems: – – – – – Topology control [Hajiaghayi, Mirrokni] Time synchronization Tracking Resource allocation Data management Peer-to-peer systems [Lynch, Stoica]: – Location services that are provably fault-tolerant under reasonable steady-state assumptions. – Data management over location services 52

Plans: Semantic Framework Timed models: – Composition theorems for timing properties. – Structured TIOAs to support conditional performance analysis. – Relate TIOA to other models, e.g., reactive modules [Alur, Henzinger]. Probabilistic models: – Composition theorems [de Alfaro, Henzinger] Integrate timed and probabilistic models into one semantic framework. 53