Case-based reasoning and learning Prof. Gheorghe Tecuci Learning

32 Slides332.50 KB

Case-based reasoning and learning Prof. Gheorghe Tecuci Learning Agents Laboratory Computer Science Department George Mason University 2002, G.Tecuci, Learning Agents Laboratory 1

Overview Introduction Protos: A case-based reasoning and learning system Knowledge representation and organization Learning Recommended reading 2002, G.Tecuci, Learning Agents Laboratory 2

Case-based reasoning Case-Based Reasoning (CBR) is a name given to a reasoning method that uses specific past experiences rather than a corpus of general knowledge. It is a form of problem solving by analogy in which a new problem is solved by recognizing its similarity to a specific known problem, then transferring the solution of the known problem to the new one. CBR systems consult their memory of previous episodes to help address their current task, which could be: -planning of a meal, -classifying the disease of a patient, -designing a circuit, etc. 2002, G.Tecuci, Learning Agents Laboratory 3

Classification tasks What is a classification? Classification is assigning a given input to one of the categories in a preenumerated list. Many case-based reasoning systems perform classification tasks. 2002, G.Tecuci, Learning Agents Laboratory 4

Case-based reasoning for classification tasks Case-based reasoning for classification is a kind of instance-based learning, where the instances have a more complex (structural) description. In CBR systems, a concept ci is represented extensionally as a collection of examples (called exemplars or cases) ci {ei1, ei2, .}. Then ‘a’ belongs to the concept ci if ‘a’ is similar to an element eij of ci, and this similarity is greater than the similarity between ‘a’ and any other concept exemplar. 2002, G.Tecuci, Learning Agents Laboratory 5

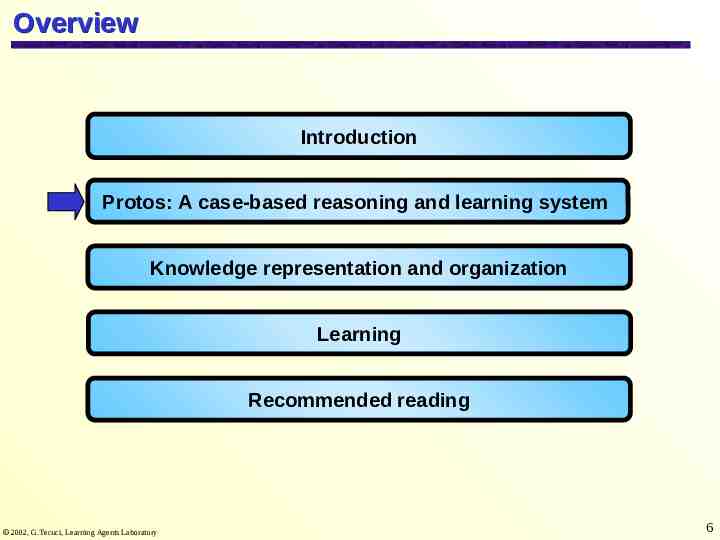

Overview Introduction Protos: A case-based reasoning and learning system Knowledge representation and organization Learning Recommended reading 2002, G.Tecuci, Learning Agents Laboratory 6

The PROTOS system Protos is a case-based problem solving and learning system for heuristic classification tasks. The main features of the system will be presented in the context of a task for the classification of hearing disorders. In Protos, a concept ci is represented extensionally as a collection of examples (called exemplars or cases): ci {ei1, ei2, .}. Classifying an input NewCase involves searching for a concept exemplar ejk that strongly matches NewCase. If such an exemplar is found then Protos asserts that NewCase belongs to the concept cj (the concept whose exemplar is ejk). 2002, G.Tecuci, Learning Agents Laboratory 7

The classification and learning algorithm Input: a set of exemplar-based categories C {c1, c2, . , cn} and a case (NewCase) to classify. REPEAT Classify: Find an exemplar of ci C that strongly matches NewCase and classify NewCase as ci. Explain the classification. Learn: If the expert disagrees with the classification or explanation then acquire classification and explanation knowledge and adjust C so that NewCase is correctly classified and explained. UNTIL the expert approves the classification and explanation. 2002, G.Tecuci, Learning Agents Laboratory 8

The PROTOS system: explaining a classification C is the set of all concepts recognized by the system, each concept being represented extensionally as a set of representative exemplars. How could one explain the classification of a case to a concept? Explaining the classification involves showing the line of reasoning used during matching. Which would be a simple type of explanation? 2002, G.Tecuci, Learning Agents Laboratory 9

Explaining a classification (cont.) Which would be a simple type of explanation? The simplest explanation is a list of the matched features of the case and the exemplar. Which would be a more detailed explanation? A more detailed explanation may include justifications of the flexible matches performed as, for instance, in the case of classifying chairs: 'pedestal' was matched with 'legs(4)' because both are specializations of seat support" or 'seat' was matched with 'backrest' because seat enables 'hold(person)' and backrest enables 'hold(person)' 2002, G.Tecuci, Learning Agents Laboratory 10

The PROTOS system: learning When would a CBR system like PROTOS need to learn? When it makes mistakes. What kind of mistakes could PROTOS make? Errors of classification and errors of explanation. How could it learn? 2002, G.Tecuci, Learning Agents Laboratory 11

The PROTOS system: learning How could it learn? Adjust the categories so that the case will be properly classified and explained. Which is a simple way to assure that the case will be correctly classified in the future? Add the case to the correct category as a new exemplar. 2002, G.Tecuci, Learning Agents Laboratory 12

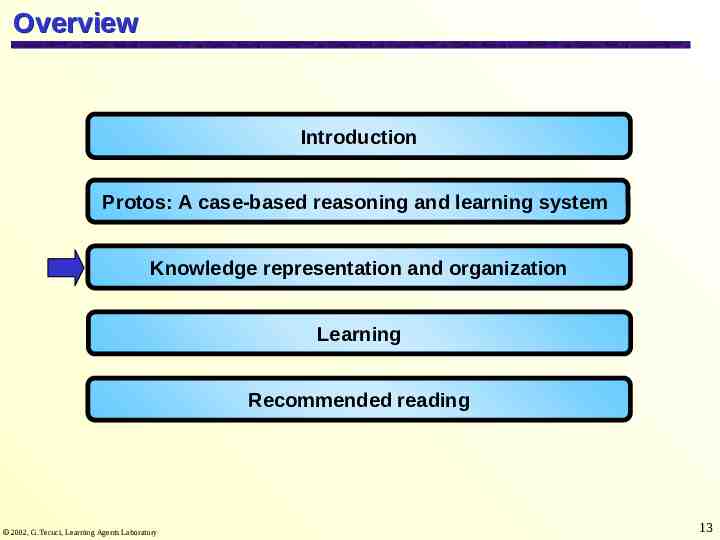

Overview Introduction Protos: A case-based reasoning and learning system Knowledge representation and organization Learning Recommended reading 2002, G.Tecuci, Learning Agents Laboratory 13

Knowledge representation and organization In Protos, the exemplars and the cases to be classified are represented as collections of features. The description of a case (or exemplar) may be incomplete, in the sense that it does not include some of the features present in other case descriptions. Also, the features with which cases are described may not directly indicate category membership. Therefore, one has to make inferences. 2002, G.Tecuci, Learning Agents Laboratory 14

Sample case description Case to be classified as belonging to one of the categories: {normal ear, cochlear noise, cochlear age, otitis media, .}: NewCase: sensorineural: mild notch at 4K history: noise speech: normal oc acoustic reflex: normal oi acoustic reflex: elevated i acoustic reflex: normal c acoustic reflex: normal static: normal tympanogram: a air: normal 2002, G.Tecuci, Learning Agents Laboratory 15

Organization of the exemplars and concepts remindings feature-j . w1 feature-k censors feature-m . feature-n w2 concept-j concept-i strongly prototypical exemplar exemplar-1 of concept-i 2002, G.Tecuci, Learning Agents Laboratory weakly prototypical exemplar difference(feature-p) exemplar-2 of concept-i 16

Remindings and Censors Remindings associate features with categories or particular exemplars. Such associations provide Protos with hypotheses during classification, which restrict its search for a matching exemplar. For example, "air: normal" would be a reminder of the category "normal ear". Remindings are compiled from explanations of the relevance of features to categories or exemplars. A reminding has a strength that estimates the conditional probability p(category feature) or p(exemplar feature). Censors are negative remindings. A censor is a feature that tends to rule out a classification. For example, "temperature: fever" would be a censor for the category "healthy patient". 2002, G.Tecuci, Learning Agents Laboratory 17

Prototypicality and difference links Prototypicality ratings provide a partial ordering on exemplars within a category. Exemplars of a category which have the highest family resemblance (i.e. are most similar to other members of the category) are most prototypical. A difference link connects two exemplars (in the same or different categories) and records important featural differences between them which may suggest alternate classifications and better exemplars for use during classification. 2002, G.Tecuci, Learning Agents Laboratory 18

Case classification Hypothesize classifications based on the case's features by using remindings and censors. The remindings and censors associated with the features of a new case are combined to produce an ordered list of possible classifications. Attempt to confirm a hypothesis by matching the new case with prototypical exemplars. A process of knowledge-based pattern matching determines the similarity of the case and each exemplar. It uses previously acquired domain knowledge to explain how features of the case provide the same evidence as features of the exemplar. Overall similarity of the two cases is asserted by evaluating the quality of the resulting explanation and the importance of unmatched features. If a match is imperfect, Protos searches for a more similar exemplar by traversing difference links associated with the current exemplar. If the match is strong (i.e., adequately explained), it is presented to the user for approval and discussion. If it is weak, Protos considers other hypotheses and exemplars. It reports failure if its hypotheses are exhausted without finding an adequate match. 2002, G.Tecuci, Learning Agents Laboratory 19

Use of reminders to suggest classifications of input Some of the features of NewCase are reminders for two possible diagnosis, "normal ear" and "cochlear age“: sensorineural: mild notch at 4K history: noise speech: normal oc acoustic reflex: normal oi acoustic reflex: elevated i acoustic reflex: normal c acoustic reflex: normal static: normal tympanogram: a air: normal 2002, G.Tecuci, Learning Agents Laboratory normal ear cochlear age 20

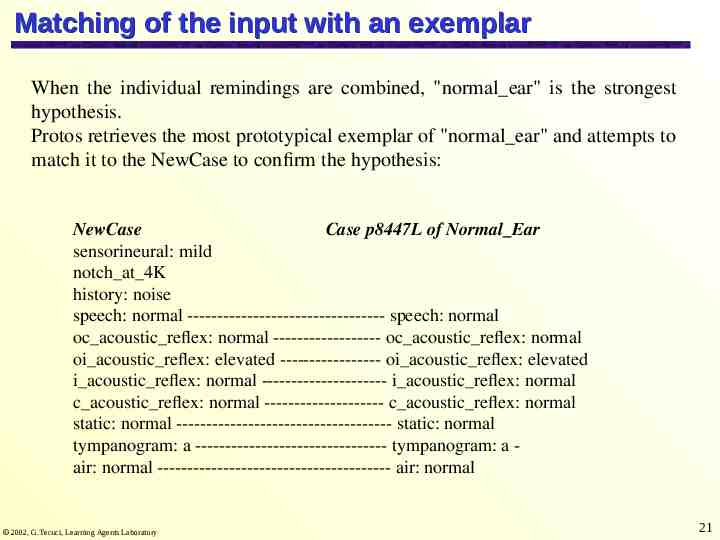

Matching of the input with an exemplar When the individual remindings are combined, "normal ear" is the strongest hypothesis. Protos retrieves the most prototypical exemplar of "normal ear" and attempts to match it to the NewCase to confirm the hypothesis: NewCase Case p8447L of Normal Ear sensorineural: mild notch at 4K history: noise speech: normal --------------------------------- speech: normal oc acoustic reflex: normal ------------------ oc acoustic reflex: normal oi acoustic reflex: elevated ----------------- oi acoustic reflex: elevated i acoustic reflex: normal --------------------- i acoustic reflex: normal c acoustic reflex: normal -------------------- c acoustic reflex: normal static: normal ------------------------------------ static: normal tympanogram: a -------------------------------- tympanogram: a air: normal --------------------------------------- air: normal 2002, G.Tecuci, Learning Agents Laboratory 21

Overview Introduction Protos: A case-based reasoning and learning system Knowledge representation and organization Learning Recommended reading 2002, G.Tecuci, Learning Agents Laboratory 22

Protos-teacher dialog Protos believes the match to be strong since all the exemplar's features are matched. However the teacher rejects the classification of the NewCase as a "normal ear". Dialog with the user helps Protos to improve its knowledge. Protos asks about the features of the NewCase that were not matched by the exemplar and is told that all are incompatible with "normal ear". How could this information be used? This information will be used to define a difference link associated with the “normal ear” exemplar, which will point toward the current (but not yet known) classification of the NewCase. Protos also asks whether the exemplar has additional features that discriminate it from NewCase, but the teacher does not identify any. 2002, G.Tecuci, Learning Agents Laboratory 23

Protos-teacher dialog (cont.) Protos then tries to confirm its second hypothesis, "cochlear age", but it fails to find a good match with any exemplar. This means that Protos exhausted all the hypotheses. What can be done in this situation? Protos asks the user to classify the NewCase and it is told that the classification is "cochlear noise". Since the system has no exemplar of this category, NewCase is retained as an exemplar. 2002, G.Tecuci, Learning Agents Laboratory 24

Protos-teacher dialog (cont.) Dialog with the user helps Protos to acquire general knowledge of "cochlear noise". Protos asks the teacher to explain the relevance of each case feature to the classification and receives explanations such as: "history:noise is required by cochlear noise" How could this information be used? The feature “is required” indicates Protos to define "history:noise" as a strong remainding to "cochlear noise". 2002, G.Tecuci, Learning Agents Laboratory 25

Protos-teacher dialog (cont.) Another explanation is: "notch at 4k is usually caused by cochlear noise" How could this information be used? The relationship “is usually caused” indicates to define "notch at 4k" as a less strong remainding to "cochlear noise". Protos provides a set of relationships that the user may use in explanations. Protos also installs a difference link between the exemplar p8447L of the "normal ear" and the NewCase and annotates it with the features of NewCase that the teacher has previously stated that are incompatible with normal ear. 2002, G.Tecuci, Learning Agents Laboratory 26

The role of explanations in Protos What role do explanations play in learning? Explanations describe the relevance of exemplar features to categories. From such explanations Protos extracts remindings and assesses their strength. Such an explanation is: "history:noise is required by cochlear noise" Explanations describe how different features provide equivalent evidence for classification. Such explanations provide knowledge to match features that are not identical. Examples of such explanations are: notch at 4k is definitionally equivalent to notch 4k or if the category is cochlear noise then c acoustic reflex: normal is sometimes interchangeable with c acoustic reflex: elevated 2002, G.Tecuci, Learning Agents Laboratory 27

The learning algorithm GIVEN: FIND: a new case a classification of the case and an explanation of the classification Search for an exemplar that matches the new case IF not found THEN {classification failure} Ask teacher for classification Acquire explanations relating features to classification Compile remindings Retain case as an exemplar ELSE IF the teacher disapproves THEN {discrimination failure} Reassess remindings Discuss featural matches with the teacher Ask for discriminating features Remember unmatched features to add difference link ELSE {classification is correct} Increase exemplar's prototypicality rating IF match is incompletely explained THEN {explanation failure} Ask the teacher for explanation of featural equivalence IF not given THEN Retain case as exemplar ELSE {processing was successful} 2002, G.Tecuci, Learning Agents Laboratory 28

Exercise At a high level of generality, CBR systems can be described as performing the following 4-step process: 1. Retrieve the most similar case 2. Use the case to produce a tentative solution of the input problem 3. Revise the proposed solution 4. Learn from this experience to improve performance in the future. Explain how each of this step is performed in Protos. 2002, G.Tecuci, Learning Agents Laboratory 29

Exercise What is the difference between instance-based learning, on one hand, and case-based reasoning and learning, on the other hand? What are the relative characteristic features of instance-based learning? Instance-based learning: -is a special (simplified) type of case-based reasoning for classification tasks; -uses a simple (feature-vector) representation of the exemplars; -compensates the lack of guidance from general domain knowledge by using a large number of examples. What are the relative characteristic features of CBR? Case-based reasoning and learning: -is used for other tasks besides classification; -in general, a case has a complex structure, not just a feature vector; -a retrieved case is generally modified, when applied to a new problem; -utilizes general domain knowledge. 2002, G.Tecuci, Learning Agents Laboratory 30

Exercise What is the difference between case-based reasoning, on one hand, and analogical reasoning, on the other hand? What are the relative characteristic features of case-based reasoning? All the cases are from the same domain. Therefore it does not need to deal with the ACCESS problem. It is generally regarded as a special type of analogical reasoning. What are the relative characteristic features of analogical reasoning? The sources and the target are generally from different domains. 2002, G.Tecuci, Learning Agents Laboratory 31

Recommended reading reading Recommended Porter B.W, Bareiss R., Holte R.C., Concept Learning and Heuristic Classification in Weak-Theory Domains, in Readings in Knowledge Acquisition and Learning, Morgan Kaufmann, 1992. Bareiss R., Porter B.W, Murray K.S., Supporting Start-to-Finish Development of Knowledge Bases, Machine Learning, 4, 259-283, 1989. Bareiss R., Exemplar-Based Knowledge Acquisition, Academic Press, 1989. Aamodt A., Knowledge Acquisition and learning by experience – the role of casespecific knowledge, in Tecuci G. and Kodratoff Y. (eds.), Machine Learning and Knowledge Acquisition: Integrated Approaches, pp.197-245, Academic Press, 1995. Sycara K. Miyashita K., Learning control knowledge through case-based acquisition of user optimization preferences in ill-structured domains, in Tecuci G. and Kodratoff Y. (eds.), Machine Learning and Knowledge Acquisition: Integrated Approaches, pp. 247-275, Academic Press, 1995. 2002, G.Tecuci, Learning Agents Laboratory 32