CAOC Performance Assessment System “CPAS” Information Age


CAOC Performance Assessment System “CPAS”
Information Age Metrics Working Group
26 October 2005
Johns Hopkins University Applied Physics Laboratory
Precision Engagement - Operational Command & Control
Technical Manager: Glenn Conrad – (240) 228-5031 – [email protected]
Project Manager: F.T. Case – (240) 228-8740 – [email protected]

Background
The CAOC performance assessment system (CPAS) project evolved from the FY05 C2ISRC-sponsored project DeCAIF.
505th OS leadership identified the need for an “ACMI-like” capability for the CAOC-N instructor cadre. The system should be capable of:
– Tracking targets through the F2T2EA “kill chain”
– Highlighting procedural errors that occur during execution
– Automatically generating the CAOC-N’s debriefing “shot sheet”
APL was tasked to develop and demonstrate a prototype CAOC “instrumentation” capability that would support TST operations at the CAOC-N and the TBONE initiative.
C2 Battle Lab leadership approved APL’s proposed CPAS project as an initiative in Jan 05.
APL worked with CAOC-N subject matter experts (SMEs) on the spiral development of CPAS from Feb 05 – Aug 05 to establish the required info items and the look & feel of how CPAS would display them.
APL engineers demonstrated the functional CPAS X-1 prototype at CAOC-N during RED FLAG 05-4.2 (mid-Aug 05) and the X-2 prototype at the C2 Transformation Center during TBONE LOE#5 (JEFX 06 Spiral 0) in Sep 05.

CAOC PERFORMANCE ASSESSMENT SYSTEM (CPAS)
2/14/2003
CAOC PAS:
– Demonstrates a prototype “ACMI-like” instrumentation capability for the Falconer instructor cadre.
– Enables near-real-time, end-to-end performance assessment and reconstruction of TST operations.
– Provides a means to collect and store time-stamped information in near real time during TST operations.
– Develops and refines filters and algorithms that provide key information items required for Falconer crewmember situational awareness and performance assessment.
– Provides an end-user-defined, near-real-time presentation of time-lined activity information for individuals and groups during post-mission operations.
[Figure: TST cell positions. Labels include TST Cell Chief, Dep TST Chief, Dep SIDO, C2 Duty Officer, Attack Cord (N/S/W), Rerole Cord (N/S/W), Targeteer (N/S/W), BDA, SOLE FIRES, Surf Track Cord, Marine DCO, Navy, RPTS, DTRA, SOF (US, UK, Coalition), RAAF, and UK seats]

Project Objective
Demonstrate a capability to reconstruct and display events and decisions that occur within a TST cell to support post-mission assessment.
GOALS
– Develop a “non-intrusive” data capture (collection and archiving) capability for TST-related information at CAOC-N
– Develop a reconstruction capability that will portray event and decision activities that occurred during the execution of TST ops
– Develop an information injection capability that enables an instructor or assessor to input comments/observations into the data archive
– Implement the CAOC Assessment System capability at CAOC-N and demonstrate it during Red Flag 05-4.2 (Sep 2005)
– Develop initial capability to work with the C2B TBONE initiative and CAOC processes using TBONE

CPAS Operational Architecture for CAOC-N
[Figure: architecture diagram. Labels: NELLIS Range Operations, CAOC-N network, CPAS DR, CPAS Algorithms, CPAS Presentation Display, Instructor Injection Capability, Individual Presentation, Group After Action Review (AAR), O-O-D-A, and the TST cell positions]

CPAS Prototype Software
Developed in Microsoft Visual Studio (IDE) using the Microsoft .NET software framework
20K lines of C# and C code; runtime executable 2 MB
Built on top of MS Access (DBMS)
3 logical constructs of software: collectors, algorithms, user interface
Layered software architecture for both CAOC-N and TBONE
[Figure: layered architecture (Presentation Layer over Data Layer) for CAOC-N (X-1) and TBONE LOE #5 (X-2). Labels: CAOC-N LAN, Jabber Server, ADOCS Server, Database, IMTDS Link 16 Collector, Jabber Chat Collector, ADOCS Collector, WEEMC Collector, CPAS Algorithms, Exported WEEMC Msn History File]
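The collector / algorithm / user-interface split can be illustrated with a minimal sketch. This is not the CPAS code (which the slide describes as C# and C on MS Access); it assumes a single time-stamped event table and uses Python's built-in sqlite3 as a stand-in database. The table layout and the function names open_archive, collect, and timeline are hypothetical.

```python
import sqlite3
from datetime import datetime, timezone

def open_archive(path=":memory:"):
    """Open the shared archive (hypothetical schema: one row per collected event)."""
    db = sqlite3.connect(path)
    db.execute(
        "CREATE TABLE IF NOT EXISTS events ("
        "ts TEXT NOT NULL, source TEXT NOT NULL, target_id TEXT, payload TEXT NOT NULL)"
    )
    return db

def collect(db, source, payload, target_id=None, ts=None):
    """Collector side: store one time-stamped record from a named source."""
    ts = ts or datetime.now(timezone.utc)
    db.execute(
        "INSERT INTO events VALUES (?, ?, ?, ?)",
        (ts.isoformat(), source, target_id, payload),
    )
    db.commit()

def timeline(db, target_id):
    """Algorithm side: return one target's records in time order for display."""
    rows = db.execute(
        "SELECT ts, source, payload FROM events WHERE target_id = ? ORDER BY ts",
        (target_id,),
    )
    return rows.fetchall()
```

Each collector only appends records, so a new data source can be added without touching the reconstruction or display code. Sorting on the ISO 8601 text assumes every collector writes timestamps in the same time zone and format.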

CPAS Functionality
– Maintains Status Monitor of Targets in Near Real-Time
– Summary of Procedural Errors
– ADOCS “Status Light” Slide Bar
– ADOCS “Remarks” Search Engine
– Calculates TST measures and metrics of interest
– Creates and Exports Summary of Target Activity
– “Drill-down” to F2T2EA Details for any Target
– Elementary search engine for IM chat dialogue

CPAS X-1 Demo
[Figure labels: CPAS, ADOCS Server, ADOCS Client]

Next Steps in CPAS Evolution
APL Focus
– Develop additional “collectors” and “correlation/fusion algorithms” for integration into CPAS X-1 and/or X-2 framework(s)
CAOC-N Focus
– Employ CPAS X-1 at CAOC-N during AWC sponsored exercises and training events
– Develop and deliver CPAS V-1.0 capability to CAOC-N and evolve V-1 as required
AFRL/HE Focus
– Incorporate the CPAS X-1 “data collection instrument” with AFRL/HE’s (Mesa) subjective evaluation capability (Performance Measurement System) to provide an enhanced AOC Performance Measurement System
AFEO Focus
– Evolve and employ CPAS X-2 during JEFX Spiral 2/3/MainEx in support of AFEO assessment team
– Develop and deliver CPAS V-2.0 capability for use by AFEO assessment team during JEFX 2006 MainEx
– Work with JFIIT to integrate CPAS V-2.0 with WAM to provide an enhanced data collection and presentation capability for use during JEFX 2006 MainEx

BACKUP SLIDES

Why a CAOC Assessment System?
The Problem:
– There is no efficient means to collect, store, correlate, and retrieve key information items needed to reconstruct a time history of activities and events that occur during real-time CAOC operations to answer the question “What happened?”
The Need: (C2-C-N-06-05 & C2-A-N-02-03)
– A means to capture and archive time-stamped information from multiple CAOC operational sources,
– Algorithms to support correlation of events, and
– A means to visually portray both activities performed and decisions made for both operators and instructors alike.
The Payoff:
– Enable experts to assess what happened during training and operational sessions
– Provide the information required to improve process shortfalls, identify training needs, and highlight the things that are being done right
– Support archiving for future after-action review (AAR)

CPAS Spiral Development
[Figure: spiral development diagram. Dates shown: Feb 05, Mar 05, May 05, Aug 05, Sep 05. Labels: Prototype Screens; Updated Screens; Prototype DR; Updated DR; Completed DR; Robust DR; Refine Presentation; Initial Algorithms; Procedural Algorithms; Final Presentation; KA with CAOC-N Instructor Cadre; KA on Presentation Prototype; CAOC-N Integration Test #2 (SP2A); CAOC-N DR; CAOC-N Algorithms; CAOC-N Presentation; CAOC-N Functional Demo; TBONE Interface Research; WEEMC Data; TBONE Functional Demo]

TST Process @ CAOC-N
1. White Force (instructors) provide initial input to TST cell on potential target
2. Potential target info entered into ADOCS TDN manager and Intel develops target
3. Target is classified as a TST and entered into ADOCS TDL list
4. Targeting data is developed for target folder by Intel and targeting
5. Attack Coordinator searches ATO for available weapon platforms to be tasked to engage TST.
6. Nominations are provided to TST cell chief who approves pairing.
7. Positive target ID is accomplished by the Intel cell and SODO approves tasking order.
8. Tasking order (15-line text msg) is drafted by C2DO & GTC and transmitted via Link-16 or voice to controller (AWACS)
9. AWACS acknowledges receipt and passes info to weapon platform who accepts or rejects tasking.
10. Acknowledgment is provided to C2DO with estimated TOT from the weapon platform
11. Target is attacked
12. Weapon platform provides MISREP to AWACS from fighter with actual TOT and BDA (if available).
13. Target kill chain is complete
[Figure: CAOC-N positions involved in the TST process. Labels: the TST cell positions, plus CCO, White Force, SADO, BCD, SOLE, SIDO, MARLO, ACF (Analysis, Coord, Fusion), IDO, ISR, SCIF (SIGINT, MASINT, IMINT), SODO, NALE, TST Ch, GTC, C2DO, ATK COORD, Target Duty OFC (TDO), Tgt’eer, Wpn’eer]
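CPAS is described as tracking targets through the F2T2EA kill chain and highlighting procedural errors. A minimal sketch of one such check follows. The two rules it applies (a phase skipped before a later recorded phase, and a phase time-stamped earlier than the preceding recorded phase) are assumptions for illustration, not the CPAS algorithms. The name procedural_errors and the input layout are hypothetical.

```python
# F2T2EA phases in their expected order.
KILL_CHAIN = ["find", "fix", "track", "target", "engage", "assess"]

def procedural_errors(milestones):
    """Check one target's milestones for skipped or out-of-order phases.

    milestones maps a phase name to the time it was recorded (any comparable
    value, e.g. minutes since exercise start). Returns a list of messages.
    """
    errors = []
    recorded = [p for p in KILL_CHAIN if p in milestones]
    if not recorded:
        return errors

    # Rule 1 (assumed): every phase before the latest recorded one must exist.
    last_index = KILL_CHAIN.index(recorded[-1])
    for phase in KILL_CHAIN[:last_index]:
        if phase not in milestones:
            errors.append(f"{phase}: skipped")

    # Rule 2 (assumed): recorded phases must not go backwards in time.
    for earlier, later in zip(recorded, recorded[1:]):
        if milestones[later] < milestones[earlier]:
            errors.append(f"{later}: recorded before {earlier}")
    return errors
```

For example, a target with find at minute 0, fix at minute 5, target at minute 3, and engage at minute 10 yields two messages: the track phase was skipped, and target was recorded before fix.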

Reactive Targeting Coordination Card

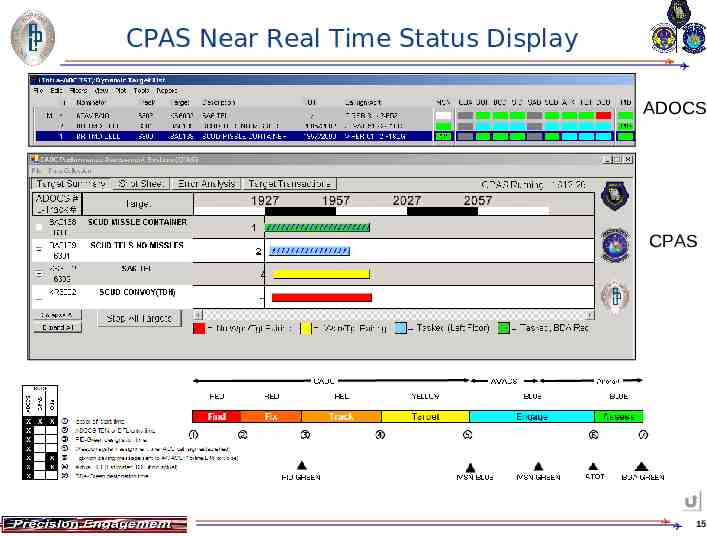

CPAS Near Real Time Status Display
[Figure labels: ADOCS, CPAS]

Generates Summary of Process Errors

“Drill-down” to Kill-Chain Details for any Target

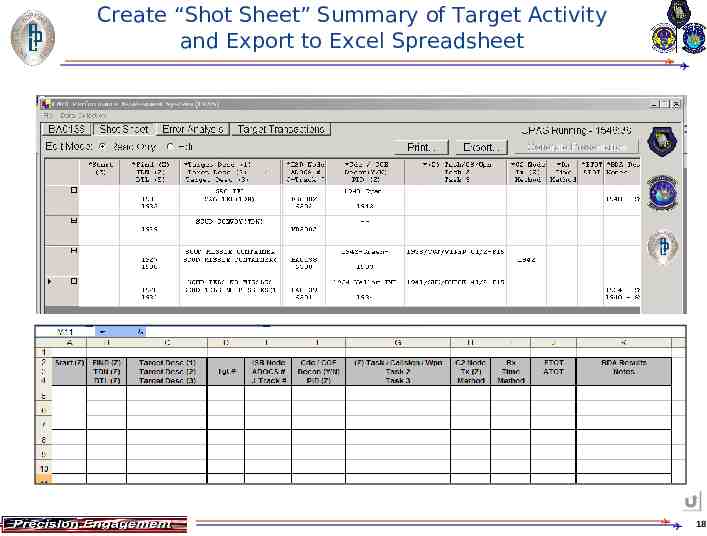

Create “Shot Sheet” Summary of Target Activity and Export to Excel Spreadsheet

IM Chat Correlation and Search
Correlates milestone events with IM chat dialogue

Chat Log Search and Catalogue
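One way to correlate milestone events with IM chat dialogue is to pull the chat lines that fall within a time window around each milestone. The sketch below assumes that approach; the slides do not say how CPAS performs the correlation. The two-minute window, the tuple layouts, and the names correlate_chat and search_chat are hypothetical.

```python
from datetime import datetime, timedelta

def correlate_chat(milestones, chat_log, window=timedelta(minutes=2)):
    """Map each milestone label to the chat entries within +/- window of it.

    milestones: list of (timestamp, label)
    chat_log:   list of (timestamp, sender, text)
    """
    result = {}
    for m_ts, label in milestones:
        result[label] = [e for e in chat_log if abs(e[0] - m_ts) <= window]
    return result

def search_chat(chat_log, keyword):
    """Elementary case-insensitive keyword search over the chat text."""
    needle = keyword.lower()
    return [e for e in chat_log if needle in e[2].lower()]
```

A fixed window is the simplest choice and will pick up unrelated chatter during busy periods. Filtering the windowed lines by target name with search_chat is one way to narrow the result.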

Measures & Metrics
These were measures of interest for CAOC-N instructors at RED FLAG 05-4.2:
– Number of targets prosecuted listed by their priority number
– Total number of targets prosecuted
– Average time of target prosecution
– Average time a target stayed in each kill chain segment
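The four measures can be computed from per-target records, as in the sketch below. It assumes each target carries a priority number and a duration in minutes for each kill chain segment it passed through, and it takes prosecution time to be the sum of those durations. That definition, the record layout, and the name tst_measures are assumptions, not taken from CPAS.

```python
from collections import Counter, defaultdict
from statistics import mean

def tst_measures(targets):
    """Compute the four measures from a non-empty list of target records.

    Each record is a dict with:
      "priority": int
      "segments": dict mapping segment name -> minutes spent in that segment
    """
    by_priority = Counter(t["priority"] for t in targets)

    # Gather per-segment durations across all targets.
    per_segment = defaultdict(list)
    for t in targets:
        for segment, minutes in t["segments"].items():
            per_segment[segment].append(minutes)

    return {
        "targets_by_priority": dict(by_priority),
        "total_targets": len(targets),
        # Assumed definition: prosecution time = sum of segment durations.
        "avg_prosecution_min": mean(sum(t["segments"].values()) for t in targets),
        "avg_segment_min": {s: mean(v) for s, v in per_segment.items()},
    }
```

Segment averages are taken only over targets that actually have that segment recorded, so a target that never reached a later segment does not pull that segment's average toward zero.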

TST Measures of Interest*
1. Detected entities correctly identified (%)
2. Detected entities incorrectly identified (%)
3. Detected entities reported without sufficient ID (%)
4. Source of detection and ID (entity name or node)
5. Source of ID error (entity name or node)
6. Detection to ID (elapsed time)
7. ID to target nominated (elapsed time)
8. Target nominated to target approved (elapsed time)
9. Target approved to weapon tasked (elapsed time)
10. Positional accuracy of reported targets coordinates (TLE)
11. ISR reported targets on Track Data Nominator (quantity)
12. ISR reported targets on ITM-Dynamic Target List (quantity)
13. Completeness of ISR reported targets (%)
14. Targets tasked to shooters (quantity)
15. Targets tasked to shooters via data link (quantity)
16. Targets tasked to shooters via voice (quantity)
17. Targets tasked to shooters via both data link and voice (quantity)
18. Target location provided verbally to shooters via coordinates or bullseye (quantity)
19. Target location provided verbally to shooters via talk-on from geographical features (quantity)
20. Target location provided via digital cueing, e.g., track number and SPOI (quantity)
21. Targets successfully attacked (quantity)
22. Targets unsuccessfully attacked (quantity)
23. Targets assigned (quantity)
24. Time from target tasked to target attacked (quantity)
25. Targets attacked (quantity)
26. Targets not attacked (quantity)
27. Time from target detected to target successfully attacked (elapsed time)
28. Unsuccessful Attacks: Attacked wrong enemy target (quantity)
29. Unsuccessful Attacks: Attacked friendly (quantity)
30. Unsuccessful Attacks: Other (quantity and characterization)
31. Cause of unsuccessful attacks: C2 error (quantity)
32. Cause of unsuccessful attacks: Aircrew error (quantity)
33. Cause of unsuccessful attacks: Environmental (quantity)
34. Cause of unsuccessful attacks: Other (quantity)
35. Timeliness of DT processing (time) ?
*e-mail correspondence - Bill Euker to F.T. Case - Friday, September 16, 2005 4:33 PM
CPAS X-1 can calculate 34% of these measures of interest (12 of 35)
Green – can do now (12)
Yellow – could do (7)
White – harder to do - need additional collectors (15)
Red – can’t do (1)

CPAS Calculation of the TST MOI
[Figure callouts, apparently TST MOI numbers: 23, 6, 14, 25, 24, 21, 21, 22, 26, 35, 9, 8]

CPAS Data Collection Sources
During JEFX 2002, the following systems in the TCT section, on the Combat Ops floor, and on the Nellis ranges had the capability to automatically record time-tagged information for post processing: *
– ADSI. Records link data received at the ADSI. Data is written to JAZZ disks for replay on ADSI.
– Sheltered JTIDS System (SJS). Records link data received and transmitted at the SJS. Data will be translated into ASCII format and provided on a CD-ROM on a daily basis.
– Joint Services Workstation (JSWS). Records data received and displayed on the JSWS. Data is written to 8mm DAT tapes for replay on JSWS.
– Information Workspace (IWS). IWS voice will be recorded using VCRs connected to primary TCT nodes on the AOC floor. IWS text chat will be logged and downloaded at the end of each day. IWS peg-board activity will be manually captured using screen capture and stored for post-event analysis.
– Digital Collection Analysis and Review System (DCARS). DCARS will record all simulation traffic including ground game target locations.
– Tip OFF. A laptop loaded with Tip Off software will be connected to the TRS in the JICO cell and will log all incoming TIBS and TDDS traffic received at that node.
– GALE. The GALE system used by the Threat Analyst (SIGINT Tracker) will log all incoming SIGINT data and provide post event hard and soft copy detection and convolution data.
– WebTAS. WebTAS can be interfaced with the TBMCS SAA system and record all track information received and displayed on the SAA. Data is translated into ASCII format for inclusion into the master JEFX 02 TCT assessment database.
* From AFC2TIG, JEFX 02 TIME CRITICAL TARGETING DATA MANAGEMENT AND ANALYSIS PLAN, July 2002, para 4.2, pg 11.

Future Focus Areas
“Automate” scenario selection/tailored training
– Scenarios designed to address specific individual and team objectives based on performance
– Warehoused vignettes based on desired knowledge, skills, and experiences
Make real-time interventions during training event via coaching, tutoring, scenario changes
Part-Task Training capability for planning
AOC Brief/Debrief System

AOC Performance Measurement
[Figure: measurement framework diagram. Text recovered from the diagram follows.]
Training Objectives: Success in Meeting Training Objectives. How well are training objectives met? Success of training program.
Competencies, Knowledge and Skills: KSA Assessment. What KSAs do learners have/lack? Diagnose individuals’ needs for additional training.
Tasks: Performance Measures by Task. How well did learners perform?
Simulated Training Scenario: Data Collection Instruments.
Other labels: Measurement Challenges; Training Development Challenges; Improve by X%; Individual, Team, Team-of-Teams; Stimulated or Trained by; Put together into vignettes.

AOC Performance Measurement
Research objective:
– Design and validate measurement methods, criteria, and tools
Importance to the warfighter:
– Enables performance-based personnel readiness assessment, not “box-checking”
– Quantitative & Qualitative assessment of training effectiveness
Status:
– Testing at CAOC-N (Jun 05)
– Approved Defense Technology Objective (HS.52)