
Research Heaven, West Virginia

A Framework for Early Reliability Assessment

(WVU UI: Integrating Formal Methods and Testing in a Quantitative Software Reliability Assessment Framework, 2003)

Bojan Cukic, Erdogan Gunel, Harshinder Singh, Lan Guo, Dejan Desovski, West Virginia University
Carol Smidts, Ming Li, University of Maryland

Overview

Introduction and motivation.
Software reliability corroboration approach.
Case studies.
Applying Dempster-Shafer inference to NASA datasets.
Summary and further work.

Introduction

Quantifying the effects of V&V activities is always desirable.
Is software reliability quantification practical for safety/mission-critical systems?
– Time and cost considerations may limit its appeal.
Reliability growth models apply only to integration testing, the tail end of V&V.
Operational usage profiles are rarely estimated.

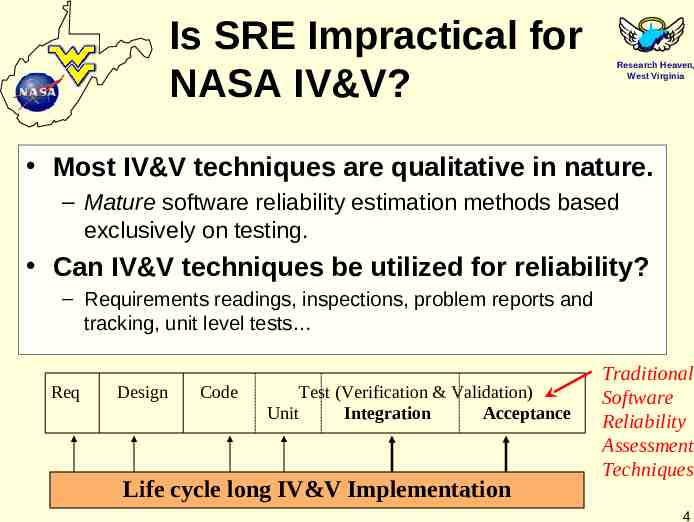

Is SRE Impractical for NASA IV&V?

Most IV&V techniques are qualitative in nature.
– Mature software reliability estimation methods are based exclusively on testing.
Can IV&V techniques be utilized for reliability assessment?
– Requirements readings, inspections, problem reports and tracking, unit-level tests.

[Figure: lifecycle phases Req, Design, Code and Test (Unit, Integration, Acceptance), contrasting life-cycle-long IV&V (verification and validation) with traditional software reliability assessment techniques.]

Contribution

Develop software reliability assessment methods that build on:
– Stable and mature development environments.
– Life-cycle-long IV&V activities.
– All relevant available information: static (SIAT), dynamic, requirements problems, severities.
– Qualitative (formal and informal) IV&V methods.
Strengthen the case for IV&V across the NASA enterprise:
– Accurate, stable reliability measurement and tracking.
– Available throughout the development lifecycle.

Assessment vs. Corroboration

Current thinking:
– Software reliability is “tested into” the product through integration and acceptance testing.
Our thinking:
– Why “waste” the results of all the qualitative IV&V activities?
– Testing should corroborate that the life-cycle-long IV&V techniques are giving the “usual” results, i.e., that the project follows its usual quality patterns.

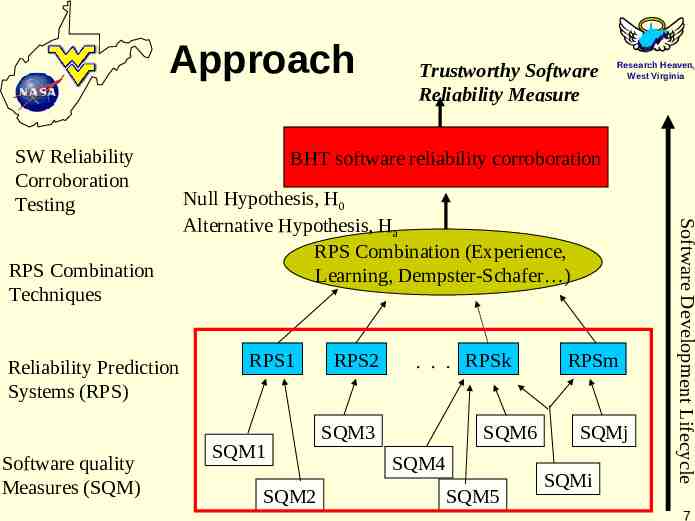

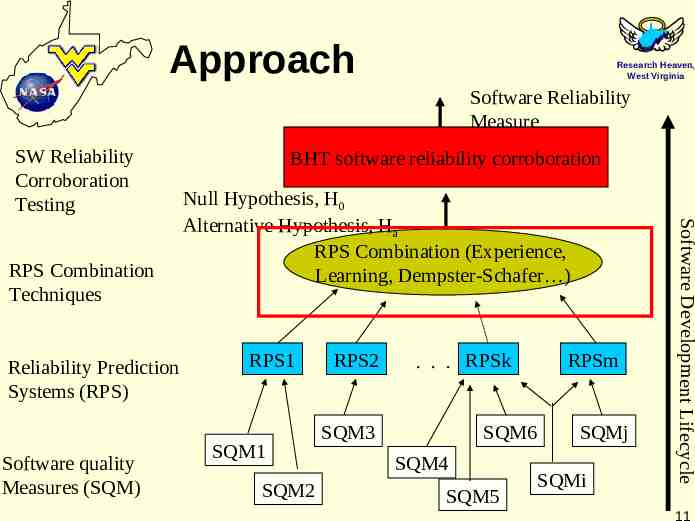

Approach

[Figure: software quality measures (SQM1 … SQMj) collected over the software development lifecycle feed reliability prediction systems (RPS1 … RPSm); RPS combination techniques (experience, learning, Dempster-Shafer) produce a hypothesized reliability that BHT software reliability corroboration testing checks (null hypothesis H0, alternative hypothesis Ha), yielding a trustworthy software reliability measure.]

Software Quality Measures (roots)

The following measures were used in the experiments:
– Lines of code (LOC).
– Defect density: number of defects that remain unresolved after testing, divided by LOC.
– Test coverage: LOC_tested / LOC_total.
– Requirements traceability (RT): # requirements implemented / # original requirements.
– Function points.
– ...
In principle, any available measures could (and should) be taken into account.
– This requires defining appropriate Reliability Prediction Systems (RPS).
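The ratio-style root measures listed above reduce to simple arithmetic on project counts. The following is a minimal sketch under the assumption that those raw counts are available; the `ProjectCounts` record and its field names are hypothetical, not taken from the slides.

```python
from dataclasses import dataclass


@dataclass
class ProjectCounts:
    """Raw counts for one project (hypothetical field names, not from the slides)."""
    loc_total: int           # total lines of code
    loc_tested: int          # lines of code exercised by tests
    unresolved_defects: int  # defects still unresolved after testing
    reqs_original: int       # requirements in the original specification
    reqs_implemented: int    # original requirements traced to the implementation


def defect_density(p: ProjectCounts) -> float:
    """Defects that remain unresolved after testing, divided by LOC."""
    return p.unresolved_defects / p.loc_total


def coverage_ratio(p: ProjectCounts) -> float:
    """Test coverage: LOC_tested / LOC_total."""
    return p.loc_tested / p.loc_total


def requirements_traceability(p: ProjectCounts) -> float:
    """RT: # requirements implemented / # original requirements."""
    return p.reqs_implemented / p.reqs_original


counts = ProjectCounts(loc_total=2000, loc_tested=1600, unresolved_defects=4,
                       reqs_original=50, reqs_implemented=45)
print(defect_density(counts), coverage_ratio(counts), requirements_traceability(counts))
```

These values are only inputs: turning them into a failure-rate prediction is the job of an RPS and its model.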

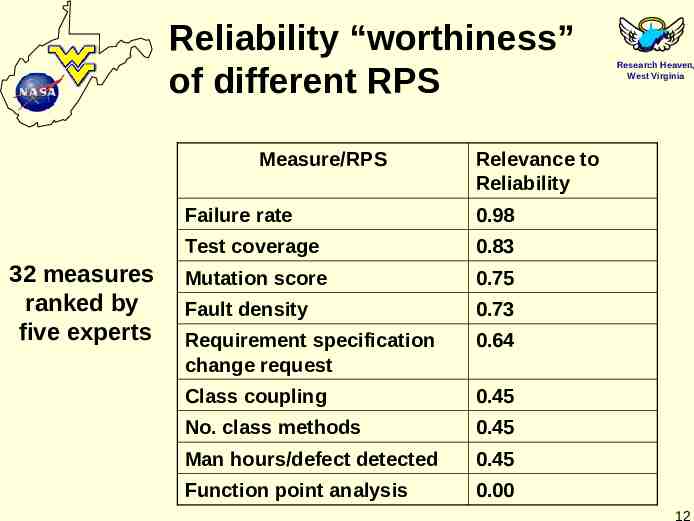

Reliability Prediction Systems

An RPS is a complete set of measures from which software reliability can be predicted.
The bridge between an RPS and software reliability is a MODEL.
Therefore, select (and collect) the measures that have the highest relevance to reliability.
– Relevance to reliability is ranked from expert opinion [Smidts 2002].

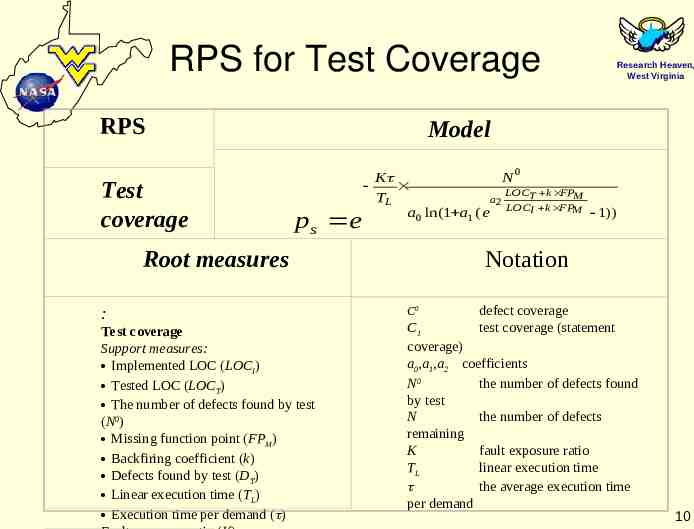

RPS for Test Coverage

Model:

$$
p_s = \exp\left(-\frac{K \, N \, \tau}{T_L}\right), \qquad
N = N_0\left(\frac{1}{C_0} - 1\right), \qquad
C_0 = a_0 \ln\left(1 + a_1\left(e^{a_2 C_1} - 1\right)\right), \qquad
C_1 = \frac{LOC_T}{LOC_I + k \cdot FP_M}
$$

Root measure: test coverage.
Support measures: implemented LOC (LOC_I), tested LOC (LOC_T), number of defects found by test (N0), missing function points (FP_M), backfiring coefficient (k), defects found by test (DT), linear execution time (T_L), execution time per demand (τ).

Notation:
C0: defect coverage
C1: test coverage (statement coverage)
a0, a1, a2: coefficients
N0: number of defects found by test
N: number of defects remaining
K: fault exposure ratio
T_L: linear execution time
τ: average execution time per demand
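The test-coverage RPS chains three relations, from statement coverage to defect coverage to remaining defects to a per-demand success probability. The sketch below assumes the model reads p_s = exp(−K·N·τ/T_L), with N = N0(1/C0 − 1), C0 = a0 ln(1 + a1(e^{a2·C1} − 1)) and C1 = LOC_T/(LOC_I + k·FP_M). That reading is a reconstruction, and the coefficient values in the checks are illustrative only, not fitted values from the study.

```python
import math


def statement_coverage(loc_tested, loc_implemented, fp_missing, k):
    """C1: tested LOC over implemented LOC plus missing function points backfired to LOC."""
    return loc_tested / (loc_implemented + k * fp_missing)


def defect_coverage(c1, a0, a1, a2):
    """C0 = a0 * ln(1 + a1 * (exp(a2 * C1) - 1)), the coverage-to-defect-coverage relation."""
    return a0 * math.log(1.0 + a1 * (math.exp(a2 * c1) - 1.0))


def success_probability(n0, c0, fault_exposure, t_linear, tau):
    """p_s = exp(-K * N * tau / T_L), where N = N0 * (1/C0 - 1) defects remain."""
    if not 0.0 < c0 <= 1.0:
        raise ValueError("defect coverage C0 must lie in (0, 1]")
    n_remaining = n0 * (1.0 / c0 - 1.0)
    failure_intensity = fault_exposure * n_remaining / t_linear  # lambda = K * N / T_L
    return math.exp(-failure_intensity * tau)
```

If all defects are exposed (C0 = 1), no defects remain and p_s = 1. Lower defect coverage inflates the estimate of remaining defects and lowers p_s.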

Approach

[Figure: approach diagram repeated, with the output labelled “Software Reliability Measure”.]

Reliability “worthiness” of different RPS

32 measures ranked by five experts:

| Measure/RPS | Relevance to reliability |
|---|---|
| Failure rate | 0.98 |
| Test coverage | 0.83 |
| Mutation score | 0.75 |
| Fault density | 0.73 |
| Requirement specification change request | 0.64 |
| Class coupling | 0.45 |
| No. of class methods | 0.45 |
| Man hours/defect detected | 0.45 |
| Function point analysis | 0.00 |

Combining RPS

Weighted sums were used in initial experiments.
– RPS results are weighted by the expert opinion index.
– Removing inherent dependencies/correlations.
A Dempster-Shafer (D-S) belief network approach was developed.
– The network is built automatically from datasets by the Induction Algorithm.
Do suitable NASA datasets exist?
– Pursuing leads with several CMM level 5 companies.
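A relevance-weighted mean is one plausible reading of "RPS results weighted by the expert opinion index". The sketch below is not the authors' exact procedure and skips the removal of dependencies/correlations. The usage example takes the PACS relevance and prediction values from the RPS experimentation slide of this deck. It yields about 0.083, close to but not identical to the reported 0.084, so the original weighting likely differs in some detail.

```python
def weighted_rps_prediction(predictions):
    """Relevance-weighted mean of RPS failure-rate predictions.

    `predictions` is a list of (relevance, predicted_failure_rate) pairs;
    an RPS with zero relevance contributes nothing to the result.
    """
    total_weight = sum(weight for weight, _ in predictions)
    if total_weight <= 0:
        raise ValueError("at least one RPS needs a positive relevance weight")
    return sum(weight * rate for weight, rate in predictions) / total_weight


# (relevance, predicted failure rate) pairs from the PACS experiment
pacs = [
    (0.85, 0.078),     # code defect density
    (0.83, 0.092),     # test coverage
    (0.45, 0.078),     # requirements traceability
    (0.00, 0.0020),    # function point analysis
    (0.00, 0.000028),  # bugs per line of code (Gaffney)
]
print(round(weighted_rps_prediction(pacs), 4))
```

A weighted mean keeps the combined prediction inside the range of the individual predictions, which is a reasonable property when the RPS are noisy estimates of the same quantity.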

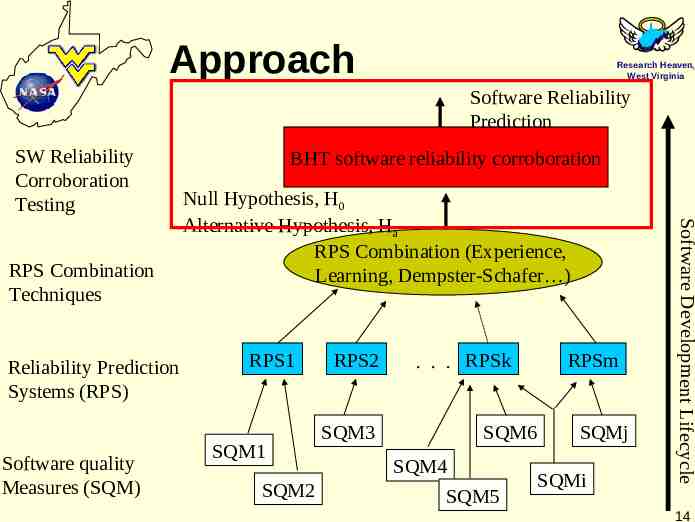

Approach

[Figure: approach diagram repeated, with the output labelled “Software Reliability Prediction”.]

Bayesian Inference

Allows the inclusion of an imprecise (subjective) probability of failure.
The subjective estimate reflects beliefs.
A hypothesis on the probability of an event occurring is combined with new evidence, which may change the degree of belief.
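The general idea of combining a subjective prior with test evidence can be shown with the standard Beta-binomial conjugate model. The slides do not name a prior family, so the Beta prior here is an assumption chosen for illustration, and `BetaBelief` is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BetaBelief:
    """Beta(alpha, beta) belief about the per-demand failure probability."""
    alpha: float
    beta: float

    def update(self, demands: int, failures: int) -> "BetaBelief":
        """Conjugate update after `failures` failures in `demands` independent tests."""
        if not 0 <= failures <= demands:
            raise ValueError("need 0 <= failures <= demands")
        return BetaBelief(self.alpha + failures, self.beta + demands - failures)

    def mean(self) -> float:
        """Posterior (or prior) mean failure probability."""
        return self.alpha / (self.alpha + self.beta)


prior = BetaBelief(1.0, 1.0)               # flat prior: no strong opinion
posterior = prior.update(demands=8, failures=0)
print(posterior.mean())
```

A more confident prior (larger alpha + beta) moves less in response to the same evidence, which is how the degree of belief enters the calculation.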

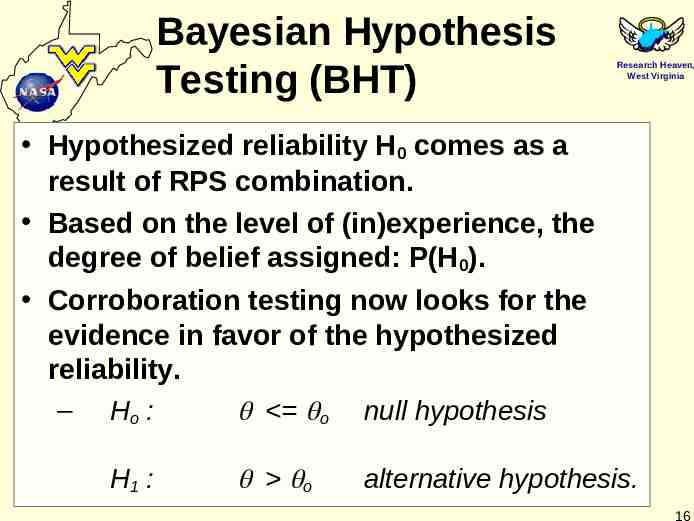

Bayesian Hypothesis Testing (BHT)

The hypothesized reliability H0 is the result of RPS combination.
Based on the level of (in)experience, a degree of belief is assigned: P(H0).
Corroboration testing then looks for evidence in favor of the hypothesized reliability.
– H0: θ ≤ θ0 (null hypothesis); H1: θ > θ0 (alternative hypothesis).

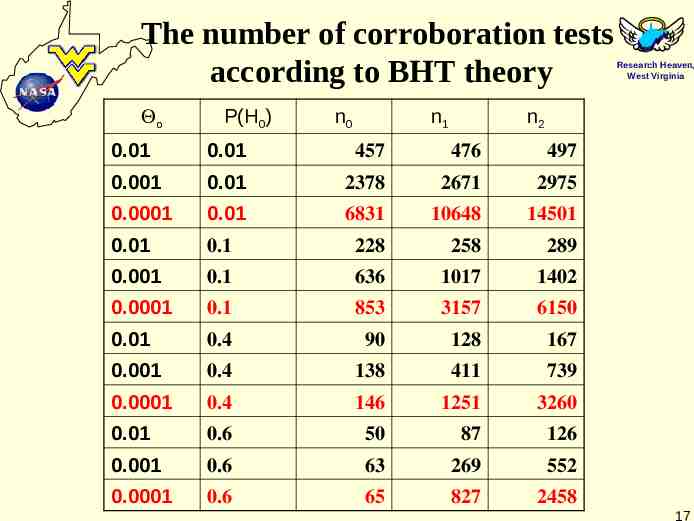

The number of corroboration tests according to BHT theory

| θ0 | P(H0) | n0 | n1 | n2 |
|---|---|---|---|---|
| 0.01 | 0.01 | 457 | 476 | 497 |
| 0.001 | 0.01 | 2378 | 2671 | 2975 |
| 0.0001 | 0.01 | 6831 | 10648 | 14501 |
| 0.01 | 0.1 | 228 | 258 | 289 |
| 0.001 | 0.1 | 636 | 1017 | 1402 |
| 0.0001 | 0.1 | 853 | 3157 | 6150 |
| 0.01 | 0.4 | 90 | 128 | 167 |
| 0.001 | 0.4 | 138 | 411 | 739 |
| 0.0001 | 0.4 | 146 | 1251 | 3260 |
| 0.01 | 0.6 | 50 | 87 | 126 |
| 0.001 | 0.6 | 63 | 269 | 552 |
| 0.0001 | 0.6 | 65 | 827 | 2458 |

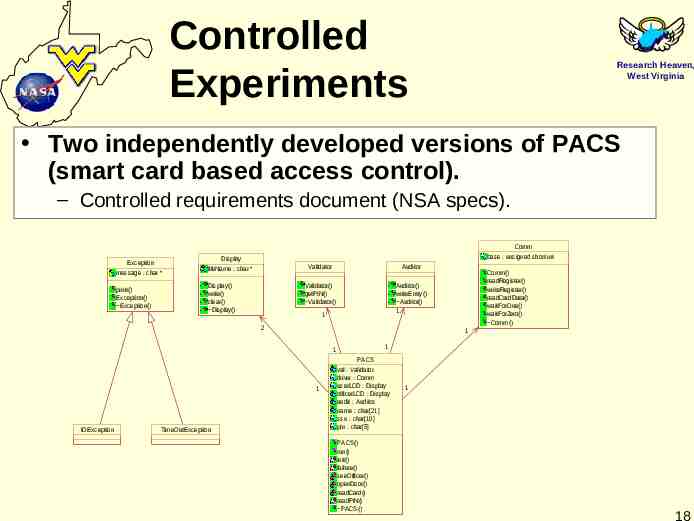

Controlled Experiments

Two independently developed versions of PACS (smart card based access control).
– Controlled requirements document (NSA specs).

[Figure: UML class diagram of PACS, with classes PACS, Validator, Auditor, Comm, Display, Exception, IOException and TimeOutException.]

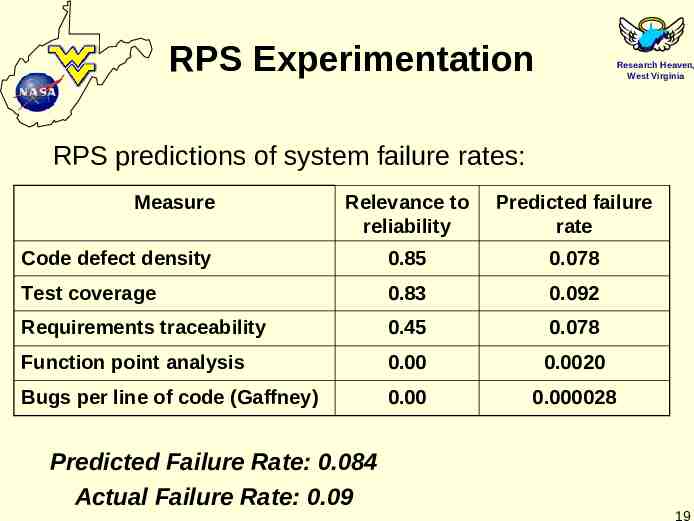

RPS Experimentation

RPS predictions of system failure rates:

| Measure | Relevance to reliability | Predicted failure rate |
|---|---|---|
| Code defect density | 0.85 | 0.078 |
| Test coverage | 0.83 | 0.092 |
| Requirements traceability | 0.45 | 0.078 |
| Function point analysis | 0.00 | 0.0020 |
| Bugs per line of code (Gaffney) | 0.00 | 0.000028 |

Predicted failure rate: 0.084. Actual failure rate: 0.09.

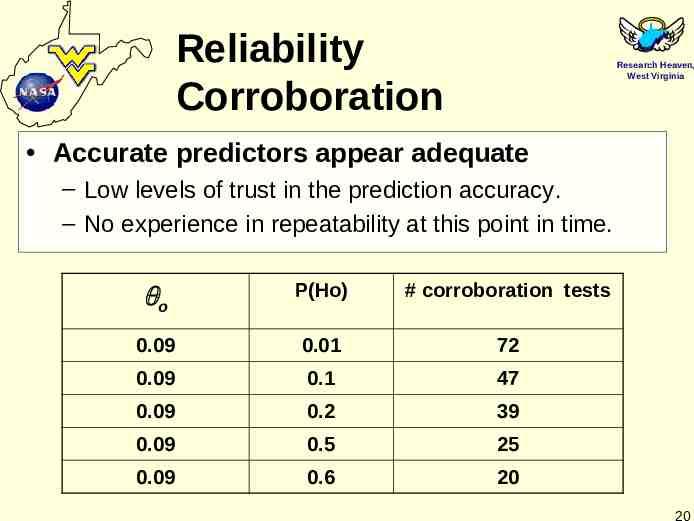

Reliability Corroboration

Accurate predictors appear adequate.
– Low levels of trust in the prediction accuracy.
– No experience with repeatability at this point in time.

| θ0 | P(H0) | # corroboration tests |
|---|---|---|
| 0.09 | 0.01 | 72 |
| 0.09 | 0.1 | 47 |
| 0.09 | 0.2 | 39 |
| 0.09 | 0.5 | 25 |
| 0.09 | 0.6 | 20 |

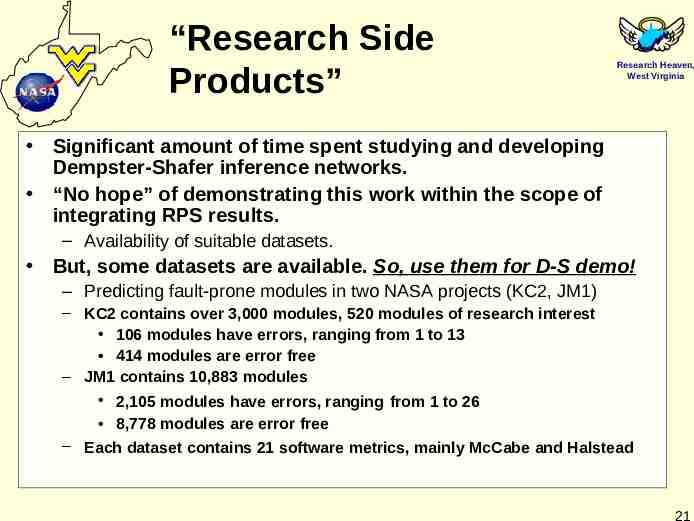

“Research Side Products”

A significant amount of time was spent studying and developing Dempster-Shafer inference networks.
“No hope” of demonstrating this work within the scope of integrating RPS results.
– Limited availability of suitable datasets.
But some datasets are available, so use them for a D-S demo!
– Predicting fault-prone modules in two NASA projects (KC2, JM1).
– KC2 contains over 3,000 modules, 520 of them of research interest:
  106 modules have errors, ranging from 1 to 13;
  414 modules are error free.
– JM1 contains 10,883 modules:
  2,105 modules have errors, ranging from 1 to 26;
  8,778 modules are error free.
– Each dataset contains 21 software metrics, mainly McCabe and Halstead.

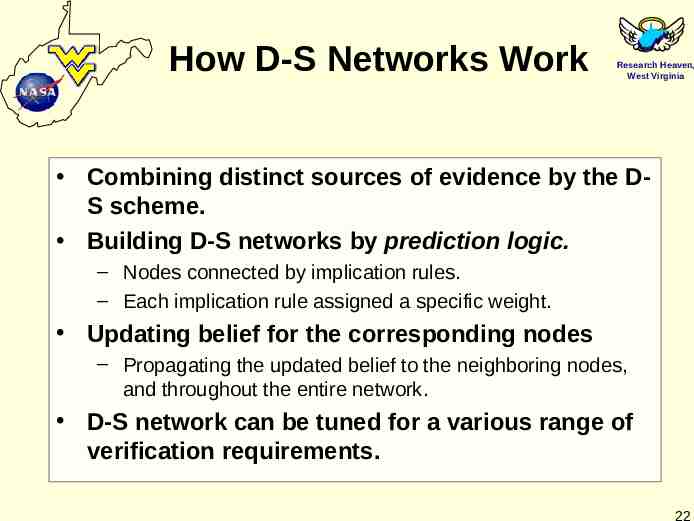

How D-S Networks Work

Distinct sources of evidence are combined by the D-S scheme.
D-S networks are built by prediction logic.
– Nodes are connected by implication rules.
– Each implication rule is assigned a specific weight.
Belief is updated for the corresponding nodes.
– The updated belief is propagated to the neighboring nodes, and throughout the entire network.
A D-S network can be tuned for a range of verification requirements.
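The evidence-combination step at the heart of a D-S network is Dempster's rule of combination. The sketch below shows only that rule for two sources over a two-hypothesis frame. It does not cover network construction, implication-rule weights or belief propagation, and the sources `metric_a` and `metric_b` and their masses are hypothetical.

```python
from itertools import product


def combine_dempster(m1, m2):
    """Dempster's rule of combination for two mass functions.

    m1, m2 map frozensets of hypotheses to masses that each sum to 1.
    Mass landing on the empty set (conflict) is discarded and the rest renormalized.
    """
    combined = {}
    conflict = 0.0
    for (b, mass_b), (c, mass_c) in product(m1.items(), m2.items()):
        common = b & c
        if common:
            combined[common] = combined.get(common, 0.0) + mass_b * mass_c
        else:
            conflict += mass_b * mass_c
    if conflict >= 1.0:
        raise ValueError("the two sources are in total conflict")
    return {focal: mass / (1.0 - conflict) for focal, mass in combined.items()}


def belief(m, hypothesis):
    """Bel(A): total mass committed to subsets of A."""
    return sum(mass for focal, mass in m.items() if focal <= hypothesis)


FAULTY, CLEAN = frozenset({"faulty"}), frozenset({"clean"})
THETA = FAULTY | CLEAN  # the whole frame, i.e. "don't know"

metric_a = {FAULTY: 0.6, THETA: 0.4}              # hypothetical evidence source 1
metric_b = {FAULTY: 0.3, CLEAN: 0.5, THETA: 0.2}  # hypothetical evidence source 2
fused = combine_dempster(metric_a, metric_b)
print(round(belief(fused, FAULTY), 3), round(belief(fused, CLEAN), 3))
```

Unlike a single probability, each source can leave mass on the whole frame (THETA), so "no evidence" is represented explicitly rather than split evenly between hypotheses.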

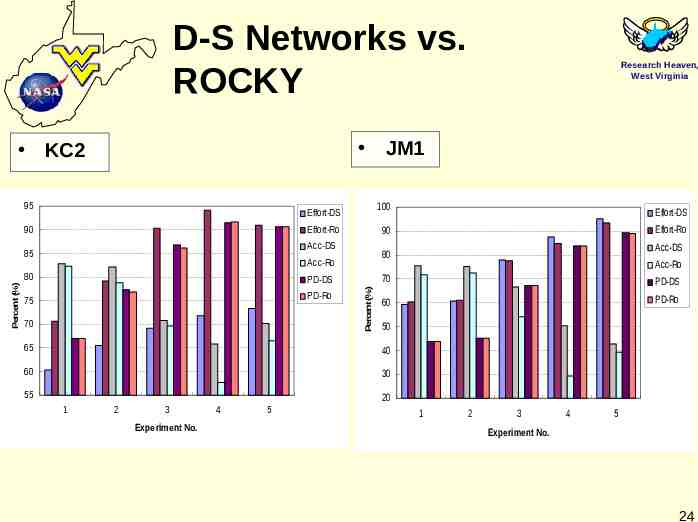

D-S Networks vs. ROCKY

[Figure: two panels (JM1, KC2) plotting percent (%) against experiment no. 1–5 for effort, PD and accuracy (Acc) of D-S networks (DS) and ROCKY (Ro).]
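The comparisons report PD and accuracy for fault-prone module prediction. The slides do not define these terms, so the sketch below assumes the usual meanings. PD (probability of detection) is taken as the fraction of truly fault-prone modules that are flagged, and accuracy as the fraction of all modules classified correctly. "Effort" is left out because its definition is not given here.

```python
def detection_metrics(actual, predicted):
    """Return (PD, accuracy) for fault-prone module predictions.

    actual[i] / predicted[i] are True when module i is (predicted to be) fault-prone.
    """
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty label lists of equal length")
    pairs = list(zip(actual, predicted))
    true_pos = sum(1 for a, p in pairs if a and p)
    false_neg = sum(1 for a, p in pairs if a and not p)
    correct = sum(1 for a, p in pairs if a == p)
    pd = true_pos / (true_pos + false_neg) if true_pos + false_neg else float("nan")
    return pd, correct / len(pairs)


actual = [True, True, False, False, True]
predicted = [True, False, False, True, True]
print(detection_metrics(actual, predicted))
```

Both numbers matter on skewed data. On KC2's 520 modules of interest, a classifier that labels every module error free would reach 414/520 ≈ 80% accuracy with a PD of zero.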

D-S Networks vs. See5

[Figure: two panels (KC2, JM1) plotting percent (%) for the See5 classifiers (decision tree, rule set, boosting), comparing PD and accuracy (Acc) of See5 (C5) and D-S networks (DS).]

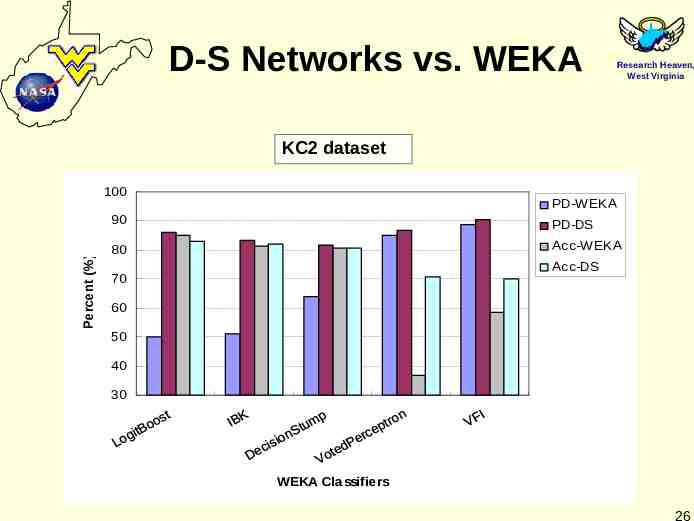

D-S Networks vs. WEKA

[Figure: KC2 dataset; percent (%) across WEKA classifiers, comparing PD and accuracy (Acc) of WEKA and D-S networks (DS).]

D-S Networks vs. WEKA

[Figure: JM1 dataset; percent (%) across WEKA classifiers (including J48, IB1, IBk, VFI, VotedPerceptron and HyperPipes), comparing PD and accuracy (Acc) of WEKA and D-S networks (DS).]

Status and Perspectives

Software reliability corroboration allows:
– Inclusion of IV&V quality measures and activities in the reliability assessment.
– A significant reduction in the number of (corroboration) tests.
– Assessment of the software reliability of safety/mission-critical systems with reasonable effort.
Research directions:
– Further experimentation (datasets, measures, repeatability).
– Defining RPS based on the “formality” of the IV&V methods.