
QA Engagement during User Acceptance Testing

Elizabeth Wisdom, 2/18/2015

Agenda

- What is User Acceptance Testing?
- What is the Objective of User Acceptance Testing?
- QA vs. UAT: Managing Expectations
- Planning for UAT
- Entry/Exit/Success Criteria for UAT
- UAT Execution
- UAT Metrics and Reporting
- What Happens After Go-Live?

Objective for Today

How can QA engage effectively with the UAT team and project stakeholders, before and during the UAT test event, to maximize the effectiveness and efficiency of both teams?

What is User Acceptance Testing?

User Acceptance Testing (UAT) is: “Formal testing conducted to determine whether or not a system satisfies its acceptance criteria based on the business requirements, which enables the functional users to determine whether or not to accept the system.”

Expectations for UAT

- Expect defects to be found! Finding them adds to the overall quality of the solution.
- A thoughtfully planned and well-executed UAT will enable users to verify that the system under development meets the business requirements.
- Associates/users will become more familiar with the new functionality being introduced, developing the expertise required for roll-out and training.

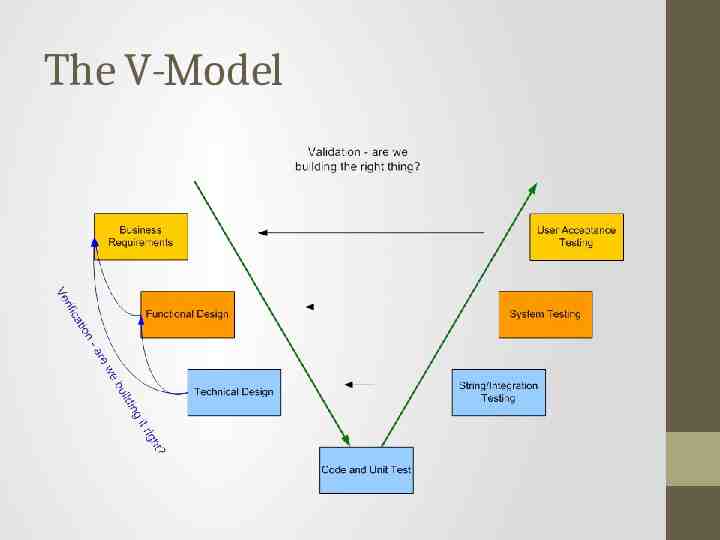

The V-Model

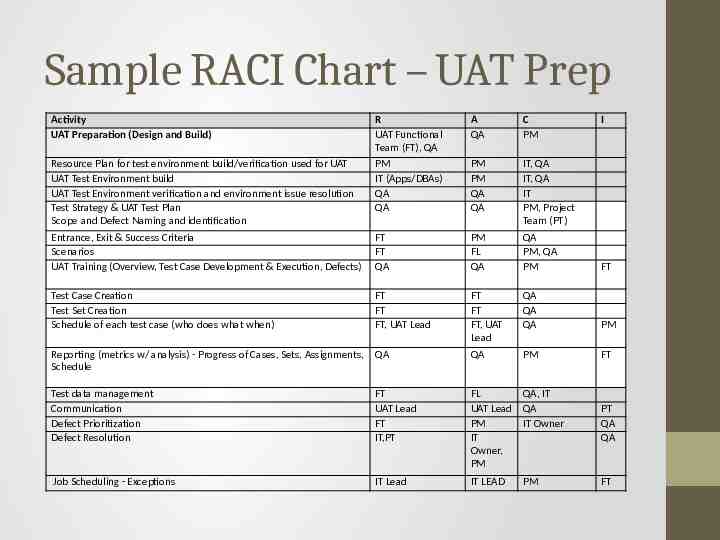

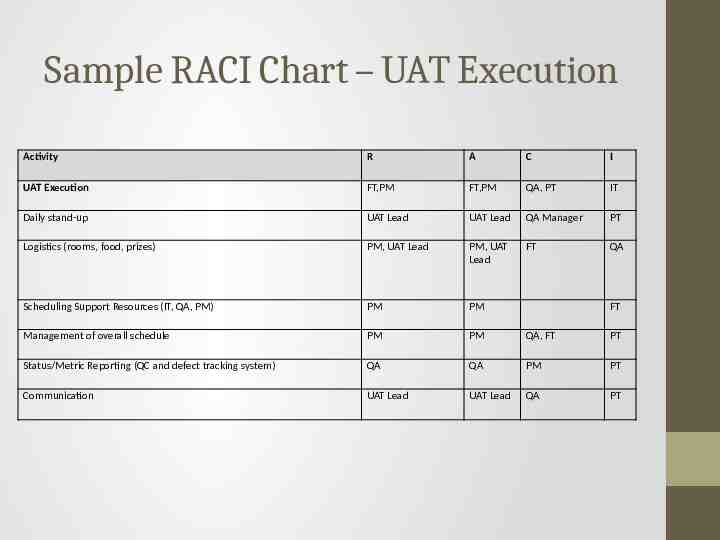

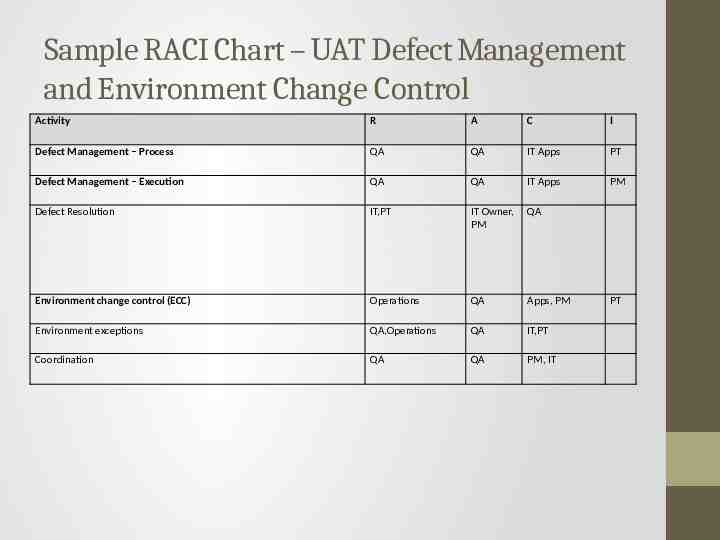

QA vs. UAT: Managing Expectations

Clarify, document, review, and approve expectations for QA and UAT during the early planning stages of the project:

- Test Strategy: describes the process, standards, and tools used
- Test Plan: identifies scope, assumptions, risks, dependencies, entry/exit/success criteria, and data and environment requirements
- RACI chart for all QA and UAT test activities
- High-level review at the project core team meeting and with resource managers (get on the agenda!)

Sample RACI - Definitions

- Responsible: Performs the activity. The executor, the doer.
- Accountable: Ensures that the activity is done on time or communicates delays. The overseer. Has yes/no decision rights and veto power.
- Consulted: Those whose input is required. It is not optional: the input must be considered. It may be overridden by the Accountable party, but only for a valid reason.
- Informed: Those to whom information must be reported. It is not optional.

Team Definitions

- FL: Business Functional Lead
- FT: Business Functional Team
- PT: Project Team
- IT: IT Team (Apps, Ops) participating in the project
- QA: Quality Assurance Lead/Manager
- PM: Project Manager

Sample RACI Chart – UAT Prep Activity UAT Preparation (Design and Build) R UAT Functional Team (FT), QA A QA C PM Resource Plan for test environment build/verification used for UAT UAT Test Environment build UAT Test Environment verification and environment issue resolution Test Strategy & UAT Test Plan Scope and Defect Naming and identification PM IT (Apps/DBAs) QA QA PM PM QA QA IT, QA IT, QA IT PM, Project Team (PT) Entrance, Exit & Success Criteria Scenarios UAT Training (Overview, Test Case Development & Execution, Defects) FT FT QA PM FL QA QA PM, QA PM FT Test Case Creation Test Set Creation Schedule of each test case (who does what when) FT FT FT, UAT Lead FT FT FT, UAT Lead QA QA QA PM Reporting (metrics w/ analysis) - Progress of Cases, Sets, Assignments, Schedule QA QA PM FT Test data management Communication Defect Prioritization Defect Resolution FT UAT Lead FT IT,PT FL QA, IT UAT Lead QA PM IT Owner IT Owner, PM PT QA QA Job Scheduling - Exceptions IT Lead IT LEAD FT PM I

Sample RACI Chart – UAT Execution

| Activity | R | A | C | I |
|---|---|---|---|---|
| UAT Execution | FT, PM | FT, PM | QA, PT | IT |
| Daily stand-up | UAT Lead | UAT Lead | QA Manager | PT |
| Logistics (rooms, food, prizes) | PM, UAT Lead | PM, UAT Lead | FT | QA |
| Scheduling Support Resources (IT, QA, PM) | PM | PM | | |
| Management of overall schedule | PM | PM | QA, FT | PT |
| Status/Metric Reporting (QC and defect tracking system) | QA | QA | PM | PT |
| Communication | UAT Lead | UAT Lead | QA | PT, FT |
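Holding a RACI chart as data makes it easy to check mechanically as activities are added. A minimal Python sketch, assuming the UAT Execution chart is transcribed as shown (the cell assignments are a reading of the flattened slide) and assuming a rule of our own, not stated in the deck, that every activity needs at least one Responsible and one Accountable party. `RACI_UAT_EXECUTION`, `missing_owners`, and `activities_for` are hypothetical names.

```python
# Hypothetical encoding of the "UAT Execution" RACI chart.
# R = Responsible, A = Accountable, C = Consulted, I = Informed.
RACI_UAT_EXECUTION = {
    "UAT Execution": {"R": ["FT", "PM"], "A": ["FT", "PM"], "C": ["QA", "PT"], "I": ["IT"]},
    "Daily stand-up": {"R": ["UAT Lead"], "A": ["UAT Lead"], "C": ["QA Manager"], "I": ["PT"]},
    "Logistics (rooms, food, prizes)": {"R": ["PM", "UAT Lead"], "A": ["PM", "UAT Lead"], "C": ["FT"], "I": ["QA"]},
    "Scheduling support resources (IT, QA, PM)": {"R": ["PM"], "A": ["PM"], "C": [], "I": []},
    "Management of overall schedule": {"R": ["PM"], "A": ["PM"], "C": ["QA", "FT"], "I": ["PT"]},
    "Status/metric reporting": {"R": ["QA"], "A": ["QA"], "C": ["PM"], "I": ["PT"]},
    "Communication": {"R": ["UAT Lead"], "A": ["UAT Lead"], "C": ["QA"], "I": ["PT", "FT"]},
}


def missing_owners(chart):
    """Return activities that have no Responsible or no Accountable party."""
    return [activity for activity, roles in chart.items()
            if not roles.get("R") or not roles.get("A")]


def activities_for(chart, team, role):
    """Return the activities in which `team` holds the given RACI role."""
    return [activity for activity, roles in chart.items()
            if team in roles.get(role, [])]


if __name__ == "__main__":
    print(missing_owners(RACI_UAT_EXECUTION))
    print(activities_for(RACI_UAT_EXECUTION, "PT", "I"))
```

For example, `activities_for(RACI_UAT_EXECUTION, "PT", "I")` lists the activities the Project Team must be kept informed about, which can double as a distribution list for status reports.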

Sample RACI Chart – UAT Defect Management and Environment Change Control

| Activity | R | A | C | I |
|---|---|---|---|---|
| Defect Management – Process | QA | QA | IT Apps | PT |
| Defect Management – Execution | QA | QA | IT Apps | PM |
| Defect Resolution | IT, PT | IT Owner, PM | QA | |
| Environment change control (ECC) | Operations | QA | Apps, PM | |
| Environment exceptions | QA, Operations | QA | IT, PT | |
| Coordination | QA | QA | PM, IT | PT |

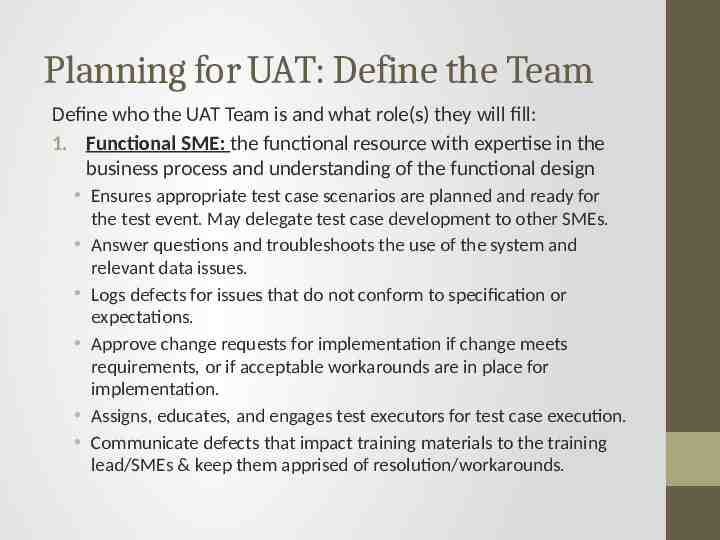

Planning for UAT: Define the Team

Define who the UAT team is and what role(s) each member will fill:

1. Functional SME: the functional resource with expertise in the business process and an understanding of the functional design.
   - Ensures appropriate test case scenarios are planned and ready for the test event. May delegate test case development to other SMEs.
   - Answers questions and troubleshoots use of the system and relevant data issues.
   - Logs defects for issues that do not conform to specifications or expectations.
   - Approves change requests for implementation if the change meets requirements, or if acceptable workarounds are in place.
   - Assigns, educates, and engages test executors for test case execution.
   - Communicates defects that impact training materials to the training lead/SMEs and keeps them apprised of resolutions/workarounds.

Planning for UAT: Define the Team

2. Test Executors: the functional subject matter experts in a particular business domain (e.g., accounts payable, invoicing, order fulfillment, supply chain).
   - Set up the required data in the test environment according to the data seeding approach, including job scheduling.
   - Execute assigned test cases in the test environment and log the results.
   - Log defects or enhancements.
   - Communicate with other test executors if prerequisites or handoffs are required to continue testing.
   - Communicate issues/challenges to the functional tester immediately.

Planning for UAT: Define the Team

3. UAT Facilitator: answers questions and troubleshoots use of the defect/test management tools. The UAT Facilitator is an IT Project Manager or QA resource with expertise in testing policies, processes, and tools.
   - Communicates and monitors target dates for test case preparation, including facilitating weekly meetings if needed.
   - Ensures the test event has reserved conference room space, connectivity, monitors, etc.
   - Monitors the overall schedule and raises issues or risks that will hinder the ability to meet the entrance or exit criteria.
   - Facilitates the daily stand-up meeting.
   - Implements the process for prioritizing defects.

Planning for UAT: Define What to Test

UAT Test Plan:

- Test scope
- Data requirements
- Environment requirements, including availability and access
- Assumptions, dependencies, risks

When test cases are available:

- Identify who will be testing which test cases on which days, and communicate the schedule before UAT.
- Identify who will be creating the test data, and by when.

Planning for UAT: Define When and Where to Test

- Centrally co-locate the test executors (the same room is best!).
- Reserve room(s) and communicate the plan.
- Have the development lead and the QA lead present during UAT for support and quick turnaround of blocking issues.
- Ensure the test environment is available and test executors have proper access.

Planning for UAT: Define How to Test

Define and communicate how UAT will look in terms of:

- Process
- Tools
- Test case management
- Defect management
- Reporting

Provide logins and training for any test case management tool or defect tracking system before UAT. If no such tool is used, provide a standard test execution template that is accessible to everyone, and make sure everyone understands how to fill it out.

Planning for UAT: Providing Training

Provide separate, just-in-time training sessions throughout the UAT planning phase:

- UAT Overview: the why, what, who, when, where, and how of UAT.
- UAT Test Planning Part 1 (Scenarios): what a scenario is, how to document high-level scenarios (how to put them in a test management repository, scenario standards, etc.), and approving scenarios.
- UAT Test Planning Part 2 (Test Cases): breaking scenarios down into positive/negative test cases with detailed steps, prerequisites, test objectives, test case standards, and traceability back to business requirements.
- UAT Test Execution: how to execute test cases and document test results, reporting and logging defects, the defect retest process, defect prioritization, UAT metrics, test case scheduling and assignment, and logistics.

UAT Entrance Criteria

- Document the entrance, exit, and success criteria in the UAT Test Plan and reiterate them at the Core Team Meeting.
- Obtain approval before the start of the UAT test event.

Example:

- Critical and High functionality is complete and deployed to the test environment.
- The configuration guide is complete, and all configurations are completed in the test environment.
- System Test (QAT) is 95% complete, with a 95% pass rate and no blocking/critical open issues.
- UAT test cases are complete and approved, and the test case schedule is known to all participants.
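Of the example entrance criteria, only the System Test (QAT) threshold is numeric; the others are yes/no checklist items. A minimal sketch of that one check, assuming "95% complete" means executed/planned and "95% pass rate" means passed/executed (the slide defines neither). `SystemTestStatus` and `qat_entrance_criterion_met` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class SystemTestStatus:
    """Snapshot of System Test (QAT) progress; field names are illustrative."""
    planned: int                    # QAT test cases planned
    executed: int                   # QAT test cases executed so far
    passed: int                     # executed cases that passed
    open_blocking_or_critical: int  # open defects rated blocking or critical


def qat_entrance_criterion_met(status: SystemTestStatus,
                               min_complete: float = 0.95,
                               min_pass_rate: float = 0.95) -> bool:
    """Check the example criterion: >= 95% complete, >= 95% pass rate,
    and no open blocking/critical issues."""
    if status.planned <= 0 or status.executed <= 0:
        return False
    complete = status.executed / status.planned
    pass_rate = status.passed / status.executed  # assumption: pass rate over executed cases
    return (complete >= min_complete
            and pass_rate >= min_pass_rate
            and status.open_blocking_or_critical == 0)


if __name__ == "__main__":
    ready = SystemTestStatus(planned=400, executed=384, passed=368, open_blocking_or_critical=0)
    print(qat_entrance_criterion_met(ready))  # True: 96% complete, about 95.8% pass rate
```

Keeping the thresholds as parameters lets each project record its own agreed values in the UAT Test Plan rather than hard-coding 95%.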

UAT Exit Criteria

Example:

- 96% of all planned tests are executed and reflected in the test event metrics.
- All failed test cases have a defect logged, and all business functionality defects are associated with a failed test case.
- Business review and agreement on all open defects by severity and priority, by date.
- No unresolved Critical defects, and all unresolved High/Medium/Low defects have a resolution plan.

UAT Success Criteria

Example: 98% of executed test cases pass.
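The example exit and success criteria can also be checked against exported test case and defect records. A minimal sketch under several assumptions not stated in the deck: "executed" means a status of Passed, Failed, or N/A; "associated with a failed test case" is approximated as "linked to a test case in the plan" (a retested case may since have passed); and the pass rate is Passed / (Passed + Failed). All class, field, and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional

EXECUTED = {"Passed", "Failed", "N/A"}  # assumption: statuses that count as executed


@dataclass
class TestCase:
    id: str
    status: str                      # e.g. "Passed", "Failed", "No Run", "Blocked", "N/A"
    defect_id: Optional[str] = None  # defect logged against this case, if any


@dataclass
class Defect:
    id: str
    severity: str                          # "Critical", "High", "Medium" or "Low"
    resolved: bool
    test_case_id: Optional[str] = None     # test case the defect was logged against
    resolution_plan: Optional[str] = None  # required for unresolved H/M/L defects


def exit_criteria_met(cases: List[TestCase], defects: List[Defect],
                      min_executed: float = 0.96) -> bool:
    if not cases:
        return False
    executed = sum(c.status in EXECUTED for c in cases)
    if executed / len(cases) < min_executed:
        return False
    # Every failed test case must have a defect logged.
    if any(c.status == "Failed" and c.defect_id is None for c in cases):
        return False
    # Every defect must trace back to a test case in the plan.
    case_ids = {c.id for c in cases}
    if any(d.test_case_id not in case_ids for d in defects):
        return False
    # No unresolved Critical defects; other unresolved defects need a plan.
    for d in defects:
        if d.resolved:
            continue
        if d.severity == "Critical" or d.resolution_plan is None:
            return False
    return True


def success_criterion_met(cases: List[TestCase], min_pass: float = 0.98) -> bool:
    # Assumption: pass rate = Passed / (Passed + Failed).
    passed = sum(c.status == "Passed" for c in cases)
    failed = sum(c.status == "Failed" for c in cases)
    return (passed + failed) > 0 and passed / (passed + failed) >= min_pass
```

Separating exit from success mirrors the slides: UAT can meet its exit criteria (everything executed, traced, and triaged) while still falling short of the agreed pass rate.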

UAT Execution

- Communicate the daily plan/schedule.
- Prepare and distribute a Daily UAT Metric Report (use a template).
- Conduct a daily stand-up with all participants:
  - Communicate and prioritize issues found during the day.
  - Ensure defect fixes are retested, and communicate when the next set of fixes will be available.
  - Monitor planned vs. actual test execution to ensure testing stays on track (if not, provide a mitigation plan).
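Monitoring planned vs. actual execution at the daily stand-up amounts to comparing two cumulative counts. A minimal sketch, assuming cumulative daily totals are available from the test management tool and assuming a hypothetical 10% shortfall threshold for triggering a mitigation plan (the deck does not give one); `execution_trend` and the sample numbers are illustrative.

```python
from typing import List, Tuple


def execution_trend(planned: List[int], actual: List[int],
                    tolerance: float = 0.10) -> List[Tuple[int, int, int, bool]]:
    """Compare cumulative planned vs. actual executed test cases per UAT day.

    Returns (day, planned, actual, behind) tuples, where `behind` is True when
    actual trails plan by more than `tolerance` (a hypothetical 10% threshold).
    """
    trend = []
    for day, (plan, done) in enumerate(zip(planned, actual), start=1):
        shortfall = (plan - done) / plan if plan else 0.0
        trend.append((day, plan, done, shortfall > tolerance))
    return trend


if __name__ == "__main__":
    # Hypothetical cumulative counts for a three-day UAT event.
    for day, plan, done, behind in execution_trend([300, 600, 900], [290, 520, 700]):
        flag = "needs mitigation plan" if behind else "on track"
        print(f"Day {day}: planned {plan}, actual {done} -> {flag}")
```

Using cumulative counts rather than daily counts means a slow day keeps showing up until the team catches up, which is what the stand-up needs to see.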

UAT Metrics and Reporting

At a minimum, the UAT Metrics Report should include:

- Defects: number open/closed by severity and status (if there are many, list them in priority order)
- Test execution: planned vs. actual, and pass rate
- A breakdown by business area or team, if applicable, as well as overall totals
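The defect portion of the report is a simple tally. A minimal sketch, assuming each defect is exported as a (severity, state) pair with state "Open" or "Closed" and assuming a Critical/High/Medium/Low severity scale; `open_closed_by_severity` is a hypothetical name.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

SEVERITIES = ("Critical", "High", "Medium", "Low")  # assumed severity scale


def open_closed_by_severity(defects: Iterable[Tuple[str, str]]) -> Dict[str, Dict[str, int]]:
    """Count defects as Open/Closed per severity, listed most severe first.

    Each defect is a (severity, state) pair, where state is "Open" or "Closed".
    """
    counts = Counter(defects)
    return {sev: {"Open": counts[(sev, "Open")], "Closed": counts[(sev, "Closed")]}
            for sev in SEVERITIES}


if __name__ == "__main__":
    sample = [("Critical", "Closed"), ("High", "Open"), ("High", "Closed"), ("Low", "Open")]
    for severity, row in open_closed_by_severity(sample).items():
        print(f"{severity:<8} open={row['Open']} closed={row['Closed']}")
```

Running the same function on each business area's defects gives the per-team breakdown, and running it on all defects gives the overall view.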

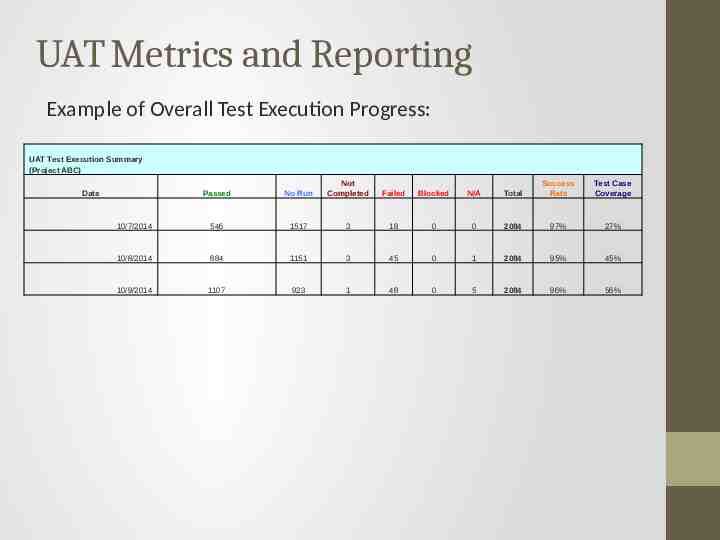

UAT Metrics and Reporting

Example of Overall Test Execution Progress:

UAT Test Execution Summary (Project ABC)

| Date | Passed | No Run | Not Completed | Failed | Blocked | N/A | Total | Success Rate | Test Case Coverage |
|---|---|---|---|---|---|---|---|---|---|
| 10/7/2014 | 546 | 1517 | 3 | 18 | 0 | 0 | 2084 | 97% | 27% |
| 10/8/2014 | 884 | 1151 | 3 | 45 | 0 | 1 | 2084 | 95% | 45% |
| 10/9/2014 | 1107 | 923 | 1 | 48 | 0 | 5 | 2084 | 96% | 56% |

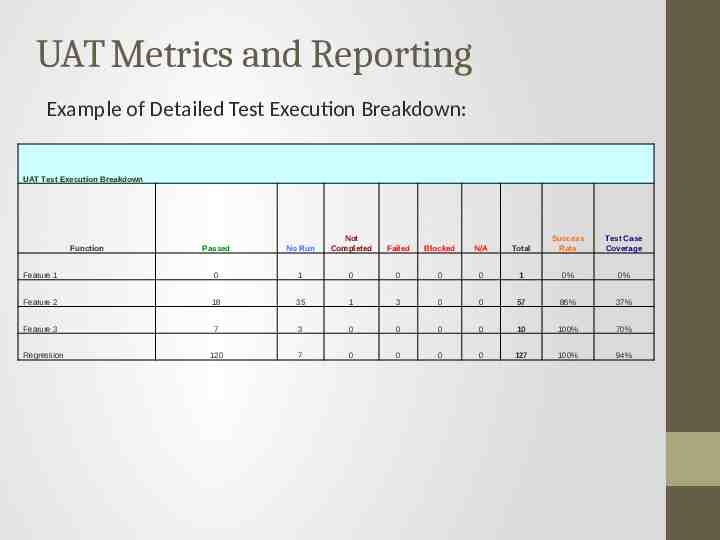

UAT Metrics and Reporting

Example of Detailed Test Execution Breakdown:

UAT Test Execution Breakdown

| Function | Passed | No Run | Not Completed | Failed | Blocked | N/A | Total | Success Rate | Test Case Coverage |
|---|---|---|---|---|---|---|---|---|---|
| Feature 1 | 0 | 1 | 0 | 0 | 0 | 0 | 1 | 0% | 0% |
| Feature 2 | 18 | 35 | 1 | 3 | 0 | 0 | 57 | 86% | 37% |
| Feature 3 | 7 | 3 | 0 | 0 | 0 | 0 | 10 | 100% | 70% |
| Regression | 120 | 7 | 0 | 0 | 0 | 0 | 127 | 100% | 94% |
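Neither execution table states how Success Rate and Test Case Coverage are derived. Working backwards from the rows, Success Rate = Passed / (Passed + Failed) and Test Case Coverage = (Passed + Failed + N/A) / Total reproduce every figure in both tables once rounded to whole percentages. The sketch below uses those formulas, but they are an inference, not a definition from the deck; `execution_metrics` is a hypothetical name, and a row with no pass/fail verdicts (like Feature 1) is reported as 0% rather than dividing by zero.

```python
from typing import NamedTuple


class ExecutionMetrics(NamedTuple):
    total: int
    success_rate: int  # percent, rounded
    coverage: int      # percent, rounded


def execution_metrics(passed: int, no_run: int, not_completed: int,
                      failed: int, blocked: int, na: int) -> ExecutionMetrics:
    total = passed + no_run + not_completed + failed + blocked + na
    judged = passed + failed  # cases with a pass/fail verdict
    success = passed / judged if judged else 0.0
    covered = (passed + failed + na) / total if total else 0.0  # inferred formula
    return ExecutionMetrics(total, round(success * 100), round(covered * 100))


if __name__ == "__main__":
    # Rows from the summary table (10/9/2014) and the Feature 2 breakdown.
    print(execution_metrics(1107, 923, 1, 48, 0, 5))  # total 2084, 96%, 56%
    print(execution_metrics(18, 35, 1, 3, 0, 0))      # total 57, 86%, 37%
```

Whatever formulas a project settles on, writing them down in the UAT Test Plan avoids debates during the event about why two reports disagree.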

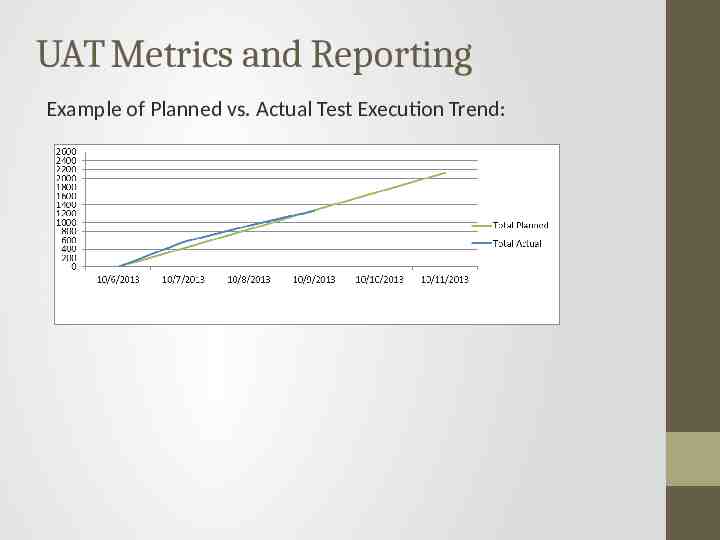

UAT Metrics and Reporting

Example of Planned vs. Actual Test Execution Trend:

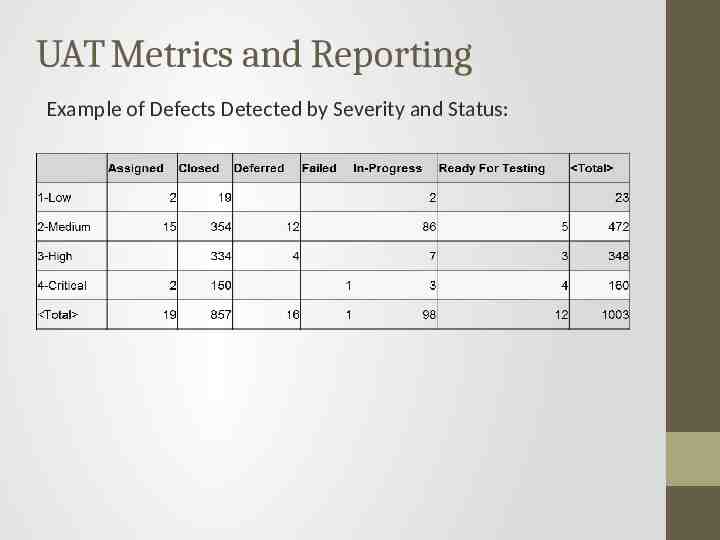

UAT Metrics and Reporting

Example of Defects Detected by Severity and Status:

Post Go-Live: Lessons Learned and Continuous Improvement

- Issues will occur!
- Perform root cause analysis on post-go-live issues to determine what process improvements are needed and whether test cases or data need to be added to or updated in the test suites.
- Conduct a Lessons Learned session with all UAT participants and project stakeholders on what went well and what could have been done better.

Q&A