Morphus: Supporting Online Reconfigurations in Sharded NoSQL Systems

31 Slides1.27 MB

Morphus: Supporting Online Reconfigurations in Sharded NoSQL Systems Mainak Ghosh, Wenting Wang, Gopalakrishna Holla, Indranil Gupta

NoSQL Databases Predicted to become a 3.4B industry by 2018 2

Database Reconfiguration Problem: Changing database or table-level configuration parameters o Primary/Shard Key – MongoDB, Cassandra o Ring size - Cassandra Challenge: Affects a lot of data at once Motivation: o Initially DB configured based on guesses o Sys admin needs to play around with different parameters Seamlessly, and efficiently o Later, as workload changes and business/use case evolves: Change parameters, but do it in a live database 3

Today's Prevalent Solution Existing Solution: Create a new schema, export and re-import data Why Bad? o Massive unavailability of data: every second of outage costs 1.1K at Amazon and 1.5K at Google o Reconfiguration change caused outage at Foursquare o Manual change of primary key at Google: took 2 years and involved 2 dozen teams Need a solution that is automated, seamless and efficient 4

Goals Fast completion time Minimize the amount of data transfer required Highly available CRUD operations should be answered as much as possible with little impact on latency Network Aware Reconfiguration should adapt to underlying network latencies 5

Assumptions Master Slave Replication Range-based Partitioning Flexibility in data assignment MongoDB, RethinkDB, CouchDB, etc. 6

Optimal Algorithms

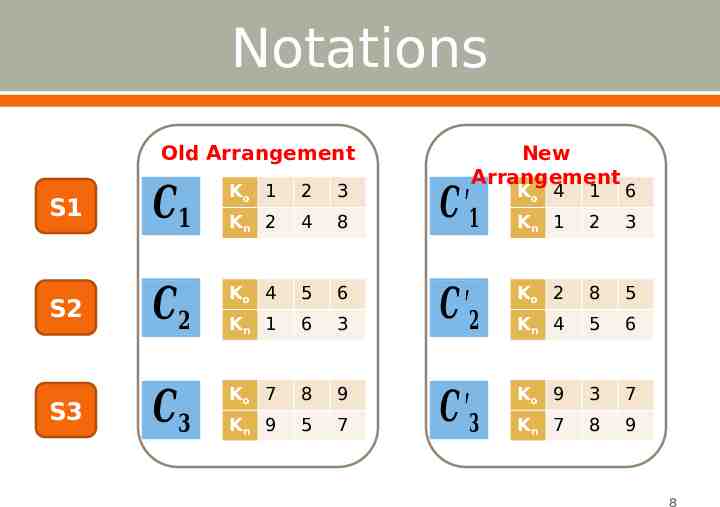

Notations Old Arrangement S1 𝑪𝟏 Ko 1 Kn 2 4 8 S2 𝑪𝟐 Ko 4 5 6 Kn 1 6 3 S3 𝑪𝟑 Ko 7 8 9 Kn 9 5 7 2 3 New Arrangement 𝑪′𝟏 Ko 4 1 6 Kn 1 2 3 𝑪′𝟐 Ko 2 8 5 Kn 4 5 6 𝑪′𝟑 Ko 9 3 7 Kn 7 8 9 8

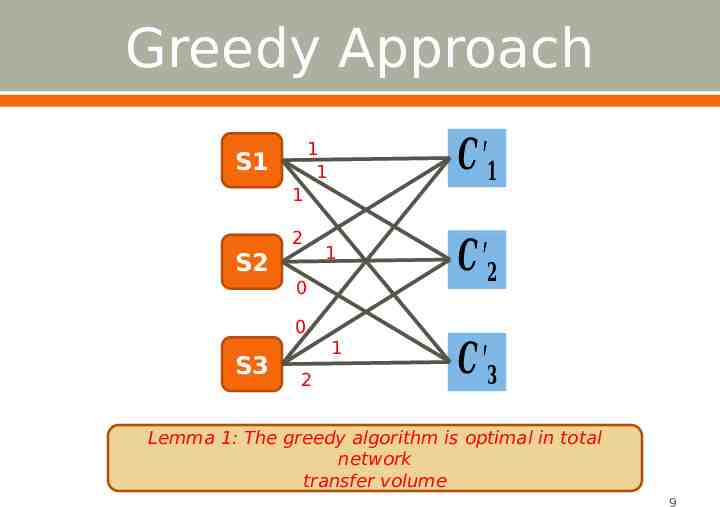

Greedy Approach 𝑪′𝟏 1 1 S1 1 2 S2 1 0 0 S3 1 2 𝑪′𝟐 𝑪′𝟑 Lemma 1: The greedy algorithm is optimal in total network transfer volume 9

Unbalanced Greedy Old Arrangement 𝑪𝟏 Ko 1 2 3 Kn 2 4 8 𝑪𝟑 Ko 7 8 9 Kn 9 5 7 𝑪𝟐 Ko 4 5 6 Kn 1 6 3 New Arrangement 𝑪′𝟏 Ko 4 1 6 Kn 1 2 3 𝑪′𝟐 Ko 2 8 5 Kn 4 5 6 𝑪′𝟑 Ko 9 3 7 Kn 7 8 9 S1 S2 S3 10

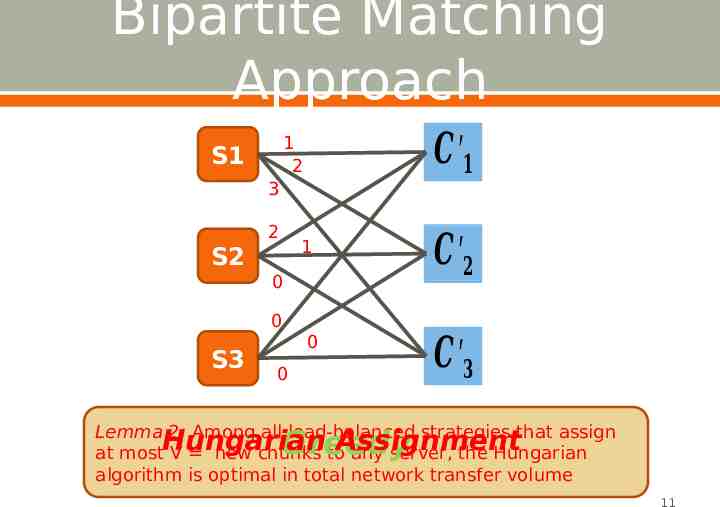

Bipartite Matching Approach 𝑪′𝟏 1 2 S1 3 2 S2 1 0 0 S3 0 0 𝑪′𝟐 𝑪′𝟑 Lemma 2: Among all load-balanced strategies that assign Assignment at mostHungarian V new chunks to any server, the Hungarian algorithm is optimal in total network transfer volume Greedy 11

System Design

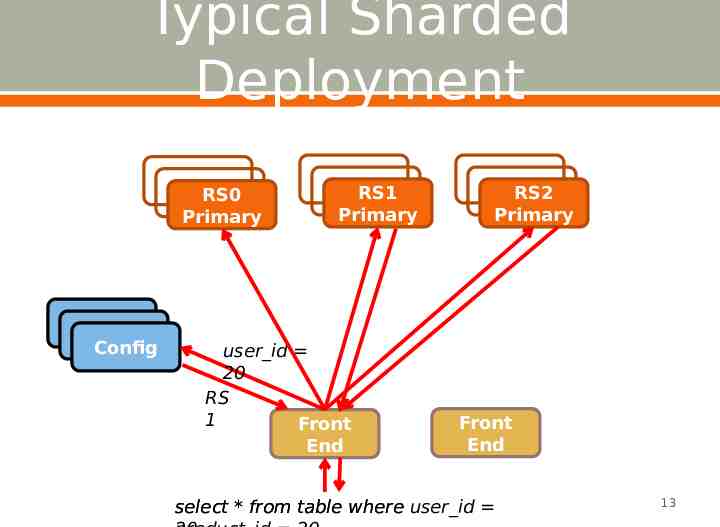

Typical Sharded Deployment RS0 Primary Config RS1 Primary user id 20 RS 1 Front End RS2 Primary Front End select * from table where user id 13

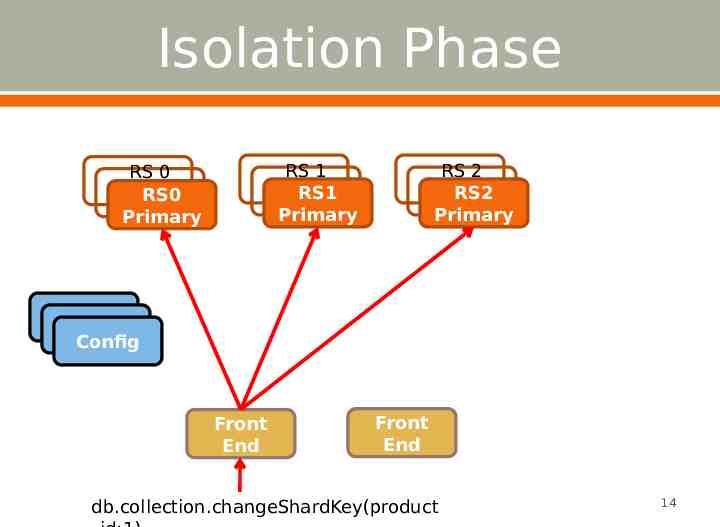

Isolation Phase RS 0 Secondar RS0 y Primary RS 1 Secondar RS1 y Primary RS 2 Secondar RS2 y Primary Config Front End Front End db.collection.changeShardKey(product 14

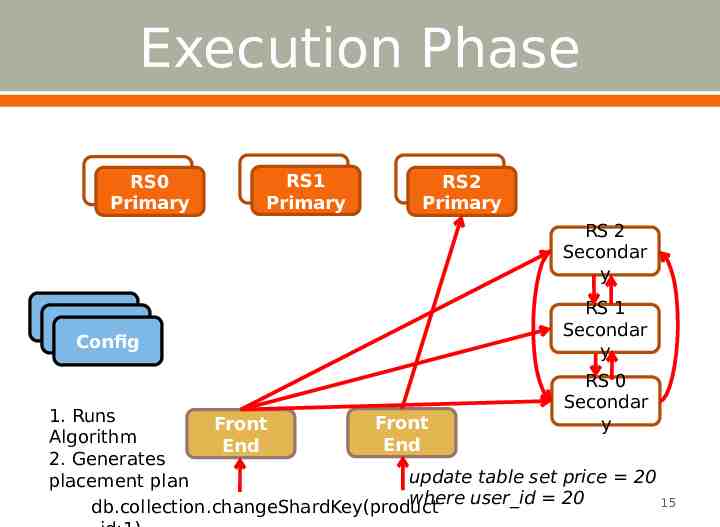

Execution Phase RS0 Primary RS1 Primary RS2 Primary RS 2 Secondar y Config RS 1 Secondar y RS 0 Secondar y 1. Runs Front Front Algorithm End End 2. Generates update table set price 20 placement plan where user id 20 15 db.collection.changeShardKey(product

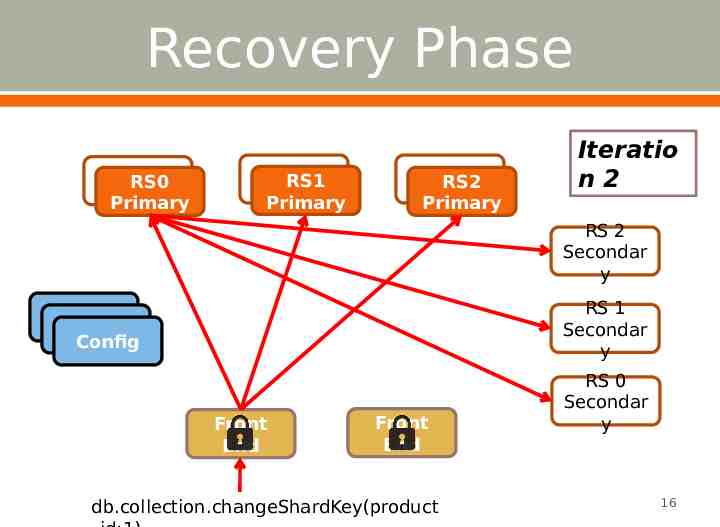

Recovery Phase RS0 Primary RS1 Primary RS2 Primary Iteratio n2 1 RS 2 Secondar y RS 1 Secondar y Config Front End Front End db.collection.changeShardKey(product RS 0 Secondar y 16

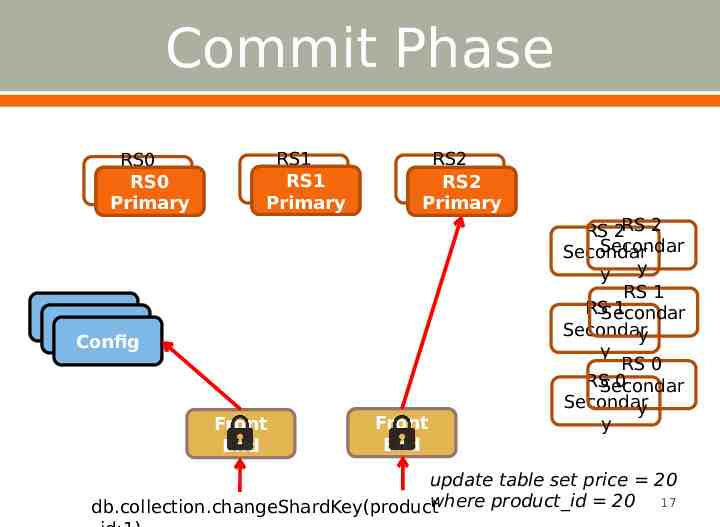

Commit Phase RS0 RS0 Secondar Primary y RS1 Secondar RS1 y Primary RS2 Secondar RS2 y Primary Config Front End Front End RS 2RS 2 Secondar Secondar y y RS 1 RSSecondar 1 Secondary y RS 0 RS 0 Secondar Secondar y y update table set price 20 where product id 20 17 db.collection.changeShardKey(product

Network Awareness

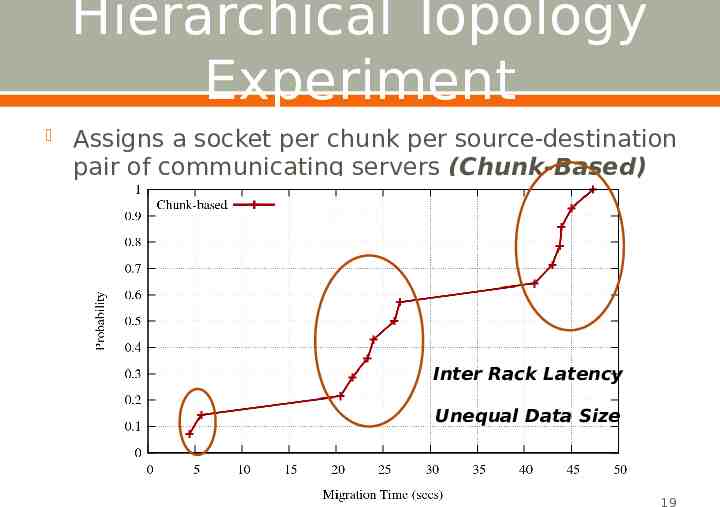

Hierarchical Topology Experiment Assigns a socket per chunk per source-destination pair of communicating servers (Chunk-Based) Inter Rack Latency Unequal Data Size 19

Weighted Fair Sharing Between a pair of source server i and destination server j, number of sockets assigned, : Total amount of data that i needs to transfer to j : Observed round trip latency between i and j This scheme is in contrast to Orchestra[Chowdhury et al] which only fair shares based on data size 20

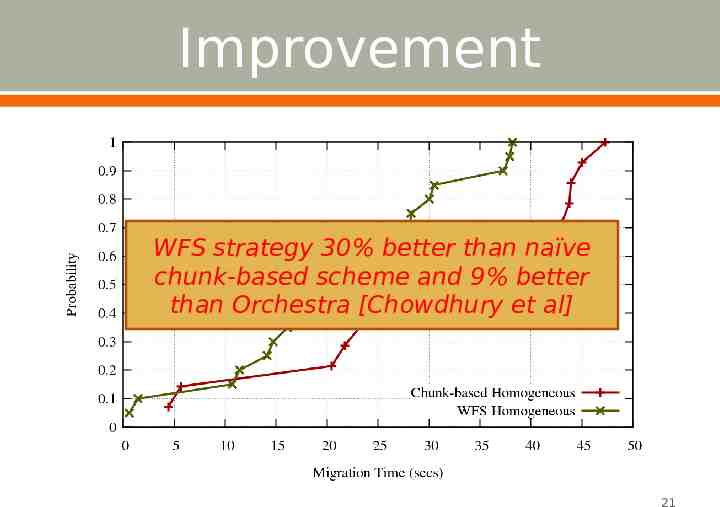

Improvement WFS strategy 30% better than naïve chunk-based scheme and 9% better than Orchestra [Chowdhury et al] 21

Geo-Distributed Optimization Morphus chooses slaves for reconfiguration during first Isolation phase In a geo-distributed setting, naïve choice can lead to bulk transfers over wide area network Solution: Localize bulk transfer by choosing replicas in the same datacenter o Morphus extracts the datacenter information from replica’s metadata. 2x-3x improvement observed in experiments 22

Evaluation

Setup Dataset: Amazon Reviews [Snap]. Cluster: Emulab d710 nodes, 100 Mbps LAN switch and Google Cloud (n1-standard-4 VMs), 1 Gbps network Workload: Custom generator similar to YCSB. Implements Uniform, Zipfian and Latest distribution for key access Morphus: Implemented on top of MongoDB 24

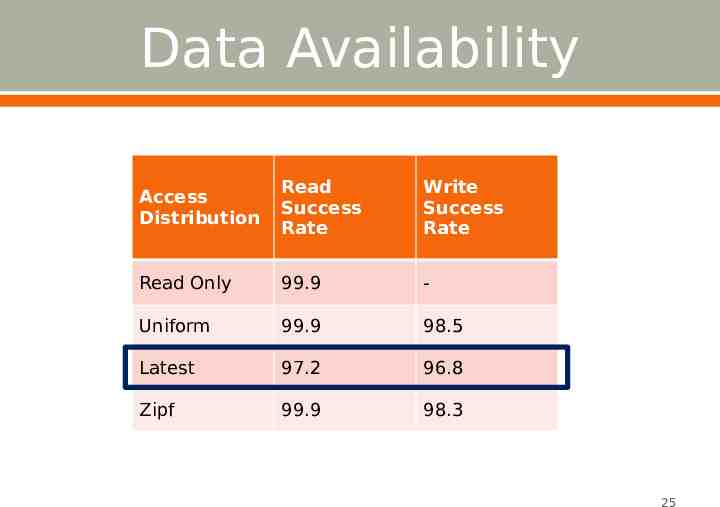

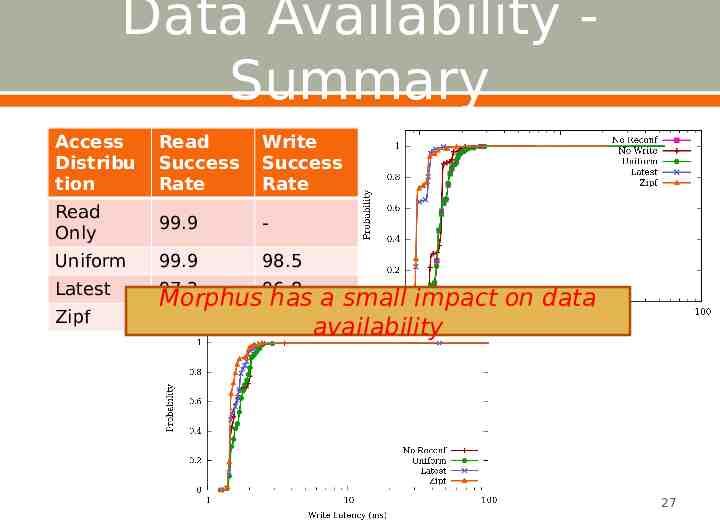

Data Availability Access Distribution Read Success Rate Write Success Rate Read Only 99.9 - Uniform 99.9 98.5 Latest 97.2 96.8 Zipf 99.9 98.3 25

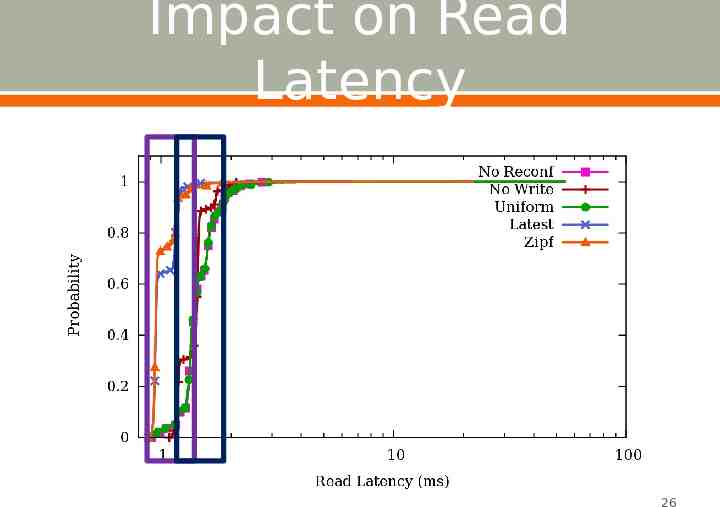

Impact on Read Latency 26

Data Availability Summary Access Distribu tion Read Success Rate Write Success Rate Read Only 99.9 - Uniform 99.9 98.5 Latest 97.2 Zipf Morphus96.8 has a small impact on data 99.9 98.3 availability 27

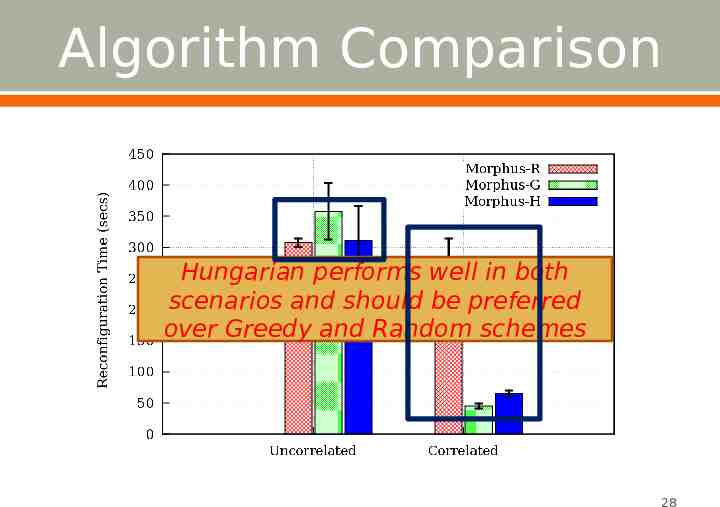

Algorithm Comparison Hungarian performs well in both scenarios and should be preferred over Greedy and Random schemes 28

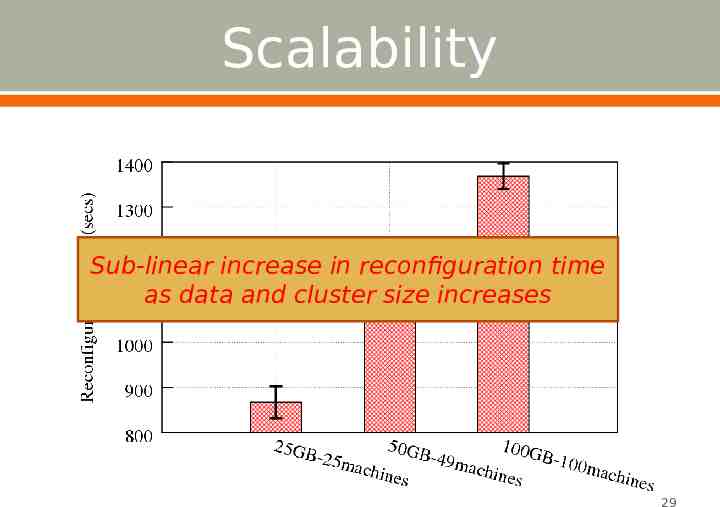

Scalability Sub-linear increase in reconfiguration time as data and cluster size increases 29

Related Work Online schema change [Rae et al.]: Resultant availabilities smaller. Live data migration: Attempted in databases [Albatross, ShuttleDB] and VMs [Bradford et al.]. Similar approach as ours. o Albatross and ShuttleDB ship whole state to a set of empty servers. Reactive reconfiguration proposed by Squall [Elmore et al]: Fully available but takes longer. 30

Takeaways Morphus is a system which allows live reconfiguration of a sharded NoSQL database. Morphus is implemented on top of MongoDB Morphus uses network efficient algorithms for placing chunks while changing shard key Morphus minimally affects data availability during reconfiguration Morphus mitigates stragglers during data migration by optimizing for the underlying network latencies. 31