
Multimedia and Digital Video
By Dr. U. Priya, Head and Assistant Professor of Commerce, Bon Secours College for Women, Thanjavur

Meaning of Multimedia
Multimedia information is more than plain text: it includes graphics, animation, sound and video. One type of multimedia is becoming a key technology for e-commerce: digital video. Typical applications of digital video include video conferencing, video on demand, and distance education. We concentrate on the technology (compression, storage and transport) and on the use of digital video in desktop video conferencing.

Stages of transport in multimedia
1. Image capture / generation: for example by a television camera or a computer.
2. Compression: raw images may be too large, so the data must be compressed to reduce its volume before it is sent.
3. Storage: compressed data are stored on CD-ROM or network storage servers.
4. Transport: speed depends on the characteristics of the medium.
5. Desktop processing and display.

KEY MULTIMEDIA CONCEPTS
Multimedia: the use of digital data in more than one format, such as the combination of text, audio and image data in a computer file. The idea behind multimedia is to digitize traditional media such as words, sounds and motion, and to mix them together with elements of a database.
Multimedia data compression: data compression attempts to pack as much information as possible into a given amount of storage. Compression ratios range from 2:1 to 200:1.

Compression Methods
1. Sector-oriented disk compression: integrated into the operating system, so the compression is invisible to the end user.
2. Backup- or archive-oriented compression: compresses files before they are downloaded over telephone lines.
3. Graphics- and video-oriented compression: compresses graphics and video files before they are downloaded.
4. Compression of data being transmitted over low-speed networks: a technique used in modems and routers.

Data compression in action
Data compression works by eliminating redundancy. In general, a block of text data containing 1000 bits may have an underlying information content of only 100 bits; the remainder is redundant (white space, for example). The goal of compression is to shrink the 1000-bit block toward 100 bits, the size of the underlying information. The same applies to audio and video files.
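
The effect of redundancy can be seen with a small experiment. The sketch below is only an illustration, not part of the original slides: it uses Python's standard `zlib` module, and the repeated phrase is made-up sample data chosen because it is highly redundant.

```python
import zlib

def compression_ratio(data: bytes) -> float:
    """Return original size divided by compressed size."""
    compressed = zlib.compress(data, level=9)
    return len(data) / len(compressed)

# Made-up, highly redundant input: the same phrase repeated many times.
redundant = b"multimedia and digital video " * 200

compressed = zlib.compress(redundant, level=9)
restored = zlib.decompress(compressed)

print(len(redundant), "bytes before,", len(compressed), "bytes after")
print("ratio is roughly %.0f:1" % compression_ratio(redundant))
print("round trip identical:", restored == redundant)
```

Less repetitive input (for example, already-compressed video) would give a much smaller ratio, because there is less redundancy left to remove.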

Compression Techniques
Compression techniques can be divided into two major categories:
I. Lossy: lossy compression means that a given set of data undergoes a loss of accuracy or resolution after a cycle of compression and decompression. It is mainly used for voice, audio and video data. Two popular standards for lossy compression are MPEG and JPEG.
II. Lossless: lossless compression produces output that, once decompressed, is identical to the input. It is mainly used for text and numerical data.
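
The difference between the two categories can be shown in a few lines. This is a minimal sketch under stated assumptions: the sample values and the step size are invented, `lossy_round_trip` is a hypothetical helper, and simple rounding stands in for the far more elaborate lossy steps in MPEG and JPEG.

```python
import zlib

def lossy_round_trip(samples, step):
    """Quantize each sample to a multiple of `step`, then reconstruct it."""
    return [round(s / step) * step for s in samples]

samples = [3, 18, 41, 77, 120, 96, 54, 12]   # made-up 8-bit sample values

# Lossless: decompression returns exactly the input.
raw = bytes(samples)
restored = zlib.decompress(zlib.compress(raw))
print("lossless round trip identical:", restored == raw)

# Lossy: values come back close to the input, but not identical.
approx = lossy_round_trip(samples, step=16)
errors = [abs(a - b) for a, b in zip(samples, approx)]
print(approx)
print("largest error:", max(errors))
```

A larger step discards more detail and leaves fewer distinct values to store, which is the trade-off lossy schemes exploit.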

Multimedia Server
A server is a hardware and software system that turns raw data into usable information and provides it to users when they need it. E-commerce applications require a server to manage application tasks, storage, security, transaction management and scalability.

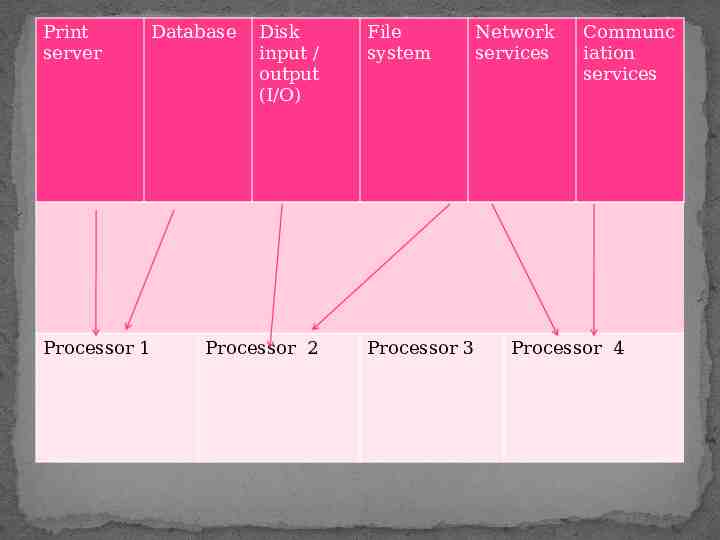

Multiprocessing
Multiprocessing is the concurrent execution of several tasks on multiple processors. It implies the ability to use more than one CPU for executing programs. Processors can be tightly or loosely coupled.
Symmetric multiprocessing
Symmetric multiprocessing treats all processors as equal, i.e. any processor can do the work of any other processor. It dynamically assigns work to any processor.

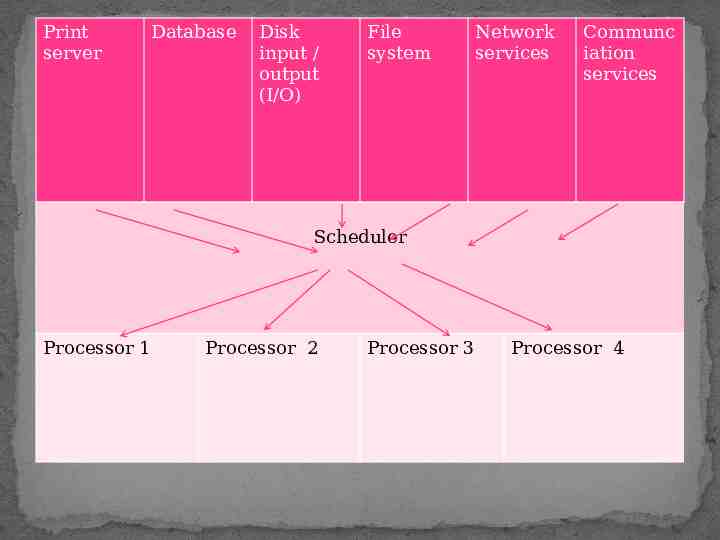

Figure labels: Print server; Database; Disk input/output (I/O); File system; Network services; Communication services; Scheduler; Processor 1; Processor 2; Processor 3; Processor 4.

Multitasking
Multitasking means that the server operating system can run multiple programs and give the illusion that they are running simultaneously by switching control between them. The two types of multitasking are:
1. Preemptive: the operating system uses some criteria to decide how long to allocate to any one task before giving another task a turn. The act of taking control of the processor from one task and giving it to another is called preempting.
2. Non-preemptive: a legacy multitasking technique in which the operating system (OS) allocates the entire central processing unit (CPU) to a single process until the process is completed. The program holds the CPU until it releases it itself or until a scheduled time has passed.
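
The two policies can be compared with a toy simulation. This is only a sketch: the criterion used for preemption here is a fixed time slice (round-robin), which is one possible choice among several, and the task names, work amounts and function names are hypothetical.

```python
from collections import deque

def round_robin(tasks, quantum):
    """Simulate preemptive scheduling with a fixed time slice.

    tasks: dict of task name -> time units of work required
    quantum: maximum time units a task may run before it is preempted
    Returns the list of (task, time_run) slices in execution order.
    """
    queue = deque(tasks.items())
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        run = min(quantum, remaining)
        timeline.append((name, run))
        if remaining > run:
            # Preempt: the unfinished task goes to the back of the queue.
            queue.append((name, remaining - run))
    return timeline

def run_to_completion(tasks):
    """Simulate non-preemptive scheduling: each task keeps the CPU until done."""
    return [(name, need) for name, need in tasks.items()]

jobs = {"print": 3, "database": 5, "network": 2}   # made-up workloads
print(round_robin(jobs, quantum=2))
print(run_to_completion(jobs))
```

In the preemptive run the short "network" task gets the CPU before "database" has finished; in the non-preemptive run it has to wait for both earlier tasks to complete.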

Multithreading
Multithreading is a sophisticated form of multitasking and refers to the ability to support separate paths of execution within a single address space. In this model a process is broken into independently executable tasks called threads.
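
A short sketch of threads sharing one address space, using Python's standard `threading` module. The worker function and the frame counts are hypothetical; the point is only that all four threads update the same in-memory counter, which is why a lock is needed.

```python
import threading

counter = {"frames": 0}          # shared by every thread in this process
lock = threading.Lock()

def decode_frames(n):
    """Hypothetical worker: pretend to decode n frames."""
    for _ in range(n):
        with lock:               # protect the shared counter
            counter["frames"] += 1

threads = [threading.Thread(target=decode_frames, args=(1000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter["frames"])
```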

Figure labels: Print server; Processor 1; Database; Disk input/output (I/O); Processor 2; File system; Processor 3; Network services; Communication services; Processor 4.

Multimedia Storage Technology
Storage technology is becoming a key player in electronic commerce because the storage requirements of modern-day information are enormous. Storage technology can be divided into two types:
1. Network-based (disk arrays)
2. Desktop-based (CD-ROM)

1. Network-based (disk arrays)
Disk arrays store enormous amounts of information and are becoming an important storage technology for firewall servers and large servers.
Small arrays provide 5-10 gigabytes; large arrays provide 50-500 gigabytes.
The technology behind disk arrays is RAID (redundant array of inexpensive disks).
RAID offers a high degree of data capacity, availability, and redundancy.
Current RAIDs use multiple 5 1/2-inch disks.
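
One way a disk array can provide redundancy is parity. The sketch below assumes a parity-based layout, which the slides do not specify (some RAID configurations use mirroring instead), and the block contents and the `xor_blocks` helper are made up for illustration.

```python
from functools import reduce

def xor_blocks(blocks):
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

# Three data disks, each holding one block of the same length (made-up contents).
disks = [b"VIDEO-01", b"AUDIO-02", b"INDEX-03"]
parity = xor_blocks(disks)                 # stored on a fourth disk

# Suppose the second disk fails: rebuild it from the survivors plus parity.
survivors = [disks[0], disks[2], parity]
rebuilt = xor_blocks(survivors)
print(rebuilt == disks[1])
```

Because XOR is its own inverse, any single missing block can be recomputed from the remaining blocks and the parity block.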

2. Desktop-based (CD-ROM)
CD-ROM is the premier desktop storage medium. It is a read-only memory; reading a CD-ROM requires a special drive, the CD-ROM drive. The main advantage is its incredible storage density, which allows a single disc to hold 530 MB as an audio CD or 4.8 GB as a video CD.

CD-ROM Technology Exhibits the Following
1. High information density: with optical encoding, the CD can contain some 600-800 MB of data.
2. Low unit cost: unit cost in large quantities is less than two dollars, because CDs are manufactured by a well-developed process.
3. Read-only memory: a CD-ROM is read-only, so it cannot be written or erased.
4. Modest random access performance: performance of CDs is better than that of floppies because of optical encoding methods.

The process of reading a CD proceeds as follows:
The CD-ROM's spiral surface contains shallow depressions called pits. The pits scatter light.
The spaces between the indentations are called lands. The lands reflect light.
The laser projects a beam of light, which is focused by the focusing coils.
The laser beam penetrates a protective layer of plastic and strikes the reflective aluminum layer on the surface.
Light striking a land reflects back to the detector.
Light pulses are translated into small electrical voltages to generate 0's and 1's.

DIGITAL VIDEO AND ELECTRONIC COMMERCE
Digital video is binary data that represents a sequence of frames, each representing one image. The frames must be shown at about 30 frames per second.
Digital video as a core element:

Digital video

Characteristics of Digital Video
It can be manipulated, transmitted and reproduced with no discernible image degradation.
It allows more flexible routing through packet-switching technology.
The development of digital video compression technology has enabled new applications in the consumer electronics, multimedia computer and communications markets.
It poses interesting technical challenges: it is a constant-rate, continuous-time medium rather than a static one such as text or images.
Compressed video takes about 10 MB per minute.

Digital video compression/decompression
Digital video compression takes advantage of the fact that a substantial amount of redundancy exists in video. An hour-long video that would require 100 CDs uncompressed would require only one CD once compressed. The process of compression and decompression is commonly referred to as just compression, but it involves both processes.
Decompression is inexpensive because, once compressed, a digital video can be stored and decompressed many times. Adaptations of the international standards are called codecs. Most codecs in use today are lossy.

Types of Codecs
Most codec schemes can be categorized into two types:
1. Hybrid
2. Software-based
1. Hybrid: hybrid codecs use a combination of dedicated processors and software, and require specialized add-on hardware. The best examples of hybrid codecs are MPEG (Moving Picture Experts Group) and JPEG (Joint Photographic Experts Group).

MPEG (Moving Picture Experts Group)
The Moving Picture Experts Group is an ISO group whose purpose is to produce high-quality compression of digital video.
MPEG I (Moving Picture Experts Group I)
MPEG I defines a bit stream for compressed video and audio optimized for a bandwidth of 1.5 Mbps, which is the data rate of audio CDs and DATs.
The standard consists of three parts: audio, video, and systems. The systems part allows the synchronization of video and audio.
MPEG I is implemented in commercial chips.
The resolution of the frames in MPEG I is 352 x 240 pixels at 30 frames per second.
The video compression ratio is 26:1.
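
The MPEG I figures above can be checked with a little arithmetic. In this sketch the frame size, frame rate and 26:1 ratio come from the slide; the 12 bits per pixel is an assumption (it corresponds to 4:2:0 chroma subsampling, which the slide does not mention), and the function name is illustrative.

```python
WIDTH, HEIGHT = 352, 240        # MPEG I frame size from the text
FPS = 30                        # frames per second from the text
BITS_PER_PIXEL = 12             # assumption: 4:2:0 chroma subsampling
RATIO = 26                      # video compression ratio from the text

def raw_bitrate(width, height, fps, bits_per_pixel):
    """Uncompressed video bit rate in bits per second."""
    return width * height * fps * bits_per_pixel

raw = raw_bitrate(WIDTH, HEIGHT, FPS, BITS_PER_PIXEL)
compressed = raw / RATIO
print("raw: %.1f Mbps" % (raw / 1e6))
print("compressed: %.2f Mbps" % (compressed / 1e6))
```

Under that assumption the compressed video stream lands below the 1.5 Mbps total, which leaves room for the audio and systems parts of the bit stream.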

MPEG II (Moving Picture Experts Group II)
MPEG II specifies compression signals for broadcast-quality video. It defines a bit stream for high-quality "entertainment-level" digital video.
MPEG II supports a transmission range of about 2-15 Mbps over cable, satellite and other transmission channels.
The standard consists of three parts: audio, video, and systems. The systems part allows the synchronization of video and audio.
MPEG II is implemented in commercial chips.
The resolution of the frames in MPEG II is 720 x 480 pixels at 60 frames per second.
The data rate of MPEG II is 4 to 8 Mbps.
Its future promise lies in the rapid evolution of cable TV news channels.
Two other MPEG standards are:
1. MPEG-3 (1920 x 1080 resolution, data rates of 20 to 40 Mbps)
2. MPEG-4 (consisting of speech and video synthesis)

JPEG (Joint Photographic Experts Group)
JPEG is a still-image compression algorithm defined by the Joint Photographic Experts Group, and it serves as the foundation for digital video.
JPEG is used in two ways in the digital video world:
1. as a part of MPEG
2. as motion JPEG
The JPEG standard has been widely adopted for video sequences.
JPEG compression is fast and can capture full-screen, full-rate video.
JPEG was designed for compressing either full-color or gray-scale digital images of real-world scenes.

JPEG is a highly sophisticated technique that uses three steps:
The first step is a technique known as DCT (discrete cosine transform).
Next, a process called quantization manipulates the data and compresses strings of identical pixels by the run-length encoding method.
Finally, the image is compressed using a variant of Huffman encoding.
A useful property of JPEG is that the degree of lossiness can be adjusted.
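
The first two steps can be sketched on a single row of pixels. This is a simplified illustration, not the JPEG algorithm itself: real JPEG applies a two-dimensional DCT to 8 x 8 blocks and uses a table of step sizes, whereas this sketch uses a one-dimensional DCT and a single step size, and it omits the Huffman stage. The pixel values and function names are made up.

```python
import math

def dct_1d(block):
    """Type-II DCT of a list of samples (a 1-D stand-in for JPEG's 2-D DCT)."""
    n = len(block)
    out = []
    for k in range(n):
        scale = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
        total = sum(x * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
                    for i, x in enumerate(block))
        out.append(scale * total)
    return out

def quantize(coeffs, step):
    """Divide by a step size and round; this is where information is lost."""
    return [round(c / step) for c in coeffs]

def run_length(values):
    """Collapse runs of identical values into [value, count] pairs."""
    runs = []
    for v in values:
        if runs and runs[-1][0] == v:
            runs[-1][1] += 1
        else:
            runs.append([v, 1])
    return runs

row = [52, 55, 61, 66, 70, 61, 64, 73]     # made-up pixel values
coeffs = quantize(dct_1d(row), step=10)
print(coeffs)
print(run_length(coeffs))
```

Raising `step` pushes more coefficients to zero, which lengthens the runs and shrinks the output at the cost of image accuracy; that is the adjustable lossiness mentioned above.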

DESKTOP VIDEO PROCESSING
Video on the desktop is a key element in turning a computer into a true multimedia platform. The PC has steadily become a highly suitable platform for video. Desktop video processing includes upgrade kits, sound cards, video playback accelerator boards, video capture hardware and editing software. Microphones, speakers, joysticks, and other peripherals are also needed.

Desktop video hardware for playback and capture
Desktop video requires a substantial amount of disk space and considerable CPU horsepower. It also requires specialized hardware to digitize and compress the incoming analog signal from video tapes.

Video playback
Two lines of video playback products have become available in the marketplace: video ASIC chips and board-level products.
Broadly speaking, two types of accelerator boards are available:
1. Video
2. Graphics

Video capture and editing
Video capture boards are essential for digitizing incoming video for use in multimedia presentations or video conferencing.
Video capture programs also include video-editing functions that allow users to crop, resize and convert formats, and to add special effects for both audio and video such as fade-in, emboss, zoom and echo.
Developers are creating next-generation editing tools to meet the needs of business presenters and video enthusiasts.
The best graphical editing tools make complex procedures accessible even to novice users.

Desktop video application software
The text that appears in the movie.
Any PC that is to handle digital video must have a digital-video engine available.
Two significant digital video engines are:
1. Apple's QuickTime
2. Microsoft's Video for Windows

Both are software only; they do not need any special hardware.
Apple's QuickTime
QuickTime is a set of software programs from Apple that allows the operating system to play motion video sequences on a PC without specialized hardware.
QuickTime has its own set of compression/decompression drivers.
QuickTime was the first widely available desktop video technology to treat video as a standard data type. Video data can therefore be cut, copied, and pasted like text in a page composition program.
A QuickTime movie can have multiple sound tracks and multiple video tracks.
The QuickTime engine also supports synchronization.

Microsoft's Video for Windows
Video for Windows is a set of software programs from Microsoft that allows the operating system to play motion video sequences on a PC without specialized hardware.
Video for Windows has its own set of compression/decompression drivers.
Microsoft chose a frame-based model, in contrast to QuickTime's time-based model.

Desktop video conferencing
Desktop video conferencing is gaining momentum as a communication tool.
Face-to-face video conferences are already a common practice, allowing distant colleagues to communicate without the expense and inconvenience of traveling.
Early video conferencing used costly equipment to provide room-based conferencing. It is now spreading fast thanks to desktop video conferencing, in which participants sit at their own desks, in their own offices, and call up others using their PCs, much like a telephone.

The Economics
Three factors have made desktop video conferencing viable:
Price: the price has fallen from 500,000 to 500-1,000.
Standards: standards allow interoperable communications between machines from different vendors.
Compression: it uses better and faster compression methods.

Types of desktop video conferencing
Desktop video conferencing systems coming onto the market today are divided into three types, according to the network they use:
1. POTS (plain old telephone lines)
2. ISDN
3. Internet

Using POTS for video conferencing
POTS systems are especially attractive for point-to-point conferencing because no additional monthly charges are assessed and special arrangements with the telephone company are unnecessary.
The drawback of a POTS solution is that it is restricted to the top speed of today's modems, 28.8 Kbps.
It needs software; once the software is properly installed, users can pipe video, audio, and data down a standard telephone line.

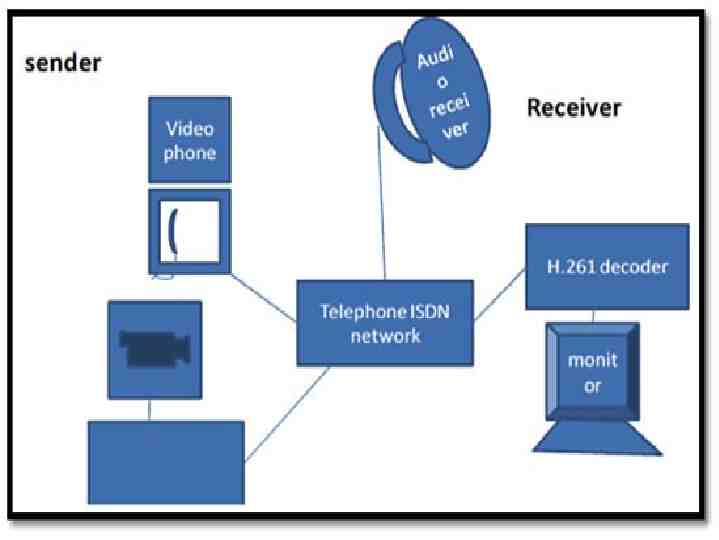

Using ISDN for video conferencing
ISDN lines offer considerably more bandwidth, up to 128 Kbps, but they require the installation of special hardware.
The use of ISDN has been largely restricted to companies; it is rare in private residences.
The following figure shows the basic architecture for television or video conferencing using ISDN network transport switching. This architecture is commonly found in videophones.
The networks required for video conferencing are fiber optic cable or analog POTS.
For video compression and decompression, ISDN networks use H.261, a standard specified by the International Telegraph and Telephone Consultative Committee (CCITT).

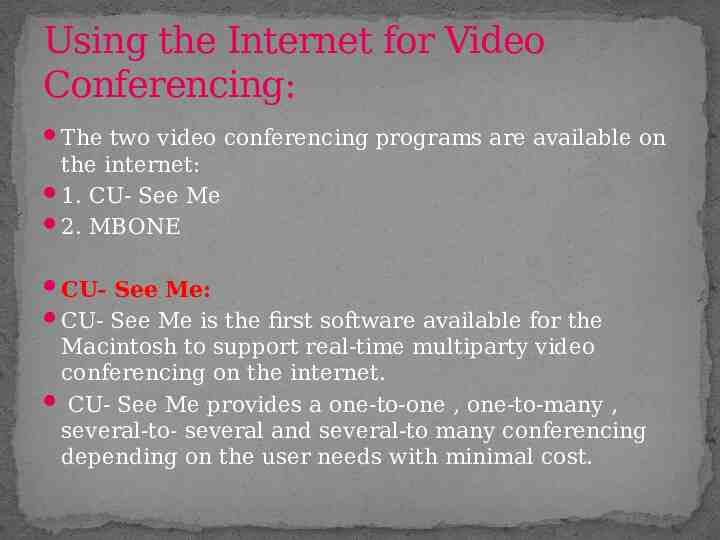
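
To get a feel for what the two line speeds mean for video, the sketch below estimates an upper bound on frame rate. The 28.8 Kbps and 128 Kbps figures come from the slides; the size of one compressed frame is purely an assumption chosen for illustration, and the calculation ignores audio, data and protocol overhead.

```python
LINKS_BPS = {"telephone modem": 28_800, "ISDN": 128_000}   # top speeds from the text
FRAME_BITS = 16_000   # assumption: one compressed frame of about 2 KB

def frames_per_second(link_bps, frame_bits):
    """Upper bound on frame rate if the whole link carried video only."""
    return link_bps / frame_bits

for name, bps in LINKS_BPS.items():
    print(name, frames_per_second(bps, FRAME_BITS))
```

With these assumed numbers the modem link sustains fewer than two frames per second while ISDN manages several, which is why compression quality matters so much at these bandwidths.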

Using the Internet for Video Conferencing
Two video conferencing programs are available on the Internet:
1. CU-SeeMe
2. MBONE
CU-SeeMe
CU-SeeMe is the first software available for the Macintosh to support real-time multiparty video conferencing on the Internet.
CU-SeeMe provides one-to-one, one-to-many, several-to-several and several-to-many conferencing, depending on the user's needs, at minimal cost.

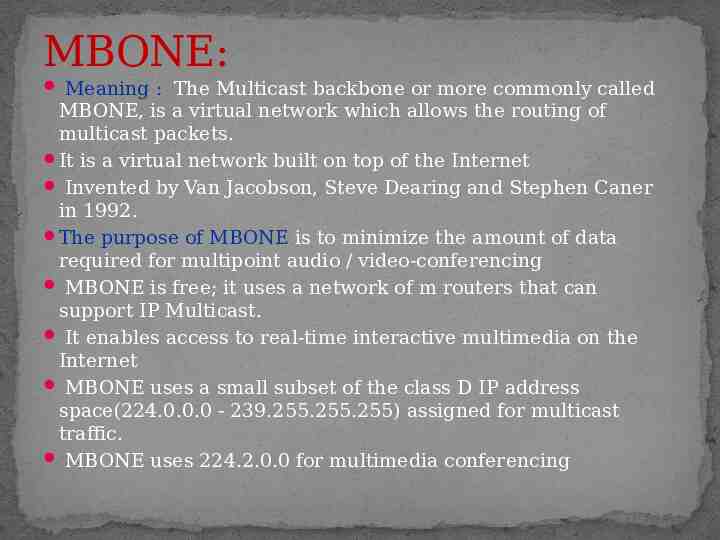

MBONE
Meaning: the Multicast Backbone, more commonly called MBONE, is a virtual network built on top of the Internet that allows the routing of multicast packets.
It was invented by Van Jacobson, Steve Deering and Stephen Casner in 1992.
The purpose of MBONE is to minimize the amount of data required for multipoint audio and video conferencing.
MBONE is free; it uses a network of mrouters (multicast routers) that support IP Multicast.
It enables access to real-time interactive multimedia on the Internet.
MBONE uses a small subset of the class D IP address space (224.0.0.0 - 239.255.255.255) assigned for multicast traffic.
MBONE uses 224.2.0.0 for multimedia conferencing.
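
The address ranges above can be checked with Python's standard `ipaddress` module. This is a small sketch: the class D range is taken from the slide, while treating the 224.2.0.0 conferencing range as a /16 block is an assumption made here for illustration, and the `classify` helper and sample addresses are hypothetical.

```python
import ipaddress

CLASS_D = ipaddress.ip_network("224.0.0.0/4")       # 224.0.0.0 - 239.255.255.255
# Assumption: the 224.2.0.0 conferencing range is treated here as a /16 block.
CONFERENCING = ipaddress.ip_network("224.2.0.0/16")

def classify(address):
    """Return a label describing where an IPv4 address falls."""
    ip = ipaddress.ip_address(address)
    if ip in CONFERENCING:
        return "multimedia conferencing"
    if ip in CLASS_D:
        return "multicast"
    return "unicast or other"

for addr in ["224.2.127.254", "239.1.2.3", "192.168.1.10"]:
    print(addr, "->", classify(addr))
```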

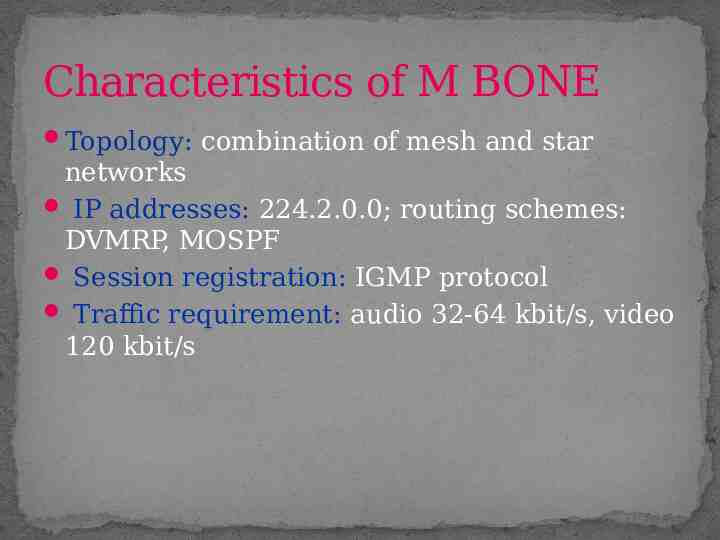

Characteristics of MBONE
Topology: combination of mesh and star networks
IP addresses: 224.2.0.0
Routing schemes: DVMRP, MOSPF
Session registration: IGMP protocol
Traffic requirement: audio 32-64 kbit/s, video 120 kbit/s

MBONE tools
Video conferencing: vic -t ttl destination-host/port (supports: NV, H.261, CellB, MPEG, MJPEG)
Audio conferencing: vat -t ttl destination-host/port (supports: LPC, PCMU, DVI4, GSM)
Whiteboard: wb destination-host/port/ttl
Session directory: sdr

FRAME RELAY
Frame Relay is a data link layer, digital packet-switching network protocol designed to connect Local Area Networks (LANs) and transfer data across Wide Area Networks (WANs).
Frame Relay shares some of the same underlying technology as X.25 and achieved some popularity in the United States as the underlying infrastructure for Integrated Services Digital Network (ISDN) services sold to business customers.

How Frame Relay Works
Frame Relay supports multiplexing of traffic from multiple connections over a shared physical link, using special-purpose hardware components including frame routers, bridges, and switches that package data into individual Frame Relay messages.
Each connection uses a 10-bit Data Link Connection Identifier (DLCI) for unique channel addressing. Two connection types exist:

Permanent Virtual Circuits (PVC): for persistent connections intended to be maintained for long periods of time, even if no data is actively being transferred.
Switched Virtual Circuits (SVC): for temporary connections that last only for the duration of a single session.
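
The 10-bit DLCI mentioned above is carried in the frame's address field. The sketch below packs and unpacks it using the common two-byte address layout (six DLCI bits in the first byte, four in the second). The slides do not describe this layout, so treat it and the congestion and discard flags (FECN, BECN, DE) as background assumptions; the function names are illustrative.

```python
def pack_address(dlci, fecn=False, becn=False, de=False, cr=False):
    """Build a 2-byte Frame Relay address field for a 10-bit DLCI."""
    if not 0 <= dlci <= 0x3FF:
        raise ValueError("DLCI must fit in 10 bits (0-1023)")
    # First byte: upper 6 DLCI bits, C/R bit, address-extension bit = 0.
    high = (((dlci >> 4) & 0x3F) << 2) | (int(cr) << 1)
    # Second byte: lower 4 DLCI bits, FECN, BECN, DE, address-extension bit = 1.
    low = (((dlci & 0x0F) << 4) | (int(fecn) << 3) | (int(becn) << 2)
           | (int(de) << 1) | 1)
    return bytes([high, low])

def unpack_dlci(address):
    """Recover the DLCI from a 2-byte address field."""
    high, low = address[0], address[1]
    return (((high >> 2) & 0x3F) << 4) | ((low >> 4) & 0x0F)

field = pack_address(100)
print(field.hex(), unpack_dlci(field))
```

Ten bits give 1024 possible identifiers per link, which is why `pack_address` rejects values above 1023.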

Frame Relay achieves better performance than X.25 at a lower cost, primarily by not performing any error correction (which is instead offloaded to other components of the network), greatly reducing network latency. It also supports variable-length packet sizes for more efficient utilization of network bandwidth.
Frame Relay operates over fiber optic or ISDN lines and can support different higher-level network protocols, including Internet Protocol (IP).

Thank you