
HDF Cloud Services: Moving HDF5 to the Cloud

John Readey, The HDF Group

Outline

- Brief review of HDF5
- Motivation for HDF Cloud Services
- The HDF REST API
- h5serv – REST API reference implementation
- Storage for data in the cloud
- HDF Scalable Data Service (HSDS) – HDF at cloud scale

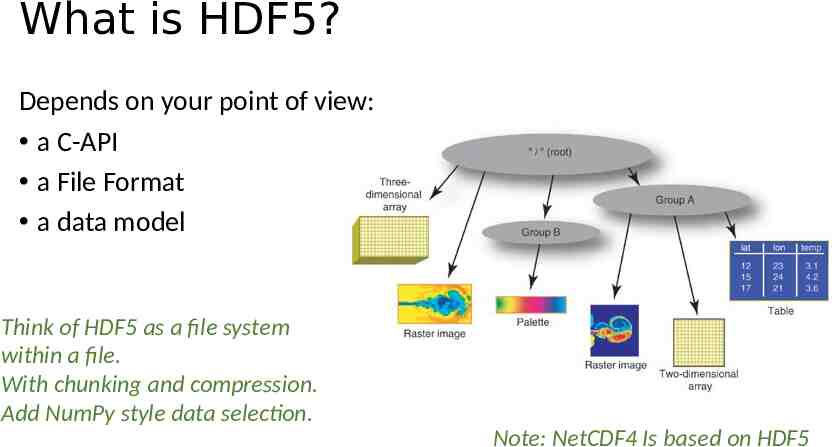

What is HDF5?

It depends on your point of view:

- a C API
- a file format
- a data model

Think of HDF5 as a file system within a file, with chunking and compression, plus NumPy-style data selection.

Note: NetCDF4 is based on HDF5.

HDF5 Features

Some nice things about HDF5:

- Sophisticated type system
- Highly portable binary format
- Hierarchical objects (directed graph)
- Compression
- Fast data slicing/reduction
- Attributes
- Bindings for C, Fortran, Java, Python

Things HDF5 doesn't do (out of the box):

- Scalable analytics (other than MPI)
- Distributed
- Multiple writer/multiple reader
- Fine-level access control
- Query/search
- Web accessible

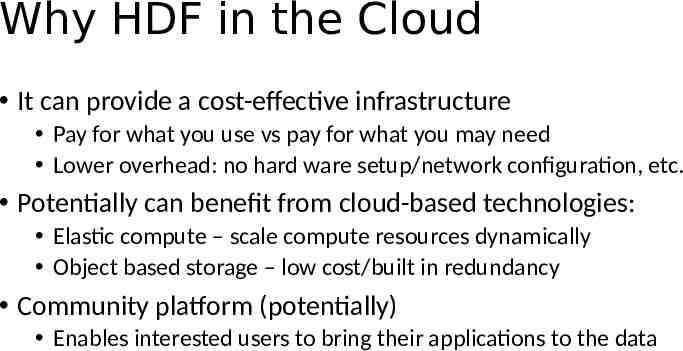

Why HDF in the Cloud

- It can provide a cost-effective infrastructure:
  - Pay for what you use vs. pay for what you may need
  - Lower overhead: no hardware setup, network configuration, etc.
- It can potentially benefit from cloud-based technologies:
  - Elastic compute: scale compute resources dynamically
  - Object-based storage: low cost, built-in redundancy
- Community platform (potentially):
  - Enables interested users to bring their applications to the data

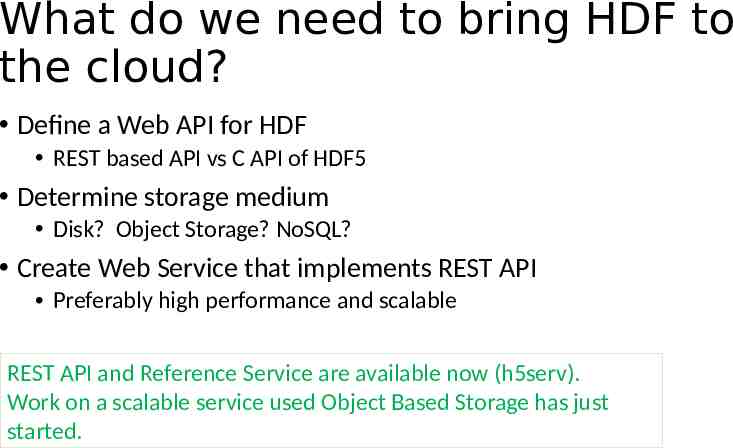

What do we need to bring HDF to the cloud?

- Define a Web API for HDF
  - A REST-based API vs. the C API of HDF5
- Determine the storage medium
  - Disk? Object storage? NoSQL?
- Create a Web service that implements the REST API
  - Preferably high-performance and scalable

The REST API and a reference service (h5serv) are available now. Work on a scalable service using object-based storage has just started.

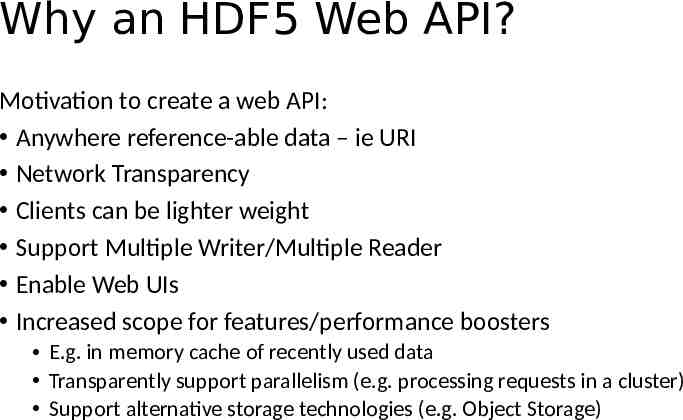

Why an HDF5 Web API?

Motivation to create a web API:

- Data referenceable from anywhere, i.e. by a URI
- Network transparency
- Clients can be lighter weight
- Support multiple writer/multiple reader
- Enable web UIs
- Increased scope for features/performance boosters, e.g.:
  - In-memory cache of recently used data
  - Transparent support for parallelism (e.g. processing requests in a cluster)
  - Support for alternative storage technologies (e.g. object storage)

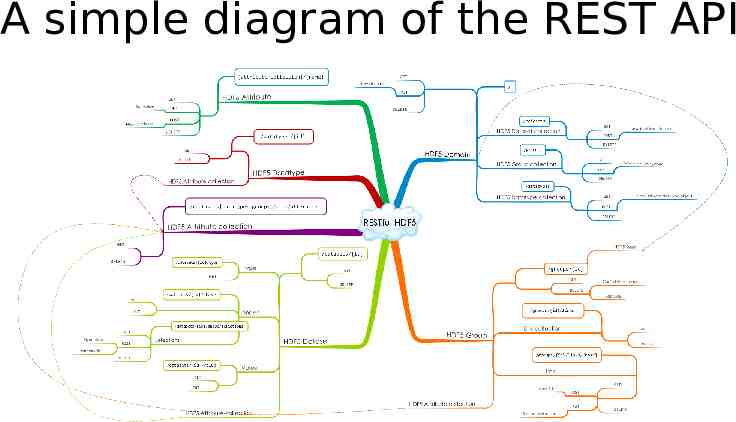

A simple diagram of the REST API

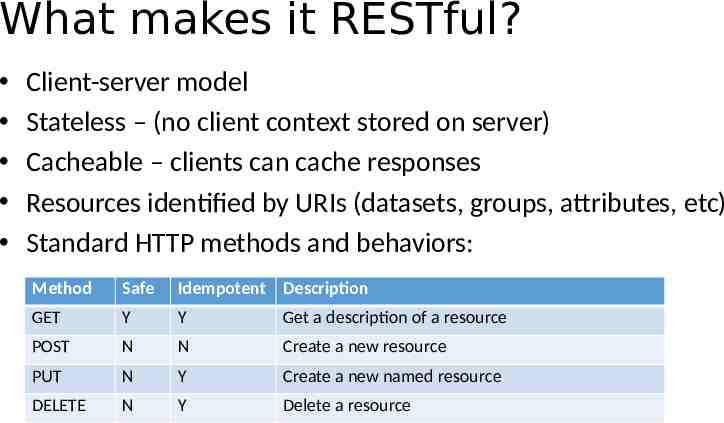

What makes it RESTful?

- Client-server model
- Stateless (no client context stored on the server)
- Cacheable: clients can cache responses
- Resources identified by URIs (datasets, groups, attributes, etc.)
- Standard HTTP methods and behaviors:

| Method | Safe | Idempotent | Description |
|--------|------|------------|-------------|
| GET | Y | Y | Get a description of a resource |
| POST | N | N | Create a new resource |
| PUT | N | Y | Create a new named resource |
| DELETE | N | Y | Delete a resource |
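To make the Safe and Idempotent columns concrete, here is a minimal sketch built on a toy in-memory store. The class `ToyResourceStore` and its methods are hypothetical illustrations, not part of h5serv: repeating a POST creates a new resource each time, while repeating a PUT or DELETE on the same name leaves the server in the same state.

```python
import uuid


class ToyResourceStore:
    """Hypothetical in-memory stand-in for a REST server's resource table."""

    def __init__(self):
        self.resources = {}

    def get(self, key):
        # Safe: reading never changes server state.
        return self.resources.get(key)

    def post(self, body):
        # Not idempotent: every call mints a new identifier (h5serv also
        # identifies created objects by UUID).
        key = str(uuid.uuid4())
        self.resources[key] = body
        return key

    def put(self, name, body):
        # Idempotent: the client chooses the name, so repeating the call
        # still leaves exactly one resource with that content.
        self.resources[name] = body
        return name

    def delete(self, name):
        # Idempotent: deleting an already-deleted resource leaves the same state.
        self.resources.pop(name, None)


store = ToyResourceStore()
body = {"shape": 10, "type": "H5T_IEEE_F32LE"}

store.post(body)
store.post(body)
print(len(store.resources))  # 2: two POSTs created two resources

store.put("mydset", body)
store.put("mydset", body)
print(len(store.resources))  # 3: the repeated PUT added nothing new

store.delete("mydset")
store.delete("mydset")
print(len(store.resources))  # 2
```

On a real server the second DELETE would typically return an error status, but the resulting server state is the same, which is what idempotency refers to.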

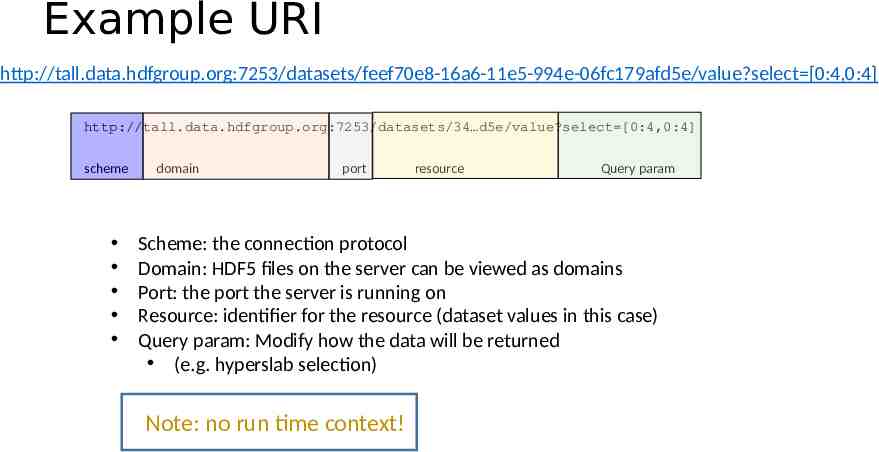

Example URI

`http://tall.data.hdfgroup.org:7253/datasets/feef70e8-16a6-11e5-994e-06fc179afd5e/value?select=[0:4,0:4]`

- Scheme: the connection protocol (`http`)
- Domain: HDF5 files on the server can be viewed as domains (`tall.data.hdfgroup.org`)
- Port: the port the server is running on (`7253`)
- Resource: identifier for the resource (dataset values in this case)
- Query param: modifies how the data will be returned (e.g. hyperslab selection)

Note: no run-time context!
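Because the URI carries everything the server needs, a client or server can take it apart with standard URL tools. The sketch below, using only Python's `urllib.parse`, splits the example URI into its parts and turns the hyperslab string into Python slices. It assumes the query string has the form `select=[0:4,0:4]`; the helper `parse_select` is hypothetical and handles only bare indices and `start:stop[:step]` per dimension.

```python
from urllib.parse import urlsplit, parse_qs


def parse_select(select):
    """Turn a hyperslab string like "[0:4,0:4]" into a tuple of slices."""
    slices = []
    for dim in select.strip("[]").split(","):
        fields = dim.split(":")
        if len(fields) == 1:
            # A bare index selects one element along this dimension.
            i = int(fields[0])
            slices.append(slice(i, i + 1))
        else:
            # start:stop or start:stop:step; empty fields mean "default".
            slices.append(slice(*(int(f) if f else None for f in fields)))
    return tuple(slices)


uri = ("http://tall.data.hdfgroup.org:7253/datasets/"
       "feef70e8-16a6-11e5-994e-06fc179afd5e/value?select=[0:4,0:4]")
parts = urlsplit(uri)

print(parts.scheme)    # http
print(parts.hostname)  # tall.data.hdfgroup.org (the HDF5 "domain")
print(parts.port)      # 7253
print(parts.path)      # /datasets/feef70e8-16a6-11e5-994e-06fc179afd5e/value

select = parse_qs(parts.query)["select"][0]
print(parse_select(select))  # (slice(0, 4, None), slice(0, 4, None))
```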

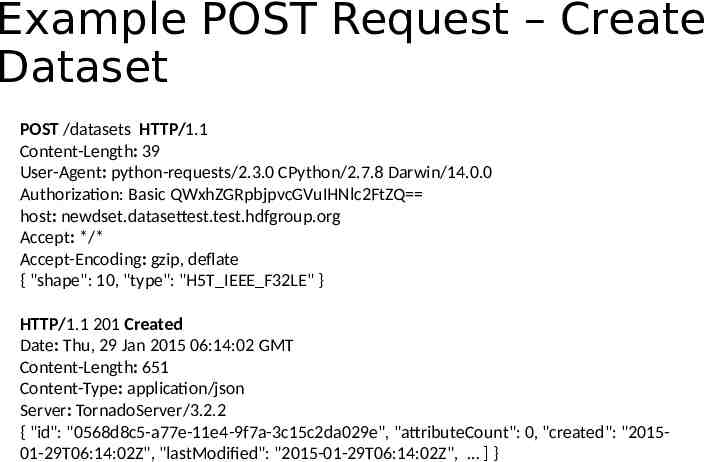

Example POST Request – Create Dataset

Request:

    POST /datasets HTTP/1.1
    Content-Length: 39
    User-Agent: python-requests/2.3.0 CPython/2.7.8 Darwin/14.0.0
    Authorization: Basic QWxhZGRpbjpvcGVuIHNlc2FtZQ
    host: newdset.datasettest.test.hdfgroup.org
    Accept: */*
    Accept-Encoding: gzip, deflate

    {"shape": 10, "type": "H5T_IEEE_F32LE"}

Response:

    HTTP/1.1 201 Created
    Date: Thu, 29 Jan 2015 06:14:02 GMT
    Content-Length: 651
    Content-Type: application/json
    Server: TornadoServer/3.2.2

    {
      "id": "0568d8c5-a77e-11e4-9f7a-3c15c2da029e",
      "attributeCount": 0,
      "created": "2015-01-29T06:14:02Z",
      "lastModified": "2015-01-29T06:14:02Z",
      ...
    }
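The slide's request was sent with python-requests; the sketch below builds an equivalent request with the standard library's `urllib.request` instead, to show which pieces matter. It assumes the server picks the domain from the `Host` header (here taken from the URL) and uses the HDF5 predefined type name `H5T_IEEE_F32LE`; with that spelling the JSON body is exactly 39 bytes, matching the `Content-Length` header. "Aladdin:open sesame" is the well-known example credential from the HTTP authentication RFCs, not a real account. The request is only constructed, not sent.

```python
import base64
import json
import urllib.request

# Request body: a 1-D dataset of 10 little-endian 32-bit floats.
# json.dumps's default separators produce ", " and ": ".
body = json.dumps({"shape": 10, "type": "H5T_IEEE_F32LE"}).encode("utf-8")

# HTTP Basic auth is base64("user:password").
token = base64.b64encode(b"Aladdin:open sesame").decode("ascii")

req = urllib.request.Request(
    "http://newdset.datasettest.test.hdfgroup.org/datasets",
    data=body,
    method="POST",
    headers={
        "Content-Type": "application/json",
        "Authorization": "Basic " + token,
    },
)

print(req.get_method())  # POST
print(len(body))         # 39, matching the slide's Content-Length
print(req.host)          # newdset.datasettest.test.hdfgroup.org

# urllib.request.urlopen(req) would send it; the host above comes from the
# slide and is not assumed to be reachable.
```

A `201 Created` response then carries the new dataset's UUID in its `id` field, which later requests use in URIs such as `/datasets/<id>/value`.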

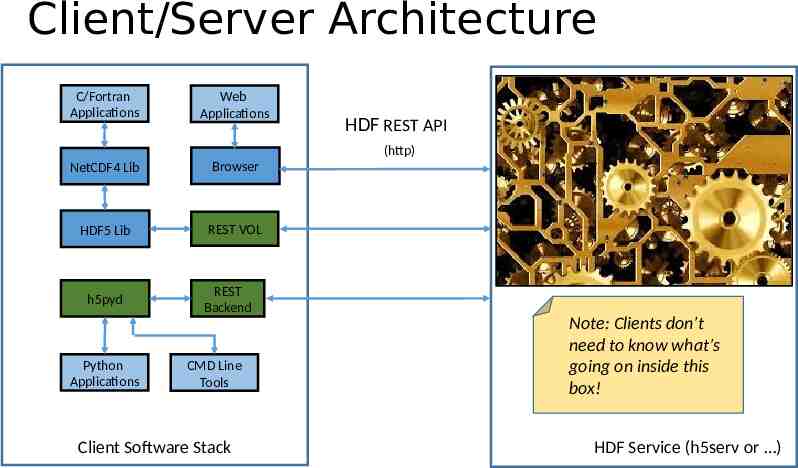

Client/Server Architecture

[Figure: client software stack. Components shown: C/Fortran applications, NetCDF4 lib, HDF5 lib, REST VOL; Python applications, h5pyd; web applications, browser; command-line tools; REST backend. All connect over the HDF REST API (HTTP) to the HDF Service (h5serv or …).]

Note: clients don't need to know what's going on inside the service box!

Reference Implementation – h5serv

- Open-source implementation of the HDF REST API
- Get it at: https://github.com/HDFGroup/h5serv
- First release in 2015; many features added since then
- Easy to set up and configure
- Runs on Windows/Linux/Mac
- Not intended for heavy production use:
  - The implementation is single-threaded
  - Each request is completed before the next one is processed

H5serv Highlights

- Written in Python using the Tornado framework (uses h5py and the HDF5 library)
- REST-based API
- HTTP requests/responses in JSON or binary
- Full CRUD (create/read/update/delete) support
- Most HDF5 features (compound types, compression, chunking, links)
- Content directory
- Self-contained web server
- Open source (except the web UI)
- UUID identifiers for groups/datasets/datatypes
- Authentication and/or public access
- Object-level access control (read/write control per object)
- Query support

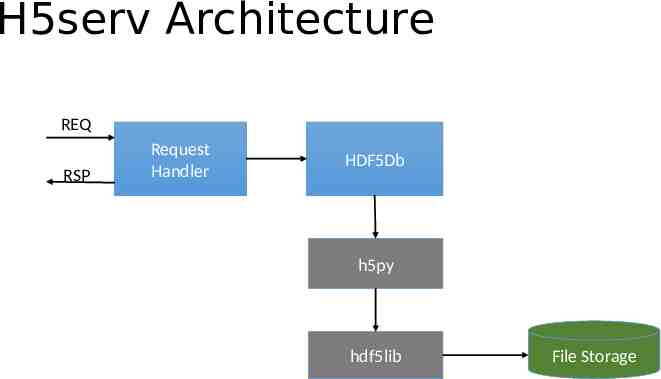

H5serv Architecture

[Figure: requests (REQ) and responses (RSP) pass through the Request Handler, which uses HDF5Db, built on h5py and the HDF5 library, over file storage.]

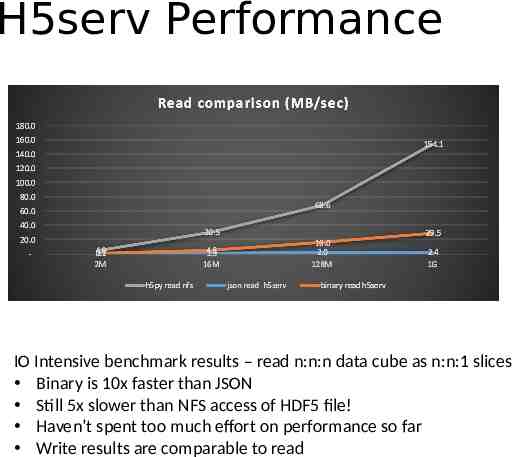

H5serv Performance

IO-intensive benchmark results: read an n×n×n data cube as n×n×1 slices.

- Binary is 10x faster than JSON
- Still 5x slower than NFS access of the HDF5 file!
- Haven't spent much effort on performance so far
- Write results are comparable to read
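One plausible contributor to the JSON/binary gap is visible without a server: JSON renders every number as text that must be generated and parsed, while a binary body is just the packed values. The sketch below compares the two encodings for one 100×100 slice of float32 values using only the standard library. It measures size and round-tripping, not h5serv's actual timings; the `{"value": ...}` body shape is an assumption for illustration, and the binary body uses the machine's native byte order, whereas a real protocol must fix one.

```python
import array
import json

# A 100 x 100 slice of float32 values, flattened in row-major order.
values = array.array("f", (i * 0.5 for i in range(100 * 100)))

# JSON body: a nested list of numbers rendered as text.
rows = [values[r * 100:(r + 1) * 100].tolist() for r in range(100)]
json_body = json.dumps({"value": rows}).encode("utf-8")

# Binary body: the raw 4-byte floats, no per-value formatting.
binary_body = values.tobytes()

print(len(binary_body))  # 40000: 4 bytes per value
print(len(json_body))    # larger, and every number must be parsed back

# Decoding the binary body is a single bulk copy.
decoded = array.array("f")
decoded.frombytes(binary_body)
assert decoded == values
```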

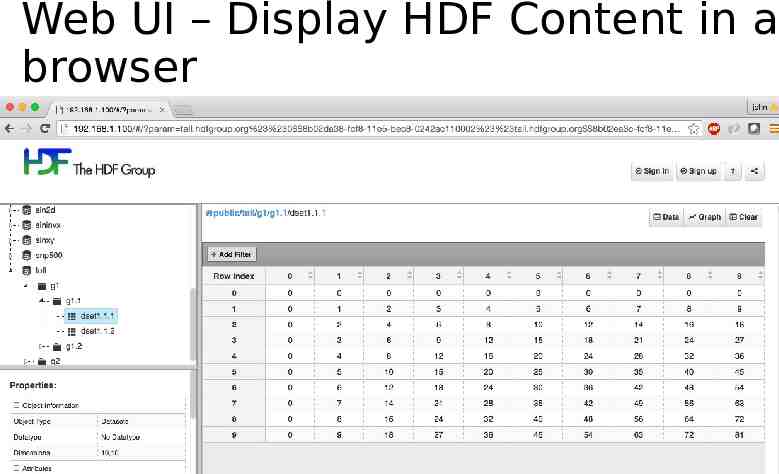

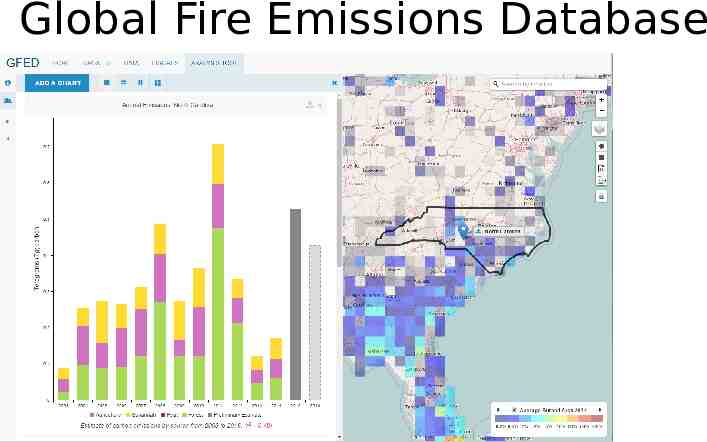

Sample Applications

Even though h5serv has limited scalability, some interesting applications have been built using it. A couple of examples:

- The HDF Group is developing an AJAX-based HDF viewer for the web
- Anika Cartas at NASA Goddard developed a "Global Fire Emissions Database"; this is a web-based app as well
- Ellen Johnson has created sample MATLAB scripts using the REST API (stay tuned for her talk)
- h5pyd: an h5py-compatible Python SDK; see https://github.com/HDFGroup/h5pyd
- Command-line tools: coming soon

Web UI – Display HDF Content in a browser

Global Fire Emissions Database

H5pyd – Python Client for REST API

- h5py-like client library for Python apps
- HDF5 library not needed on the client
- Calls to HDF5 in h5py are replaced by HTTP requests to h5serv
- Provides most of the functionality of the h5py high-level library
- The same code can work with local h5py (to files) or h5pyd (to the REST API)
- Extensions for HDF REST API-specific features, e.g. query support

Future work: HDF5 REST VOL, a library for C/Fortran clients that provides the HDF5 API with a REST backend.

CMD Line Tools

Tools for common admin tasks:

- List files ("domains" in HDF REST API parlance) hosted by the service
- Update permissions
- Download content as local HDF5 files
- Upload local HDF5 files
- Output content of an HDF5 domain (similar to the h5dump or h5ls command-line tools)

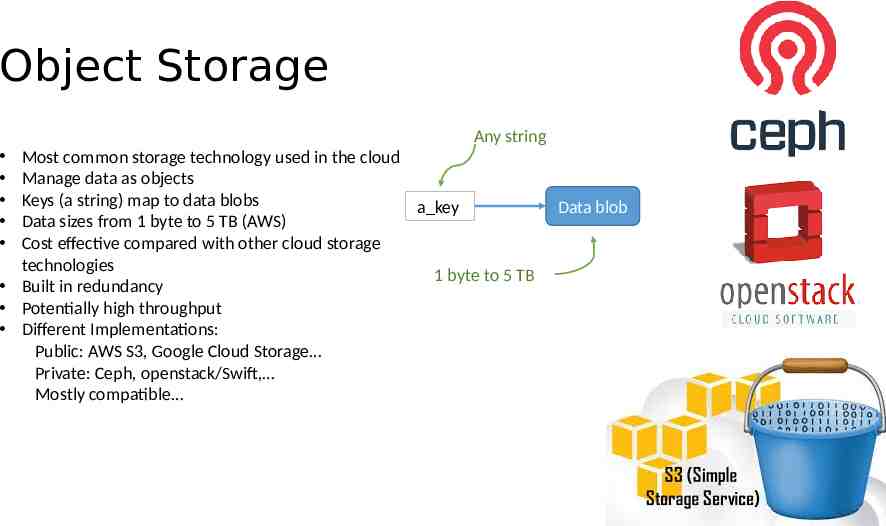

Object Storage

- Most common storage technology used in the cloud
- Manages data as objects: keys (any string) map to data blobs
- Data sizes from 1 byte to 5 TB (AWS)
- Cost-effective compared with other cloud storage technologies
- Built-in redundancy
- Potentially high throughput
- Different implementations (mostly compatible):
  - Public: AWS S3, Google Cloud Storage
  - Private: Ceph, OpenStack Swift

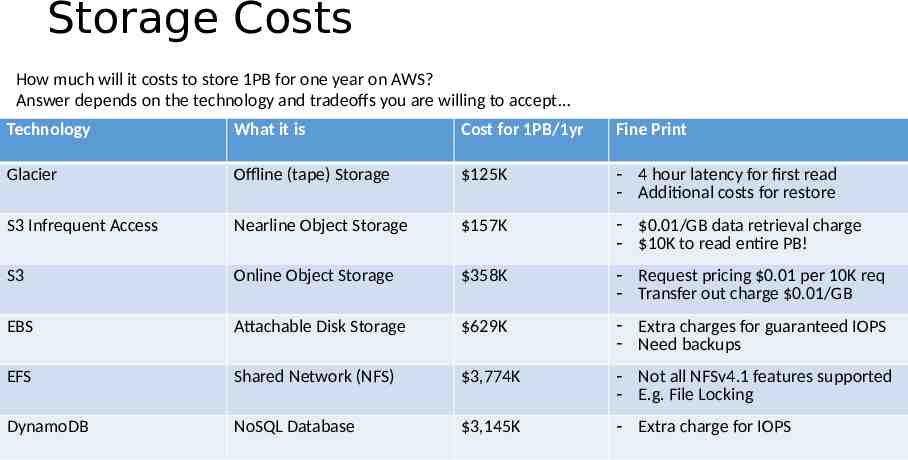

Storage Costs

How much will it cost to store 1 PB for one year on AWS? The answer depends on the technology and the trade-offs you are willing to accept.

| Technology | What it is | Cost for 1 PB/1 yr | Fine print |
|------------|------------|--------------------|------------|
| Glacier | Offline (tape) storage | $125K | 4-hour latency for first read; additional costs for restore |
| S3 Infrequent Access | Nearline object storage | $157K | $0.01/GB data retrieval charge ($10K to read the entire PB!) |
| S3 | Online object storage | $358K | Request pricing: $0.01 per 10K requests; transfer-out charge: $0.01/GB |
| EBS | Attachable disk storage | $629K | Extra charges for guaranteed IOPS; need backups |
| EFS | Shared network (NFS) | $3,774K | Not all NFSv4.1 features supported (e.g. file locking) |
| DynamoDB | NoSQL database | $3,145K | Extra charge for IOPS |
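The fine print is where access costs hide. The short calculation below reproduces the "$10K to read the entire PB" figure for S3 Infrequent Access, and applies the slide's S3 request and transfer-out rates to a full read. It assumes decimal units (1 PB = 1,000,000 GB, as cloud price sheets use) and, for the request count, a hypothetical uniform object size; the rates are the ones quoted on the slide, not current AWS pricing.

```python
GB_PER_PB = 1_000_000  # decimal units, as cloud price sheets use


def s3_ia_full_read_cost(pb, retrieval_per_gb=0.01):
    """Retrieval charge to read `pb` petabytes from S3 Infrequent Access."""
    return pb * GB_PER_PB * retrieval_per_gb


def s3_full_read_cost(pb, object_size_mb, per_10k_requests=0.01,
                      transfer_per_gb=0.01):
    """(request cost, transfer-out cost) to read `pb` petabytes from S3,
    assuming the data is stored as objects of `object_size_mb` MB each."""
    gb = pb * GB_PER_PB
    n_requests = gb * 1000 / object_size_mb  # one GET per object
    request_cost = n_requests / 10_000 * per_10k_requests
    transfer_cost = gb * transfer_per_gb
    return request_cost, transfer_cost


print(s3_ia_full_read_cost(1))  # about 10,000 dollars
print(s3_full_read_cost(1, 1))  # about (1,000, 10,000) dollars with 1 MB objects
```

The request component scales inversely with object size: halving the object size doubles the number of GETs, which is one reason the choice of chunk size matters once each chunk becomes its own storage object.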

Object Storage Challenges for HDF

- Not POSIX!
- High latency (~0.25 s) per request
- Not write/read consistent
- High throughput needs some tricks (use many async requests)
- Request charges can add up (public cloud)

For HDF5, using the HDF5 library directly on an object storage system is a non-starter. We will need an alternative solution.
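The "many async requests" trick works because per-request latency overlaps when requests are in flight at the same time. The sketch below simulates this with `asyncio`: `fake_get` is a hypothetical stand-in for an object-store GET that only sleeps, and the latency is shortened to 0.05 s so the example runs quickly. A semaphore caps the number of concurrent requests, as a real client would to avoid overwhelming the store or its own connection pool.

```python
import asyncio
import time

LATENCY_S = 0.05  # simulated per-request latency (the slide cites ~0.25 s)


async def fake_get(key):
    """Hypothetical stand-in for an object-store GET: waits, then returns data."""
    await asyncio.sleep(LATENCY_S)
    return f"<bytes of {key}>"


async def fetch_all(keys, max_in_flight=64):
    # Cap concurrency so we never open an unbounded number of requests.
    sem = asyncio.Semaphore(max_in_flight)

    async def one(key):
        async with sem:
            return await fake_get(key)

    # gather() runs the requests concurrently and preserves input order.
    return await asyncio.gather(*(one(k) for k in keys))


keys = [f"chunk_{i}" for i in range(20)]
start = time.perf_counter()
results = asyncio.run(fetch_all(keys))
elapsed = time.perf_counter() - start

print(len(results))  # 20
# Fetching one at a time would take about 20 * 0.05 = 1.0 s; overlapping
# the waits brings this close to a single request's latency.
print(f"{elapsed:.2f} s")
```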

How to store HDF5 data in an object store?

Idea: store each HDF5 file as an object store object.

- Read on demand
- Update locally, then write the entire file back to the store

But:

- Slow: need to read the entire file for each read
- Consistency issues for updates
- Limit on maximum file size (AWS: 5 TB)

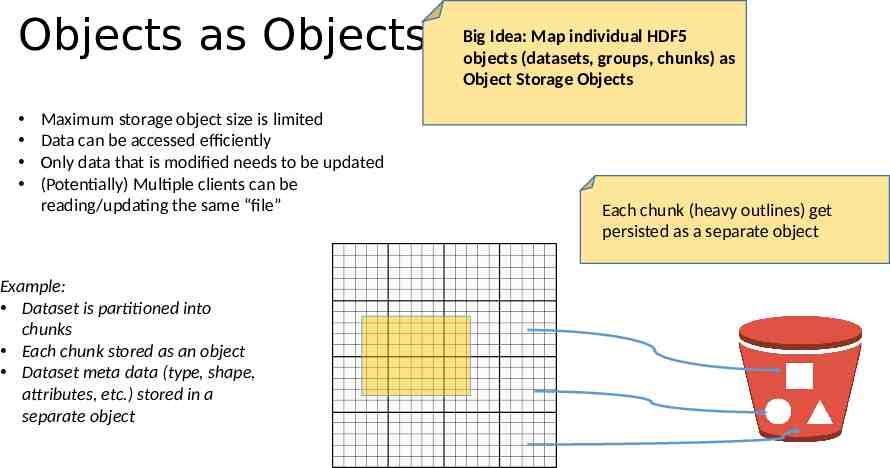

Objects as Objects!

Big idea: map individual HDF5 objects (datasets, groups, chunks) to object storage objects.

- Maximum storage object size is limited
- Data can be accessed efficiently
- Only data that is modified needs to be updated
- (Potentially) multiple clients can be reading/updating the same "file"

Example: a dataset is partitioned into chunks, and each chunk (heavy outlines in the figure) is persisted as a separate object. Dataset metadata (type, shape, attributes, etc.) is stored in a separate object.
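With one storage object per chunk, a read only needs the objects whose chunks overlap the selection. The sketch below computes those chunks for a hyperslab and names them with a key scheme. The key format in `chunk_key` is hypothetical, chosen for illustration; it is not the layout HSDS uses. Selections are given as `(start, stop)` pairs with `stop` exclusive, as in NumPy slicing, and are assumed to be non-empty.

```python
from itertools import product


def chunks_for_selection(chunk_shape, selection):
    """Return the chunk grid coordinates touched by a hyperslab selection."""
    ranges = []
    for (start, stop), c in zip(selection, chunk_shape):
        # Chunk index of the first and last selected element in this dimension.
        ranges.append(range(start // c, (stop - 1) // c + 1))
    return list(product(*ranges))


def chunk_key(dset_id, coords):
    # Hypothetical key scheme: dataset id plus the chunk's grid coordinates.
    return f"{dset_id}/chunks/" + "_".join(str(i) for i in coords)


dset_id = "feef70e8-16a6-11e5-994e-06fc179afd5e"
chunk_shape = (10, 10)  # e.g. a (100, 100) dataset split into a 10 x 10 grid

# select=[0:4,0:4] falls inside one chunk: a single object to fetch.
print([chunk_key(dset_id, c)
       for c in chunks_for_selection(chunk_shape, ((0, 4), (0, 4)))])

# A selection straddling a chunk boundary in the first dimension touches two.
print(chunks_for_selection(chunk_shape, ((5, 15), (0, 4))))  # [(0, 0), (1, 0)]
```

Compared with storing the whole file as one object, the first request above reads one chunk-sized object instead of the entire file, and an update to those values rewrites only that object.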

HDF Scalable Data Service (HSDS)

A highly scalable implementation of the HDF REST API.

Goals:

- Support any sized repository
- Any number of users/clients
- Any request volume
- Provide data as fast as the client can pull it in
- Targeted for AWS but portable to other public/private clouds
- Cost-effective:
  - Use AWS S3 as primary storage
  - Decouple storage and compute costs
  - Elastically scale compute with usage

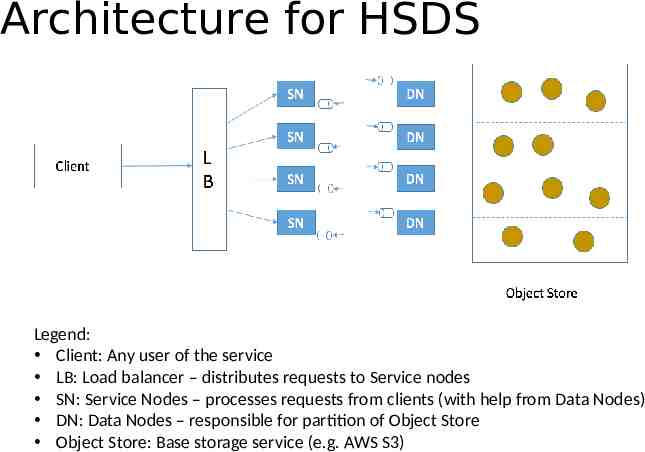

Architecture for HSDS

Legend:

- Client: any user of the service
- LB: load balancer; distributes requests to service nodes
- SN: service nodes; process requests from clients (with help from data nodes)
- DN: data nodes; each is responsible for a partition of the object store
- Object store: base storage service (e.g. AWS S3)
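If each data node owns a partition of the object store, every service node needs the same rule for finding a key's owner. One simple way to get that, sketched below, is to hash the key with a stable hash and take it modulo the number of data nodes. This hash-mod-N scheme is an assumption for illustration, not the HSDS design. It uses MD5 rather than Python's built-in `hash()`, which is randomized per process for strings and would give different answers on different nodes.

```python
import hashlib


def data_node_for(key, num_data_nodes):
    """Map an object-store key to the index of the data node that owns it."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an integer, then reduce mod N.
    return int.from_bytes(digest[:8], "big") % num_data_nodes


keys = [f"dset/chunks/{i}_0" for i in range(8)]
for k in keys:
    print(k, "->", data_node_for(k, 4))
```

A drawback of plain modulo partitioning is that changing the number of data nodes reassigns most keys to different nodes; when nodes are added or removed often, consistent hashing limits that reshuffling to roughly the share of keys owned by the nodes that changed.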

HSDS Architecture Highlights

- DNs provide a read/write-consistent layer on top of AWS S3
- DNs also serve as a data cache (improving performance and lowering S3 request costs)
- SNs deterministically know which DNs are needed to serve a given request
- The number of DNs/SNs can grow or shrink depending on demand
- Minimal operational costs would be 1 SN, 1 DN, and data storage costs for S3
- Query operations can run across all data nodes in parallel
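A data node acting as a cache saves a billable storage request on every hit. The sketch below is a minimal least-recently-used (LRU) read cache in front of an arbitrary fetch function, counting how many reads actually reach storage. `ChunkCache` is hypothetical and covers reads only; the consistency role described above would also require the node to handle writes.

```python
from collections import OrderedDict


class ChunkCache:
    """Minimal LRU read cache in front of an object store (hypothetical sketch).

    `fetch` is any callable that reads an object by key; each call to it
    stands for one billable storage request.
    """

    def __init__(self, fetch, capacity):
        self.fetch = fetch
        self.capacity = capacity
        self.entries = OrderedDict()
        self.store_reads = 0

    def get(self, key):
        if key in self.entries:
            # Hit: mark as most recently used and skip the storage request.
            self.entries.move_to_end(key)
            return self.entries[key]
        # Miss: read from storage, then evict the least recently used entry if full.
        self.store_reads += 1
        value = self.fetch(key)
        self.entries[key] = value
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)
        return value


backing = {f"chunk_{i}": bytes([i]) * 4 for i in range(4)}
cache = ChunkCache(backing.__getitem__, capacity=2)

for key in ["chunk_0", "chunk_1", "chunk_0", "chunk_0", "chunk_2", "chunk_1"]:
    cache.get(key)

print(cache.store_reads)  # 4: six lookups, two served from the cache
```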

HSDS Timeline and Next Steps

- Work just started July 1, 2016
- This work is being supported by NASA Cooperative Agreement NNX16AL91A
- Working with the NASA OpenNEX team
- Also includes client components:
  - HDF REST VOL
  - h5pyd
  - Command-line tools
- The project is scoped for the next two years, but we hope to have a prototype available sooner
- We would love feedback on the design, use cases, or additional features you'd like to see

To Find Out More

- H5serv: https://github.com/HDFGroup/h5serv
- Documentation: http://h5serv.readthedocs.io/
- H5pyd: https://github.com/HDFGroup/h5pyd
- RESTful HDF5 white paper: https://www.hdfgroup.org/pubs/papers/RESTful_HDF5.pdf
- OpenNEX: https://nex.nasa.gov/nex/static/htdocs/site/extra/opennex/
- Blog articles:
  - https://hdfgroup.org/wp/2015/04/hdf5-for-the-web-hdf-server/
  - https://hdfgroup.org/wp/2015/12/serve-protect-web-security-hdf5/