FlowRadar: A Better NetFlow For Data Centers Yuliang Li Rui

31 Slides4.88 MB

FlowRadar: A Better NetFlow For Data Centers Yuliang Li Rui Miao Changhoon Kim Minlan Yu 1

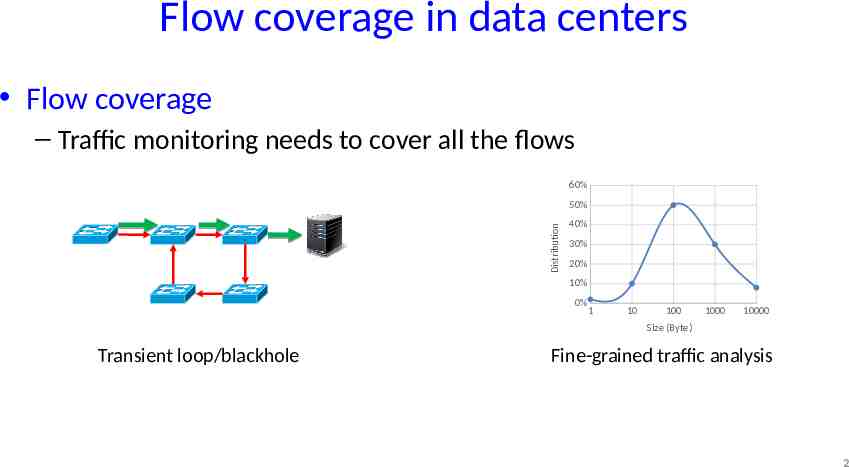

Flow coverage in data centers Flow coverage – Traffic monitoring needs to cover all the flows 60% Distribution 50% 40% 30% 20% 10% 0% 1 10 100 1000 10000 Size (Byte) Transient loop/blackhole Fine-grained traffic analysis 2

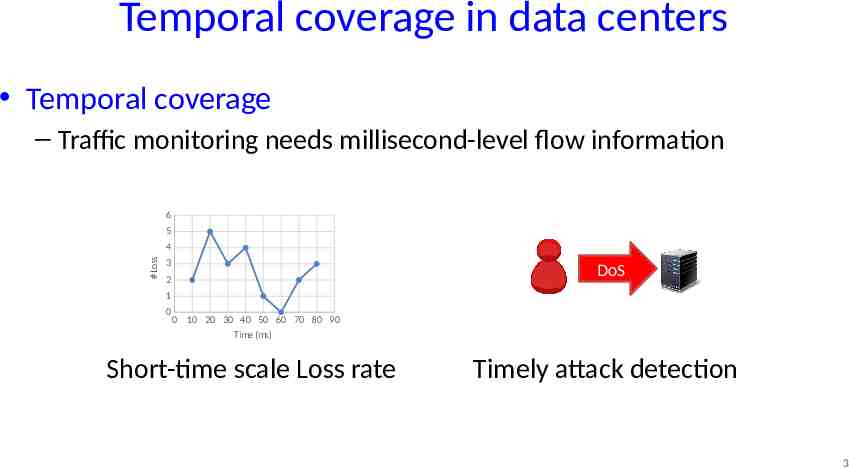

Temporal coverage in data centers Temporal coverage – Traffic monitoring needs millisecond-level flow information 6 5 # Loss 4 3 DoS 2 1 0 0 10 20 30 40 50 60 70 80 90 Time (ms) Short-time scale Loss rate Timely attack detection 3

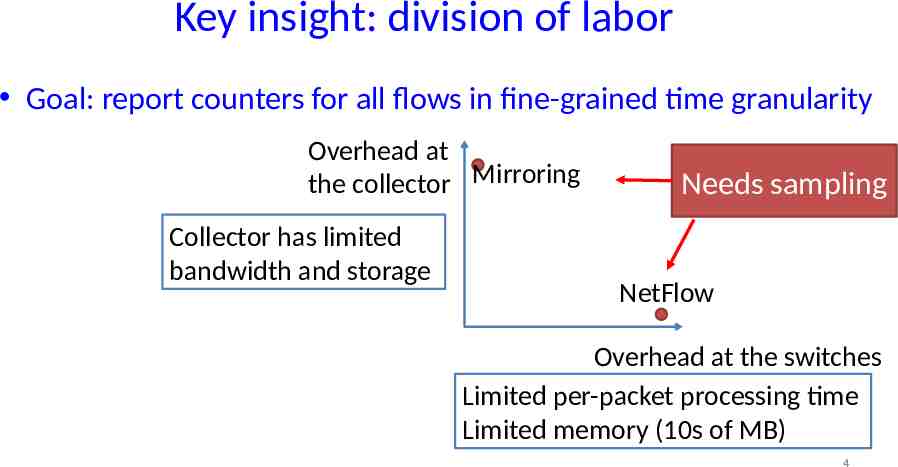

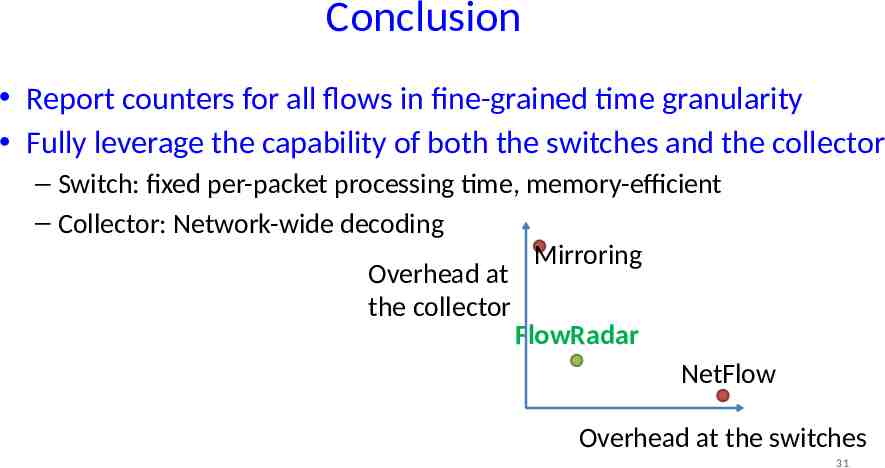

Key insight: division of labor Goal: report counters for all flows in fine-grained time granularity Overhead at the collector Mirroring Collector has limited bandwidth and storage Needs sampling NetFlow Overhead at the switches Limited per-packet processing time Limited memory (10s of MB) 4

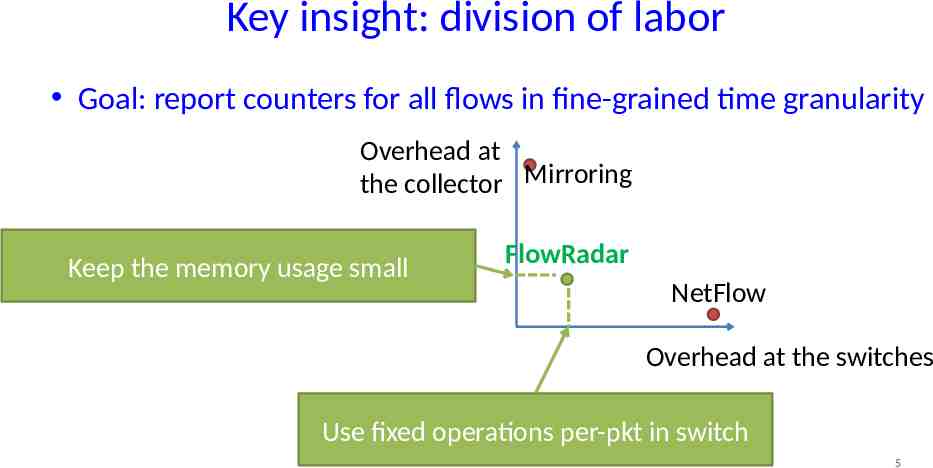

Key insight: division of labor Goal: report counters for all flows in fine-grained time granularity Overhead at the collector Mirroring Keep the memory usage small FlowRadar NetFlow Overhead at the switches Use fixed operations per-pkt in switch 5

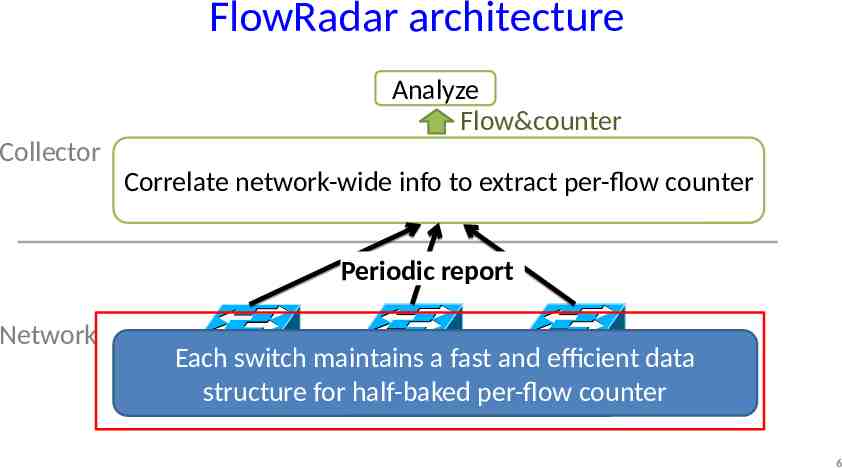

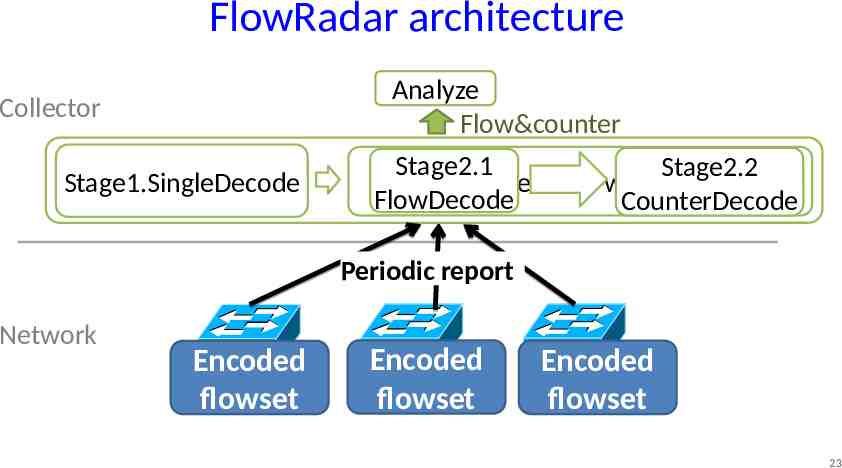

FlowRadar architecture Analyze Flow&counter Collector Correlate network-wide info to extract per-flow counter Periodic report Network Each switch maintains a fast and efficient data structure for half-baked per-flow counter 6

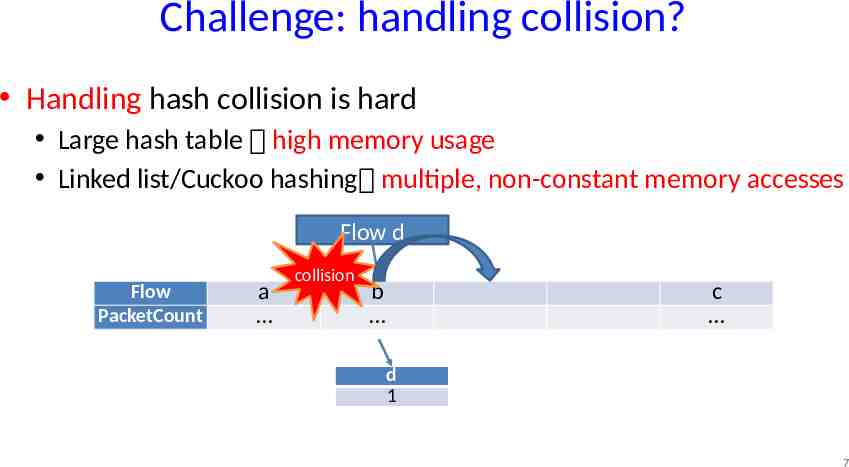

Challenge: handling collision? Handling hash collision is hard Large hash table high memory usage Linked list/Cuckoo hashing multiple, non-constant memory accesses Flow d Flow PacketCount a collision b c d 1 7

Switch embraces collisions! Handling hash collision is hard Large hash table high memory usage Linked list/Cuckoo hashing multiple, non-constant memory accesses Embrace the collision Less memory and constant #accesses 8

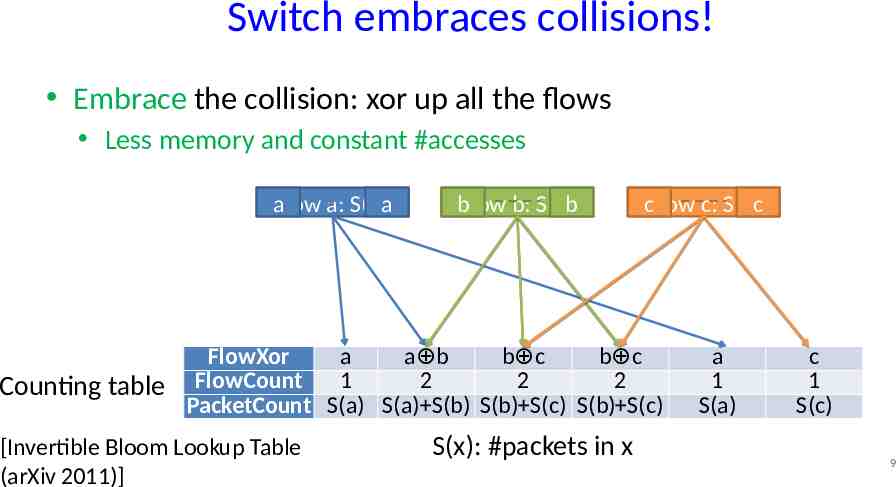

Switch embraces collisions! Embrace the collision: xor up all the flows Less memory and constant #accesses aflow a: S(a)a bFlow b: S(b)b cFlow c: S(c)c FlowXor a a b b c b c 2 2 2 Counting table FlowCount 1 PacketCount S(a) S(a) S(b) S(b) S(c) S(b) S(c) [Invertible Bloom Lookup Table (arXiv 2011)] S(x): #packets in x a 1 S(a) c 1 S(c) 9

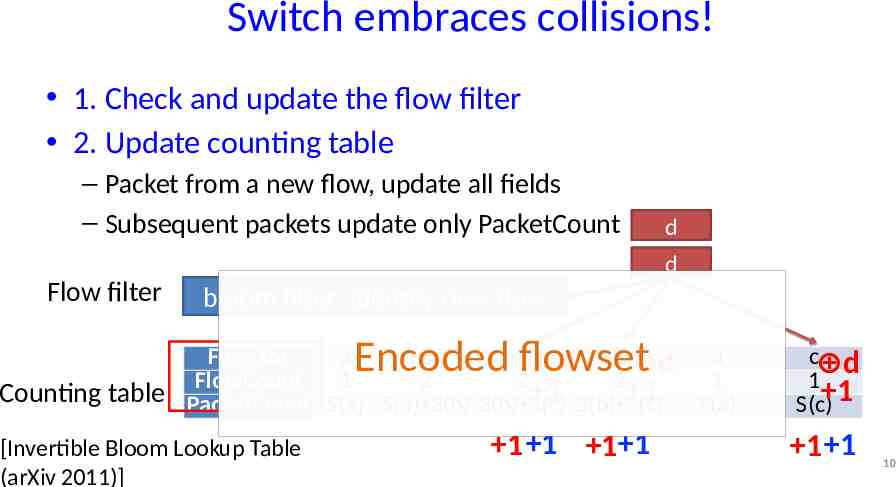

Switch embraces collisions! 1. Check and update the flow filter 2. Update counting table – Packet from a new flow, update all fields – Subsequent packets update only PacketCount d d Flow filter bloom filter: identify new flow Encoded flowset c d 1 S(c) 1 1 1 1 1 1 1 FlowXor a a b b c d b d c a FlowCount 1 2 2 2 1 1 1 Counting table PacketCount S(a) S(a) S(b) S(b) S(c) S(b) S(c) S(a) [Invertible Bloom Lookup Table (arXiv 2011)] 10

Easy to implement in merchant silicon Switch data plane – Fixed operations in hardware – Small memory, 2.36MB for 100K flows Switch control plane – Control plane gets the small flowset every 10ms We implemented it using P4 Language. 11

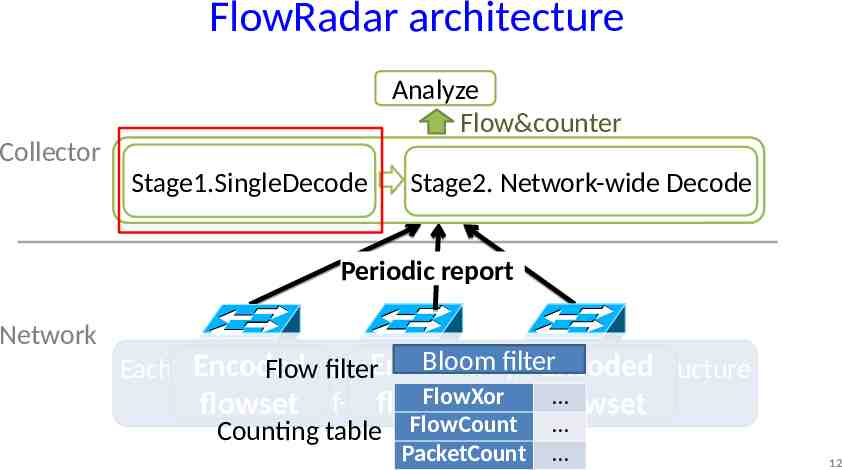

FlowRadar architecture Analyze Flow&counter Collector Correlate network wide info to extract per-flow counter Stage1.SingleDecode Stage2. Network-wide Decode Periodic report Network Bloom filter Encoded Encoded Flow filterEncoded Each switch maintains a fast and efficient data structure FlowXor counter for half-baked per-flow flowset flowset flowset Counting table FlowCount PacketCount 12

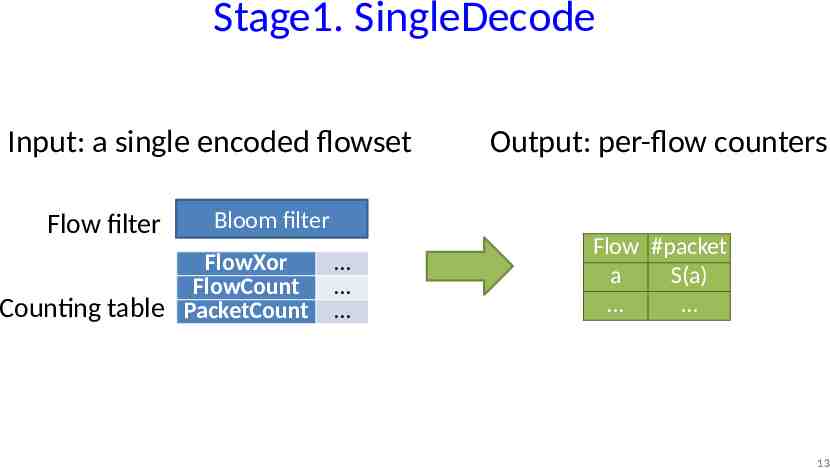

Stage1. SingleDecode Input: a single encoded flowset Flow filter Output: per-flow counters Bloom filter FlowXor FlowCount Counting table PacketCount Flow #packet a S(a) 13

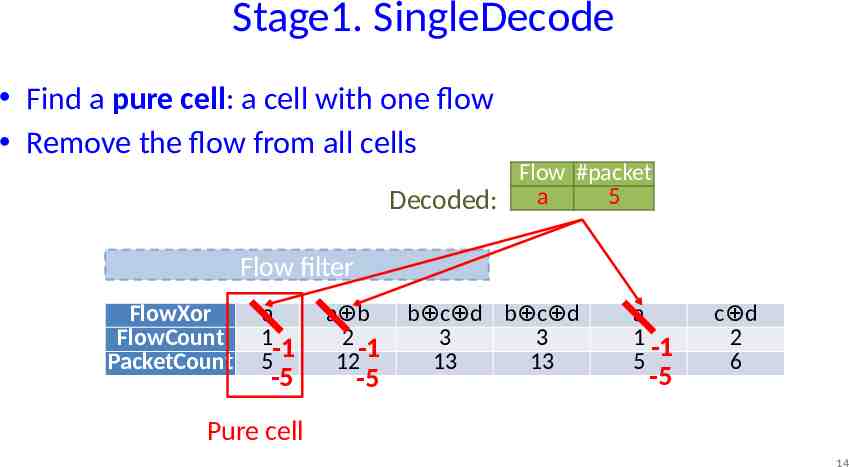

Stage1. SingleDecode Find a pure cell: a cell with one flow Remove the flow from all cells Flow #packet 5 Decoded: a Flow filter FlowXor FlowCount PacketCount a 1-1 5 -5 a b 2 -1 12 -5 b c d b c d 3 3 13 13 a 1 -1 5 -5 c d 2 6 Pure cell 14

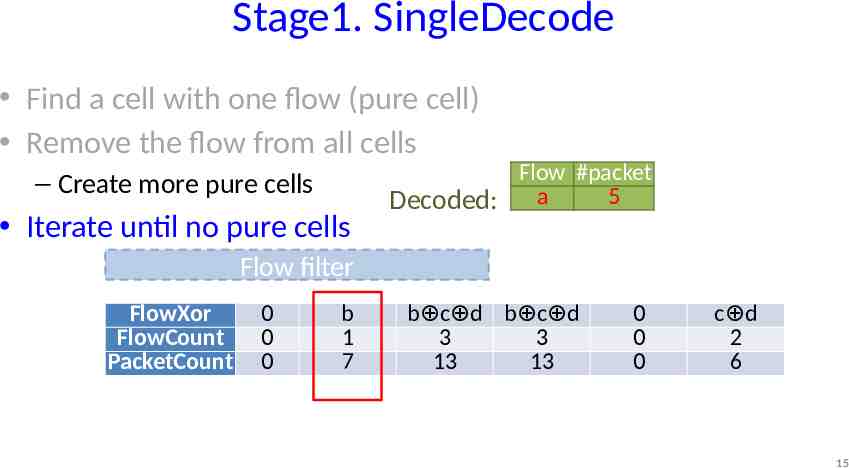

Stage1. SingleDecode Find a cell with one flow (pure cell) Remove the flow from all cells – Create more pure cells Iterate until no pure cells Flow #packet 5 Decoded: a Flow filter FlowXor FlowCount PacketCount 0 0 0 b 1 7 b c d b c d 3 3 13 13 0 0 0 c d 2 6 15

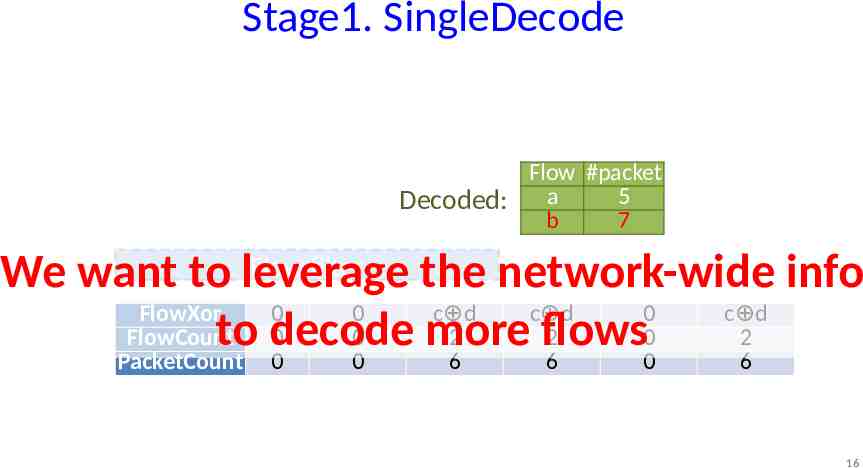

Stage1. SingleDecode Flow #packet 5 Decoded: a b 7 Flow filter We want to leverage the network-wide info FlowXor 0 0 c d c d 0 c d to decode more flows FlowCount 0 0 2 2 0 2 PacketCount 0 0 6 6 0 6 16

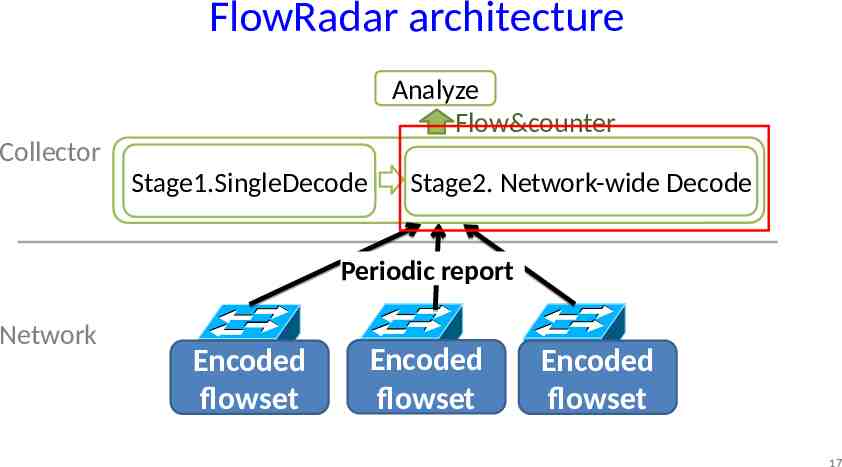

FlowRadar architecture Analyze Flow&counter Collector Stage1.SingleDecode Stage2. Network-wide Decode Periodic report Network Encoded flowset Encoded flowset Encoded flowset 17

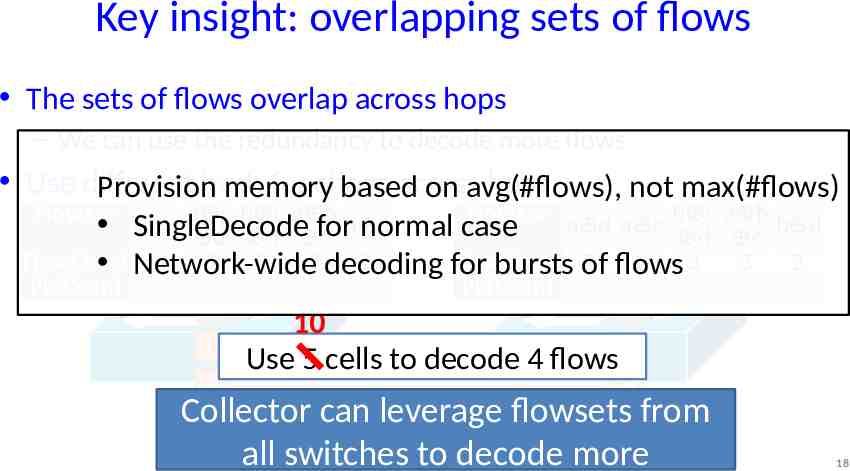

Key insight: overlapping sets of flows The sets of flows overlap across hops – We can use the redundancy to decode more flows Use different functions across hops Provisionhash memory based on avg(#flows), not max(#flows) FlowXor a a c b c a b b d FlowXor b c a b b d a d a c SingleDecode for normal case d d c d c FlowCount FlowCount 1 3a 3 3 decoding 2 2 flows Network-wide for bursts of a2 3 3 2 PktCount PktCount b b 10 c Use 5 cells to decode 4 flows c d d Collector can leverage flowsets from all switches to decode more 18

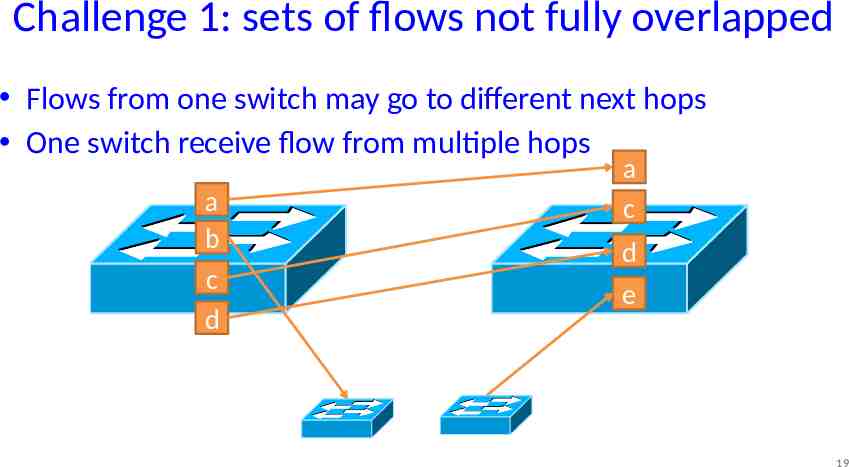

Challenge 1: sets of flows not fully overlapped Flows from one switch may go to different next hops One switch receive flow from multiple hops a b c d a c d e 19

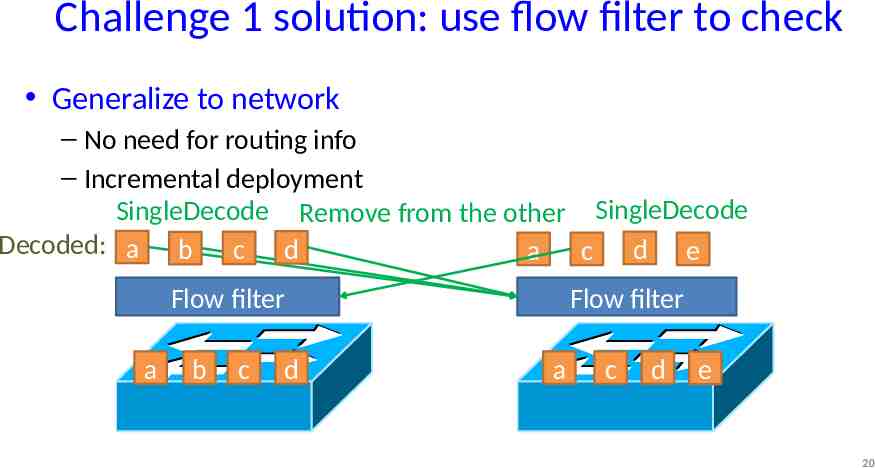

Challenge 1 solution: use flow filter to check Generalize to network – No need for routing info – Incremental deployment SingleDecode Remove from the other SingleDecode Decoded: a b c d a c d e Flow filter a b c d Flow filter a c d e 20

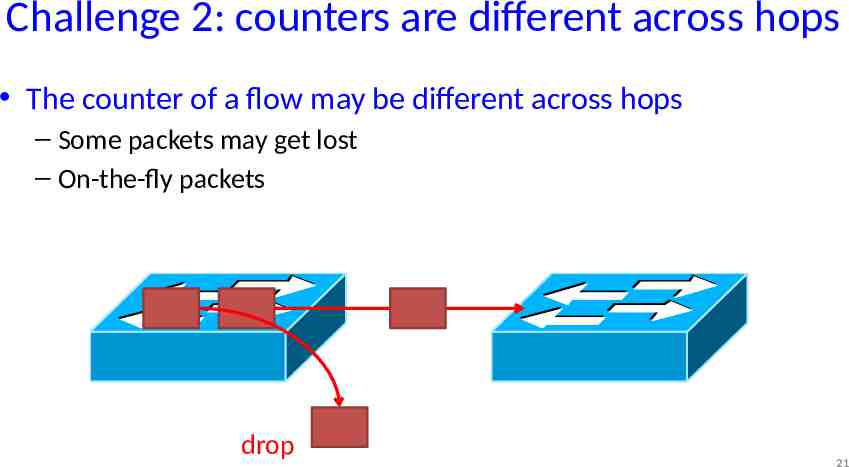

Challenge 2: counters are different across hops The counter of a flow may be different across hops – Some packets may get lost – On-the-fly packets drop 21

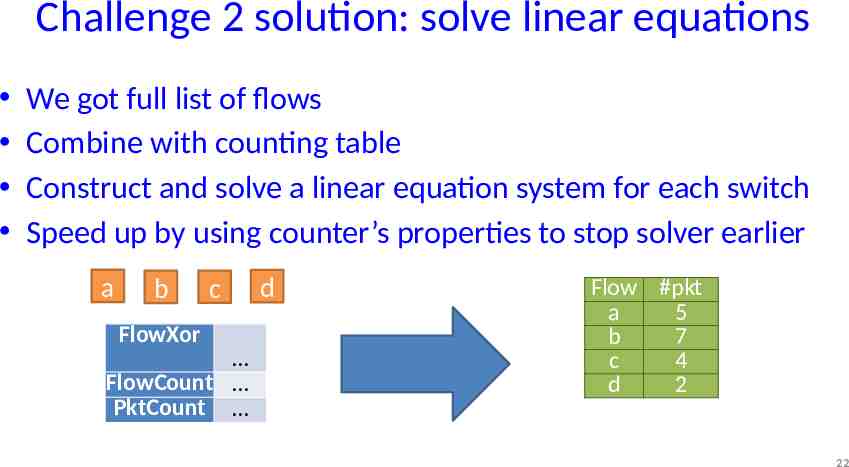

Challenge 2 solution: solve linear equations We got full list of flows Combine with counting table Construct and solve a linear equation system for each switch Speed up by using counter’s properties to stop solver earlier a b FlowXor c FlowCount PktCount d Flow #pkt a 5 b 7 c 4 d 2 22

FlowRadar architecture Analyze Flow&counter Collector Stage1.SingleDecode Stage2.1 Stage2.2 Stage2. Network-wide Decode FlowDecode CounterDecode Periodic report Network Encoded flowset Encoded flowset Encoded flowset 23

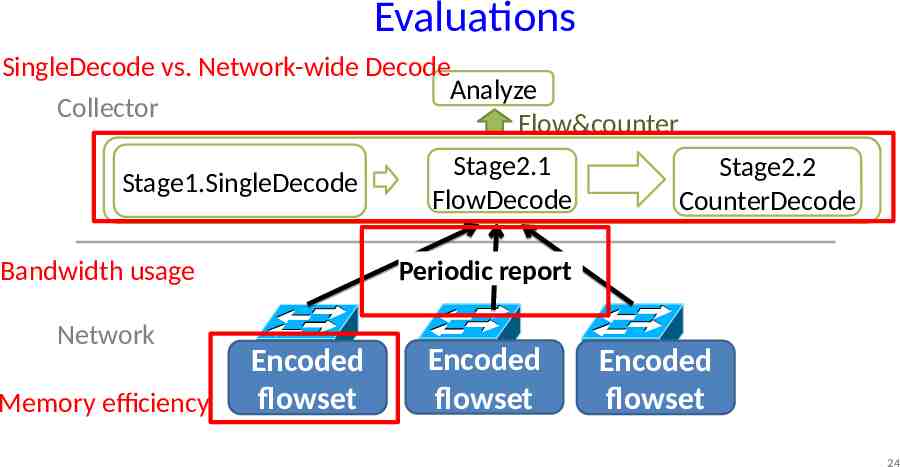

Evaluations SingleDecode vs. Network-wide Decode Analyze Collector Flow&counter Stage1.SingleDecode Memory efficiency Stage2.2 CounterDecode Periodic report Bandwidth usage Network Stage2.1 FlowDecode Encoded flowset Encoded flowset Encoded flowset 24

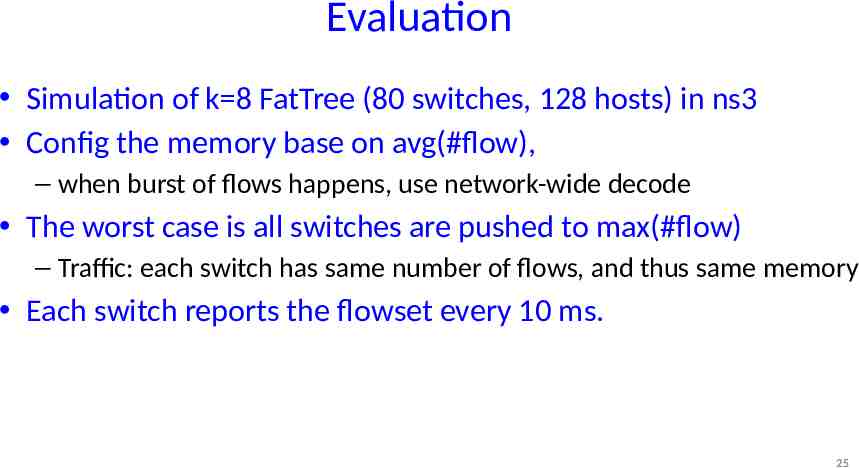

Evaluation Simulation of k 8 FatTree (80 switches, 128 hosts) in ns3 Config the memory base on avg(#flow), – when burst of flows happens, use network-wide decode The worst case is all switches are pushed to max(#flow) – Traffic: each switch has same number of flows, and thus same memory Each switch reports the flowset every 10 ms. 25

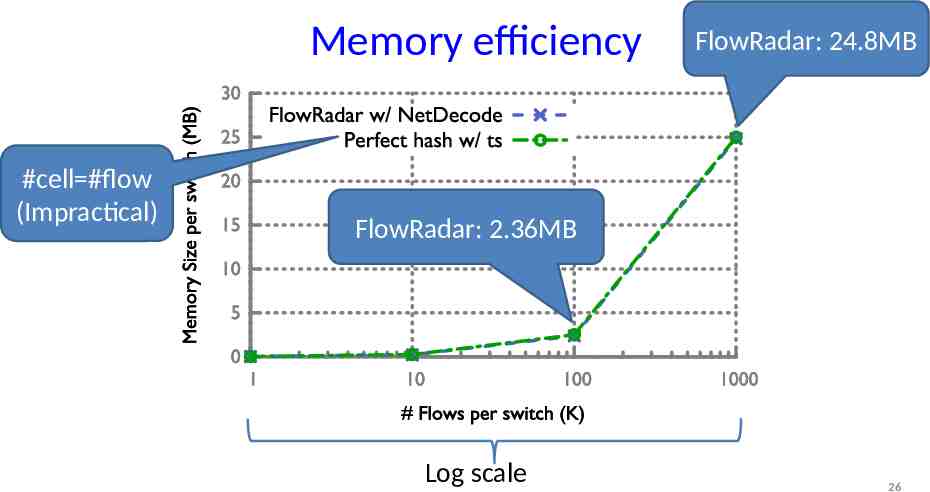

Memory efficiency #cell #flow (Impractical) FlowRadar: 24.8MB FlowRadar: 2.36MB Log scale 26

Other results Bandwidth usage – Only 0.52% based on topology and traffic of Facebook data centers (sigcomm’15) NetDecode improvement over SingleDecode – SingleDecode 100K flow, which takes 10ms – NetDecode 26.8% more flows with the same memory, which takes around 3 sec 27

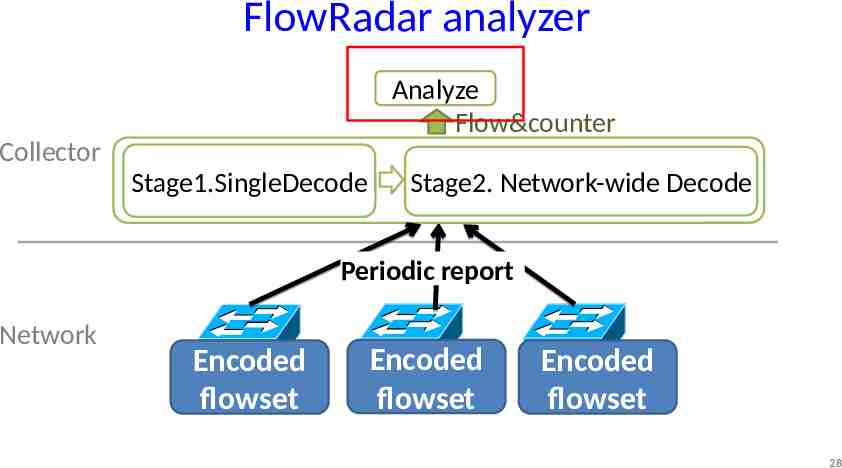

FlowRadar analyzer Analyze Flow&counter Collector Stage1.SingleDecode Stage2. Network-wide Decode Periodic report Network Encoded flowset Encoded flowset Encoded flowset 28

Analysis applications Flow coverage – Transient loop/blackhole – Error in match-action table – Fine-grained traffic analysis Temporal coverage – Short time-scale per-flow loss rate – ECMP load imbalance – Timely attack detection 29

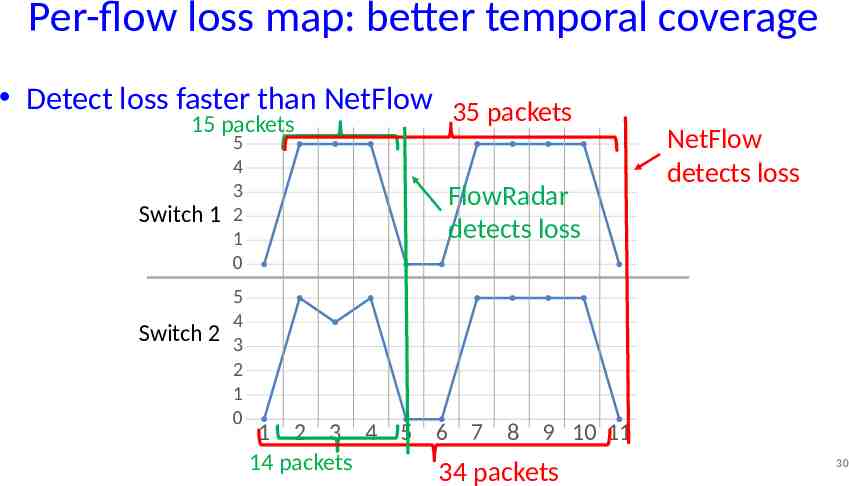

Per-flow loss map: better temporal coverage Detect loss faster than NetFlow 35 packets 15 packets 5 4 3 Switch 1 2 1 0 5 4 Switch 2 3 2 1 0 FlowRadar detects loss 1 2 3 4 5 6 7 8 9 10 11 14 packets 34 packets NetFlow detects loss 30

Conclusion Report counters for all flows in fine-grained time granularity Fully leverage the capability of both the switches and the collector – Switch: fixed per-packet processing time, memory-efficient – Collector: Network-wide decoding Mirroring Overhead at the collector FlowRadar NetFlow Overhead at the switches 31