

Critical Thinking Unmasked: How to Infuse It into a Discipline-Based Course
Linda B. Nilson, Ph.D.
Director Emerita, Office of Teaching Effectiveness and Innovation, Clemson University
864.261.9200 * [email protected] * www.linkedin.com/in/lindabnilson

Outcomes for You
– To explain what critical thinking (CT) is
– To identify what course content it can and cannot be applied to
– To write assessable CT student learning outcomes compatible with your discipline and the CT VALUE Rubric
– To select and adapt methods and strategies for teaching CT at beginning and advanced levels
– To compose true/false, matching, multiple choice, and multiple true/false items that assess most of your students’ CT skills
– To design constructed response prompts that give sufficient guidance, assess all CT skills authentically, and enhance students’ self-awareness of their thinking processes
– To develop high-quality, CT-focused assessment rubrics
– To obtain artifacts relevant to the CT VALUE Rubric

Where CT Doesn’t Apply
– Lower-level thinking/learning: knowledge, remembering, recognizing, reproducing, simple (non-interpretive) comprehension/understanding
– “Cookbook” or “plug-&-chug” procedures and solutions

Where CT Does Apply
When a “claim” may or may not be valid, complete, or the best possible.
“Claim”: a belief, value, assumption, theory, interpretation, problem definition, analysis, generalization, viewpoint, contention, opinion, hypothesis, solution, inference, decision, prediction, or conclusion – not a fact or agreed-upon definition.

Why a “Claim” May Be Questionable
– Evidence is uncertain, ambiguous, or contradictory.
– Multiple respectable claims exist (issues of disagreement, debate, controversy).
– Source is suspect.
– Evaluation process is unclear.
– Other reasons?

What content in your courses relies on “claims” that may or may not be valid, complete, or the best possible? (Look for areas of uncertainty or future predictions.)

Many Different CT Frameworks
– Brookfield (focus on assumptions)
– Higher-level cognitive operations in Bloom’s Taxonomy
– Perry’s Stages of UG Cognitive Development
– Halpern (cognitive psychology)
– Wolcott (& Lynch), Steps to More Complex/Critical Thinking
– Paul & Elder, Foundation for Critical Thinking
– Facione and Delphi Report

Points of Overlap
– CT = interpretation/analysis + evaluation
– CT is difficult and unnatural; it takes time to learn.
– CT is not only cognition but also “character” (motivation, ability).

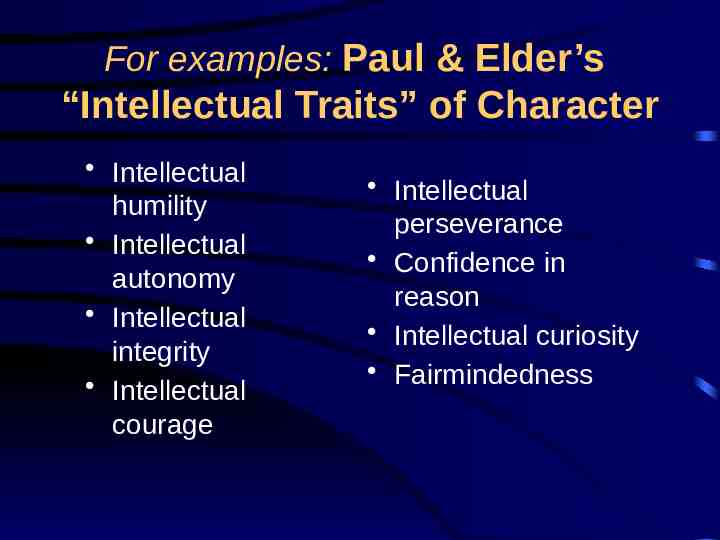

For example: Paul & Elder’s “Intellectual Traits” of Character
– Intellectual humility
– Intellectual autonomy
– Intellectual integrity
– Intellectual courage
– Intellectual perseverance
– Confidence in reason
– Intellectual curiosity
– Fairmindedness

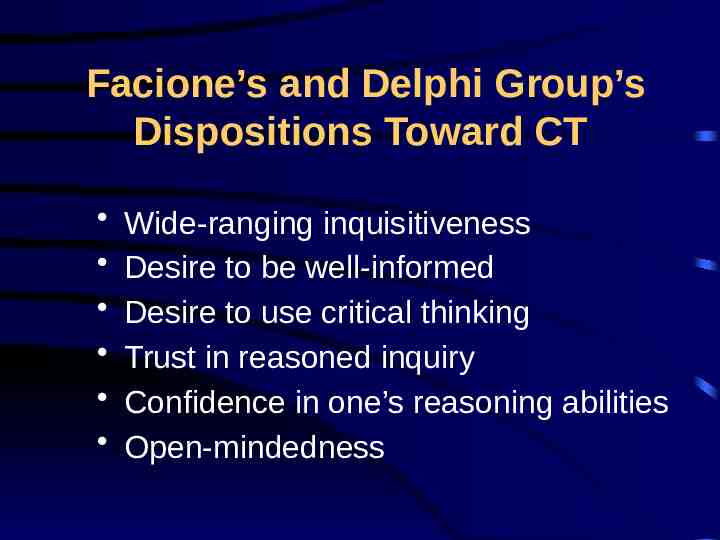

Facione’s and Delphi Group’s Dispositions Toward CT
– Wide-ranging inquisitiveness
– Desire to be well-informed
– Desire to use critical thinking
– Trust in reasoned inquiry
– Confidence in one’s reasoning abilities
– Open-mindedness

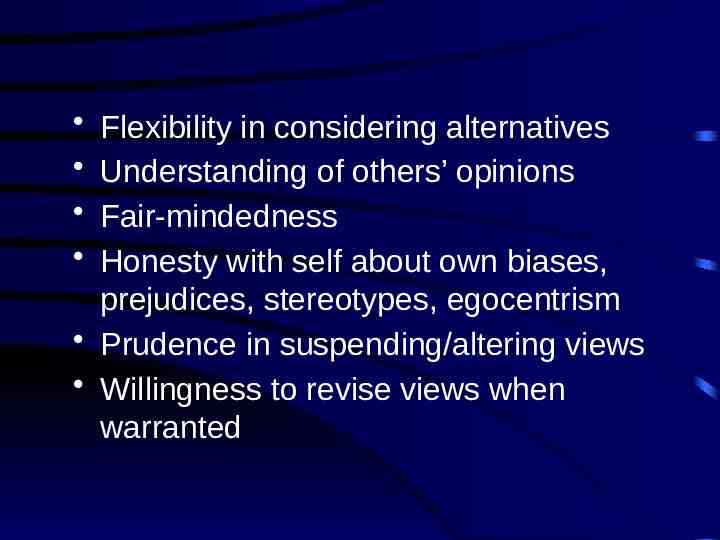

– Flexibility in considering alternatives
– Understanding of others’ opinions
– Fair-mindedness
– Honesty with self about own biases, prejudices, stereotypes, egocentrism
– Prudence in suspending/altering views
– Willingness to revise views when warranted

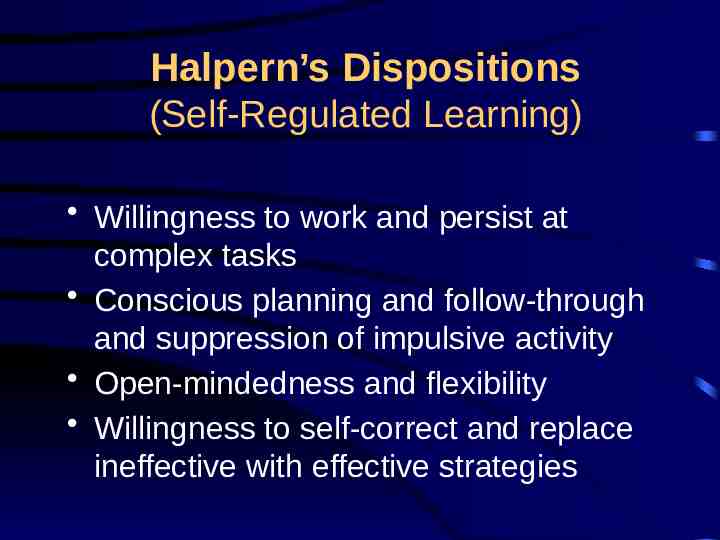

Halpern’s Dispositions (Self-Regulated Learning)
– Willingness to work and persist at complex tasks
– Conscious planning and follow-through and suppression of impulsive activity
– Open-mindedness and flexibility
– Willingness to self-correct and replace ineffective with effective strategies

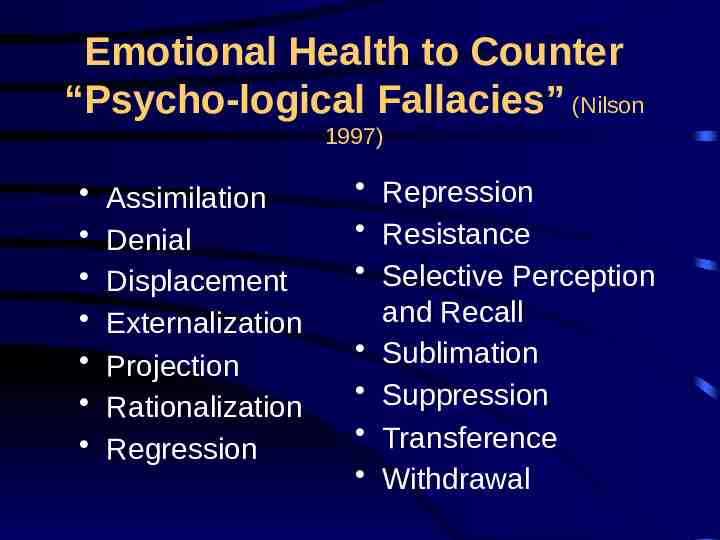

Emotional Health to Counter “Psycho-logical Fallacies” (Nilson 1997)
– Assimilation
– Denial
– Displacement
– Externalization
– Projection
– Rationalization
– Regression
– Repression
– Resistance
– Selective Perception and Recall
– Sublimation
– Suppression
– Transference
– Withdrawal

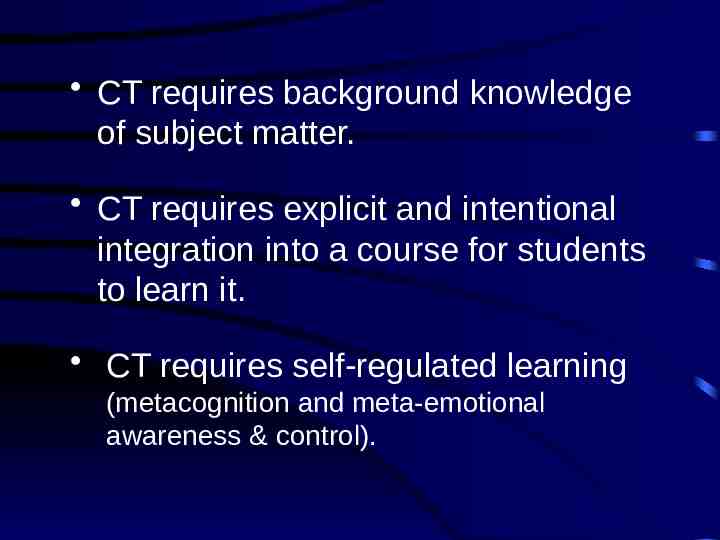

CT requires background knowledge of subject matter. CT requires explicit and intentional integration into a course for students to learn it. CT requires self-regulated learning (metacognition and meta-emotional awareness & control).

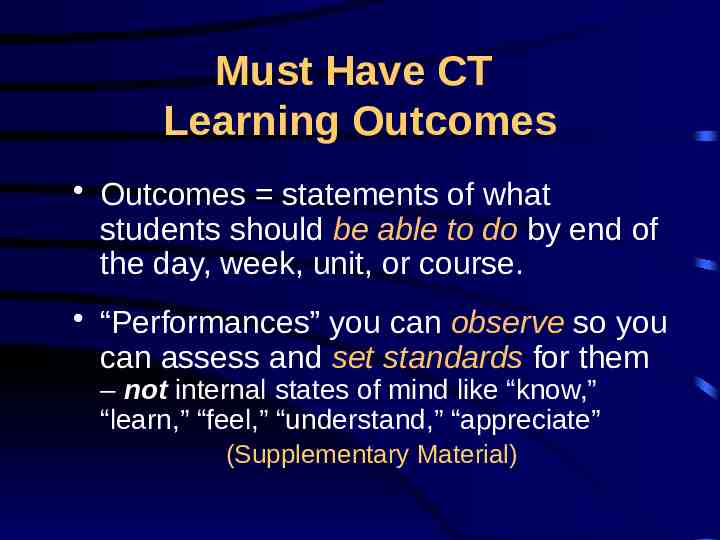

Must Have CT Learning Outcomes
Outcomes: statements of what students should be able to do by the end of the day, week, unit, or course.
“Performances” you can observe so you can assess and set standards for them – not internal states of mind like “know,” “learn,” “feel,” “understand,” “appreciate” (Supplementary Material)

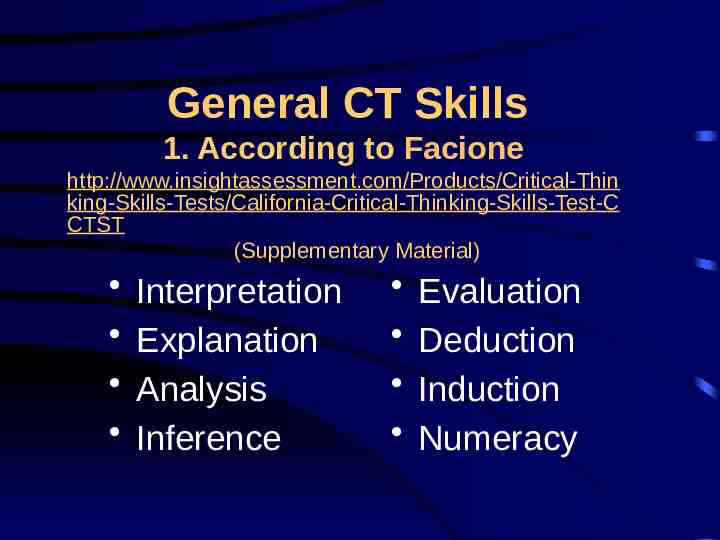

General CT Skills
1. According to Facione (http://www.insightassessment.com/Products/Critical-Thinking-Skills-Tests/California-Critical-Thinking-Skills-Test-CCTST) (Supplementary Material)
– Interpretation
– Explanation
– Analysis
– Inference
– Evaluation
– Deduction
– Induction
– Numeracy

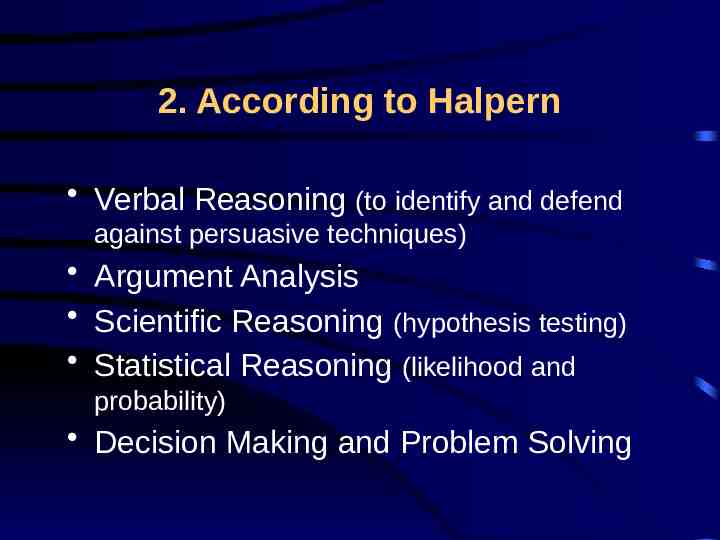

2. According to Halpern
– Verbal Reasoning (to identify and defend against persuasive techniques)
– Argument Analysis
– Scientific Reasoning (hypothesis testing)
– Statistical Reasoning (likelihood and probability)
– Decision Making and Problem Solving

Discipline-Relevant CT Skills/Outcomes (Supplementary Material)
– Check those relevant to your courses.
– Make them more specific to your courses.
– Write more as necessary.
– Fit them into CT VALUE Rubric criteria.
– Sequence them: In what order will students achieve them?

Basic Teaching Principles
Address misconceptions about CT and subject matter early. Ask your students what they think CT is.
– Negative?
– Purely critical?
– Anti-the-way-things-are?

Teach some CT theory and vocab.
– Operational terms/thinking verbs (Supplementary Material)
– Logical fallacies: practice identifying and avoiding. List at http://utminers.utep.edu/omwilliamson/ENGL1311/fallacies.htm

Ask CT questions and assign CT tasks that match your outcomes and content, as low/no-stakes practice with feedback from you or peers. (Supplementary Material)

Methods for practice with feedback:
– Class discussions (e.g., cases, arguments)
– Debates and structured controversy
– Inquiry-guided activities (e.g., make sense of data)
– Journaling and other writing-to-learn exercises
– Worksheets
– Simulations and role plays with debriefing discussions or papers
– Drafts of papers, presentations, and projects
– Brookfield’s in-class CT exercises http://www.stephenbrookfield.com/Dr. Stephen D. Brookfield/Workshop Materials files/Developing Critical Thinkers.pdf pp. 17-44

To advance students’ CT skills:
1) Give them increasingly complex material to interpret/analyze/evaluate over time.

OR

2) Move them through a stages model:
– Perry at http://www.cse.buffalo.edu/~rapaport/perry.positions.html or http://perrynetwork.org/?page_id=2
– Wolcott at http://www.wolcottlynch.com/
– Paul & Elder at http://www.criticalthinking.org/pages/critical-thinking-development-a-stage-theory/483

Have students observe and articulate their reasoning.
– After every CT question/task, ask “How did you arrive at your response?”
– Assign reflective writing to identify beliefs and misconceptions that may interfere with clear reasoning, such as “What part of the learning experience challenged what you thought about the subject? Did you find yourself resisting it? If so, how did you overcome your resistance?”

Mistakes to Avoid
– Low-level questions/tasks
– Claims without ambiguous evidence, uncertainty, or controversy
– Insufficient wait time for responses
– No feedback
– No reflection or self-regulation

Assessments Should Mirror Outcomes.
Assessment ↔ Outcome

That is, if you want your students to be able to do X, Y, and Z, have them do X, Y, and Z to assess whether they can.

Assessment Guidelines
– Each outcome deserves assessment: formative (informal/ungraded/low-stakes) or summative (formal/graded).
– Assess authentically: real-life skills, knowledge, situations.
– Align cognitive levels of assessments with those of outcomes and teaching.

Before you assess summatively, assess formatively to:
– Give students practice with feedback from you, peers, or a computer program.
– Get frequent feedback for yourself on their progress.

Don’t move on until almost all students have made acceptable progress. Set performance standards of “acceptable/unacceptable” for formative assessments and points/grades for summative assessments.

Assessment Instruments
– Objective items: fill-in-the-blank (completion), T/F, matching, multiple choice, multiple T/F
– (Student-)constructed responses: writing assignments, essay test questions, oral or multimedia presentations, programs, projects, research reports, designs, artistic works or performances, portfolios, etc.

Most Types of Objective Items Can Require and Assess
– Interpretation
– Generalization
– Inference
– Problem solving
– Conclusion drawing
– Comprehension
– Application
– Analysis
– Synthesis
– Evaluation

Fill-in-the-Blank/Completion
Focus on memorization (which you may want) – not CT.

True/False
Can assess CT IF “stimulus-based”; see multiple choice and multiple true/false below.

Matching Items
Homogeneous items within a set: every option plausible for every item in the list
– “Match each theory with its originator.”
– Cause with effect
– Definition with term
– Achievement/work with person/author
– Pictures of objects with names
– Symbol with concept
– Organ/equipment/tool/apparatus with use or function
– Foreign word with translation
– Labeled parts in a picture with function

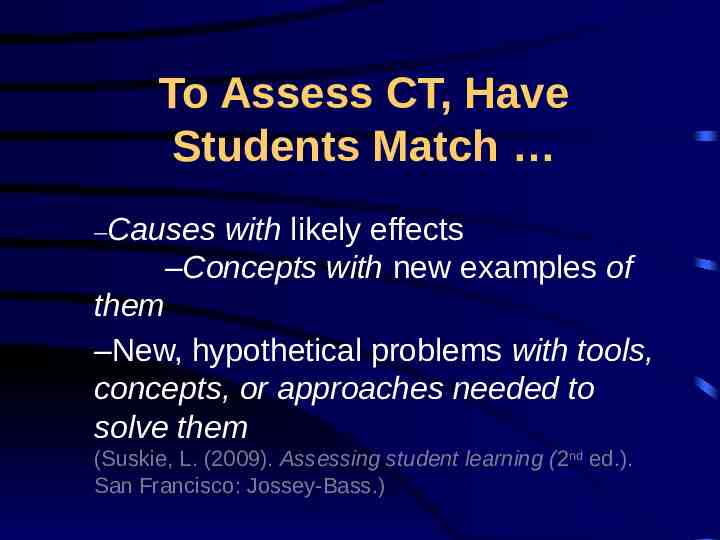

To Assess CT, Have Students Match
– Causes with likely effects
– Concepts with new examples of them
– New, hypothetical problems with tools, concepts, or approaches needed to solve them
(Suskie, L. (2009). Assessing student learning (2nd ed.). San Francisco: Jossey-Bass.)

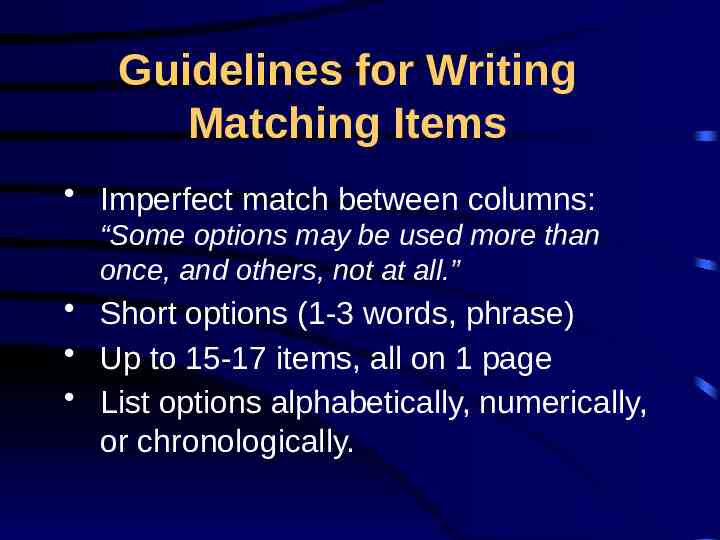

Guidelines for Writing Matching Items
– Imperfect match between columns: “Some options may be used more than once, and others, not at all.”
– Short options (1-3 words, phrase)
– Up to 15-17 items, all on 1 page
– List options alphabetically, numerically, or chronologically.

What two sets of items could you have your students match to assess their CT skills?

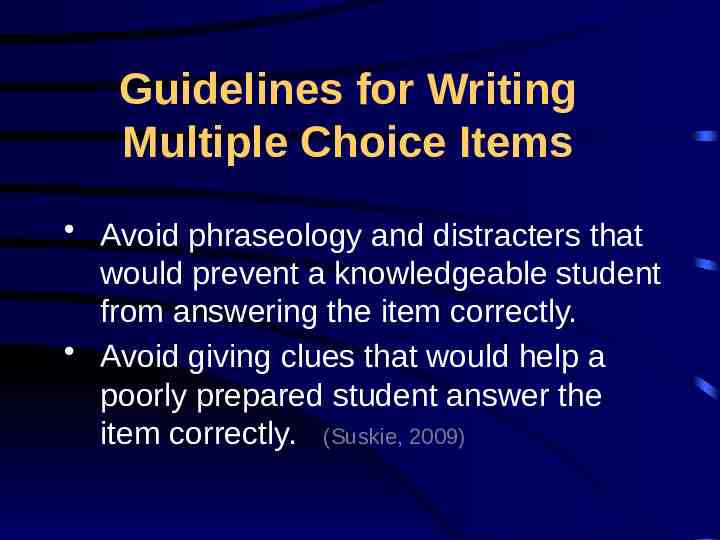

Guidelines for Writing Multiple Choice Items
– Avoid phraseology and distracters that would prevent a knowledgeable student from answering the item correctly.
– Avoid giving clues that would help a poorly prepared student answer the item correctly.
(Suskie, 2009)

More specifically:
– List options alphabetically, numerically, or chronologically.
– Make all distracters plausible, grammatically parallel, and just as long as the correct response.
– Create distracters from elements of the correct response.

Use sparingly: no, not, never, none, except
Use generously – not just when correct:
– all of the above
– none of the above

Multiple True/False
– Each option below the stem is a T/F item.
– Superior flexibility, efficiency, reliability
– Easier and quicker to develop
– More challenge, no process-of-elimination
– Stem must be clear.

To Assess CT, Compose: a series of multiple choice or multiple T/F items (or both) around a new*, realistic stimulus that students must interpret/analyze correctly to answer the items accurately. * New to the students

Possible Stimuli
– Text: claim, statement, passage, minicase, quote, report, text-based data set, description of an experiment
– Graphic: chart, graph, table, map, picture, model, diagram, drawing, schematic, spreadsheet

Guidelines for Writing Stimulus-Based Items
– New stimulus, but students must have prior practice in the CT skills assessed
– Few interlocking items
– Be creative with stimulus! (Supplementary Material)

What stimuli could you use for a series of multiple choice or multiple T/F items to assess your students’ CT skills? Write a series of multiple T/F items for it.

Strengths and Limitations of Stimulus-Based Items
– Assess more CT skills more efficiently than constructed responses
– Cannot assess abilities to communicate, create, organize, define problems, or conduct research. Only constructed responses can.

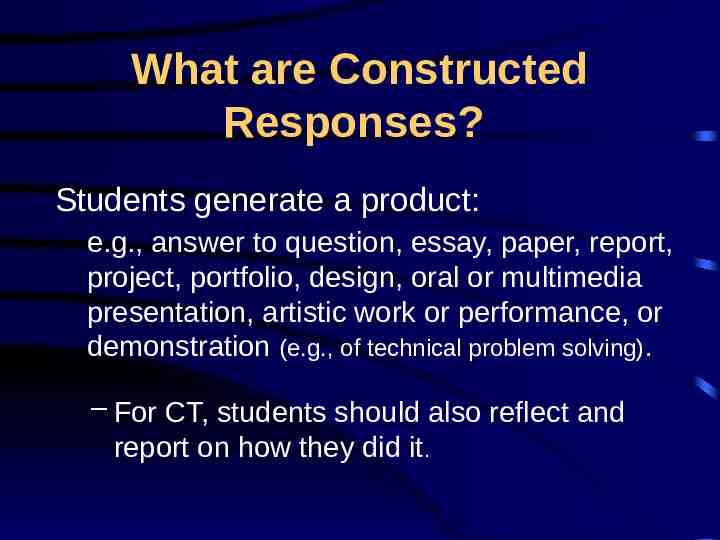

What are Constructed Responses? Students generate a product: e.g., answer to question, essay, paper, report, project, portfolio, design, oral or multimedia presentation, artistic work or performance, or demonstration (e.g., of technical problem solving). – For CT, students should also reflect and report on how they did it.

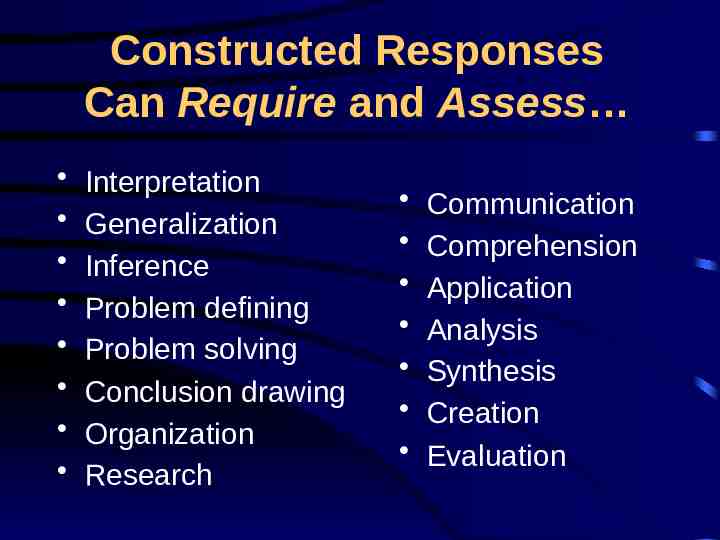

Constructed Responses Can Require and Assess
– Interpretation
– Generalization
– Inference
– Problem defining
– Problem solving
– Conclusion drawing
– Organization
– Research
– Communication
– Comprehension
– Application
– Analysis
– Synthesis
– Creation
– Evaluation

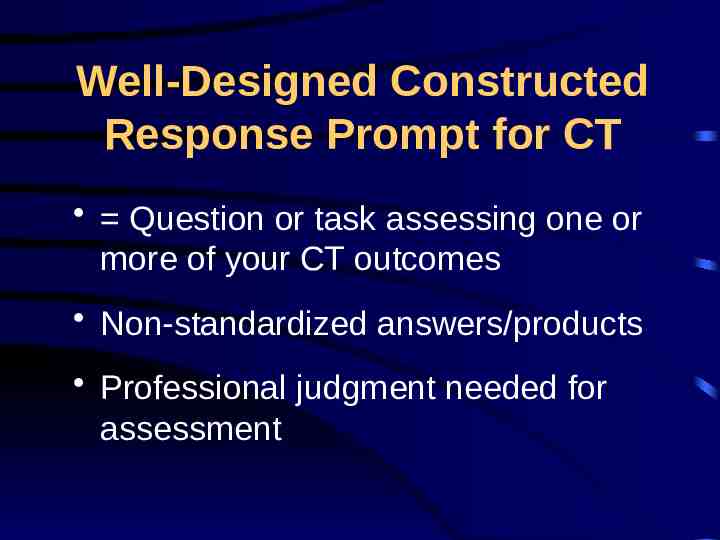

Well-Designed Constructed Response Prompt for CT
– Question or task assessing one or more of your CT outcomes
– Non-standardized answers/products
– Professional judgment needed for assessment

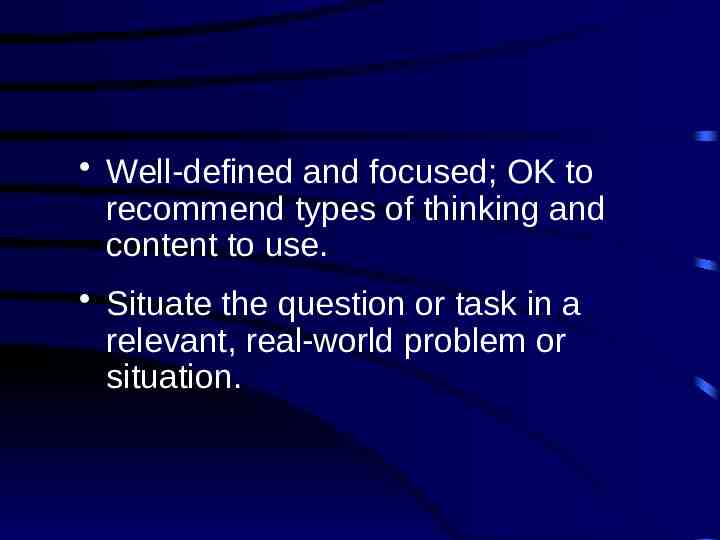

Well-defined and focused; OK to recommend types of thinking and content to use. Situate the question or task in a relevant, real-world problem or situation.

Examples of Poor and Improved Constructed Response Prompts
VAGUE: To what factors have historians attributed the decline of the Roman Empire?
IMPROVED: Some people argue that the United States is following the same path of decline as the Roman Empire. Write a critical examination of this claim, analyzing how the United States is and is not declining due to similar factors.

VAGUE, LOW-LEVEL: What should a nurse do when a patient has a bad reaction to an immunotherapy injection?
IMPROVED; PROBLEM-FOCUSED: After the first injection of an immunotherapy program, you notice a large, red wheal on your patient’s arm. Then the patient begins coughing and expiratory wheezing. What series of interventions should you implement? Justify your interventions and their sequence.

LOW-LEVEL: What is the relationship between education and income? To what extent has it changed recently?
IMPROVED; PARADOX-FOCUSED: The education of the working and middle classes has been increasing for decades while their income has been flat or decreasing for the past decade. How can you reconcile this trend with the well-established positive relationship between education and income? (Consider other factors that may affect income.)

VAGUE, LOW-LEVEL: What will happen to the hydrosphere, the geosphere, and the biosphere if a large amount of sulfur dioxide is released into the atmosphere?
IMPROVED; PROBLEM-FOCUSED: Some geoscientists maintain that the mega-magma chamber below Yellowstone National Park is leaking increasing amounts of sulfur dioxide into the atmosphere and will cause a mass extinction within 70,000 years. They rest this claim on the mass extinction that happened 250 million years ago. Do you accept this claim? Why or why not? To what extent are the hydrospheric, atmospheric, and biospheric conditions comparable to those 250 million years ago?

Possible Reflective Meta-Assignments
– How did you arrive at your response/solution?
– How did you define the task/problem, decide which principles and concepts to apply, develop alternative approaches and solutions, and assess their feasibility, trade-offs, and relative worth?
– How did you conduct your design/problem-solving/research process (steps taken, strategies used, problems encountered, how overcome)?
– What skills did you use or improve, and when will they be useful in the future?

– Evaluate your strategies, performance, and success in achieving your goals.
– What goals and strategies will guide your revision (if applicable)?
– What learning value did this task have?
– What would you do differently?
– What part of the learning experience challenged what you thought about the subject? Did you find yourself resisting it? If so, how did you overcome your resistance?
– What advice would you give next semester’s students before they do this assignment (preparation, strategies, pitfalls, value)?

Think of a relevant, real-world problem or situation for your students to solve or resolve. Choose an appropriate reflective meta-assignment (assignment “wrapper”) to raise your students’ awareness of their thinking while solving or resolving it.

To Assess CT Questions and Tasks
Analytical Rubric: an assessment/grading tool that lays out specific expectations for a piece of work and describes each level of performance quality on the selected assessment criteria/skills.

For Rubrics, Accept That:
– You can’t assess/grade student work on every criterion/skill you can think of.
– Students can’t work on improving their performance on every criterion/skill. They don’t even know what those criteria/skills are.
– You must choose just a few criteria/skills.

Step 1: Choose CT Criteria Based on Your Outcomes. What CT skills/outcomes are most important for students to demonstrate in a given assignment or essay? What CT skills/outcomes is it supposed to assess?

Step 2: Define Levels and Their Values.
– Number or range of points for each level
– Grades (A, B, C, etc. or 4.0, 3.7, 3.3, etc.)
– Descriptive levels (e.g., high, average, low mastery; exemplary, competent, developing, unacceptable)
– Combination

Step 3: Describe the Performance for Each Level on Each Criterion. Usually in a table in sentences, phrases, or lists; “all or most” alternative. Write out descriptions of each level of performance on each assessment criterion.

Look to CT VALUE Rubric for ways to phrase descriptions. (See last page of Supplementary Material for locations of rubric and phraseology alternatives.) Connect descriptions to CT VALUE Rubric to yield artifacts for institutional assessment.

Step 4: Use Rubric to Teach. Distribute and explain your rubric to students as part of assignment or essay test instructions. Teach analysis and evaluation: Best to have students in groups use rubric to grade models of varying quality.

Step 5: Use Rubric to Assess. Have students submit rubric with their work. Mark relevant descriptors on rubric and write comments on work, as time permits. Demand any grade challenges in writing with justifications within a tight time limit.